Machine vision is a key technology in modern robotics, providing real-time visual data that enables robots to perceive, interpret, and interact with their surroundings. By combining imaging hardware, computer vision algorithms, and machine learning models, machine vision lets robots perform tasks with more accuracy and adaptability than traditional fixed automation.

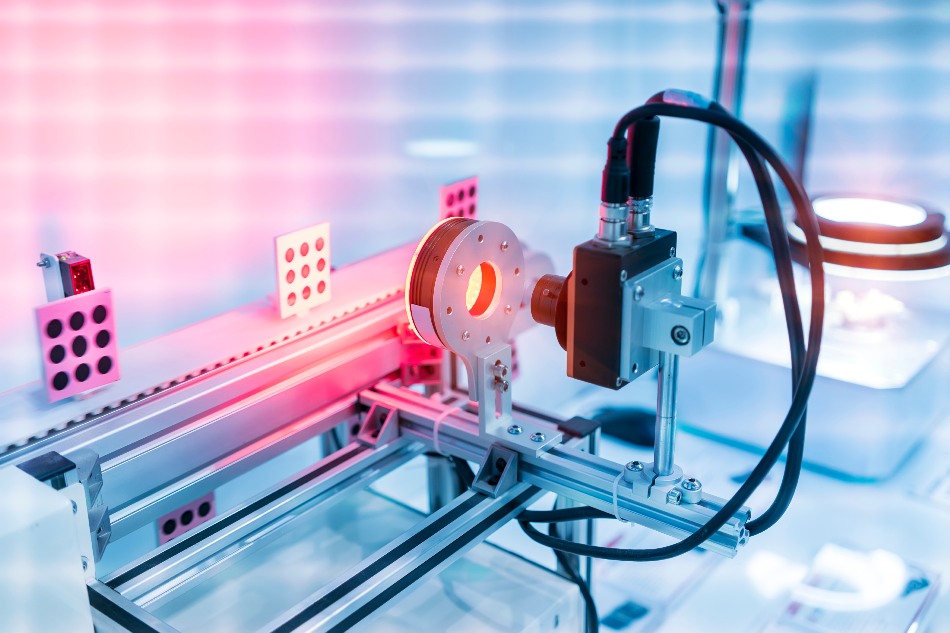

Image Credit: asharkyu/Shutterstock.com

In industrial settings, machine vision improves inspection, assembly, and quality control, with reported defect detection rates as high as 99.9% in some manufacturing processes. As of 2025, the technology is expected to keep expanding into increasingly complex and unstructured environments.

Before turning to industrial applications, it helps to look at what machine vision actually is and how it affects robotic performance.

What is Machine Vision?

Machine vision systems combine high-resolution optical sensors, image processing, and artificial intelligence to interpret visual data. Unlike traditional sensor-based automation, these systems don’t just detect objects; they analyze patterns, recognize fine details, and estimate spatial relationships in real time. This allows robots to adapt to changing environments, handle complex tasks, and operate with precision down to the sub-millimeter level.

One of the biggest challenges in machine vision is reconstructing three-dimensional (3D) structures from two-dimensional (2D) images. Since multiple real-world objects can appear identical in a 2D projection, robots need advanced imaging techniques to extract depth and shape information. Several key approaches address this issue:

- Stereo Vision uses two cameras to capture images from slightly different angles, allowing robots to calculate depth with accuracies as fine as 0.1 mm. This technique helps with autonomous navigation and precise object placement.

- Structured Light projects a known pattern onto a surface and measures distortions to map object shapes with up to 10-micrometer precision. It’s widely used in industrial inspection and 3D printing quality control.

- Time-of-Flight (ToF) Sensors measure how long light takes to bounce back from an object, creating real-time 3D maps at refresh rates up to 120 Hz. This enables robots to detect obstacles and track objects dynamically.

- Photogrammetry stitches together multiple images from different viewpoints to build detailed 3D reconstructions, achieving sub-millimeter accuracy. AI-driven photogrammetry has also made processing up to 60 % faster than traditional methods.

- Hyperspectral Imaging captures a wide range of wavelengths beyond the visible spectrum, allowing robots to identify materials, analyze chemical compositions, and assess crop health with unparalleled precision.
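
Two of the techniques above reduce to short formulas, sketched here in Python. For a rectified stereo pair, depth follows from the pixel disparity as Z = f · B / d. For a time-of-flight sensor, distance is half the round-trip time multiplied by the speed of light. The function names and the example numbers are illustrative assumptions, not values from any particular camera.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def stereo_depth_m(focal_length_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth of a point seen by a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


def tof_distance_m(round_trip_time_s: float) -> float:
    """Distance from a time-of-flight reading: d = c * t / 2."""
    if round_trip_time_s < 0:
        raise ValueError("round-trip time cannot be negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


# Hypothetical readings: 800 px focal length, 10 cm baseline, 40 px disparity.
print(stereo_depth_m(800.0, 0.10, 40.0))  # roughly 2 m
# Hypothetical 10 ns round trip.
print(tof_distance_m(10e-9))              # roughly 1.5 m
```

Because depth is inversely proportional to disparity, a fixed matching error in pixels costs more accuracy the farther away the object is, which is one reason stereo rigs for fine placement work at close range.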

For even greater reliability, machine vision often works alongside other sensing technologies such as LiDAR, quantum-enhanced inertial measurement units (IMUs), and advanced haptic sensors. By combining multiple data sources, robots gain a more comprehensive understanding of their surroundings, even in challenging conditions where lighting, occlusions, or reflective surfaces could interfere with purely optical systems.
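
As a rough illustration of combining data sources, the sketch below fuses two independent range estimates by inverse-variance weighting, so the less noisy sensor counts for more. This is one standard textbook approach and an assumption on our part; the article does not specify which fusion method a given robot uses, and the readings shown are hypothetical.

```python
def fuse_estimates(measurements):
    """Fuse independent (value, variance) pairs by inverse-variance weighting.

    Returns (fused_value, fused_variance).
    """
    if not measurements:
        raise ValueError("need at least one measurement")
    weights = []
    for _, variance in measurements:
        if variance <= 0:
            raise ValueError("variance must be positive")
        weights.append(1.0 / variance)
    total_weight = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, measurements)) / total_weight
    return fused_value, 1.0 / total_weight


# Hypothetical readings of the same obstacle, in meters:
camera = (2.10, 0.04)  # noisier estimate from a vision system
lidar = (2.00, 0.01)   # tighter estimate from LiDAR
value, variance = fuse_estimates([camera, lidar])
print(value, variance)
```

The fused variance is smaller than either input variance, which is the formal version of the point above: several imperfect sensors together can be more reliable than any one of them alone.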

Key Applications of Machine Vision in Robotics

1. Automated Inspection and Quality Control

One of the most established applications of machine vision in robotics is automated inspection and quality control. In high-speed manufacturing, even minor defects can lead to significant production losses, making accurate, real-time inspection critical. Machine vision robotic systems use high-resolution cameras, structured lighting, and deep learning algorithms to detect surface defects, measure dimensions, and verify labeling with far greater precision than human inspectors.

For instance, in semiconductor fabrication, machine vision inspects wafer surfaces for microscopic imperfections, ensuring that faulty chips are identified before assembly. In automotive manufacturing, robots equipped with vision systems scan metal surfaces for scratches, dents, or welding inconsistencies. Unlike traditional inspection methods, which rely on fixed measurements, machine vision systems can adapt to variations in lighting, surface texture, and object positioning, making them highly flexible for different production environments.

Beyond defect detection, machine vision plays a crucial role in geometric verification. Using structured light or laser triangulation, robots measure components to ensure they fall within specified tolerances. When combined with AI-driven pattern recognition, these systems can classify defects, predict failure trends, and optimize manufacturing processes based on historical data. As machine vision continues to evolve, its role in predictive maintenance and real-time process optimization will further increase, reducing waste and improving production efficiency.
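
The tolerance check at the heart of geometric verification can be sketched in a few lines. The feature names, nominal values, and tolerances below are hypothetical; in a real cell the measurements would come from the structured light or laser triangulation system.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Tolerance:
    nominal_mm: float
    plus_mm: float
    minus_mm: float

    def accepts(self, measured_mm: float) -> bool:
        """True if the measurement lies within [nominal - minus, nominal + plus]."""
        return self.nominal_mm - self.minus_mm <= measured_mm <= self.nominal_mm + self.plus_mm


def inspect_part(measurements: dict[str, float], spec: dict[str, Tolerance]) -> dict[str, bool]:
    """Check every feature in the spec; a missing measurement counts as a failure."""
    return {
        name: name in measurements and tolerance.accepts(measurements[name])
        for name, tolerance in spec.items()
    }


# Hypothetical specification and one measured part.
spec = {
    "bore_diameter": Tolerance(nominal_mm=10.0, plus_mm=0.05, minus_mm=0.05),
    "length": Tolerance(nominal_mm=50.0, plus_mm=0.10, minus_mm=0.10),
}
result = inspect_part({"bore_diameter": 10.03, "length": 50.25}, spec)
print(result)
```

Logging the per-feature results over time, rather than only a pass or fail flag, is what makes the trend analysis described above possible.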

2. Object Identification and Classification

Beyond inspection, machine vision enables robots to identify and classify objects with high accuracy. This capability is critical in industries where automated sorting, inventory management, and material handling are essential. Unlike barcode scanning or RFID tracking, which rely on pre-labeled identifiers, vision-based recognition allows robots to differentiate objects based on intrinsic characteristics such as shape, texture, and color.
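
To make the idea of classifying by intrinsic characteristics concrete, here is a minimal nearest-centroid classifier over hand-picked features. It is a deliberately simple stand-in for the deep learning models used in practice, and the two features (aspect ratio and mean hue) and all sample values are hypothetical.

```python
import numpy as np


def fit_centroids(features, labels):
    """Compute the mean feature vector for each class label."""
    features = np.asarray(features, dtype=float)
    centroids = {}
    for label in sorted(set(labels)):
        mask = np.array([item == label for item in labels])
        centroids[label] = features[mask].mean(axis=0)
    return centroids


def classify(feature, centroids):
    """Return the label whose centroid is closest in Euclidean distance."""
    feature = np.asarray(feature, dtype=float)
    return min(centroids, key=lambda label: np.linalg.norm(feature - centroids[label]))


# Hypothetical training samples: [aspect_ratio, mean_hue]
train_features = [[4.0, 0.10], [4.4, 0.12], [1.0, 0.55], [1.1, 0.60]]
train_labels = ["bolt", "bolt", "washer", "washer"]
centroids = fit_centroids(train_features, train_labels)
print(classify([3.8, 0.15], centroids))
```

In a real system the features would need to be scaled to comparable ranges first; otherwise the feature with the largest numeric spread dominates the distance.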

In logistics and warehousing, machine vision-equipped robots are transforming order fulfillment. Autonomous mobile robots (AMRs) navigate through warehouse aisles, identifying products based on visual cues and retrieving them for shipment. Similarly, in e-commerce distribution centers, conveyor-based vision systems classify packages by size and destination, reducing human intervention and increasing efficiency.

The ability to identify objects in real time is also crucial in robotic bin-picking applications, where parts may be randomly arranged. Using depth cameras and AI-driven vision models, robots can analyze cluttered scenes, recognize target objects, and adjust their grasping approach accordingly. This technology is particularly valuable in automotive and electronics manufacturing, where components vary in shape and orientation.

3. Navigation and Autonomous Movement

While object recognition helps robots interact with individual items, navigation requires a broader understanding of the surrounding environment. Machine vision plays a central role in enabling robots to move autonomously, whether in structured factory settings or unstructured outdoor environments.

For mobile robots, vision-based navigation is often combined with Simultaneous Localization and Mapping (SLAM) techniques. Using stereo cameras or LiDAR sensors, robots construct a real-time map of their environment while determining their position within it. Unlike GPS, which is limited indoors, visual SLAM allows robots to navigate accurately in warehouses, hospitals, and other enclosed spaces.

In dynamic settings such as construction sites or agricultural fields, robots must adjust to unpredictable obstacles. Optical flow analysis, which detects movement patterns from sequential images, helps robots estimate motion direction and speed. Combined with deep learning-based obstacle detection, this allows robots to react in real time, preventing collisions and planning efficient paths.
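
The core of optical flow can be sketched with a single-window Lucas-Kanade estimate. It assumes brightness stays constant between frames and that the motion is small, then solves a least-squares problem for one (dx, dy) shift over the whole patch. This is a teaching sketch using NumPy only; production systems typically use pyramidal or dense variants from a vision library.

```python
import numpy as np


def lucas_kanade_flow(prev_frame, next_frame):
    """Estimate one (dx, dy) shift, in pixels per frame, for the whole patch."""
    prev = np.asarray(prev_frame, dtype=float)
    nxt = np.asarray(next_frame, dtype=float)
    # Spatial gradients of the averaged frame; np.gradient returns (d/drow, d/dcol).
    grad_y, grad_x = np.gradient((prev + nxt) / 2.0)
    # Temporal difference between the two frames.
    grad_t = nxt - prev
    # Brightness constancy: grad_x * dx + grad_y * dy = -grad_t, solved in least squares.
    a = np.stack([grad_x.ravel(), grad_y.ravel()], axis=1)
    b = -grad_t.ravel()
    flow, *_ = np.linalg.lstsq(a, b, rcond=None)
    return float(flow[0]), float(flow[1])


# Synthetic test pattern: a smooth blob that moves half a pixel to the right.
rows, cols = np.mgrid[0:64, 0:64]


def blob(center_x, center_y, sigma=8.0):
    return np.exp(-((cols - center_x) ** 2 + (rows - center_y) ** 2) / (2 * sigma**2))


dx, dy = lucas_kanade_flow(blob(32.0, 32.0), blob(32.5, 32.0))
print(dx, dy)  # dx should come out close to 0.5 and dy close to 0
```

Dividing the estimated shift by the frame interval gives image-plane speed; converting that to real-world speed additionally needs depth, which is why flow is often paired with the 3D techniques described earlier.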

Machine vision is also crucial in autonomous vehicles, where real-time scene understanding is required for safe operation. By fusing data from multiple cameras, LiDAR, and radar, self-driving systems can recognize traffic signs, detect pedestrians, and adapt to road conditions. As vision algorithms become more sophisticated, robotic navigation will continue to expand into new domains, from smart factories to last-mile delivery systems.

4. Quality Control and Precision Inspection

Machine vision is widely used in quality control, where its ability to inspect and identify products ensures manufacturing consistency and reliability. By integrating high-resolution imaging and AI-driven defect detection, robots can analyze surface finish, dimensions, and labeling accuracy with greater precision than human inspectors. These systems are applied across industries to assess products as varied as turbine blades, semiconductor wafers, auto-body panels, and food items like pizza and fresh produce.

Unlike traditional inspection methods, which rely on manual checks, vision-based systems operate continuously at high speeds, minimizing defects and improving production efficiency. Additionally, machine vision contributes to predictive maintenance by detecting subtle defects that could indicate equipment wear, allowing manufacturers to address potential failures before they disrupt production.

5. Position Detection and Robotic Assembly

Machine vision also improves robotic assembly by enabling precise component placement. In high-precision manufacturing environments, robots rely on vision-based position detection to align parts accurately during assembly.

Pick-and-place machines, for instance, use real-time imaging to identify electronic components and position them on printed circuit boards with sub-millimeter accuracy. By continuously analyzing position and orientation, these systems can make micro-adjustments, ensuring alignment even when components shift slightly.
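
One simple way to recover a component's position and orientation from an image is through image moments of its binary silhouette: the centroid gives position, and the second-order central moments give the angle of the principal axis. The sketch below assumes the component has already been segmented into a mask; the function names and the example mask are hypothetical.

```python
import math

import numpy as np


def part_pose(mask):
    """Return (centroid_x, centroid_y, angle_rad) of the foreground pixels in a binary mask.

    The angle is that of the principal axis, measured from the image x-axis
    (image y points down), and lies in (-pi/2, pi/2].
    """
    ys, xs = np.nonzero(np.asarray(mask))
    if xs.size == 0:
        raise ValueError("mask contains no foreground pixels")
    cx, cy = xs.mean(), ys.mean()
    # Second-order central moments of the silhouette.
    mu20 = ((xs - cx) ** 2).mean()
    mu02 = ((ys - cy) ** 2).mean()
    mu11 = ((xs - cx) * (ys - cy)).mean()
    angle = 0.5 * math.atan2(2.0 * mu11, mu20 - mu02)
    return float(cx), float(cy), angle


def placement_offset(mask, target_x, target_y):
    """Pixel offset the placement head would need to apply to reach the target position."""
    cx, cy, _ = part_pose(mask)
    return target_x - cx, target_y - cy


# Hypothetical silhouette: a horizontal rectangular component.
mask = np.zeros((20, 30), dtype=np.uint8)
mask[8:12, 5:25] = 1
print(part_pose(mask))
print(placement_offset(mask, target_x=15.0, target_y=10.0))
```

The principal-axis angle cannot distinguish a part from the same part rotated by 180 degrees, so polarized components need an extra cue such as a pin-one marking.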

This adaptability is especially beneficial in industries where high precision is required, such as automotive assembly and medical device manufacturing. In cases where parts vary in size or shape, 3D vision techniques enable robots to recognize and adjust to these differences, further expanding the range of tasks they can perform.

6. Part Recognition and Automated Sorting

Beyond assembly, machine vision also enhances automation in sorting and material handling. Robots equipped with vision systems can differentiate objects based on features such as color, texture, and shape, making them valuable for sorting components on production lines. This capability is especially useful in industries that require rapid classification of materials, such as electronics, logistics, and pharmaceuticals.

In warehouse environments, machine vision-guided robots can efficiently locate, pick, and organize inventory without human oversight. These systems continuously refine their accuracy by leveraging machine learning algorithms, which improve object recognition over time, reducing sorting errors and optimizing workflow.

7. Transportation of Parts

Machine vision is also making robots smarter when it comes to moving things around. Instead of following fixed paths like traditional automated systems, modern robots use vision-based navigation to understand their surroundings in real time. Advanced data processing frameworks help them interpret floor patterns, detect obstacles, and adjust their routes dynamically. By continuously analyzing visual data, these robots can work out the best way to transport materials efficiently, whether in a factory, warehouse, or another fast-changing environment.
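
Dynamic rerouting can be illustrated with a breadth-first search over an occupancy grid, where cells marked 1 are obstacles reported by the vision system. When a new obstacle appears, the robot simply replans on the updated grid. The grid, coordinates, and function name are hypothetical, and real planners usually use cost-aware methods such as A* on much finer maps.

```python
from collections import deque


def shortest_route(grid, start, goal):
    """Breadth-first search on a 4-connected grid; returns a list of (row, col) or None."""
    rows, cols = len(grid), len(grid[0])
    if grid[start[0]][start[1]] or grid[goal[0]][goal[1]]:
        return None
    came_from = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk back through the predecessors to rebuild the route.
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and not grid[nr][nc] and (nr, nc) not in came_from:
                came_from[(nr, nc)] = cell
                queue.append((nr, nc))
    return None


# Hypothetical floor map: 0 = free, 1 = obstacle.
floor = [
    [0, 0, 0],
    [0, 1, 0],
    [0, 0, 0],
]
print(shortest_route(floor, (0, 0), (2, 2)))

# The camera reports a pallet blocking cell (1, 2); replan on the updated map.
floor[1][2] = 1
print(shortest_route(floor, (0, 0), (2, 2)))
```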

As machine vision keeps improving, robots are getting even better at combining visual input with other sensors like LiDAR and depth cameras, making their navigation smoother and more adaptable to unpredictable spaces.

ABB's YuMi Cobot: A Machine Vision Case Study

Back in 2015, ABB introduced YuMi®, which it billed as the world’s first truly collaborative dual-arm robot, designed to work safely alongside human operators without the need for physical barriers. This marked a significant shift in industrial automation, enabling human-robot collaboration in environments where flexibility and precision are key.

Paired with 3D vision, YuMi® can pick and place a wide range of products for various service applications. It handles tasks such as bin picking, order picking, kitting, and assembly, and can take products from bins, buffer tables, trays, conveyor belts, pallets, and shelves.

One of its standout features is its integration with ABB’s 3D quality inspection (3DQI) technology. According to ABB, this system can spot defects smaller than half the width of a human hair, flaws that would be invisible to the naked eye. With this level of accuracy, YuMi® doesn’t just speed up production; it helps ensure that every component meets exacting quality standards, making metrology faster and more efficient.

By automating inspections with 3DQI, YuMi® also removes the need for manual quality checks, significantly cutting down on human error and the risk of faulty products slipping through. All in all, this results in increased productivity, lower costs, and a reduced likelihood of product recalls. With a precision level below 100 micrometers and the flexibility to adapt to specific needs, YuMi® gives businesses a powerful, customizable solution that improves both efficiency and reliability.

ABB’s YuMi® cobot is an excellent demonstration of how machine vision is transforming industrial automation. Combining collaborative robotics with cutting-edge 3D vision technology has not only improved precision and quality control but has also driven cost savings and operational efficiency. As industries continue to adopt smart manufacturing solutions, YuMi® serves as a prime example of how vision-enabled robotics can bridge the gap between automation and human expertise.

Disclaimer: The views expressed here are those of the author expressed in their private capacity and do not necessarily represent the views of AZoM.com Limited T/A AZoNetwork the owner and operator of this website. This disclaimer forms part of the Terms and conditions of use of this website.