Nov 26 2015

Research into pub communication with robot James continues at Bielefeld University

A robotic bartender has to do something unusual for a machine: it has to learn to ignore some data and focus on social signals. Researchers at the Cluster of Excellence Cognitive Interaction Technology (CITEC) of Bielefeld University investigated how a robotic bartender can understand human communication and serve drinks in a socially appropriate way. For their new study, they invited participants into the lab and asked them to put themselves in the shoes of the robotic bartender. The participants looked through the robot’s eyes and ears and selected actions from its repertoire. The results have now been published in the open-access research journal “Frontiers in Psychology”.

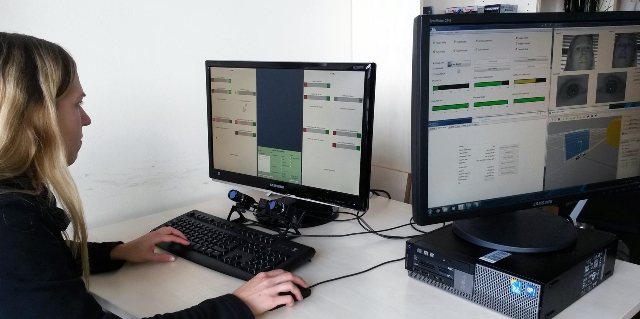

Test persons put themselves in the role of bartender robot James at the computer. Photo: CITEC/Bielefeld University


In a cooperative project funded by the EU, researchers from Bielefeld, Edinburgh (UK), Crete (Greece) and Munich are developing a robotic bartender called James. Bielefeld University is involved through Jan de Ruiter’s Psycholinguistics Research Group. “We teach James how to recognise whether a customer wishes to place an order,” says Jan de Ruiter. A robot does not automatically recognise which behaviour indicates that a customer near the bar wishes to be served. Is it the customer’s proximity to the bar? The angle at which the customer is turned towards the bar? Or is it more important whether the customer speaks? The robot perceives a list of details (e.g. “near to bar: no”, “speaks: no”) that is updated as soon as something changes (“near to bar: yes”, “speaks: yes”). Every piece of information is processed independently and treated as equally important. To understand its customers, the robot has to make sense of these piecemeal details.

“We asked ourselves how a human bartender solves the problem and whether a robotic bartender can use similar strategies,” says Jan de Ruiter. The participants in the experiment were asked to put themselves into the mind of a robotic bartender. They sat in front of a computer screen showing an overview of the robot’s data: visibility of the customer, position at the bar, position of the face, angle of the body and angle of the face relative to the robot. These data were recorded during a trial session with the bartending robot James at its own mock bar in Munich. For the trial, customers were asked to order a drink from James and to rate their experience afterwards. In the Bielefeld lab, the participants saw on screen what the robot had recognised at the time. For example, they were shown whether customers said something (‘I would like a glass of water, please’) and how confident the robot’s speech recognition had been. The participants observed two customers at a time. “We designed the study as a role-playing game so that it was approachable. The participants really got into it despite having to rely only on the robot’s data, without any video. The design was important because we wanted the participants to act intuitively rather than deliberate over their actions,” says Jan de Ruiter. The customers’ behaviour was presented in turns, that is, step by step. In each turn, the participants had to decide what they would do as a (robotic) bartender by selecting an action from the robot’s repertoire. De Ruiter explains: “This is similar to selecting an action from a character’s special abilities in a computer game. For example, they could ask which drink the customer would like (‘What would you like to drink?’), turn the robot’s head towards the customer, serve a drink, or just do nothing.” In the next turn, the participants observed the customer’s reaction, selected another action, and so on.
This interaction continued until either a drink was served or the interaction was terminated. The participants did not interact with real customers but with recordings of customer behaviour. “The participants were immersed in the situation and really tried to interpret the sensor data. Ultimately, they tried to be friendly and to behave in a socially appropriate way towards their customers.”

“We validated the new data against earlier findings: customers wish to place an order if they stand near the bar and look at the bartender. Whether they speak is irrelevant,” says Sebastian Loth, co-author of the study. “Thus, we are confident that our new results are reliable. Our new study focussed on the bartenders’ actions. For example, the participants did not speak to their customers immediately; instead, they turned the robot towards the customers and looked at them. This eye contact is a visual handshake. It opens a channel so that both parties can speak. You could think of this as briefly checking that both parties are on the phone and listening before they start chatting,” says Loth.
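The finding Loth describes can be expressed as a simple decision rule. The Python sketch here is purely illustrative and is not the code used by the CITEC team or by James: the `CustomerState` fields, the `wants_to_order` and `next_action` functions and the action names are all hypothetical. It assumes the robot’s perception has already been reduced to three yes/no details, ignores speech when judging whether someone wants to order, and opens the “visual handshake” by turning towards the customer before saying anything.

```python
from dataclasses import dataclass


@dataclass
class CustomerState:
    """Hypothetical snapshot of the robot's yes/no details for one customer."""
    near_bar: bool
    looking_at_bartender: bool
    speaks: bool  # recorded, but deliberately not used to judge intent


def wants_to_order(state: CustomerState) -> bool:
    # As reported in the study: standing near the bar and looking at the
    # bartender signals an intent to order; speaking is irrelevant.
    return state.near_bar and state.looking_at_bartender


def next_action(state: CustomerState, facing_customer: bool) -> str:
    """Choose a (hypothetical) action, making eye contact before speaking."""
    if not wants_to_order(state):
        return "do_nothing"
    if not facing_customer:
        # Open the "visual handshake" first, as the participants did.
        return "turn_towards_customer"
    # Channel is open: now it is appropriate to speak.
    return "ask_for_drink"


customer = CustomerState(near_bar=True, looking_at_bartender=True, speaks=False)
print(next_action(customer, facing_customer=False))  # turn_towards_customer
print(next_action(customer, facing_customer=True))   # ask_for_drink
```

Keeping `speaks` in the state but leaving it out of `wants_to_order` mirrors the point that the robot receives this detail yet should not let it drive the decision.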

Once it is established that the customer wishes to place an order, body language becomes less important. “At this point, the participants focussed on what the customer said,” says Loth. For example, if the camera lost track of the customer and the robot believed the customer was “not visible”, the participants ignored this visual information. They continued speaking, served the drink or asked the customer to repeat the order. “That means that a robotic bartender should sometimes ignore data.” However, the real robot did the opposite during the trials. “If a customer was not visible, the robot assumed that it could not serve a drink or speak into thin air. So it waited for the cameras and restarted the entire process as if the customer had just arrived at the bar,” says Loth. “Our participants were quicker and more efficient than the robot because they were not confused by technical glitches; they intuitively focussed on the important information.” This revealed which information matters at each stage of the interaction. The researchers used these insights to design a strategy for their robotic bartender James. Loth says: “This is applicable to all service robots and many other applications.”
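The contrast between the trial robot and the participants can likewise be sketched as two policies. This is again a hypothetical illustration, not the strategy the researchers actually designed for James: the `Observation` fields, both policy functions and the action names are assumptions. The naive policy restarts whenever the customer is not visible; the staged policy uses body language only until the customer is engaged and afterwards relies on what the customer says.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Observation:
    """Hypothetical per-turn sensor update for one customer."""
    visible: bool
    near_bar: bool
    looking_at_bartender: bool
    speech: Optional[str]  # recognised utterance, or None if nothing was heard


def naive_policy(obs: Observation, engaged: bool) -> str:
    # Like the trial robot as described: losing sight of the customer
    # restarts the whole interaction, whatever stage it had reached.
    if not obs.visible:
        return "wait_and_restart"
    if not engaged:
        if obs.near_bar and obs.looking_at_bartender:
            return "turn_towards_customer"
        return "do_nothing"
    return "serve_drink" if obs.speech else "ask_to_repeat"


def staged_policy(obs: Observation, engaged: bool) -> str:
    # Like the study participants: body language matters only until the
    # customer is engaged; afterwards visual data is ignored.
    if not engaged:
        if obs.visible and obs.near_bar and obs.looking_at_bartender:
            return "turn_towards_customer"
        return "do_nothing"
    # Engaged: rely on speech. Simplification: any recognised utterance is
    # treated as a complete order.
    return "serve_drink" if obs.speech else "ask_to_repeat"


# A camera glitch mid-order: the customer is engaged but reported "not visible".
glitch = Observation(visible=False, near_bar=True, looking_at_bartender=False,
                     speech="I would like a glass of water, please")
print(naive_policy(glitch, engaged=True))   # wait_and_restart
print(staged_policy(glitch, engaged=True))  # serve_drink
```

In this sketch the `engaged` flag stands in for whatever bookkeeping a real system would use to remember that the visual handshake has already taken place.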