Dec 14 2020

A new study shows that robots can encourage humans to take greater risks in a simulated gambling scenario than they would if nothing were influencing their behavior.

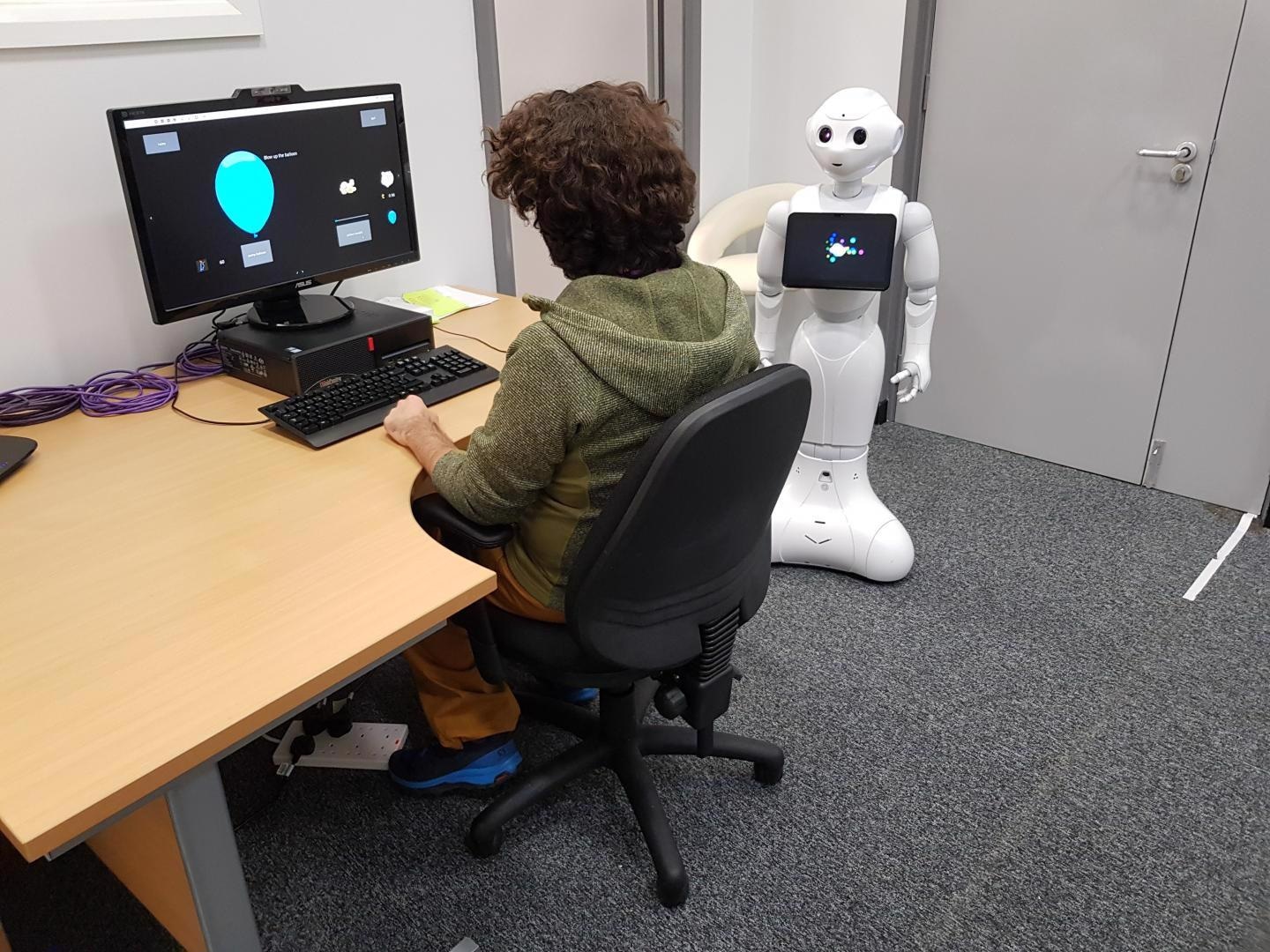

A SoftBank Robotics Pepper robot was used in the two robot conditions. Image Credit: University of Southampton.

Improving the understanding of whether robots can influence risk-taking could have distinct practical, ethical and policy implications, which this study aimed to explore.

We know that peer pressure can lead to higher risk-taking behaviour. With the ever-increasing scale of interaction between humans and technology, both online and physically, it is crucial that we understand more about whether machines can have a similar impact.

Dr Yaniv Hanoch, Study Lead and Associate Professor in Risk Management, University of Southampton

Published in the journal Cyberpsychology, Behavior, and Social Networking, the new study involved 180 undergraduate students taking the Balloon Analog Risk Task (BART), a computer-based assessment that asks participants to press the spacebar on a keyboard to inflate a balloon shown on the screen. Each time the spacebar is pressed, the balloon inflates slightly and 1 penny is added to the player’s “temporary money bank.”

The balloons can burst at random, in which case the player loses the money earned on that balloon. Players can choose to “cash in” before this happens and move on to the next balloon.
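The rules of the task described above can be sketched in a few lines of Python. This is only an illustrative simulation, not the software used in the study. The article does not say how the burst point is chosen or how many balloons each participant played, so the uniform burst rule, the `max_pumps` limit, the default of 30 balloons, and the function names `play_balloon` and `play_session` are all assumptions made for this example.

```python
import random


def play_balloon(target_pumps, max_pumps=128, rng=random):
    """Return the pennies banked for one balloon.

    The player plans to pump `target_pumps` times and then cash in.
    ASSUMPTION: the burst point is drawn uniformly from 1..max_pumps;
    the article only says that balloons burst randomly.
    """
    burst_at = rng.randint(1, max_pumps)
    if target_pumps >= burst_at:
        return 0  # balloon burst: the money for this balloon is lost
    return target_pumps  # 1 penny per pump, safely cashed in


def play_session(target_pumps, balloons=30, max_pumps=128, seed=0):
    """Total pennies earned over several balloons (counts are hypothetical)."""
    rng = random.Random(seed)
    return sum(play_balloon(target_pumps, max_pumps, rng) for _ in range(balloons))


if __name__ == "__main__":
    # Compare a cautious player with a bolder one under the assumed rules.
    for pumps in (10, 40, 64):
        print(pumps, "pumps per balloon ->", play_session(pumps), "pence")
```

Under these assumed rules, pumping more raises the payout per surviving balloon but also the chance of losing it, which is the trade-off the task is designed to measure.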

One-third of the participants took the test alone in a room (the control group). One-third took it alongside a robot that gave them the instructions but was otherwise silent. The remaining third, the experimental group, took the test with a robot that gave the instructions and also made encouraging statements such as “why did you stop pumping?”

The results showed that the group encouraged by the robot took more risks, pumping up their balloons considerably more often than those in the other groups. They also earned more money overall. There was no notable difference between the behavior of the students accompanied by the silent robot and that of the students with no robot.

We saw participants in the control condition scale back their risk-taking behaviour following a balloon explosion, whereas those in the experimental condition continued to take as much risk as before. So, receiving direct encouragement from a risk-promoting robot seemed to override participants’ direct experiences and instincts.

Dr Yaniv Hanoch, Study Lead and Associate Professor in Risk Management, University of Southampton

The researchers now believe that further studies are needed to determine whether similar results would emerge from human interaction with other artificial intelligence (AI) systems, such as on-screen avatars or digital assistants.

According to Dr Hanoch, “With the wide spread of AI technology and its interactions with humans, this is an area that needs urgent attention from the research community.”

On the one hand, our results might raise alarms about the prospect of robots causing harm by increasing risky behavior. On the other hand, our data points to the possibility of using robots, and AI, in preventive programs such as anti-smoking campaigns in schools, and with hard to reach populations, such as addicts.

Dr Yaniv Hanoch, Study Lead and Associate Professor in Risk Management, University of Southampton

Journal Reference

Hanoch, Y., et al. (2020) The Robot Made Me Do It: Human–Robot Interaction and Risk-Taking Behavior. Cyberpsychology, Behavior, and Social Networking. doi.org/10.1089/cyber.2020.0148.