Sep 24 2019

In a new study, Army researchers are unraveling what it takes for humans to trust robots, which would enable humans and robots to work together.

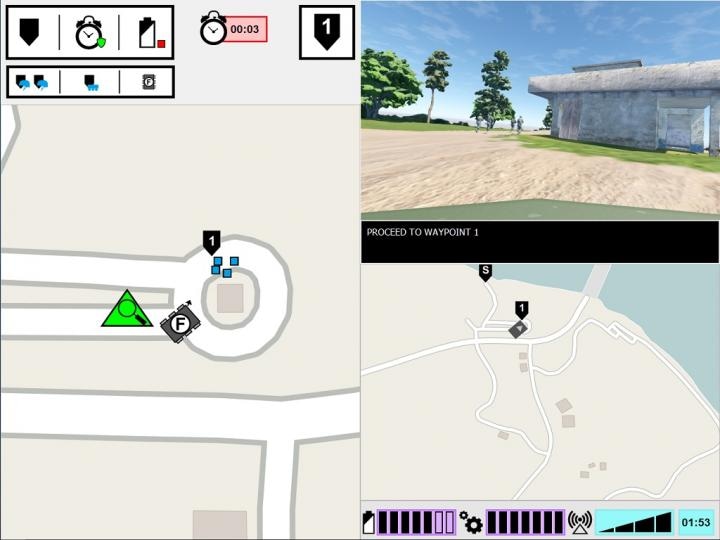

ASM experimental interface. The left-side monitor displays the lead Soldier’s point of view of the task environment. The right-side monitor displays the ASM’s communication interface. (Image credit: U.S. Army)


Studies of human-agent teaming (HAT) have investigated how the transparency of agents (for example, unmanned vehicles, robots, or software agents) affects task performance, workload, and human trust in and perceptions of the agent. An agent's transparency is its ability to convey its reasoning process, intent, and future plans to humans.

A new study led by the U.S. Army has found that human confidence in a robot dips when the robot makes a mistake, even if it is transparent about its reasoning process. The paper, titled “Agent Transparency and Reliability in Human-Robot Interaction: The Influence on User Confidence and Perceived Reliability,” has been published in the August issue of IEEE Transactions on Human-Machine Systems.

Until now, studies have mainly focused on HAT with perfectly reliable intelligent agents, that is, agents that do not make mistakes. This study is one of the few to investigate how the transparency of agents interacts with their reliability.

In this new research, humans observed a robot making a mistake. The researchers wanted to know whether the humans perceived the robot as less reliable, even when they were given insight into its reasoning process.

“Understanding how the robot’s behavior influences their human teammates is crucial to the development of effective human-robot teams, as well as the design of interfaces and communication methods between team members,” stated Dr Julia Wright, principal investigator for this project and researcher at U.S. Army Combat Capabilities Development Command’s Army Research Laboratory, also known as ARL.

This research contributes to the Army’s Multi-Domain Operations efforts to ensure overmatch in artificial intelligence-enabled capabilities. But it is also interdisciplinary, as its findings will inform the work of psychologists, roboticists, engineers, and system designers who are working toward facilitating better understanding between humans and autonomous agents in the effort to make autonomous teammates rather than simply tools.

Dr Julia Wright, Study Principal Investigator, Researcher, U.S. Army Combat Capabilities Development Command’s Army Research Laboratory

This study was a collaborative effort between ARL and the University of Central Florida Institute for Simulation and Training. It is the third and final study in the Autonomous Squad Member (ASM) project, funded by the Office of the Secretary of Defense’s Autonomy Research Pilot Initiative. The ASM is a small ground robot that interacts and communicates with an infantry squad.

Earlier ASM studies analyzed how a robot would communicate with a human squad member. The researchers used the Situation awareness-based Agent Transparency model as a guide to investigate and test a number of visualization techniques for conveying the agent’s intents, goals, constraints, reasoning, and predicted outcomes. Based on the findings of those early studies, an at-a-glance iconographic module was created and then used in subsequent studies to investigate the efficacy of agent transparency in HAT.

This study was performed in a simulated environment, where participants witnessed a human-agent Soldier team, including the ASM, traversing a training course. The task of the participants was to track the team and assess the robot. Along the course, the Soldier-robot team came across a number of events and responded accordingly.

Although the Soldiers always responded correctly to the event, at times, the robot misinterpreted the situation, resulting in incorrect actions. The amount of data shared by the robot varied from one trial to another.

The robot always explained its actions, the reasons for them, and their predicted outcome. In certain trials, however, it also gave an account of the reasoning behind its decisions, that is, its underlying logic. Participants observed several Soldier-robot teams, and their evaluations of the robots were compared.

The study found that, regardless of how transparent the robot was in explaining its reasoning, its reliability was the deciding factor in participants’ predictions of its future reliability, their trust in the robot, and their perceptions of it. In other words, once participants observed an error, they continued to rate the robot’s reliability lower, even though it made no further errors.

Although these assessments gradually improved over time as long as the robot made no further errors, participants’ confidence in their own evaluations of the robot’s reliability remained lower for the rest of the trials than that of participants who never observed an error. Moreover, participants who observed a robot error reported lower trust in the robot than those who never observed one.

The study also found that greater agent transparency improved participants’ trust in the robot, but only when the robot was gathering or filtering information. According to Wright, this could imply that sharing detailed information can mitigate some of the effects of unreliable automation for particular tasks. Moreover, participants rated the unreliable robot as less likable, animate, safe, and intelligent than the reliable robot.

Earlier studies suggest that context matters in determining the usefulness of transparency information. We need to better understand which tasks require more in-depth understanding of the agent’s reasoning, and how to discern what that depth would entail. Future research should explore ways to deliver transparency information based on the tasking requirements.

Dr Julia Wright, Study Principal Investigator, Researcher, U.S. Army Combat Capabilities Development Command’s Army Research Laboratory