May 22 2018

Over one million Americans need everyday physical assistance to get dressed because of advanced age, disease, or injury. Robots could help, but both cloth and the human body are complex.

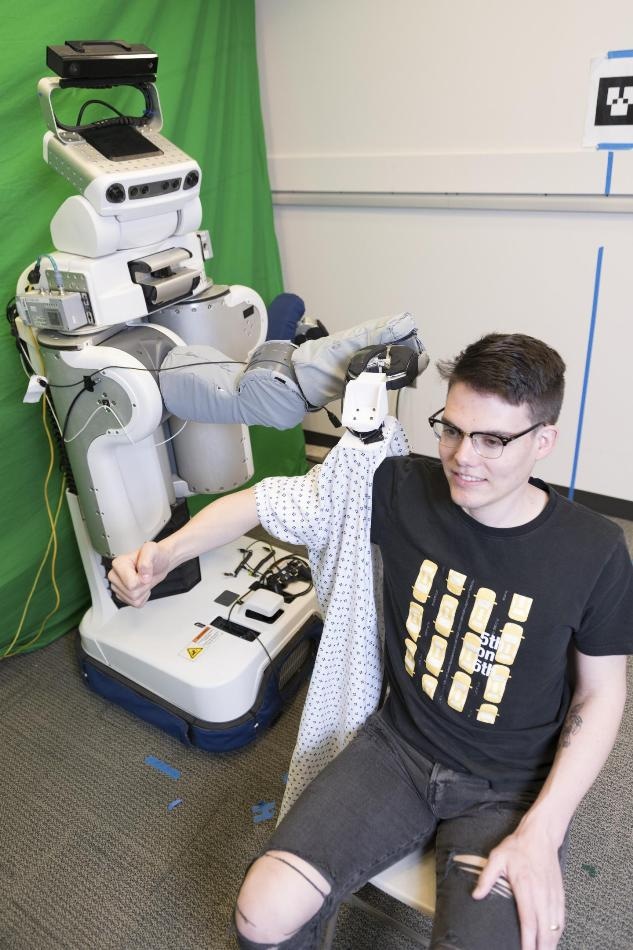

A PR2 robot puts a gown on Henry Clever, a member of the research team. (Image credit: Georgia Tech)

To help address this need, a robot developed at the Georgia Institute of Technology has successfully slid hospital gowns onto people's arms. Rather than using vision while pulling the cloth, the machine relies on the forces it feels as it guides the garment onto a person's hand, around the elbow, and onto the shoulder.

The machine, a PR2, taught itself in one day by analyzing nearly 11,000 simulated examples of a robot pulling a gown onto a person's arm. Some attempts were flawless. Others were spectacular failures: the simulated robot applied dangerous forces to the arm when the cloth caught on the person's hand or elbow.

From these examples, the PR2's neural network learned to predict the forces applied to the person. In a sense, the simulations allowed the robot to learn what the person receiving assistance experiences.

People learn new skills using trial and error. We gave the PR2 the same opportunity. Doing thousands of trials on a human would have been dangerous, let alone impossibly tedious. But in just one day, using simulations, the robot learned what a person may physically feel while getting dressed.

Zackory Erickson, Georgia Tech Ph.D. Student

The robot also learned to predict the results of moving the gown in different ways. Some motions made the gown taut, pulling hard against the person's body. Other movements slid the gown smoothly along the person's arm. The robot used these predictions to select motions that dressed the arm comfortably.
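The selection step described above can be illustrated with a toy sketch. It is not the research team's implementation: `predict_force` is a hypothetical stand-in for the learned force model, the candidate motions are made-up gripper displacements in metres, and the cost weights are arbitrary. Each candidate is scored by its predicted force on the arm minus a reward for progress along the arm, and the lowest-cost motion is chosen.

```python
"""Toy sketch: pick the gown motion with the best predicted force/progress trade-off.

Everything here is hypothetical: predict_force stands in for a learned model,
and the candidate motions are made-up gripper displacements (dx, dy, dz) in metres.
"""

# Hypothetical candidate motions of the gripper holding the gown.
CANDIDATES = [
    (0.01, 0.0, 0.0),     # straight along the arm
    (0.01, 0.0, 0.005),   # along the arm, slightly upward
    (0.01, 0.0, -0.005),  # along the arm, slightly downward
    (0.0, 0.0, 0.0),      # hold still
]


def predict_force(offset, motion):
    """Hypothetical stand-in for a learned force predictor (newtons).

    Toy assumption: `offset` is how far the arm sits above the gown opening (m),
    and force grows with the misalignment left after the vertical move dz.
    """
    dz = motion[2]
    return 2.0 + 400.0 * abs(offset - dz)  # arbitrary toy coefficients


def choose_motion(offset, force_weight=1.0, progress_weight=100.0):
    """Return the candidate minimizing predicted force minus progress along the arm."""
    def cost(motion):
        progress = motion[0]  # distance advanced along the arm
        return force_weight * predict_force(offset, motion) - progress_weight * progress
    return min(CANDIDATES, key=cost)


if __name__ == "__main__":
    print(choose_motion(0.005))  # arm slightly above the opening
    print(choose_motion(0.0))    # arm aligned with the opening
```

The progress term matters: without it, holding still would often look safest, so the robot would never finish dressing the arm.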

After succeeding in simulation, the PR2 attempted to dress people. Participants sat in front of the robot and watched as it held a gown and slid it onto their arms. Instead of using vision, the robot relied on its sense of touch, drawing on what it had learned about forces during the simulations.

“The key is that the robot is always thinking ahead,” stated Charlie Kemp, an associate professor in the Wallace H. Coulter Department of Biomedical Engineering at Georgia Tech and Emory University and the lead faculty member. “It asks itself, ‘if I pull the gown this way, will it cause more or less force on the person’s arm? What would happen if I go that way instead?’”

The team adjusted the robot's timing so that it could think about one-fifth of a second into the future while planning its next move. With any shorter look-ahead, the robot failed more often.
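Thinking a fixed time into the future is the core idea of model predictive control: simulate a few steps ahead, pick the best sequence of actions, execute only its first action, then replan. The sketch below is a hypothetical illustration, not the PR2's controller. Only the 0.2 s horizon comes from the article; the 20 Hz control rate, the toy misalignment dynamics, and the force formula are assumptions. At 20 Hz, a 0.2 s horizon corresponds to 0.2 × 20 = 4 steps.

```python
"""Toy receding-horizon (model predictive control) sketch.

Hypothetical assumptions: a 20 Hz control rate, a single scalar
"misalignment" state between arm and gown opening (m), and a made-up
force model. Only the 0.2 s look-ahead comes from the article.
"""
import itertools

CONTROL_HZ = 20                                # hypothetical controller rate (Hz)
HORIZON_S = 0.2                                # look-ahead time from the article (s)
HORIZON_STEPS = round(HORIZON_S * CONTROL_HZ)  # 0.2 s * 20 Hz = 4 steps

ACTIONS = (-0.005, 0.0, 0.005)  # hypothetical vertical gripper corrections (m)


def simulate_step(offset, action):
    """Toy model: apply a correction and return (new misalignment, predicted force in N)."""
    new_offset = offset - action
    force = 2.0 + 400.0 * abs(new_offset)  # arbitrary toy coefficients
    return new_offset, force


def plan(offset, horizon=HORIZON_STEPS):
    """Enumerate every action sequence over the horizon and return the first
    action of the sequence with the lowest total predicted force."""
    best_seq, best_cost = None, float("inf")
    for seq in itertools.product(ACTIONS, repeat=horizon):
        o, total = offset, 0.0
        for a in seq:
            o, f = simulate_step(o, a)
            total += f
        if total < best_cost:
            best_seq, best_cost = seq, total
    return best_seq[0]  # execute only the first action, then replan


if __name__ == "__main__":
    print(HORIZON_STEPS, plan(0.01))
```

Calling `plan` again at every control step, each time with the newly observed misalignment, gives the receding-horizon behavior. Brute-force enumeration grows as 3^N with the number of horizon steps, so a practical controller would typically use a learned model with sampling or optimization instead.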

The more robots can understand about us, the more they’ll be able to help us. By predicting the physical implications of their actions, robots can provide assistance that is safer, more comfortable and more effective.

Charlie Kemp, Associate Professor

At present, the robot puts the gown on only one arm, and the whole process takes 10 seconds. According to the researchers, many steps remain between this study and a robot that can fully dress a person.

Ph.D. student Henry Clever and Professors Karen Liu and Greg Turk also contributed to the study. Their paper, titled “Deep Haptic Model Predictive Control for Robot-Assisted Dressing,” will be presented at the International Conference on Robotics and Automation (ICRA) in Australia, May 21 to 25. The study is part of a larger effort on robot-assisted dressing funded by the National Science Foundation (NSF) and led by Liu.

This study was partially supported by NSF award IIS-1514258, AWS Cloud Credits for Research, and the NSF NRT Traineeship DGE-1545287.

Video: Robot Puts Hospital Gown on a Person. A PR2 robot puts a hospital gown on human arms after teaching itself how to do it.