Sep 5 2014

A professional photographer doesn’t just carry a camera. A typical shoot involves carefully arranged lighting and perhaps some assistants to move the light stands around. That is complicated enough in the studio and even more challenging on location. And if the subject is a moving target, good luck.

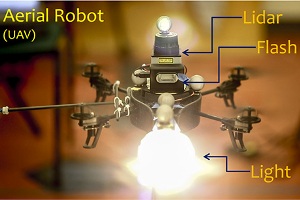

With four horizontal propellers, a quadrotor can hover motionless to light a photographic subject. A floodlight allows the photographer to preview the lighting effect, and a flash fires to take the picture.


Now, researchers at Cornell and the Massachusetts Institute of Technology (MIT) are replacing the light stands with lights mounted on intelligent drones that hover around the subject and position themselves automatically for the best effect.

“I think drones are going to play a big role in photography in the future,” said Kavita Bala, associate professor of computer science at Cornell. Her work is part of the growing discipline of “computational photography,” where the techniques of robotics and computer graphics are deployed to assist photographers in picturing the real world.

For their first test with drones, the researchers chose the particularly difficult technique of rim lighting, where a light from one side dramatically outlines a subject.

“Rim lighting is possibly one of the most challenging lighting effects to pick,” Bala said. That’s particularly true when the subject is moving, she noted. “An assistant has to keep moving the light, tracking the subject and trying to stay out of the frame.”

Computational Rim Illumination with Aerial Robots

Bala is working with Frédo Durand, professor of electrical engineering and computer science at MIT – and an accomplished photographer – and Manohar Srikanth, postdoctoral researcher in robotics at MIT. The project grew out of discussions about how to light live sporting events, Bala said. The team described their system at the International Symposium on Computational Aesthetics in Graphics, Visualization and Imaging, Aug. 8-10 in Vancouver, Canada, where they received a Best Paper Award.

Their light-carrying vehicle, which Srikanth has dubbed “litrobot,” is an off-the-shelf quadrotor, the kind proposed to deliver packages for Amazon. It carries a small floodlight for previewing the effect, a flash to be fired when the photo is taken and a laser rangefinder. For rim lighting, the vehicle hovers at one side of the subject. The rangefinder keeps it at a constant distance from the subject for safety and to ensure a constant light level. The width of the rim-lighting effect is adjusted by moving the light in an arc around the subject; the farther forward the light is placed, the wider the rim.
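Holding a constant range while swinging forward or back means the light always sits somewhere on a circle centred on the subject. The sketch below shows that geometry only. The function name `light_position`, the coordinate frame (camera on the -y side, light starting at the subject's +x side) and the angle convention are all hypothetical choices for illustration, not details from the researchers' system.

```python
import math

def light_position(subject, distance, azimuth_deg):
    """Place the light on a circle of fixed radius around the subject.

    subject: (x, y) position of the subject in metres (hypothetical frame).
    distance: range held constant by the rangefinder, in metres.
    azimuth_deg: 0 puts the light directly beside the subject; larger values
        swing it forward toward the camera (hypothetical convention), which
        per the article widens the rim.
    """
    sx, sy = subject
    a = math.radians(azimuth_deg)
    # Camera assumed to sit on the -y side of the subject, so moving "forward"
    # means decreasing y while the radius stays equal to `distance`.
    return (sx + distance * math.cos(a), sy - distance * math.sin(a))
```

Whatever angle is chosen, the returned point stays exactly `distance` metres from the subject, which mirrors the role the article gives the rangefinder.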

With the subject illuminated by the hovering floodlight, the image from the camera is fed to a computer that detects the extra bright pixels outlining the subject, computes the average width of the band of light and moves the vehicle to set the width to what the photographer has chosen. If the subject moves or changes posture, say, turning to face the camera so that more of the chest is exposed to the light, the drone will again automatically move to keep the width of the rim lighting constant.
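The loop described above (measure the rim, compare it with the photographer's chosen width, move the drone) is a feedback controller. Below is a minimal sketch assuming a greyscale image given as rows of brightness values, a fixed brightness threshold and simple proportional control on the light's angle along the arc. The threshold, the gain, the angle limits and the per-row counting of bright pixels are simplifying assumptions, not the published method.

```python
def average_rim_width(image, threshold):
    """Average width, in pixels, of the bright band in each image row.

    image: list of rows, each a list of brightness values (hypothetical input).
    threshold: brightness above which a pixel counts as rim-lit (assumed).
    Counting bright pixels per row approximates the band width when each row
    contains a single rim; rows with no bright pixels are ignored.
    """
    widths = []
    for row in image:
        count = sum(1 for v in row if v > threshold)
        if count:
            widths.append(count)
    return sum(widths) / len(widths) if widths else 0.0


def update_azimuth(azimuth_deg, measured_width, target_width,
                   gain=0.5, min_deg=0.0, max_deg=80.0):
    """One proportional-control step on the light's angle along the arc.

    A rim narrower than the target moves the light forward (larger angle);
    a wider rim moves it back. gain and the angle limits are illustrative
    values only.
    """
    error = target_width - measured_width
    new_angle = azimuth_deg + gain * error
    # Clamp so the light never swings outside an assumed safe range.
    return max(min_deg, min(max_deg, new_angle))
```

Calling these two functions on every new camera frame would keep nudging the light until the measured width matches the target, so a subject who turns toward the camera and changes the rim triggers a fresh correction automatically.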

The system includes an “aggressive control mode” to use when the subject is moving rapidly. Rather than having to wait until the quadrotor settles in its final position, the photographer signals by a half-press of the shutter button, and the camera and flash will fire automatically when the drone passes through the optimum point for the first time.
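Firing "when the drone passes through the optimum point for the first time" amounts to watching for the first moment the rim-width error reaches zero or changes sign. The sketch below assumes the measurements arrive as a simple list of widths after the half-press; the function name `first_crossing` and that input format are hypothetical.

```python
def first_crossing(widths, target):
    """Return the index of the first measurement at which the rim width
    reaches or passes the target, or None if it never does.

    widths: successive rim-width measurements taken while the drone is
    still moving (hypothetical stream, starting at the half-press).
    """
    prev_error = None
    for i, w in enumerate(widths):
        error = w - target
        if error == 0:
            return i  # exactly on target
        if prev_error is not None and (prev_error < 0) != (error < 0):
            return i  # error changed sign: the optimum was just passed
        prev_error = error
    return None
```

Triggering on the first sign change, rather than waiting for the error to stay near zero, trades some precision for speed, which is the trade-off the aggressive mode is described as making.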

Taking the shot could also fire other hovering light sources, such as key and fill lights, but “getting multiple drones to cooperate is a non-trivial problem,” Bala said.

Other future possibilities include mounting the camera on a drone, using the system for video and, eventually, getting back to the original goal of providing auxiliary lighting for outdoor events.

“People increasingly accept that drones might be flying around while you’re doing things,” Bala said.