Advances in robotics may soon allow robots to function with virtually no human intervention. A new system lets robots map their environment using Microsoft's Kinect camera.

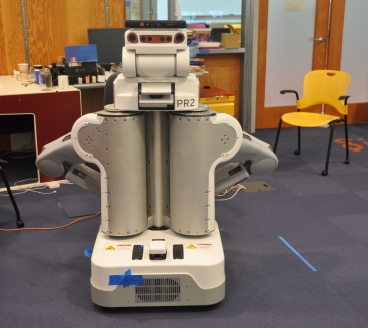

The researchers used a PR2 robot, developed by Willow Garage, with Microsoft's Kinect sensor to test their system. Image: Hordur Johannsson

Researchers at MIT's Computer Science and Artificial Intelligence Laboratory (CSAIL) developed the system, which could also help blind people move about crowded environments without assistance.

The new approach builds on the simultaneous localization and mapping (SLAM) technique and enables robots to continuously update a map as they gather data over time, says Maurice Fallon, a research scientist at CSAIL. The approach had previously been tested on robots equipped with expensive laser scanners, but the team will demonstrate it with a low-cost Kinect-like camera at the International Conference on Robotics and Automation in St. Paul, Minn., in May.
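The article does not say how the map itself is stored. One common textbook way to let a map keep changing as new observations arrive is an occupancy grid updated in log-odds form; the sketch below shows that generic technique on a tiny 1-D strip of cells. It is an illustration only, not the CSAIL team's 3-D representation, and the grid size, the 0.7/0.3 sensor probabilities, and the scan data are all made-up assumptions.

```python
import math

# Hypothetical sensor model (assumption, not from the MIT system):
# how strongly one reading shifts the belief about a single cell.
L_OCC = math.log(0.7 / 0.3)   # reading says "occupied"
L_FREE = math.log(0.3 / 0.7)  # reading says "free"


def update_cell(log_odds: float, observed_occupied: bool) -> float:
    """Fold one new observation into a cell's log-odds (prior p = 0.5, i.e. log-odds 0)."""
    return log_odds + (L_OCC if observed_occupied else L_FREE)


def probability(log_odds: float) -> float:
    """Convert log-odds back to an occupancy probability."""
    return 1.0 - 1.0 / (1.0 + math.exp(log_odds))


# A 1 x 5 strip of map cells, all starting as "unknown" (log-odds 0).
grid = [0.0] * 5

# Three passes down the same corridor. Cell 3 holds an obstacle on the
# first two passes; by the third pass it has been moved away.
scans = [
    [False, False, False, True, False],
    [False, False, False, True, False],
    [False, False, False, False, False],
]

for scan in scans:
    grid = [update_cell(l, occ) for l, occ in zip(grid, scan)]

print([round(probability(l), 2) for l in grid])
```

Because each pass only adds evidence, the map never has to be rebuilt from scratch: cell 3 drifts back toward "uncertain" once the obstacle disappears, while the repeatedly free cells become confidently empty.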

As the robot travels through an unexplored area, the Kinect sensor's visible-light video camera and infrared sensor scan the surroundings, and the system builds a 3-D model of the building's structure. When the robot later passes through the same area again, it compares the new images with those it captured previously. Meanwhile, on-board sensors estimate the robot's motion. By combining this motion data with the visual information, the system determines the robot's location and updates the map accordingly.
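The idea of combining motion data with visual information can be illustrated with a one-dimensional Kalman filter. This is a deliberately simplified stand-in, not the CSAIL algorithm: on-board motion estimates move the position forward while uncertainty grows, and a hypothetical "visual fix" (from recognizing a previously seen place) pulls the estimate back and shrinks the uncertainty. The noise variances and the 3.8 m visual reading are assumed values chosen for illustration.

```python
# Hypothetical noise levels (assumptions): motion estimates accumulate error,
# while a match against a stored view gives a sharper position estimate.
ODOMETRY_VAR = 0.04   # variance added per motion step (m^2)
VISUAL_VAR = 0.01     # variance of a visual position fix (m^2)


def predict(x: float, var: float, moved: float) -> tuple[float, float]:
    """Dead-reckon using on-board motion data; uncertainty grows."""
    return x + moved, var + ODOMETRY_VAR


def correct(x: float, var: float, z: float) -> tuple[float, float]:
    """Blend in a visual position estimate z; uncertainty shrinks."""
    k = var / (var + VISUAL_VAR)  # Kalman gain: how much to trust z over x
    return x + k * (z - x), (1.0 - k) * var


x, var = 0.0, 0.0  # start at a known spot

# Motion sensors report 1.0 m per step; small errors accumulate, so var grows.
for _ in range(4):
    x, var = predict(x, var, moved=1.0)

# Back in an already-mapped area, image matching suggests we are at 3.8 m.
x, var = correct(x, var, z=3.8)
print(f"position ~ {x:.2f} m, variance ~ {var:.4f} m^2")
```

After four steps the motion-only estimate is 4.0 m with high uncertainty; the visual fix moves it close to 3.8 m, and the fused variance ends up smaller than that of either source on its own.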

The team tested the system on a PR2 robot and on a robotic wheelchair. It was able to locate the robot within its 3-D map while traveling at speeds of up to 1.5 m/s. The algorithm could therefore allow robots to move through hospitals or office buildings without any input from humans.

According to Seth Teller, who heads a robotics research group at CSAIL, the system could also serve as a wearable visual aid for blind people or be used in a range of military applications.