In a recently published article in the journal Actuators, researchers from the US introduced a multisensory feedback interface designed to help users carry out robot-assisted liquid pouring tasks with high accuracy.

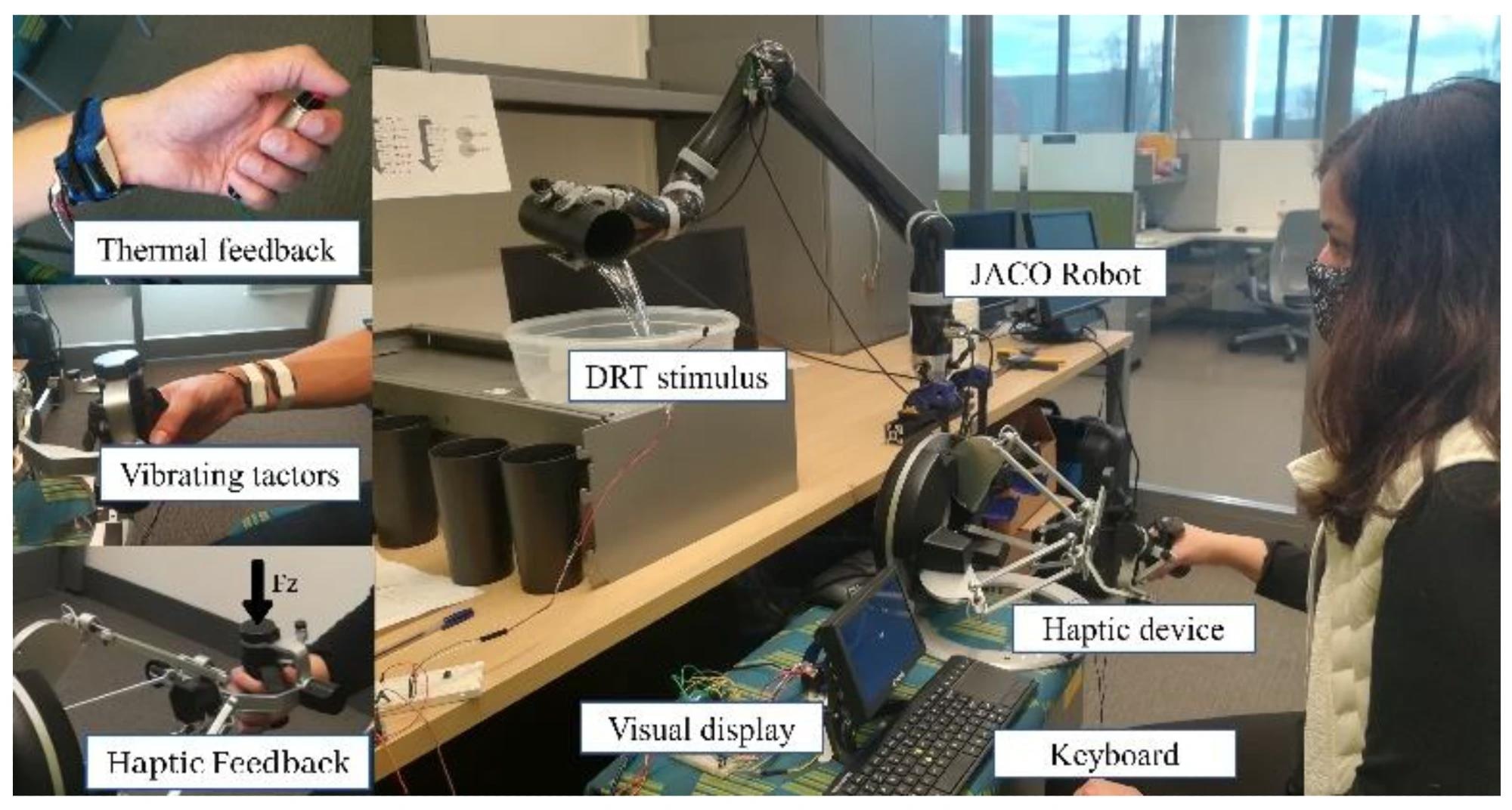

The experimental setup with robot, joystick haptic device, feedback devices, and pouring task apparatus. A tumbler used as a sample vessel is also pictured in the robot grip. Image Credit: https://www.mdpi.com/2076-0825/13/4/152#

The system delivers nuanced sensory feedback while managing the high visual demand of pouring tasks. The research proposes an optimization method for deciding which sensory modality should present each type of feedback, aiming to provide the most useful information without overloading the user's cognitive capacity.

Background

Robot-assisted manipulation is employed across a variety of tasks and environments, including surgery, exploration, hazardous situations, and assistive technology. However, users of robotic manipulators often lack direct sensory information about the objects they handle, such as touch, temperature, and force, which can significantly degrade their interaction experience. Such feedback conveys crucial details about the manipulated objects, enhancing situational awareness and aiding decision-making during tasks.

To address this gap, multimodal feedback interfaces have been developed and tested in various applications. These interfaces aim to provide a more integrated sensory experience by combining different types of feedback.

However, research indicates that certain combinations of modalities might actually be counterproductive, potentially overloading the user. The effectiveness of these combinations can vary depending on the type of task, individual user differences, and the specific context in which they are used. Consequently, studies evaluating multimodal feedback have shown mixed results, reflecting the complexity of optimizing these systems for different applications.

About the Research

In this paper, the researchers have developed a multisensory interface aimed at enhancing robot-assisted pouring tasks. This interface provides nuanced sensory feedback crucial for precise robot operation while managing the high visual demand inherent in such tasks. The study employed an optimization approach to determine the most effective combination of feedback properties and modalities, aiming to maximize feedback perception while minimizing cognitive load.

The system integrates several components with the robotic arm, including a joystick haptic device, a visual display, vibrating tactors, audio output, and a thermal feedback module. Together, these elements convey real-time information on temperature, weight, and liquid level during pouring tasks.

To refine the interface, the researchers defined a set of metrics to serve as costs, including resolution, perception accuracy, cognitive load, and subjective preference ratings. They then formulated the design as a linear assignment problem, using these metrics to find the lowest-cost assignment of feedback properties to sensory modalities.
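For readers unfamiliar with the framework, a linear assignment problem picks a one-to-one pairing between two sets that minimizes a summed cost. A minimal textbook formulation is given below, assuming that each property is presented through exactly one modality and that each modality carries at most one property; the paper's exact constraints may differ. Here P is the set of properties (temperature, weight, liquid level), M is the set of modalities, C_pm is the cost of presenting property p through modality m, and y_pm is a binary variable that equals 1 when that pairing is chosen.

```latex
\min_{y} \sum_{p \in P} \sum_{m \in M} C_{pm}\, y_{pm}
\quad \text{subject to} \quad
\sum_{m \in M} y_{pm} = 1 \;\; \forall p \in P, \qquad
\sum_{p \in P} y_{pm} \le 1 \;\; \forall m \in M, \qquad
y_{pm} \in \{0, 1\}
```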

The interface was evaluated in two experiments. The first, a screening experiment, collected the data needed to compute a minimum-cost solution; the second compared this solution in decision-making tasks against a control condition with no feedback and against an arbitrary design. Together, the two experiments assess the interface's effect on both task performance and user experience.

Research Findings

The screening experiments revealed significant differences across modalities in perception accuracy, detection response task (DRT) hit rate, and subjective preference for each of the tested properties. No significant differences were observed in change response accuracy or DRT reaction time. Subjective preference in particular varied considerably between modalities, a factor that merits further consideration.
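The detection response task is a secondary task used to gauge cognitive load: participants respond (for example, with a button press) whenever a simple stimulus appears, and missed or slow responses indicate higher load. The sketch below shows one common way to turn per-stimulus reaction times into the two DRT metrics mentioned above. It assumes the 100 to 2500 ms valid-response window used in the ISO 17488 DRT standard; the study's actual scoring rules are not described in this article and may differ, and the trial data are made up.

```python
from statistics import mean
from typing import List, Optional, Tuple

# Assumed valid-response window in milliseconds (ISO 17488 convention);
# the study's own scoring window may differ.
MIN_RT_MS = 100
MAX_RT_MS = 2500


def score_drt(reaction_times_ms: List[Optional[float]]) -> Tuple[float, Optional[float]]:
    """Return (hit_rate, mean_reaction_time_ms) for one block of DRT trials.

    Each entry is the reaction time to one stimulus, or None if the
    participant did not respond. Responses outside the window count as misses.
    """
    if not reaction_times_ms:
        raise ValueError("need at least one stimulus")
    hits = [
        rt for rt in reaction_times_ms
        if rt is not None and MIN_RT_MS <= rt <= MAX_RT_MS
    ]
    hit_rate = len(hits) / len(reaction_times_ms)
    # Reaction time is averaged over valid hits only.
    mean_rt = mean(hits) if hits else None
    return hit_rate, mean_rt


# Made-up example: five stimuli, one missed and one answered too slowly.
hit_rate, mean_rt = score_drt([420, 510, None, 2900, 380])
print(hit_rate, mean_rt)
```

Averaging reaction time over hits only keeps misses from distorting the timing metric; misses are already reflected in the hit rate.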

To formalize these results, a cost function was defined, incorporating only the significant metrics identified from the screening experiments. The weights for these metrics were determined subjectively during the screening phase. The optimal solution, derived from this cost function, assigned liquid level feedback to vibration, weight feedback to audio, and temperature feedback to the visual display.
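As a rough illustration of how a weighted cost function and a linear assignment fit together, the sketch below scores every property-modality pair as a weighted sum of (1 - score) over the three significant metrics (perception accuracy, DRT hit rate, subjective preference), then exhaustively searches all one-to-one assignments for the lowest total cost. The scores, the weights, the (1 - score) form, and the restriction to three modalities are all hypothetical choices made for illustration; they are not the study's data or its exact cost function. Exhaustive search is only practical for tiny problems; larger instances would normally use the Hungarian algorithm, for example SciPy's `scipy.optimize.linear_sum_assignment`.

```python
from itertools import permutations

PROPERTIES = ["liquid level", "weight", "temperature"]
MODALITIES = ["vibration", "audio", "visual"]

# Hypothetical weights for (perception accuracy, DRT hit rate, preference).
WEIGHTS = (0.5, 0.3, 0.2)

# Hypothetical normalized scores in [0, 1], higher is better:
# SCORES[p][m] = (perception accuracy, DRT hit rate, preference).
SCORES = [
    [(0.90, 0.85, 0.80), (0.60, 0.70, 0.50), (0.70, 0.60, 0.60)],  # liquid level
    [(0.60, 0.80, 0.50), (0.85, 0.80, 0.70), (0.70, 0.60, 0.60)],  # weight
    [(0.50, 0.80, 0.40), (0.60, 0.75, 0.50), (0.90, 0.65, 0.80)],  # temperature
]


def pair_cost(scores, weights=WEIGHTS):
    """Weighted cost of one pairing: sum of w_k * (1 - score_k)."""
    return sum(w * (1.0 - s) for w, s in zip(weights, scores))


def best_assignment(score_table):
    """Exhaustively search one-to-one property -> modality assignments.

    Works whenever there are at least as many modalities as properties.
    """
    n_props = len(score_table)
    n_mods = len(score_table[0])
    best_perm, best_total = None, float("inf")
    # Each permutation gives a distinct modality index for every property.
    for perm in permutations(range(n_mods), n_props):
        total = sum(pair_cost(score_table[p][m]) for p, m in enumerate(perm))
        if total < best_total:
            best_perm, best_total = perm, total
    return best_perm, best_total


perm, total = best_assignment(SCORES)
for p, m in enumerate(perm):
    print(f"{PROPERTIES[p]} -> {MODALITIES[m]}")
print(f"total cost = {total:.3f}")
```

With these made-up numbers the search happens to return the same pairing the article reports (liquid level to vibration, weight to audio, temperature to visual), but that outcome reflects the placeholder values, not any evidence from the study.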

The second set of experiments showed that the optimal solution significantly outperformed the no-feedback control on all three metrics (success, response time, and subjective demand) in the first and second use cases. In the tilt angle use case, it improved response time and subjective demand relative to the control.

Compared with the arbitrary design, the optimal solution achieved better success and subjective demand scores in the temperature adjustment and empty vessel tests, although these differences were not statistically significant. Overall, the results suggest that optimizing the assignment of feedback to modalities can improve precision and user experience in robot-assisted tasks, with the clearest gains relative to providing no feedback at all.

Applications

The proposed interface can be applied to various scenarios where users need to remotely manipulate objects with different properties and make decisions based on sensory feedback. For example, the interface can be used for assistive technology to help individuals with disabilities perform daily living activities, such as making a drink or preparing a meal.

The interface could also support exploration or work in hazardous settings, such as handling hot or cold substances or liquids at different fill levels, where it may enhance situational awareness, task performance, and user satisfaction.

Conclusion

In summary, the paper introduced an optimization approach for designing a multisensory feedback interface tailored for robot-assisted pouring tasks. Leveraging a linear assignment problem framework, the method produced a minimum-cost solution by considering metrics such as feedback perception, cognitive load, and subjective preference. Results indicated that the optimized feedback system significantly outperformed the no-feedback control and performed better than an arbitrary design, although the differences from the arbitrary design were not statistically significant.

The researchers acknowledged the study's limitations and suggested directions for improving the interface's effectiveness and versatility in practical applications. They recommended incorporating additional properties, modalities, and metrics, and conducting trials in more realistic and complex task environments.

Journal Reference

Marambe, M.S.; Duerstock, B.S.; Wachs, J.P. Optimization Approach for Multisensory Feedback in Robot-Assisted Pouring Task. Actuators 2024, 13, 152. https://doi.org/10.3390/act13040152, https://www.mdpi.com/2076-0825/13/4/152

Disclaimer: The views expressed here are those of the author expressed in their private capacity and do not necessarily represent the views of AZoM.com Limited T/A AZoNetwork the owner and operator of this website. This disclaimer forms part of the Terms and conditions of use of this website.