Aerospace experts from across North America are coming to the University of Cincinnati's new Digital Futures building this week for a conference on artificial intelligence.

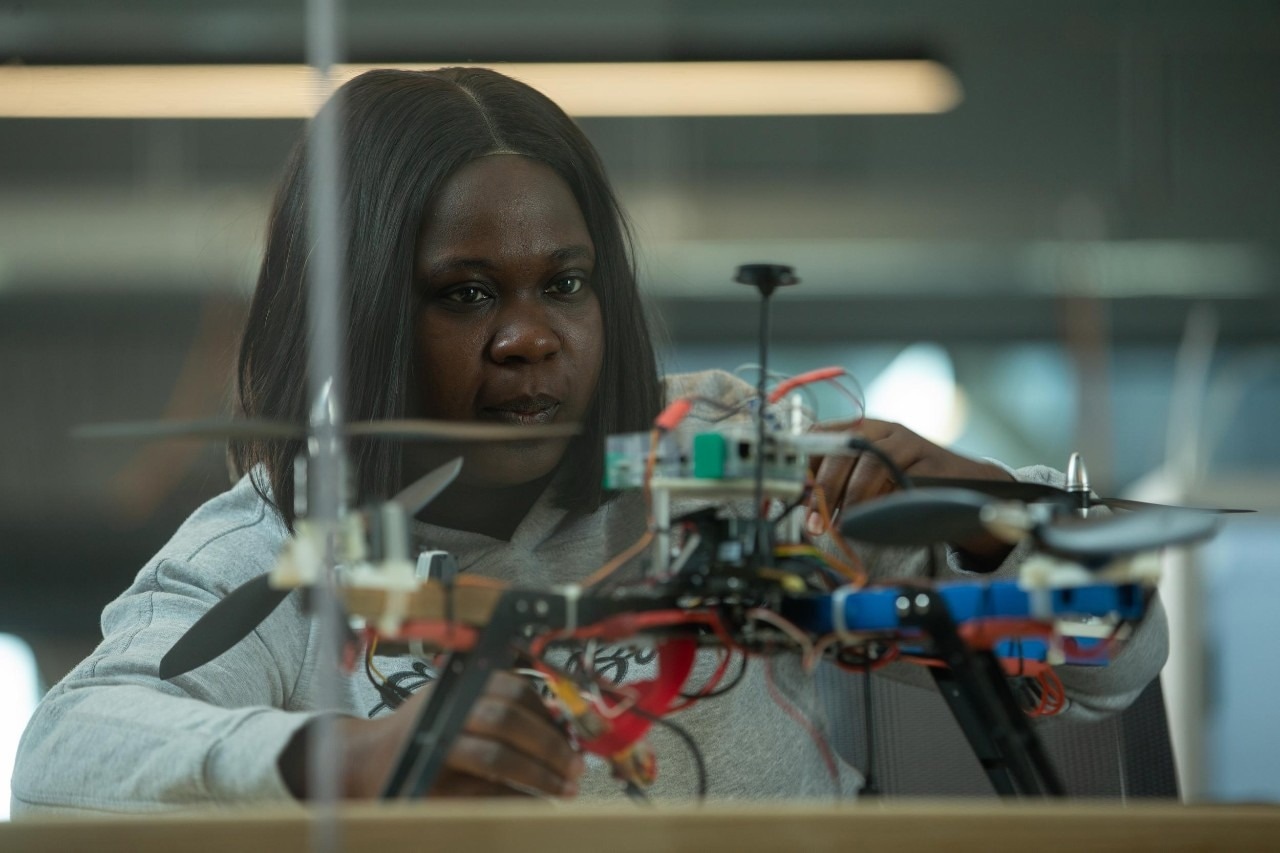

A UC College of Engineering and Applied Science graduate student works on a drone at UC's Digital Futures building, which features a high-bay drone lab. UC is hosting an aerospace conference investigating new applications for artificial intelligence. Image Credit: Andrew Higley/UC Marketing + Brand

UC is hosting the annual conference of the North American Fuzzy Information Processing Society. The international research conference will bring aerospace experts to Cincinnati to share the latest on artificial intelligence relating to drones, aviation, space exploration and other applications.

One of the conference sponsors, Thales, recently struck a five-year research partnership with UC. Thales is a France-based international holding company that manufactures, markets and sells systems for the aeronautics, naval and defense sectors.

The conference demonstrates how Ohio continues to play an influential role in aerospace, a legacy dating back to the Wright brothers and Ohio native Neil Armstrong, the first man on the moon, who taught aerospace engineering at UC after leaving NASA. Ohio ranks as the fourth-best state in the nation for new aerospace manufacturing.

Topics covered at the conference include using AI to refuel spacecraft, predicting passenger crowding at airports and creating drone collision avoidance systems, among others. These systems rely on a type of artificial intelligence known as fuzzy logic, which works with degrees of truth rather than a binary true-false dichotomy. Because its reasoning can be expressed as human-readable rules, this kind of artificial intelligence is both explainable and transparent.
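
To illustrate what "degrees of truth" means in practice, here is a minimal Python sketch of a single fuzzy rule for a drone deciding how urgently to evade an obstacle. It is an assumption-laden toy, not the method of any researcher or system mentioned in this article: the function names (`falling`, `rising`, `evade_urgency`) and every threshold are hypothetical and chosen only for illustration.

```python
def falling(x, lo, hi):
    """Degree of truth that starts at 1.0 below `lo` and drops linearly to 0.0 at `hi`."""
    if x <= lo:
        return 1.0
    if x >= hi:
        return 0.0
    return (hi - x) / (hi - lo)


def rising(x, lo, hi):
    """Degree of truth that starts at 0.0 below `lo` and climbs linearly to 1.0 at `hi`."""
    return 1.0 - falling(x, lo, hi)


def evade_urgency(distance_m, closing_speed_mps):
    """Rule: IF the obstacle is near AND it is closing fast THEN evade.

    The thresholds are hypothetical. Fuzzy AND is taken as the minimum of
    the two degrees of truth, a common convention in fuzzy logic.
    """
    near = falling(distance_m, 5.0, 20.0)        # fully "near" at 5 m, not near at 20 m
    fast = rising(closing_speed_mps, 2.0, 10.0)  # not "fast" at 2 m/s, fully fast at 10 m/s
    return min(near, fast)


# An obstacle 12.5 m away closing at 8 m/s is "near" to degree 0.5
# and "fast" to degree 0.75, so the rule fires with strength 0.5.
print(evade_urgency(12.5, 8.0))
```

Each intermediate value can be read off and explained in plain language ("the obstacle is half near and mostly fast"), which is the sense in which such rule-based systems are described as transparent.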

“They’re coming to Cincinnati because we’re the center of the fuzzy engineering application universe,” said Kelly Cohen, a professor of aerospace engineering in UC’s Digital Futures and College of Engineering and Applied Science.

Cohen said technology experts increasingly are warning about the risks of artificial intelligence systems that can’t easily be explained.

“Just last week the White House talked about the vast potential for AI,” Cohen said.

The Biden administration announced a road map to focus federal investments in responsible AI research and development, solicited public comment on mitigating AI risks while protecting individuals’ rights and safety, and released a new report on AI in education that identifies the potential benefits and challenges of these emerging technologies.

“Artificial intelligence has to be responsible, as in trustworthy. And trustworthy is defined as, ‘Can you explain your decisions?’ Fuzzy goes a long way toward explaining what is going on in the decision making,” Cohen said.

“Mainstream AI has fallen apart because it’s not trustworthy. It’s a black box. It’s brittle. You can’t use it to make decisions that could put people’s lives in danger,” Cohen said.

Several UC students and graduates will be presenting their AI research at the conference, which wraps up Friday, June 2.