Scientists recently developed a mind-reading system that decodes neural signals from the brain during arm movement. An individual can use the method to control a robotic arm via a brain-machine interface (BMI).

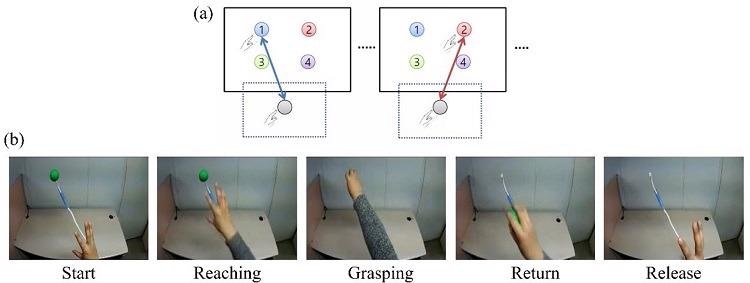

Experimental paradigm. Subjects were instructed to perform reach-and-grasp movements to designate the locations of the target in three-dimensional space. (a) Subjects A and B were provided the visual cue as a real tennis ball at one of four pseudo-randomized locations. (b) Subjects A and B were provided the visual cue as a virtual reality clip showing a sequence of five stages of a reach-and-grasp movement. Image Credit: Korea Advanced Institute of Science and Technology.

The study was published in the journal Applied Soft Computing.

A BMI is a device that converts nerve signals into commands for controlling a machine, like a robotic limb or a computer. Electroencephalography (EEG) and electrocorticography (ECoG) are the two primary methods for tracking neural signals in BMIs.

EEG records signals from electrodes on the scalp’s surface and is widely used because it is inexpensive, non-invasive, safe and simple to use. However, because EEG has a low spatial resolution and picks up irrelevant neural signals, it is difficult to deduce people’s intentions from it.

ECoG, on the other hand, is an invasive method that involves placing electrodes directly on the surface of the cerebral cortex, beneath the skull. Compared to EEG, ECoG has a much higher spatial resolution and less background noise for monitoring neural signals. This method, however, has numerous disadvantages.

“The ECoG is primarily used to find potential sources of epileptic seizures, meaning the electrodes are placed in different locations for different patients and may not be in the optimal regions of the brain for detecting sensory and movement signals. This inconsistency makes it difficult to decode brain signals to predict movements.”

Jaeseung Jeong, Professor and Brain Scientist, Korea Advanced Institute of Science and Technology

To address these issues, Professor Jeong’s team devised a new technique for decoding ECoG neural signals during arm movement. The system combines an “echo-state network,” a machine-learning model for analyzing and forecasting neural signals, with a Gaussian distribution, a mathematical probability model.
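To give a rough sense of how an echo state network can be paired with a Gaussian readout, here is a minimal Python sketch. It is not the authors' decoder: the class name `EchoStateGaussianDecoder`, the helper `make_synthetic_trials`, every parameter value, the time-averaged reservoir features, and the synthetic "trials" are all assumptions made for illustration. A fixed random reservoir turns each multichannel trial into a feature vector, and each movement class is then modeled by a Gaussian with its own mean and a shared diagonal variance.

```python
import numpy as np


class EchoStateGaussianDecoder:
    """Toy echo state network with a per-class Gaussian readout (illustrative only)."""

    def __init__(self, n_inputs, n_reservoir=100, spectral_radius=0.9, leak=0.3, seed=0):
        rng = np.random.default_rng(seed)
        # Input and recurrent weights are random and never trained.
        self.w_in = rng.uniform(-0.5, 0.5, size=(n_reservoir, n_inputs))
        w = rng.uniform(-0.5, 0.5, size=(n_reservoir, n_reservoir))
        # Rescale so the largest absolute eigenvalue equals spectral_radius.
        w *= spectral_radius / np.max(np.abs(np.linalg.eigvals(w)))
        self.w = w
        self.leak = leak

    def _features(self, trial):
        """Drive the reservoir with one trial (time x channels); return the mean state."""
        state = np.zeros(self.w.shape[0])
        total = np.zeros_like(state)
        for u in trial:
            pre = self.w_in @ u + self.w @ state
            state = (1 - self.leak) * state + self.leak * np.tanh(pre)
            total += state
        return total / len(trial)

    def fit(self, trials, labels):
        feats = np.array([self._features(t) for t in trials])
        labels = np.asarray(labels)
        self.classes_ = np.unique(labels)
        # One Gaussian mean per class in reservoir-feature space.
        self.means_ = np.array([feats[labels == c].mean(axis=0) for c in self.classes_])
        # Shared diagonal variance pooled over classes, with a small floor.
        centered = feats - self.means_[np.searchsorted(self.classes_, labels)]
        self.var_ = centered.var(axis=0) + 1e-6
        return self

    def predict(self, trials):
        feats = np.array([self._features(t) for t in trials])
        diff = feats[:, None, :] - self.means_[None, :, :]
        # Gaussian log-likelihood per class, dropping terms common to all classes.
        log_lik = -0.5 * np.sum(diff ** 2 / self.var_, axis=2)
        return self.classes_[np.argmax(log_lik, axis=1)]


def make_synthetic_trials(n_per_class=20, n_classes=4, n_steps=40, n_channels=8, seed=1):
    """Hypothetical stand-in for recorded data: each class shifts the channel means."""
    rng = np.random.default_rng(seed)
    offsets = rng.normal(0.0, 1.0, size=(n_classes, n_channels))
    trials, labels = [], []
    for c in range(n_classes):
        for _ in range(n_per_class):
            trials.append(offsets[c] + 0.5 * rng.normal(size=(n_steps, n_channels)))
            labels.append(c)
    return trials, np.array(labels)


if __name__ == "__main__":
    trials, labels = make_synthetic_trials()
    # Train on even-indexed trials, evaluate on odd-indexed ones.
    decoder = EchoStateGaussianDecoder(n_inputs=8).fit(trials[::2], labels[::2])
    accuracy = (decoder.predict(trials[1::2]) == labels[1::2]).mean()
    print(f"held-out accuracy on synthetic data: {accuracy:.2f}")
```

Only the readout is fitted here, which is the usual appeal of echo state networks: the recurrent weights stay fixed, so training reduces to estimating a few statistics per class. Real ECoG decoding would also need preprocessing and feature choices that this sketch does not attempt.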

In the study, the scientists recorded ECoG signals from four epilepsy patients while they carried out a reach-and-grasp task. Because the electrodes were positioned according to the potential sources of each patient’s epileptic seizures, only 22% to 44% of them were located in the regions of the brain that control movement.

During the movement task, the participants received visual cues either from a real tennis ball placed in front of them or from a virtual reality headset that displayed a first-person clip of a human arm reaching forward.

While wearing motion sensors on their wrists and fingers, the participants were asked to reach forward, grasp an object, then return their hand and release the object. In a second task, they were asked to imagine reaching forward without moving their arms.

The researchers analyzed the signals from the ECoG electrodes during real and imagined arm movements to see whether the new system could predict the movement’s direction from the neural signals.

The researchers found that, in both real and virtual tasks, the novel decoder successfully classified arm movements in 24 directions in three-dimensional space, with results that were at least five times more accurate than chance. Using a computer simulation, they also demonstrated that the new ECoG decoder could control the movements of a robotic arm.
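For a sense of scale, the short Python calculation below works out what "chance" means for 24 directions. It assumes all 24 directions are equally likely and reads "five times more accurate than chance" as five times the chance rate; both are assumptions, since the article does not give the exact figures. The function name `chance_accuracy` is made up for this example.

```python
def chance_accuracy(n_classes: int) -> float:
    """Expected accuracy of uniform random guessing over equally likely classes."""
    return 1.0 / n_classes


chance = chance_accuracy(24)   # one guess in 24 is right on average
threshold = 5 * chance         # "five times chance", under the reading assumed above
print(f"chance: {chance:.1%}, five times chance: {threshold:.1%}")
# prints "chance: 4.2%, five times chance: 20.8%"
```

Under these assumptions, the reported result corresponds to picking the correct direction at least about one time in five, compared with roughly one in 24 for random guessing.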

The findings suggest that the new machine learning-based BMI system successfully interpreted the direction of the intended movements using ECoG signals. The researchers intend to enhance the efficiency and accuracy of the decoder. In the future, it could be used in a real-time BMI device to assist people with movement or sensory impairments.

The current study was supported by the KAIST Global Singularity Research Program of 2021, the Brain Research Program of the National Research Foundation of Korea funded by the Ministry of Science, ICT, and Future Planning, and the Basic Science Research Program through the National Research Foundation of Korea funded by the Ministry of Education.

Journal Reference:

Kim, H.-H. & Jeong, J. (2022) An electrocorticographic decoder for arm movement for brain–machine interface using an echo state network and Gaussian readout. Applied Soft Computing. doi.org/10.1016/j.asoc.2021.108393.