Aug 31 2020

Soon after Hurricane Laura struck the Gulf Coast on August 27, 2020, people began flying drones to record the damage and posting the videos on social media.

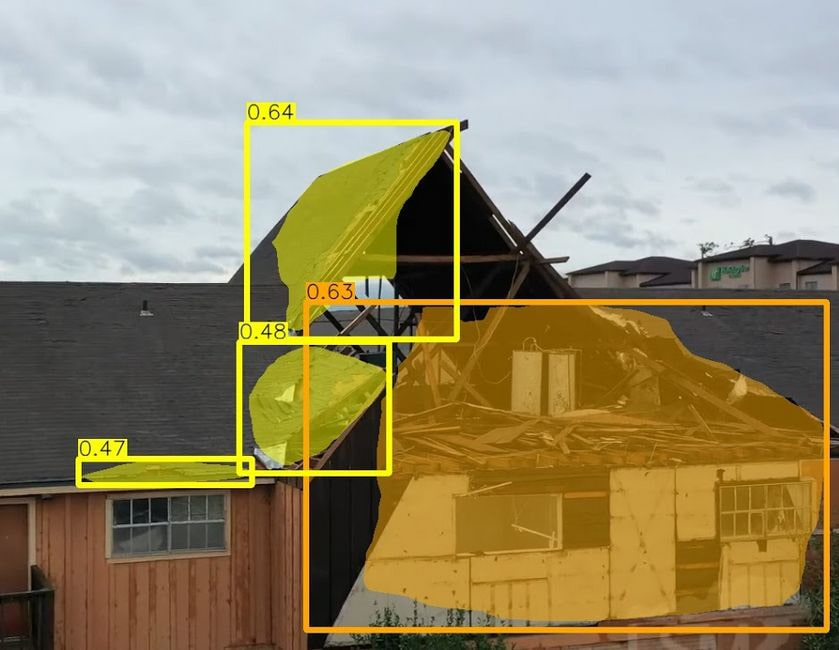

A team of CMU researchers is using AI to develop a system that automatically identifies buildings in drone footage of natural disasters and determines if they are damaged. The system can identify if the damage is slight (yellow) or serious (orange), or if the building has been destroyed. Image Credit: Carnegie Mellon University.


Researchers at Carnegie Mellon University say such videos can be a valuable resource, and the team is exploring ways to use them to assess damage rapidly.

Using artificial intelligence (AI), the team is designing a system that automatically detects buildings in the footage and makes a preliminary determination of whether each building is damaged and, if so, how severely.

Current damage assessments are mostly based on individuals detecting and documenting damage to a building. That can be slow, expensive and labor-intensive work.

Junwei Liang, PhD Student, Language Technologies Institute, Carnegie Mellon University

Satellite imagery shows damage from only a single, vertical point of view and therefore does not offer sufficient detail. Drones, by contrast, can collect close-up data from several viewpoints and angles. Although first responders can fly drones to assess damage, drones are now widely owned by residents and are routinely flown after natural disasters.

The number of drone videos available on social media soon after a disaster means they can be a valuable resource for doing timely damage assessments.

Junwei Liang, PhD Student, Language Technologies Institute, Carnegie Mellon University

Xiaoyu Zhu, a master's student in AI and Innovation in the Language Technologies Institute, said the system can overlay masks on the parts of buildings in the video that appear damaged and determine whether the damage is slight or serious, or whether the building has been destroyed.

The researchers will present their results at the Winter Conference on Applications of Computer Vision (WACV 2021), which will be held virtually.

Led by Alexander Hauptmann, a research professor in the Language Technologies Institute, the researchers downloaded drone videos of tornado and hurricane damage in Illinois, Missouri, Florida, North Carolina, Alabama, and Texas. They then annotated the videos to mark damaged buildings and the severity of the damage.

The resulting dataset, the first to use drone videos to assess building damage from natural disasters, was used to train the AI system, called MSNet, to recognize damaged buildings. The team has made the dataset available to other research teams through GitHub.

Liang said that because the videos do not include GPS coordinates, the team is also working on a geolocation method that would let users quickly determine where the damaged buildings are.

This would require training the system on images from Google Street View. MSNet could then match location cues learned from Street View to features in the video.

The study was sponsored by the National Institute of Standards and Technology.