Jul 27 2020

Artificial intelligence, or AI, plays a hugely positive role in the world. It has immense untapped potential, from making roads safer with autonomous cars to reducing pollution and improving healthcare through medical big-data analysis.

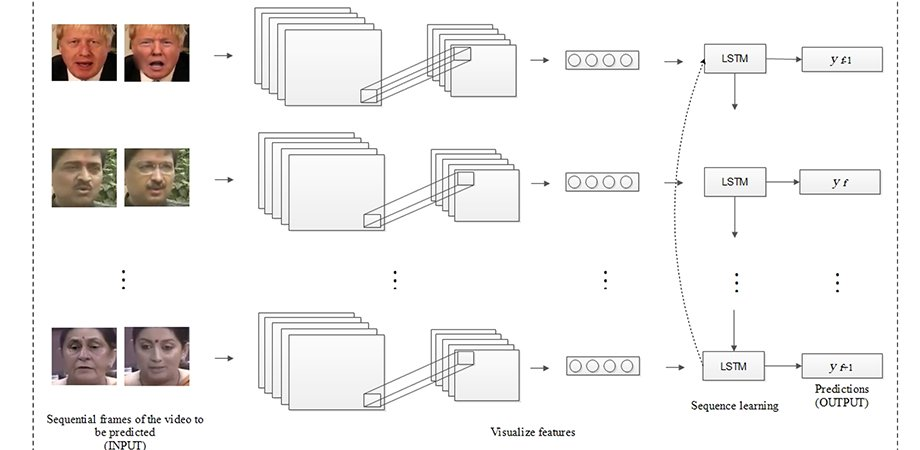

AI-based technology proposed by Kaur et al. can detect deepfake videos within seconds. Image Credit: SPIE.

Regrettably, like any technology, AI can also be used by people with ulterior motives.

Deepfake Face Swapping

An AI-based method known as “deepfake” (a mixture of “fake” and “deep learning”) is one such technology that employs deep neural networks to effortlessly produce fake videos. In these videos, the face of one individual is superimposed on that of another person.

Such tools can be used easily, even by people who have no background in video editing or programming. A method like this can be used for producing compromising videos of almost anyone, such as corporate public figures, politicians, and celebrities.

In the present period of instant communication and unparalleled connectedness—when news can become viral in just a few hours—such videos not only damage the reputation of those in the videos but also cause considerable harm to the cultural and social psyche of the related communities.

AI to the Rescue

While “deepfakes” definitely exist, the damage can be limited if such videos are identified automatically. And the best way to do this is to use AI itself.

Although AI-based deepfake video detection techniques already exist, scientists from Thapar Institute of Engineering and Technology and Indraprastha Institute of Information Technology in India have designed a new algorithm that achieves better precision and accuracy. The researchers’ study could set a new benchmark in the effort to counter one of the many types of infodemics being faced at present.

At a high level, the researchers’ method involves a classifier that determines whether an input video is real or a deepfake. To reach accurate decisions, the algorithm first had to be trained, like all AI-based applications.

To this end, the scientists first built a dataset of 200 videos of various pairs of similar-looking politicians; of these, 100 were real and the remaining 100 were generated using deepfake.

A portion of the frames from these videos was labeled and fed to the algorithm as training data, while the remaining frames served as a validation dataset to check whether the program could correctly detect the face-swapped videos.
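The training and validation split described above can be sketched in a few lines of Python. This is only an illustration: the article does not give the split ratio or the shuffling procedure, so the 80/20 ratio, the fixed seed, the function name `split_frames`, and the toy frame identifiers are all assumptions.

```python
import random


def split_frames(labeled_frames, train_fraction=0.8, seed=0):
    """Shuffle (frame_id, label) pairs and split them into training and validation lists.

    train_fraction and seed are assumed values, not taken from the study.
    """
    if not 0.0 < train_fraction < 1.0:
        raise ValueError("train_fraction must be strictly between 0 and 1")
    shuffled = list(labeled_frames)         # copy so the caller's list is untouched
    random.Random(seed).shuffle(shuffled)   # seeded so the split is reproducible
    cut = int(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


# Hypothetical toy data: 10 frame ids with alternating "real" / "fake" labels.
frames = [(f"frame_{i:03d}", "real" if i % 2 == 0 else "fake") for i in range(10)]
train, validation = split_frames(frames)
print(len(train), "training frames,", len(validation), "validation frames")
```

Frames from the same video tend to look nearly identical, so in practice it is often safer to split by video rather than by individual frame; the article does not say which approach the researchers took.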

Deepfake Detection Method

The algorithm itself can be divided into two levels. At the first level, the video frames undergo light image processing, such as rescaling, zooming, and horizontal flipping, to prepare them for the next stages. The second level consists of two key components: a convolutional neural network (CNN) and a long short-term memory (LSTM) stage.
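The three first-level operations can be illustrated on a tiny grayscale frame stored as a list of rows. This is a rough sketch rather than the researchers' pipeline: it assumes that "rescaling" means scaling pixel intensities from 0-255 to 0-1 (the article could also mean resizing), that zooming is a centre crop resized with nearest-neighbour sampling, and the function names and the 4x4 frame are made up for illustration.

```python
def rescale(frame, factor=1.0 / 255.0):
    """Scale every pixel value by `factor` (assumed: maps 0..255 to 0..1)."""
    return [[pixel * factor for pixel in row] for row in frame]


def horizontal_flip(frame):
    """Mirror the frame left-to-right by reversing each row."""
    return [row[::-1] for row in frame]


def zoom_in(frame, zoom=2.0):
    """Crop the central 1/zoom region and resize it back to the original
    size using nearest-neighbour sampling."""
    if zoom < 1.0:
        raise ValueError("this sketch only zooms in (zoom >= 1)")
    height, width = len(frame), len(frame[0])
    crop_h = max(1, round(height / zoom))
    crop_w = max(1, round(width / zoom))
    top, left = (height - crop_h) // 2, (width - crop_w) // 2
    return [
        [frame[top + (y * crop_h) // height][left + (x * crop_w) // width]
         for x in range(width)]
        for y in range(height)
    ]


# Hypothetical 4x4 grayscale frame with pixel values in 0..255.
frame = [
    [0, 51, 102, 153],
    [10, 20, 30, 40],
    [50, 60, 70, 80],
    [204, 255, 0, 51],
]
flipped = horizontal_flip(frame)
zoomed = zoom_in(frame, zoom=2.0)
scaled = rescale(frame)
```

Transformations like these are commonly applied at random during training so that the classifier sees slightly different versions of each frame.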

The CNN, a special kind of neural network, can automatically extract features from the sequential frames of a video. Which features are extracted, and how they are defined, is determined by the CNN itself during training.

The LSTM is a kind of recurrent neural network that is particularly well suited to processing time-series data (sequential video frames, in this case). When original and deepfake videos are compared, the LSTM network readily identifies the inconsistencies present in the frames of the deepfake videos. The new algorithm can detect such inconsistencies using only about 2 seconds of video material.
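Because the LSTM works on ordered sequences, a video has to be cut into short runs of consecutive frames before it reaches the network. The sketch below shows one way to form roughly 2-second clips. The frame rate of 25 frames per second, the non-overlapping windows, and the helper names `frames_per_clip` and `make_clips` are assumptions made for illustration; the article states only the approximate clip duration.

```python
def frames_per_clip(fps, seconds=2.0):
    """Number of frames that cover `seconds` of video at `fps` frames per second."""
    return max(1, round(fps * seconds))


def make_clips(frames, clip_length, stride=None):
    """Group frames into fixed-length clips of consecutive frames.

    Trailing frames that cannot fill a whole clip are dropped.
    stride defaults to clip_length, which gives non-overlapping clips.
    """
    stride = clip_length if stride is None else stride
    if clip_length < 1 or stride < 1:
        raise ValueError("clip_length and stride must be positive")
    last_start = len(frames) - clip_length
    return [frames[start:start + clip_length]
            for start in range(0, last_start + 1, stride)]


# Hypothetical video: 5 seconds at an assumed 25 frames per second.
fps = 25
video = list(range(5 * fps))              # stand-ins for decoded frames
clip_length = frames_per_clip(fps)        # 50 frames for about 2 seconds
clips = make_clips(video, clip_length)    # 2 full clips; the last 25 frames are dropped
```

In a CNN plus LSTM arrangement, each frame of a clip would first be turned into a feature vector by the CNN, and the resulting sequence of vectors would then be passed to the LSTM in order.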

The researchers tested this new technique and compared its performance with that of other state-of-the-art AI-based methods for identifying deepfake videos.

Overall, on a total of 181,608 real and deepfake frames extracted from the video dataset compiled for this work, the proposed technique achieved a higher precision of 99.62% and an accuracy of 98.21%, with a comparatively shorter total training time.
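For readers unfamiliar with the two metrics, the sketch below shows how precision and accuracy are computed from the four outcomes of a binary classifier. The counts are invented purely for illustration and are not the study's results, and treating "deepfake" as the positive class is an assumption, since the article does not specify it.

```python
def precision(true_pos, false_pos):
    """Share of frames flagged as deepfake that really are deepfakes."""
    flagged = true_pos + false_pos
    if flagged == 0:
        raise ValueError("precision is undefined when nothing is flagged")
    return true_pos / flagged


def accuracy(true_pos, true_neg, false_pos, false_neg):
    """Share of all frames, real or fake, that were classified correctly."""
    total = true_pos + true_neg + false_pos + false_neg
    if total == 0:
        raise ValueError("accuracy is undefined for an empty dataset")
    return (true_pos + true_neg) / total


# Hypothetical counts for 200 frames (NOT taken from the study).
true_pos, true_neg, false_pos, false_neg = 90, 85, 5, 20
print(f"precision = {precision(true_pos, false_pos):.4f}")
print(f"accuracy  = {accuracy(true_pos, true_neg, false_pos, false_neg):.4f}")
```

The two numbers answer different questions: precision drops only when real frames are wrongly flagged as fake, whereas accuracy also drops when deepfake frames slip through undetected.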

The research underscores the double-edged nature of AI. Although AI can be used for spreading misinformation and damaging reputations, it can also be used for preventing infodemics, the negative implications of which are intensely felt amid disasters like the current COVID-19 pandemic.

The study, titled “Deepfakes: temporal sequential analysis to detect face-swapped video clips using convolutional long short-term memory,” was authored by Kaur, Kumar, and Kumaraguru and published in the Journal of Electronic Imaging.

Journal Reference:

Kaur, S., et al. (2020) Deepfakes: temporal sequential analysis to detect face-swapped video clips using convolutional long short-term memory. Journal of Electronic Imaging. doi.org/10.1117/1.JEI.29.3.033013.