Jul 15 2020

Kitchen robots are a popular vision of the future. However, if a robot today tries to pick up a kitchen staple such as a shiny knife or a clear measuring cup, it will likely fail. Transparent and reflective objects are the stuff of robot nightmares.

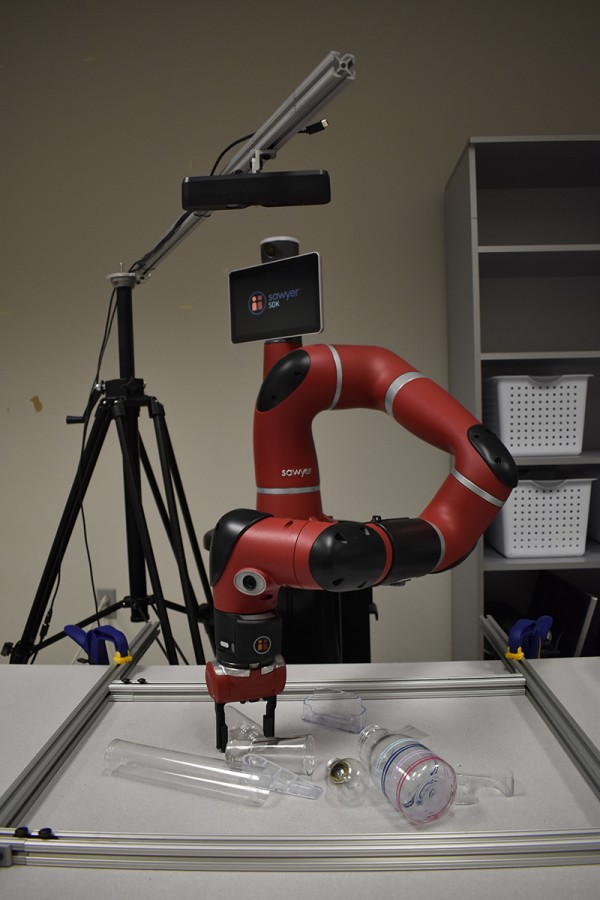

CMU roboticists have developed a new technique for teaching robots to pick up transparent objects. Image Credit: Carnegie Mellon University.


Roboticists at Carnegie Mellon University, however, have developed a new technique that teaches robots to grasp these troublesome objects successfully.

The technique requires no fancy sensors, exhaustive training, or human guidance; it relies primarily on a color camera. The researchers will present the new system at this summer's International Conference on Robotics and Automation, which is being held virtually.

According to David Held, an assistant professor in CMU's Robotics Institute, depth cameras, which determine an object's shape by shining infrared light on it, work well for identifying opaque objects.

But infrared light passes straight through clear objects and scatters off reflective surfaces. As a result, depth cameras cannot compute an accurate shape for transparent and reflective objects, and instead produce largely flat or hole-riddled shapes.

A color camera, by contrast, can see transparent and reflective objects as well as opaque ones. So the CMU researchers developed a color camera system that recognizes shapes based on color.

A standard camera cannot measure shapes the way a depth camera can. However, the team trained the new system to mimic the depth system and implicitly infer shape in order to grasp objects. They did this by pairing depth camera images of opaque objects with color images of the same objects.
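The idea of training a color-only system to mimic a depth-based one on paired images of opaque objects can be illustrated with a toy "teacher/student" setup, a pattern often called cross-modal distillation. This is a minimal sketch under stated assumptions, not CMU's implementation: the linear `teacher_score` stands in for a pretrained depth-based grasp network, the linear student stands in for a color-image network, and the synthetic features (a hidden shape value `s` and an irrelevant color cue) are hypothetical.

```python
import random

def teacher_score(depth_feature: float) -> float:
    """Hypothetical pretrained depth-based grasp scorer (a fixed linear map)."""
    return 2.0 * depth_feature - 1.0

def student_score(w, b, color_features):
    """Linear grasp scorer that sees only color-derived features."""
    return sum(wi * xi for wi, xi in zip(w, color_features)) + b

def train_student(pairs, lr=0.05, epochs=200):
    """Fit the color-only student to the depth teacher's outputs.

    pairs: (color_features, depth_feature) tuples from OPAQUE objects,
    where the depth reading is reliable enough to feed the teacher.
    """
    w = [0.0] * len(pairs[0][0])
    b = 0.0
    for _ in range(epochs):
        for color, depth in pairs:
            target = teacher_score(depth)  # the teacher's output is the label
            err = student_score(w, b, color) - target
            # One SGD step on the squared error 0.5 * err**2.
            w = [wi - lr * err * xi for wi, xi in zip(w, color)]
            b -= lr * err
    return w, b

# Synthetic paired data (hypothetical): the depth camera sees the hidden shape
# value s directly; the color image encodes it differently (as 1 - s) plus an
# irrelevant cue that the student should learn to ignore.
rng = random.Random(0)
pairs = []
for _ in range(50):
    s = rng.random()
    pairs.append(([1.0 - s, rng.random()], s))

w, b = train_student(pairs)

# A glass with hidden shape s = 0.8: its color cue still carries the shape,
# while the depth camera returns a hole (0.0).
glass_color = [1.0 - 0.8, 0.3]
print(round(student_score(w, b, glass_color), 3))  # ≈ 0.6, i.e. teacher_score(0.8)
print(teacher_score(0.0))  # -1.0: fed a depth hole, the depth-only scorer is wrong
```

The student never sees a depth value at test time; it only learned, from opaque objects, to reproduce what the depth-based scorer would have said.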

Once trained, the color camera system was applied to shiny and transparent objects. Using those color images, together with whatever scant information the depth camera could provide, the system grasped these challenging objects with a high degree of success.
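The article does not say exactly how the color-based estimate and the leftover depth information are combined. One simple way to picture it is a coverage-weighted blend: the more of the depth image is missing (the "holes" described above), the more weight goes to the color-based grasp score. The sketch below assumes that heuristic purely for illustration; `valid_fraction`, `fuse_grasp_scores`, the 0.0-means-hole convention, and the example scores are all hypothetical.

```python
def valid_fraction(depth_map):
    """Share of depth pixels that returned a reading (0.0 marks a hole)."""
    cells = [d for row in depth_map for d in row]
    return sum(1 for d in cells if d > 0.0) / len(cells)

def fuse_grasp_scores(depth_map, depth_score, color_score):
    """Blend depth- and color-based grasp scores by depth coverage.

    Hypothetical heuristic: the more of the depth map is missing, the more
    weight goes to the color-based estimate.
    """
    v = valid_fraction(depth_map)
    return v * depth_score + (1.0 - v) * color_score

opaque_mug = [[0.52, 0.51], [0.50, 0.52]]  # full depth coverage (metres)
glass = [[0.0, 0.0], [0.49, 0.0]]          # mostly holes
print(fuse_grasp_scores(opaque_mug, 0.9, 0.7))  # 0.9: depth fully trusted
print(fuse_grasp_scores(glass, 0.1, 0.8))       # leans toward the color score
```

A learned fusion would likely do better than a fixed blend; the point here is only that sparse depth can still contribute instead of being discarded.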

We do sometimes miss, but for the most part it did a pretty good job, much better than any previous system for grasping transparent or reflective objects.

David Held, Assistant Professor, The Robotics Institute, Carnegie Mellon University

According to Thomas Weng, a PhD student in robotics, the system does not grasp reflective or transparent objects as reliably as opaque ones, but it is far more successful than systems that rely on depth cameras alone. Moreover, the multimodal transfer learning used to train the system was so effective that the color system proved as good as the depth camera system at grasping opaque objects.

Our system not only can pick up individual transparent and reflective objects, but it can also grasp such objects in cluttered piles.

Thomas Weng, PhD Student in Robotics, Carnegie Mellon University

Previous efforts at robotic grasping of transparent objects have relied either on training through exhaustive trial and error, on the order of 800,000 grasp attempts, or on expensive human labeling of objects.

The CMU system uses a commercial RGB-D camera that captures both color images (RGB) and depth images (D). With this single sensor, the system can sort through recyclables or other collections of objects, whether transparent, opaque, or reflective.

Apart from Held and Weng, the research group included Oliver Kroemer, assistant professor of robotics at CMU; Amith Pallankize, a senior at BITS Pilani in India; and Yimin Tang, a senior at ShanghaiTech. This study was supported by the National Science Foundation, Sony Corporation, the Office of Naval Research, Efort Intelligent Equipment Co., and ShanghaiTech.

Gripping robots typically have trouble grabbing transparent or shiny objects. A new technique from Carnegie Mellon University relies on a color camera system and machine learning to recognize shapes based on color. Video Credit: Carnegie Mellon University.