Nov 22 2019

Researchers at the Massachusetts Institute of Technology (MIT) have developed a new method to efficiently optimize the design and control of soft robots for specific tasks, a problem that has traditionally been a major computational undertaking.

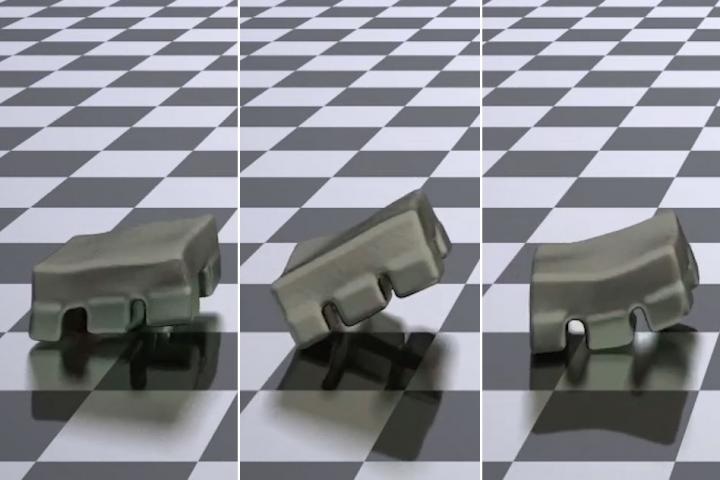

An MIT-invented model efficiently and simultaneously optimizes the control and design of soft robots for target tasks, which has traditionally been a monumental undertaking in computation. The model, for instance, was significantly faster and more accurate than state-of-the-art methods at simulating how quadrupedal robots (pictured) should move to reach target destinations. Image Credit: Andrew Spielberg, Daniela Rus, Wojciech Matusik, Allan Zhao, Tao Du, and Yuanming Hu.

Soft robots have bodies that are stretchy, flexible, and springy, allowing them to move in a virtually unlimited number of ways at any given moment. From a computational standpoint, this makes for an extremely complicated “state representation,” which describes how each part of the robot is moving.

For soft robots, state representations can potentially have an unlimited number of dimensions, making it challenging to compute the best way for a robot to complete complicated tasks.

The MIT team will present the model at the Conference on Neural Information Processing Systems, which will be held next month. The model learns a compact, or “low-dimensional,” yet detailed state representation based on the underlying physics of the robot and its surroundings, among other factors. This helps the model iteratively co-optimize the movement control and material design parameters for specific tasks.

Soft robots are infinite-dimensional creatures that bend in a billion different ways at any given moment. But, in truth, there are natural ways soft objects are likely to bend. We find the natural states of soft robots can be described very compactly in a low-dimensional description. We optimize control and design of soft robots by learning a good description of the likely states.

Andrew Spielberg, Study First Author and Graduate Student, Computer Science and Artificial Intelligence Laboratory, MIT

In simulation experiments, the MIT model allowed both 2D and 3D soft robots to complete tasks, such as reaching a target spot or moving a specific distance, more accurately and quickly than existing state-of-the-art approaches. The researchers plan to implement the model in real soft robots.

Apart from Spielberg, the team included Allan Zhao, Tao Du, and Yuanming Hu, all CSAIL graduate students; Daniela Rus, CSAIL director and the Andrew and Erna Viterbi Professor of Electrical Engineering and Computer Science; and Wojciech Matusik, an associate professor of electrical engineering and computer science at MIT and head of the Computational Fabrication Group.

“Learning-in-the-Loop”

Although soft robotics is considered a comparatively new field of research, it holds the potential for sophisticated robotics. For example, flexible bodies can provide various benefits like better manipulation of objects, safer interaction with humans, and more maneuverability, to name a few.

In simulations, robot control depends on an “observer,” a program that computes variables describing how the soft robot is moving to complete a task. In earlier studies, the soft robot was decomposed into hand-designed clusters of simulated particles. Particles contain crucial data that help narrow down the possible movements of the robot.

If a robot tries to bend in a certain way, for example, actuators may resist that movement enough that it can be ignored. However, for such complicated robots, it can be tricky to manually choose which clusters to track during simulations.

Building on that work, the scientists developed a technique called “learning-in-the-loop optimization.” In this method, all the optimized parameters are learned during a single feedback loop over many simulations. At the same time as learning the optimization, or “in the loop,” the technique also learns the state representation.

The new model uses a technique known as the material point method (MPM). MPM simulates the behavior of particles of continuum materials, such as liquids and foams, surrounded by a background grid. In doing so, it captures the particles of the robot and its observable surroundings as pixels in 2D or as 3D pixels, called voxels, without requiring any extra computation.
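To make the particle-to-grid idea concrete, here is a minimal sketch in Python with NumPy. It is not the researchers' simulator: MPM implementations typically spread each particle over neighboring grid nodes with smooth interpolation weights, whereas this example uses plain nearest-cell binning as a stand-in. The function name `rasterize_particles`, the unit-square domain, and the particle data are all hypothetical.

```python
import numpy as np

def rasterize_particles(positions, masses, grid_size, domain=1.0):
    """Deposit particle masses onto a 2D grid (nearest-cell binning).

    Assumption: a simplified stand-in for the particle-to-grid transfer
    in MPM, which normally uses smooth interpolation weights.
    """
    grid = np.zeros((grid_size, grid_size))
    # Map each position in [0, domain) to integer cell indices.
    cells = np.floor(positions / domain * grid_size).astype(int)
    cells = np.clip(cells, 0, grid_size - 1)
    # np.add.at accumulates correctly when several particles share a cell.
    np.add.at(grid, (cells[:, 0], cells[:, 1]), masses)
    return grid

# Hypothetical robot: 500 particles of equal mass in the middle of the domain.
rng = np.random.default_rng(0)
positions = rng.uniform(0.2, 0.8, size=(500, 2))
masses = np.full(500, 0.01)

grid = rasterize_particles(positions, masses, grid_size=16)
print(grid.shape, grid.sum())  # the grid is a 16x16 "image" of the robot
```

The resulting array is the kind of pixel image that a learning component can consume directly, and total mass is preserved by the binning.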

During a learning phase, this raw particle grid data is fed into a machine-learning component that learns to take in an image, compress it to a low-dimensional representation, and decompress that representation back into the input image. If this “autoencoder” retains enough detail while compressing the input image, it can accurately reproduce the input image from the compressed version.
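The compress-then-decompress idea can be illustrated with a deliberately simple stand-in: a linear autoencoder fitted with a singular value decomposition (equivalent to principal component analysis). This is an assumption made for brevity and is not the network used in the study. The helper names and the synthetic "grid" data below are hypothetical.

```python
import numpy as np

def fit_linear_autoencoder(samples, latent_dim):
    """Fit a linear encoder/decoder pair via SVD (i.e. PCA)."""
    mean = samples.mean(axis=0)
    # Rows of vt are the directions of greatest variance in the data.
    _, _, vt = np.linalg.svd(samples - mean, full_matrices=False)
    return mean, vt[:latent_dim]

def encode(x, mean, basis):
    """Compress flattened grids to low-dimensional codes."""
    return (x - mean) @ basis.T

def decode(z, mean, basis):
    """Decompress codes back to flattened grids."""
    return z @ basis + mean

# Hypothetical data: 200 flattened 8x8 grids that vary along only
# three hidden directions, plus a little noise.
rng = np.random.default_rng(0)
hidden = rng.normal(size=(200, 3))
mixing = rng.normal(size=(3, 64))
grids = hidden @ mixing + 0.01 * rng.normal(size=(200, 64))

mean, basis = fit_linear_autoencoder(grids, latent_dim=3)
codes = encode(grids, mean, basis)   # 64 numbers per grid -> 3
recon = decode(codes, mean, basis)   # 3 numbers -> 64 again
error = np.sqrt(np.mean((grids - recon) ** 2))
print(codes.shape, error)
```

Because the synthetic grids really do vary along only a few directions, three numbers per grid are enough to reconstruct them up to the noise level, which mirrors the claim that the natural states of a soft robot admit a compact description.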

In this work, the compressed representations learned by the autoencoder serve as the low-dimensional state representation of the robot. In an optimization phase, that compressed representation loops back into the controller, which in turn outputs a computed actuation for how each of the robot’s particles should move in the next MPM-simulated step.

At the same time, the controller uses that data to tune the optimal stiffness for every particle so that it achieves the desired movement. In the future, that material data could be useful for 3D-printing soft robots, in which each particle location could be printed with a slightly different stiffness.

This allows for creating robot designs catered to the robot motions that will be relevant to specific tasks. By learning these parameters together, you keep everything as synchronized as much as possible to make that design process easier.

Andrew Spielberg, Study First Author and Graduate Student, Computer Science and Artificial Intelligence Laboratory, MIT

Faster Optimization

All of the optimization data is, in turn, fed back into the start of the loop to train the autoencoder. Over many simulations, the controller learns the optimal movement and material design, while the autoencoder learns an increasingly detailed state representation.

The key is we want that low-dimensional state to be very descriptive.

Andrew Spielberg, Study First Author and Graduate Student, Computer Science and Artificial Intelligence Laboratory, MIT

After the robot reaches its simulated final state over a set period of time, ideally as close as possible to the specified destination, it updates a “loss function.” This is a critical component of machine learning, which tries to minimize some error.

In this case, the loss function measures how far the robot stopped from the target destination. That loss then flows back to the controller, which uses the error signal to adjust all the optimized parameters so as to best complete the task.
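The loop of "simulate, measure the distance to the target, feed the error back" can be sketched on a toy problem. The example below is an assumption-laden illustration, not the study's method: the "robot" is a hypothetical damped point mass in 1D, the parameters being tuned are a sequence of forces, and the gradient is estimated by finite differences purely for simplicity.

```python
import numpy as np

def simulate(forces, dt=0.1, damping=0.5):
    """Roll out a 1D unit point mass and return its final position."""
    pos, vel = 0.0, 0.0
    for f in forces:
        vel += dt * (f - damping * vel)
        pos += dt * vel
    return pos

def loss(forces, target):
    """Squared distance between the final position and the target."""
    return (simulate(forces) - target) ** 2

def numerical_grad(forces, target, eps=1e-5):
    """Central finite-difference gradient of the loss (illustrative only)."""
    grad = np.zeros_like(forces)
    for i in range(len(forces)):
        bump = np.zeros_like(forces)
        bump[i] = eps
        grad[i] = (loss(forces + bump, target)
                   - loss(forces - bump, target)) / (2 * eps)
    return grad

target = 1.0
forces = np.zeros(20)              # start with no actuation at all
initial_loss = loss(forces, target)
for _ in range(200):               # each pass: simulate, then correct
    forces -= 0.5 * numerical_grad(forces, target)
final_loss = loss(forces, target)
print(initial_loss, final_loss)
```

Each iteration reruns the simulation, so the cost of one simulation step largely determines how expensive the whole optimization is.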

If the researchers tried to feed all the raw simulation particles directly into the controller, without the compression step, “running and optimization time would explode,” added Spielberg. Using the compressed representation, the scientists reduced the running time for each optimization iteration from several minutes to approximately 10 seconds.

The scientists validated their new model on simulations of various 2D and 3D biped and quadruped robots. They found that while robots using conventional techniques can take up to 30,000 simulations to optimize these parameters, robots trained with the new model needed only around 400, up to roughly 75 times fewer.

Implementing the new model in real soft robots will require addressing real-world noise and uncertainty, which may reduce the accuracy and efficiency of the model. In the future, however, the team hopes to develop a complete pipeline for soft robots, from simulation through to fabrication.