Apr 24 2019

Artificial intelligence (AI) software will soon run on speakers, security cameras, smartphones, and many other devices to speed up speech- and image-processing tasks.

(Image credit: Ji Lin)

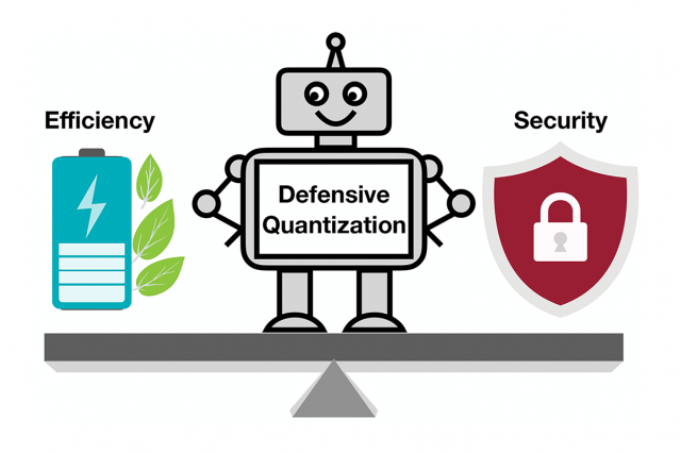

Now, a compression method called quantization is smoothing the way by shrinking deep learning models to cut both energy and computation costs. However, it turns out that smaller models make it easier for malicious attackers to trick an AI system into misbehaving. This is a major concern because increasingly complex decision-making is being delegated to machines.
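As a rough illustration of what quantization does to a model's weights, the sketch below rounds a handful of 32-bit floating-point values onto a small grid of evenly spaced levels. The function name `quantize`, the symmetric rounding scheme, and the sample weights are assumptions chosen for illustration; the study's actual quantization scheme may differ.

```python
def quantize(weights, bits):
    """Symmetric uniform quantization of a list of floats to `bits` bits.

    Hypothetical helper for illustration only: each weight is snapped to the
    nearest multiple of `scale`, so at most 2**bits - 1 distinct values remain.
    """
    if bits < 2:
        raise ValueError("this sketch needs at least 2 bits")
    levels = 2 ** (bits - 1) - 1              # e.g. 127 for 8 bits, 1 for 2 bits
    max_abs = max(abs(w) for w in weights)
    if max_abs == 0.0:
        return list(weights)
    scale = max_abs / levels                  # spacing between neighboring levels
    return [round(w / scale) * scale for w in weights]


# Made-up sample weights, not taken from any real model.
weights = [0.82, -0.31, 0.07, -1.0, 0.45]

for bits in (8, 4, 2):
    q = quantize(weights, bits)
    worst = max(abs(a - b) for a, b in zip(weights, q))
    print(f"{bits} bits: worst rounding error = {worst:.4f}")
```

The fewer bits are available, the coarser the grid and the larger the rounding error each weight can pick up, which is the trade-off the article describes.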

In a recent study, researchers from IBM and MIT demonstrated how vulnerable compressed AI models are to adversarial attack, and they proposed a solution: adding a mathematical constraint during the quantization process reduces the chance that an AI system will be fooled by a slightly altered image and misclassify what it sees.

When a deep learning model is reduced from the standard 32 bits to a lower bit length, it becomes more likely to misclassify modified images because of an error amplification effect: the manipulated image becomes more distorted with every additional layer of processing. By the final layer, the model is more likely to mistake a frog for a deer, or a bird for a cat, for instance.
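One standard way to make the amplification effect precise is to treat the network as a composition of layers and assume each layer $f_i$ is Lipschitz continuous with constant $L_i$. This notation is introduced here for illustration and is not taken from the article. A small input change $\delta$ can then grow by at most the product of the per-layer constants:

```latex
\| f(x + \delta) - f(x) \| \;\le\; \Big( \prod_{i=1}^{n} L_i \Big) \, \| \delta \|,
\qquad f = f_n \circ \cdots \circ f_1 .
```

For example, if each of $n = 10$ layers had $L_i = 1.5$, the bound would allow the perturbation to grow by a factor of $1.5^{10} \approx 57.7$, whereas keeping every $L_i \le 1$ would prevent any growth at all.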

The team demonstrated that models quantized to 8 bits or fewer are more vulnerable to adversarial attacks, with accuracy falling from an already low 30-40% to below 10% as the bit width shrinks. However, enforcing a Lipschitz constraint, which bounds how much each layer can amplify a change in its input, during quantization restores some resilience. After adding the constraint, the researchers observed small performance gains under attack, with the smaller models in some cases outperforming the 32-bit model.
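One common way to keep a layer's Lipschitz constant close to 1 is to penalize how far its weight matrix is from being orthogonal, since a matrix with orthonormal columns preserves the length of any vector it multiplies. The sketch below computes such a penalty for two small matrices. Treating this as the form of the constraint is an assumption made for illustration, and the helper names (`matmul`, `transpose`, `orthogonality_penalty`) are hypothetical.

```python
import math


def matmul(a, b):
    """Plain-Python product of two matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m):
    return [list(col) for col in zip(*m)]


def orthogonality_penalty(w):
    """Squared Frobenius norm of (W^T W - I).

    Zero when the columns of W are orthonormal; grows as W stretches
    or skews its input. Illustrative regularizer, assumed rather than
    taken from the study.
    """
    gram = matmul(transpose(w), w)
    n = len(gram)
    return sum((gram[i][j] - (1.0 if i == j else 0.0)) ** 2
               for i in range(n) for j in range(n))


theta = math.pi / 6
rotation = [[math.cos(theta), -math.sin(theta)],
            [math.sin(theta), math.cos(theta)]]       # length-preserving
stretched = [[2.0 * v for v in row] for row in rotation]  # doubles every length

print(orthogonality_penalty(rotation))    # approximately 0
print(orthogonality_penalty(stretched))   # approximately 18, since W^T W = 4I
```

In training, a term like this would be added to the loss with a small weight so that layers are nudged toward not amplifying perturbations; the weighting and the layers it applies to are design choices not specified in the article.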

Our technique limits error amplification and can even make compressed deep learning models more robust than full-precision models. With proper quantization, we can limit the error.

Song Han, Assistant Professor, Department of Electrical Engineering and Computer Science, MIT.

Han is also a member of MIT’s Microsystems Technology Laboratories.

The researchers plan to further improve the method by training it on larger datasets and applying it to a wider range of models.

Deep learning models need to be fast and secure as they move into a world of internet-connected devices. Our Defensive Quantization technique helps on both fronts.

Chuang Gan, Study Coauthor and Researcher, MIT-IBM Watson AI Lab.

The research team, which also includes Ji Lin, an MIT graduate student, will present the study results at the International Conference on Learning Representations in May.

To make AI models smaller so that they run faster and use less energy, Han is using AI itself to push the limits of model compression technology. In related recent work, Han and his colleagues have shown how reinforcement learning can be used to automatically find the smallest bit length for each layer in a quantized model, based on how quickly the device running the model can process images.

Compared with a fixed 8-bit model, this flexible bit-width approach reduces latency and energy consumption by as much as 200%, according to Han. The researchers will present their results at the Computer Vision and Pattern Recognition conference in June.