Mar 29 2019

For many people in traffic-dense cities such as Los Angeles, the big question is: When will self-driving cars arrive? But after a succession of high-profile accidents in the United States, safety concerns could bring the autonomous dream to an abrupt halt.

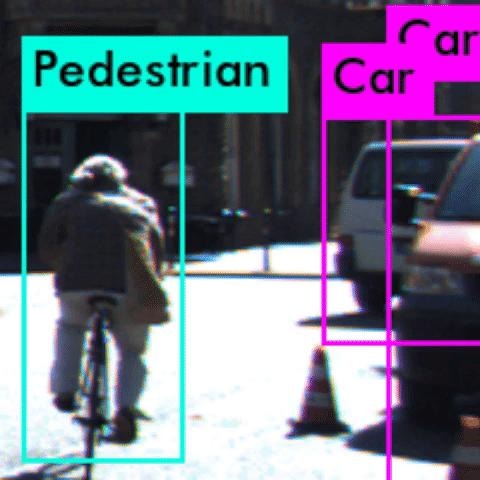

In this example, a perception algorithm misclassifies the cyclist as a pedestrian. (Image credit - Anand Balakrishnan)

At the University of Southern California (USC), scientists have published a new research paper that tackles a long-standing problem for autonomous vehicle developers: testing the system's perception algorithms, which enable the car to "understand" what it "sees."

Working with scientists from Arizona State University, the team developed a new mathematical technique that can identify irregularities or bugs in the system before the car goes on the road.

Perception algorithms are built on convolutional neural networks, driven by deep learning, a type of machine learning. These algorithms are notoriously hard to test, since it is not fully understood how they arrive at their predictions. This can have devastating consequences in safety-critical systems such as autonomous vehicles.

"Making perception algorithms robust is one of the foremost challenges for autonomous systems. Using this method, developers can narrow in on errors in the perception algorithms much faster and use this information to further train the system. The same way cars have to go through crash tests to ensure safety, this method offers a pre-emptive test to catch errors in autonomous systems."

Anand Balakrishnan, Study Lead Author and Computer Science PhD Student, USC.

The paper, titled “Specifying and Evaluating Quality Metrics for Vision-based Perception Systems”, was presented on March 28th at the Design, Automation and Test in Europe conference in Italy.

Learning about the world

Typically, autonomous vehicles "learn" about the world through machine learning systems, which are fed massive datasets of road images before they can detect objects independently.

But the system can go wrong. In a fatal collision between a self-driving car and a pedestrian in Arizona in March 2018, the software classified the pedestrian as a "false positive" and decided it did not need to stop.

"We thought, clearly there is some issue with the way this perception algorithm has been trained," said study co-author Jyo Deshmukh, a USC computer science professor and former research and development engineer for Toyota, who specializes in autonomous vehicle safety.

"When a human being perceives a video, there are certain assumptions about persistence that we implicitly use: if we see a car within a video frame, we expect to see a car at a nearby location in the next video frame. This is one of several 'sanity conditions' that we want the perception algorithm to satisfy before deployment."

For instance, an object cannot appear and then vanish from one frame to the next. If it does, it violates a "sanity condition," or basic law of physics, which indicates there is a bug in the perception system.
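The persistence condition described above can be illustrated with a short Python sketch. This is not the team's logic or code; it is a plain check written under stated assumptions: each video frame is a list of detections, each detection has a label, a bounding box, and a confidence score, and "nearby location" is approximated by bounding-box overlap (intersection over union). The names `Detection`, `persistence_violations`, `min_conf`, and `min_iou`, along with their default values, are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


@dataclass(frozen=True)
class Detection:
    """One object reported by a detector in one frame (hypothetical format)."""
    label: str
    box: Box
    confidence: float


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def persistence_violations(
    frames: List[List[Detection]],
    min_conf: float = 0.8,   # assumed threshold for a "confident" detection
    min_iou: float = 0.3,    # assumed threshold for "nearby location"
) -> List[Tuple[int, Detection]]:
    """Flag confident detections with no overlapping detection in the next frame."""
    violations = []
    for t in range(len(frames) - 1):
        for det in frames[t]:
            if det.confidence < min_conf:
                continue
            if not any(iou(det.box, nxt.box) >= min_iou for nxt in frames[t + 1]):
                violations.append((t, det))
    return violations


# Toy video: a car is seen in frames 0 and 1, then vanishes in frame 2.
frames = [
    [Detection("car", (100, 100, 200, 200), 0.95)],
    [Detection("car", (105, 100, 205, 200), 0.93)],
    [],
]
violations = persistence_violations(frames)
```

In this toy video, the car in frame 1 has no counterpart in frame 2, so it is the only detection flagged. Note that no hand-labeled ground truth is needed: the check compares the detector's output only with itself.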

Deshmukh and his PhD student Balakrishnan, together with USC PhD student Xin Qin and master's student Aniruddh Puranic, collaborated with three Arizona State University scientists to explore the problem.

No room for error

The team formulated a new mathematical logic, referred to as Timed Quality Temporal Logic, and used it to test two popular machine-learning tools, YOLO and SqueezeDet, using raw video datasets of driving scenes.

The logic successfully homed in on instances of the machine learning tools violating "sanity conditions" across multiple frames in the video. Most commonly, the machine learning systems failed to detect an object or misclassified it.

For example, the system failed to recognize a cyclist from the back, when the bike's tire looked like a thin vertical line. Instead, it misclassified the cyclist as a pedestrian. In this case, the system might fail to correctly anticipate the cyclist's next move, which could result in an accident.
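A misclassification of this kind can show up as a label that changes over time for what is physically the same object. The Python sketch below illustrates that idea only; it is not taken from the paper. It assumes a separate tracker has already assigned each object a stable ID, so a frame can be written as a mapping from track ID to predicted class. The function name `label_flips` and the track ID `7` are hypothetical.

```python
from typing import Dict, List, Tuple

# One frame maps a hypothetical track ID to the class predicted in that frame.
Frame = Dict[int, str]


def label_flips(frames: List[Frame]) -> List[Tuple[int, int, str, str]]:
    """Return (frame_index, track_id, old_label, new_label) for every label change."""
    flips = []
    for t in range(1, len(frames)):
        for track_id, label in frames[t].items():
            previous = frames[t - 1].get(track_id)
            # Only compare objects that were also present in the previous frame.
            if previous is not None and previous != label:
                flips.append((t, track_id, previous, label))
    return flips


# Toy video: the same tracked object is briefly relabeled as a pedestrian.
frames = [
    {7: "cyclist"},
    {7: "cyclist"},
    {7: "pedestrian"},
    {7: "cyclist"},
]
flips = label_flips(frames)
```

Here the check reports two changes for the same object: cyclist to pedestrian at frame 2, and back to cyclist at frame 3. A real object does not change class between frames, so either flip points to a frame worth inspecting.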

Phantom objects, where the system sees an object when there is none, were also common. This could cause the car to brake when it does not need to, another potentially dangerous move.
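One simple way to look for phantom objects is to flag anything that exists for only a frame or two. The Python sketch below shows that heuristic under stated assumptions, and it is not the method from the paper: each frame is represented as the set of track IDs visible in it, and the function name `short_lived_tracks` and the `min_frames` threshold of 3 are hypothetical choices.

```python
from collections import Counter
from typing import List, Set


def short_lived_tracks(frames: List[Set[int]], min_frames: int = 3) -> List[int]:
    """Return the track IDs seen in fewer than min_frames frames, sorted."""
    lifetimes = Counter(track_id for frame in frames for track_id in frame)
    return sorted(tid for tid, count in lifetimes.items() if count < min_frames)


# Toy video: object 1 is present throughout; object 2 appears in one frame only.
frames = [{1}, {1, 2}, {1}, {1}]
phantoms = short_lived_tracks(frames)
```

In this toy video only object 2 is flagged. A heuristic this crude would also flag real objects that enter or leave the scene near the start or end of a clip, so its output is a list of candidates to review rather than confirmed errors.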

The team's technique could be used to identify irregularities or bugs in the perception algorithm prior to deployment on the road, and it allows the developer to pinpoint specific problems.

The idea is to catch problems with the perception algorithm in virtual testing, making the algorithms safer and more reliable. Importantly, because the technique relies on a library of "sanity conditions," humans do not have to label objects in the test dataset, a laborious and error-prone process.

Going forward, the team hopes to incorporate the logic to retrain the perception algorithms when it finds an error. It could also be extended to real-time use, while the car is driving, as a live safety monitor.