Feb 19 2019

MIT researchers have developed a system that uses radio-frequency identification (RFID) tags to help robots detect moving objects with unprecedented speed and accuracy.

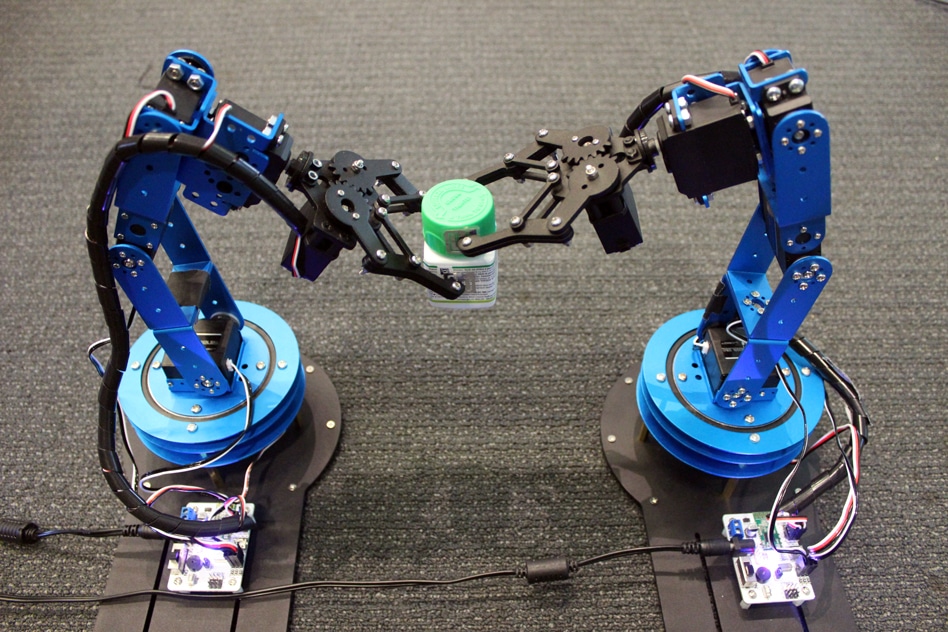

MIT Media Lab researchers are using RFID tags to help robots home in on moving objects with unprecedented speed and accuracy, potentially enabling greater collaboration in robotic packaging and assembly and among swarms of drones. (Image credit: MIT)

The novel system, called TurboTrack, may allow better precision and collaboration by swarms of drones performing search-and-rescue missions and by robots working on assembly and packaging operations.

In a study to be presented next week at the USENIX Symposium on Networked Systems Design and Implementation, the research team demonstrated that robots using the system can locate tagged objects within 7.5 ms, on average, with an error of less than 1 cm.

In the TurboTrack system, an RFID tag can be applied to any kind of object. A reader sends a wireless signal that reflects off the RFID tag and other nearby objects and rebounds to the reader. Next, an algorithm sifts through all the reflected signals to find the RFID tag's response. Final computations then exploit the tag's movement, even though movement usually reduces precision, to improve the accuracy of its localization.

According to the researchers, the system could replace computer vision for certain robotic tasks. Computer vision, like its human counterpart, is limited by what it can see, and it can fail to locate objects in cluttered surroundings. Radio-frequency signals have no such limitation: they can identify targets without a line of sight, within clutter and through walls.

To validate the system, the research team attached one RFID tag to a cap and another to a bottle. A robotic arm located the cap and placed it onto the bottle, which was held by another robotic arm. In another demonstration, the researchers tracked RFID-equipped nanodrones during docking, maneuvering, and flying. The researchers reported that in both tasks the system was as fast and accurate as conventional computer-vision systems, while also working in scenarios where computer vision fails.

If you use RF signals for tasks typically done using computer vision, not only do you enable robots to do human things, but you can also enable them to do superhuman things. And you can do it in a scalable way, because these RFID tags are only 3 cents each.

Fadel Adib, Principal Investigator and Assistant Professor, MIT Media Lab

Adib is also the founding director of the Signal Kinetics Research Group.

In manufacturing, the system could allow robot arms to be more versatile and accurate when, for example, picking up, packaging, and assembling items along an assembly line. Using handheld “nanodrones” for search-and-rescue missions is another potential application. Currently, nanodrones rely on computer vision and on methods that stitch together captured images for localization. In chaotic places, these drones often become confused, lose one another behind walls, and cannot uniquely identify each other. All of this limits their ability to, say, spread out across an area and work together to look for a missing person. Using the researchers' system, swarms of nanodrones could locate one another more reliably, for better collaboration and control.

You could enable a swarm of nanodrones to form in certain ways, fly into cluttered environments, and even environments hidden from sight, with great precision.

Zhihong Luo, Study First Author and Graduate Student, Signal Kinetics Research Group

Postdoc Yunfei Ma, visiting student Qiping Zhang, and Research Assistant Manish Singh are the other Media Lab co-authors of the study.

Super resolution

For years, Adib’s team has been working on how to apply radio signals for identification and tracking purposes, like controlling warehouse inventory, communicating with devices within the body, and identifying contamination in bottled foods.

Similar systems have tried to use RFID tags for localization, but they come with trade-offs in either speed or accuracy. They may be accurate but take several seconds to locate a moving object, or they gain speed at the cost of accuracy.

Achieving both accuracy and speed at the same time was the real challenge. To accomplish this, the researchers drew inspiration from an imaging method known as “super-resolution imaging.” These systems stitch together images from various angles to obtain a finer-resolution image.

“The idea was to apply these super-resolution systems to radio signals,” stated Adib. “As something moves, you get more perspectives in tracking it, so you can exploit the movement for accuracy.”

The system integrates a typical RFID reader with a “helper” component used for localizing radio frequency signals. A wideband signal containing multiple frequencies is emitted by the helper, building on a modulation strategy applied in wireless communication, known as orthogonal frequency-division multiplexing.

The system captures all the signals that rebound off objects in the environment, including the RFID tag. One of those signals carries a signature that is specific to that particular RFID tag, because RFID tags reflect and absorb an incoming signal in a certain pattern, corresponding to bits of 0s and 1s, that the system can recognize.
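As a rough illustration of how a known reflect/absorb pattern can separate a tag's response from much stronger static reflections, the sketch below assumes each received sample is constant clutter plus the tag's reflection multiplied by a known bit. The function name `tag_channel`, the sample model, and the numbers are assumptions made for this example; this is a generic least-squares estimate, not the TurboTrack implementation.

```python
def tag_channel(samples, bits):
    """Estimate the tag's complex reflection from received samples.

    Assumed model (hypothetical): samples[i] = clutter + bits[i] * tag.
    Subtracting the mean from the bit pattern cancels the constant
    clutter term, leaving only the part that follows the tag's pattern.
    """
    n = len(bits)
    mean_bit = sum(bits) / n
    centered = [b - mean_bit for b in bits]
    numerator = sum(s * c for s, c in zip(samples, centered))
    denominator = sum(c * c for c in centered)
    return numerator / denominator


# Illustrative values only: strong static clutter, weak tag reflection.
clutter = 5.0 + 2.0j
tag = 0.03 - 0.04j
bits = [1, 0, 1, 1, 0, 0, 1, 0]
samples = [clutter + b * tag for b in bits]

estimate = tag_channel(samples, bits)
print(estimate)
```

In this noise-free toy case the estimate recovers the tag's reflection even though the clutter is far stronger, because the clutter does not change with the bit pattern.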

Because these signals travel at the speed of light, the system can compute a “time of flight” (measuring distance by calculating the time it takes a signal to travel between a transmitter and receiver) to gauge the location of the tag, as well as the other objects in the environment. However, this provides only a ballpark localization figure, not sub-centimeter precision.
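The time-of-flight relationship can be made concrete with a few lines of arithmetic. The sketch below assumes a round trip (the signal travels to the reflector and back to the same reader), which is a simplification; the function names are hypothetical.

```python
C = 299_792_458.0  # speed of light in m/s


def round_trip_distance(tof_seconds: float) -> float:
    """Distance to a reflector, assuming the signal travels there and back."""
    return C * tof_seconds / 2.0


def tof_for_distance(distance_m: float) -> float:
    """Round-trip time of flight for a reflector at the given distance."""
    return 2.0 * distance_m / C


# A 20 ns round trip corresponds to a reflector roughly 3 m away.
print(round_trip_distance(20e-9))

# A 1 cm change in distance shifts the round trip by only about 67 picoseconds.
print(tof_for_distance(0.01))
```

The second figure hints at why time of flight alone gives only a ballpark estimate: resolving centimeters means resolving timing differences of tens of picoseconds.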

Leveraging movement

To zoom in on the tag's location, the researchers developed what they call a “space-time super-resolution” algorithm. The algorithm combines the location estimates for all rebounding signals, including the RFID signal, which it determines using time of flight. Using some probability calculations, it narrows that group down to a handful of possible locations for the RFID tag.

As the tag moves, its signal angle changes slightly, and that change also corresponds to a specific location. The algorithm can then use the angle change to track the tag's distance as it moves. By continuously comparing that changing distance measurement with all the other distance measurements from other signals, it can locate the tag in three-dimensional (3D) space. All of this happens in a fraction of a second.
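To show how several distance measurements can pin down a position, here is a minimal 2D trilateration sketch. It is a textbook simplification, not the space-time super-resolution algorithm itself: it assumes three fixed antennas at known positions and noise-free distances, and the names `trilaterate`, `antennas`, and `tag` are hypothetical.

```python
import math


def trilaterate(anchors, ranges):
    """Solve for (x, y) from distances to three non-collinear anchors.

    Subtracting the first circle equation from the other two removes the
    squared unknowns and leaves a 2x2 linear system, solved here with
    Cramer's rule.
    """
    (x1, y1), (x2, y2), (x3, y3) = anchors
    r1, r2, r3 = ranges
    a11, a12 = 2 * (x2 - x1), 2 * (y2 - y1)
    a21, a22 = 2 * (x3 - x1), 2 * (y3 - y1)
    b1 = (x2**2 + y2**2 - x1**2 - y1**2) - (r2**2 - r1**2)
    b2 = (x3**2 + y3**2 - x1**2 - y1**2) - (r3**2 - r1**2)
    det = a11 * a22 - a12 * a21
    if abs(det) < 1e-12:
        raise ValueError("anchors must not be collinear")
    x = (b1 * a22 - a12 * b2) / det
    y = (a11 * b2 - a21 * b1) / det
    return x, y


# Illustrative setup: antenna positions and tag position in meters.
antennas = [(0.0, 0.0), (2.0, 0.0), (0.0, 2.0)]
tag = (0.5, 1.2)
ranges = [math.hypot(tag[0] - ax, tag[1] - ay) for ax, ay in antennas]

estimate = trilaterate(antennas, ranges)
print(estimate)
```

With real, noisy measurements a single solve like this would be imprecise, which is where combining many measurements over time and space, as the article describes, would come in.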

The high-level idea is that, by combining these measurements over time and over space, you get a better reconstruction of the tag’s position.

Fadel Adib, Principal Investigator and Assistant Professor, MIT Media Lab

The study was partly sponsored by the National Science Foundation.