AZoRobotics speaks to Dr. Kevin Field, an Associate Professor in the Department of Nuclear Engineering and Radiological Sciences at the University of Michigan, about his latest research into developing a novel machine learning platform that can detect, in real time, the damage and defects that radiation causes in materials and components.

What inspired your research into using a machine learning platform to detect radiation-induced defects?

The research originally grew out of a student’s course project for a machine learning class at the University of Wisconsin. The student was looking for an interesting project, and his advisor reached out to ask if I had any problems involving a lot of image data, which we did.

Since then, we have continued the research and development of the algorithms for the machine learning platform with the student’s advisor, which has led to our current understanding of how the algorithms work and can be used for quantification of microscopy images.

As for executing the techniques in real time, and the hardware-software architecture needed to do that, the inspiration came from my brother, Dr. Christopher Field, after he showed me a little edge computing device he was tinkering with for one of his clients over a holiday break.

In late 2019 and early 2020, we realized that by using the right architecture with the device, we could perform the detection and quantification tasks in real time. In August 2020, we formed Theia Scientific, LLC to bring the combined technologies to market.

Can you provide an overview of how this technique detects and quantifies radiation-induced defects?

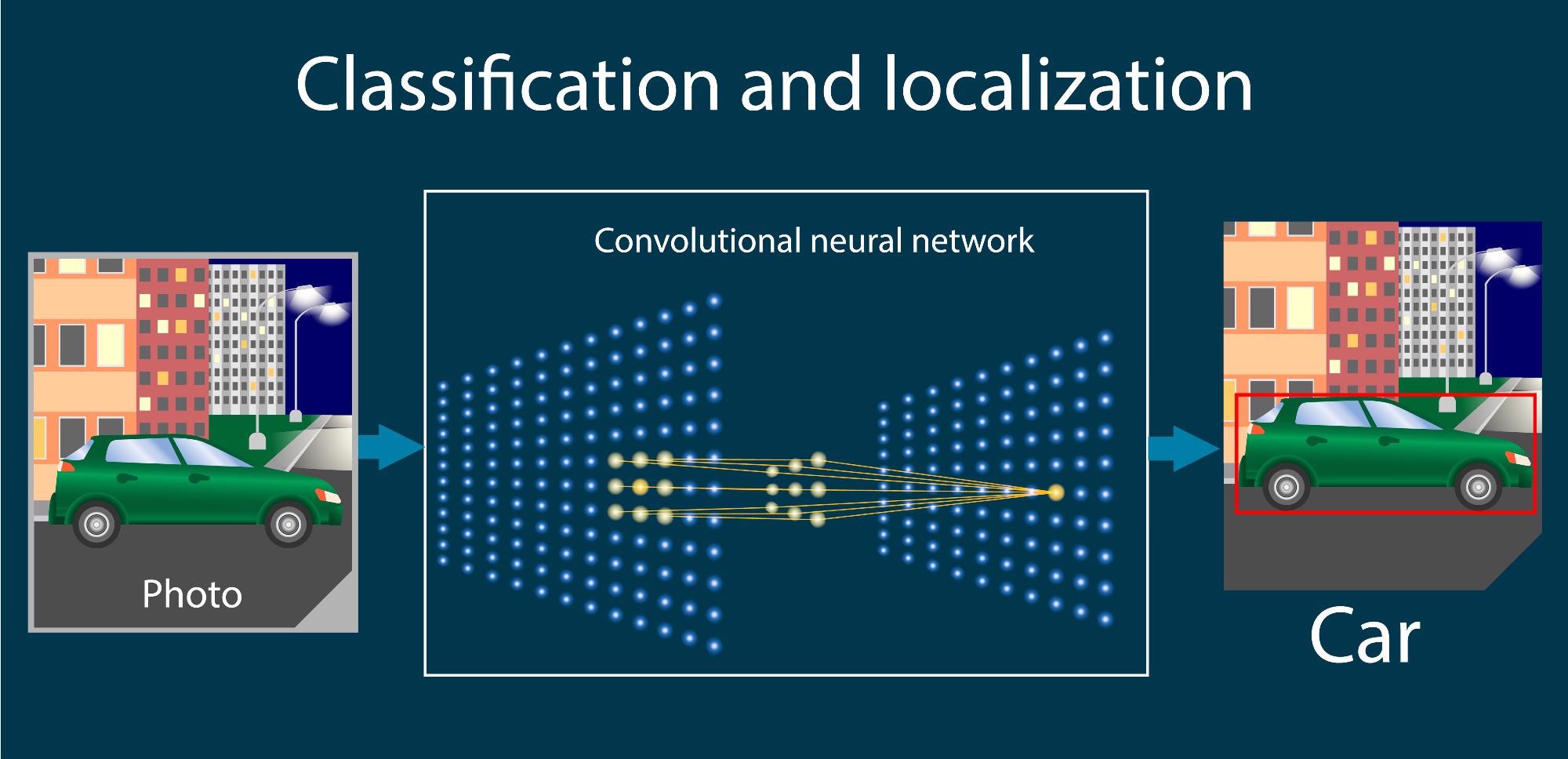

The technique uses a set of Convolutional Neural Networks (CNNs), which are a type of artificial neural network. They belong to the same class of neural networks used by companies like Apple for Face ID and Tesla for self-driving cars.

They are very good at identifying patterns in images, such as the feature size and morphology of radiation-induced defects. The method for hosting these CNNs and performing the detection and subsequent quantification is currently proprietary.

Convolutional neural networks (CNNs) have become a powerful method for image recognition tasks. A CNN is a type of artificial neural network that applies convolutions directly to raw pixel intensity data and consists of several repeating layers of convolution, nonlinearity and pooling, followed by fully connected layers. Image Credit: Shutterstock.com/ Olga Salt
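As a rough illustration of the generic layers named in the caption, the pure-Python sketch below runs one forward pass through a single convolution, a ReLU nonlinearity, a 2x2 max-pooling step and a fully connected layer with a softmax. It is not the Theia Scientific pipeline, which the interview describes as proprietary. The function names, the 3x3 averaging kernel and the two-class "background"/"defect" weights are all hypothetical, hand-picked so the arithmetic is easy to follow.

```python
import math
from typing import List

Matrix = List[List[float]]


def conv2d(image: Matrix, kernel: Matrix) -> Matrix:
    """'Valid' 2D convolution (no padding), written as cross-correlation as most CNN libraries do."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[i + a][j + b] * kernel[a][b] for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(x: Matrix) -> Matrix:
    """Element-wise nonlinearity: negative responses are set to zero."""
    return [[max(0.0, v) for v in row] for row in x]


def max_pool(x: Matrix, size: int = 2) -> Matrix:
    """Non-overlapping max pooling: keeps the strongest response in each size x size window."""
    return [
        [
            max(x[i + a][j + b] for a in range(size) for b in range(size))
            for j in range(0, len(x[0]) - size + 1, size)
        ]
        for i in range(0, len(x) - size + 1, size)
    ]


def dense(features: List[float], weights: Matrix, biases: List[float]) -> List[float]:
    """Fully connected layer: one weighted sum plus bias per output class."""
    return [sum(w * f for w, f in zip(row, features)) + b for row, b in zip(weights, biases)]


def softmax(logits: List[float]) -> List[float]:
    """Convert raw class scores into probabilities (shifted by the max for numerical stability)."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def forward(image: Matrix, kernel: Matrix, weights: Matrix, biases: List[float]) -> List[float]:
    """conv -> ReLU -> pool -> flatten -> fully connected -> softmax."""
    pooled = max_pool(relu(conv2d(image, kernel)))
    flat = [v for row in pooled for v in row]
    return softmax(dense(flat, weights, biases))


# Hypothetical toy input: a 6x6 frame with a bright 2x2 "defect" in the middle.
frame = [[0.0] * 6 for _ in range(6)]
for r in (2, 3):
    for c in (2, 3):
        frame[r][c] = 1.0

kernel = [[1.0 / 9] * 3 for _ in range(3)]  # 3x3 averaging filter
weights = [[-1.0] * 4, [1.0] * 4]  # class 0 = background, class 1 = defect
biases = [0.0, 0.0]

probs = forward(frame, kernel, weights, biases)
print(probs)  # class 1 ("defect") receives the higher probability
```

A real network learns many kernels and stacks several such blocks; the single hand-set kernel here only shows how each layer transforms the data (6x6 input, 4x4 feature map, 2x2 pooled map, 2 class scores).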

How does this machine vision technique improve on previous manual interpretation methods?

The main benefits are consistency and speed. We can easily achieve 10 fps, even for images with 200+ features, which is simply not possible for a human. A typical human will take several minutes or more to analyze a single image of the same complexity. This speed is what allows real-time quantification at the microscope.
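The speed comparison comes down to simple arithmetic. The short Python sketch below converts a frame rate into a per-frame time budget and estimates how many frames an automated pipeline could process in the time a person spends on one image. The 180-second figure is an assumption standing in for "several minutes", and both function names are hypothetical.

```python
def frame_budget_ms(fps: float) -> float:
    """Maximum processing time per frame (in ms) for a pipeline to keep pace at `fps`."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def frames_per_manual_image(fps: float, human_seconds_per_image: float) -> float:
    """Frames processed automatically in the time a person spends on a single image."""
    return fps * human_seconds_per_image


# 10 fps as quoted in the interview; 180 s per image is an assumed value for "several minutes".
print(frame_budget_ms(10))               # 100.0
print(frames_per_manual_image(10, 180))  # 1800
```

In other words, at 10 fps the whole detection and quantification step for a frame has roughly 100 ms to finish, and under the assumed manual pace a single hand-analyzed image corresponds to on the order of a thousand automatically analyzed frames.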

Our technique is also extremely repeatable: if you provide the same image to the CNN pipeline, it will always provide the same answer. This is important for data quality in the literature. Humans, on the other hand, might provide different answers at different times depending on how distracted or tired they are when performing the task.

In your research, you mention that the machine learning software uses a convolutional neural network to analyze the electron microscope video frames. What is this network, and how was it beneficial to your research?

The networks we used are commonly available through online resources. Our specialty is adapting these CNNs to scientific workflows, either by modifying the CNNs themselves or the code that prepares their inputs and/or processes their outputs.
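To make the pre- and post-processing that surrounds a CNN more concrete, here is a minimal, hypothetical Python sketch of both ends of such a pipeline: min-max normalization of raw pixel intensities before they reach a network, and conversion of detector outputs into a defect count, mean size and areal density. The `Detection` box format, the 0.5 confidence threshold, the nm-per-pixel calibration and the use of mean box width and height as a size proxy are all assumptions for illustration; the interview does not describe Theia Scientific's actual processing.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, List, Sequence


@dataclass
class Detection:
    """Hypothetical detector output: a pixel-space bounding box and a confidence score."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float
    score: float


def normalize(pixels: Sequence[Sequence[float]]) -> List[List[float]]:
    """Pre-processing: min-max rescale raw intensities to [0, 1]."""
    flat = [v for row in pixels for v in row]
    lo, hi = min(flat), max(flat)
    span = (hi - lo) or 1.0  # a perfectly flat frame would otherwise divide by zero
    return [[(v - lo) / span for v in row] for row in pixels]


def quantify(
    detections: Sequence[Detection],
    nm_per_pixel: float,
    field_area_nm2: float,
    min_score: float = 0.5,
) -> Dict[str, float]:
    """Post-processing: drop low-confidence boxes, then report count, mean size and areal density."""
    kept = [d for d in detections if d.score >= min_score]
    # Size proxy: mean of box width and height, converted from pixels to nanometers.
    sizes = [((d.x_max - d.x_min) + (d.y_max - d.y_min)) / 2 * nm_per_pixel for d in kept]
    return {
        "count": len(kept),
        "mean_size_nm": mean(sizes) if sizes else 0.0,
        "density_per_nm2": len(kept) / field_area_nm2,
    }


frame = normalize([[0, 50], [100, 100]])  # -> [[0.0, 0.5], [1.0, 1.0]]
boxes = [
    Detection(0, 0, 10, 10, 0.9),
    Detection(20, 20, 30, 40, 0.8),
    Detection(50, 50, 52, 52, 0.2),  # below the threshold, discarded
]
# Assumed calibration: 0.5 nm per pixel over a 512 x 512 pixel field (256 nm x 256 nm).
stats = quantify(boxes, nm_per_pixel=0.5, field_area_nm2=256.0 * 256.0)
print(stats)  # count 2, mean size 6.25 nm
```

Keeping these steps outside the network means the same trained CNN can be reused across microscopes simply by changing the normalization and calibration inputs.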

Considering that real-time assessment of structural integrity reduces the time from idea to conclusion by a factor of nearly 80, why has this technique not been used for real-time image-based detection and quantification of radiation damage until now?

The application of CNNs to image-based detection and classification saw a large boom in 2012. At the same time, graphics processing units, or GPUs, were also progressing rapidly.

Over the last two years, progress in both the software (CNNs) and the hardware (GPUs) has accelerated dramatically. This has enabled rapid development of CNNs through either cloud or local GPU computing, as well as the development of low-cost, moderately powered GPUs that can host and run CNNs.

Without these technological innovations, we wouldn’t have been able to achieve real-time detection during our in-situ microscopy experiments.

Is it possible for this technique to be adapted for other types of image-based microscopy?

Yes, it is possible to adapt this technique for other types of image-based microscopy. We have already tested and demonstrated this method for live cell imaging among other problems across the biotechnology and energy sectors.

Aside from testing materials and parts for nuclear reactors, what other sectors could this machine learning platform be of use to?

Basically, the technique can be applied to any image-based quantification task. It is agnostic to the type of microscope or digital method for acquiring and recording the microscope images. Hence, it’s broadly applicable to materials science, biosciences and energy sciences, as well as repair and inspection in civil structures, to name a few.

How could this technique be used to reduce greenhouse gas emissions to fight climate change?

In the niche of nuclear materials, it can take over 10 years to develop and qualify a new high-performance material for nuclear reactor applications. This timeline is slow for many reasons, but one aspect is that tasks such as image quantification can take anywhere from hours to months to complete for a single experiment.

Our methods can greatly accelerate these tasks, thus shortening the time for materials development. New materials, especially those that can withstand the unique challenges of advanced nuclear reactors, mean new reactors will be able to operate for longer at higher temperatures with greater power efficiency and cost savings.

Nuclear power isn’t the only solution for reducing greenhouse gas emissions, but making it cost-competitive with fossil fuel power generation would aid the global goal of reducing greenhouse gas emissions.

We also think our technology has applications in photovoltaic and semiconductor inspection for the solar energy field, where it could help increase inspection speed and robustness. In this sense, we might be able to help fight climate change through several different energy generation techniques.

This technology could be used for photovoltaic and semiconductor inspection which relates to the solar energy field, helping advance inspection speeds and robustness. Image Credit: Shutterstock.com/ Fotografie - Schmidt

Did you come across any challenges during your research, and if so, how did you overcome them?

Of course, we came across a few obstacles. These ranged from things as simple as Python code dependencies to the current global GPU shortage. Other challenges related to the microscopes themselves: some use out-of-date operating systems, which makes it difficult to interface modern edge computing devices with the image acquisition software.

We overcame many of these by assembling a highly motivated team all working towards the common goal of pulling off this demonstration. It is the creative thought that only a team environment can provide that enabled us to accomplish our goals in such a relatively short time.

What does the future of real-time machine vision look like to you?

We are seeing a surge in machine learning and deep learning literacy not only in the scientific fields but in the general public as well. I think we will continue to find acceptance and adoption of these techniques in our daily life, with some techniques in the scientific fields becoming the de facto analysis technique.

We want to provide a solution that makes these technologies approachable for the common scientist, while also promoting transparency and accessibility in the collection and quantification of data.

Following on from this study, what are the next steps for your research?

Our number one priority is to get this into the hands of researchers, primarily through commercialization efforts with Theia Scientific, LLC. We have seen the benefits of using it in our research and want everyone to be able to accelerate their microscopy-based workflows.

About Dr. Kevin Field

Dr. Kevin Field is an Associate Professor in the Department of Nuclear Engineering and Radiological Sciences at the University of Michigan, where his research specializes in alloy development and radiation effects in ferrous and non-ferrous alloys. His active research interests include advanced electron microscopy and scattering-based characterization techniques, additive/advanced manufacturing for nuclear materials and the application of machine/deep learning techniques for advanced innovation in characterization and development of materials systems.

Prof. Field moved to the University of Michigan in 2019 after nearly seven years at Oak Ridge National Laboratory (ORNL), where he started as an Alvin M. Weinberg Fellow and left as a Staff Scientist. In 2020, Prof. Field co-founded Theia Scientific, LLC with his brother, Dr. Christopher Field. The company has so far secured $400k USD in government funding to commercialize technologies for real-time detection and quantification in microscopy applications. Prof. Field has authored and presented over 100 publications on radiation effects in various material systems relevant to nuclear power generation, including irradiated concrete performance, deformation mechanisms in irradiated steels, and the radiation tolerance of enhanced accident-tolerant fuel forms.

Dr. Field’s work has been recognized through several avenues, including the prestigious Alvin M. Weinberg Fellowship from ORNL in 2013, the UT-Battelle Award for Early Career Researcher in Science and Technology in 2018, and a 2020 Department of Energy Early Career Award.

Disclaimer: The views expressed here are those of the interviewee and do not necessarily represent the views of AZoM.com Limited (T/A) AZoNetwork, the owner and operator of this website. This disclaimer forms part of the Terms and Conditions of use of this website.