Jun 28 2016

Sometimes all it takes to get help from someone is to wave at them, or point. Now the same is true for robots. Researchers at KTH Royal Institute of Technology in Sweden have completed work on an EU project aimed at enabling robots to cooperate with one another on complex jobs by using body language.

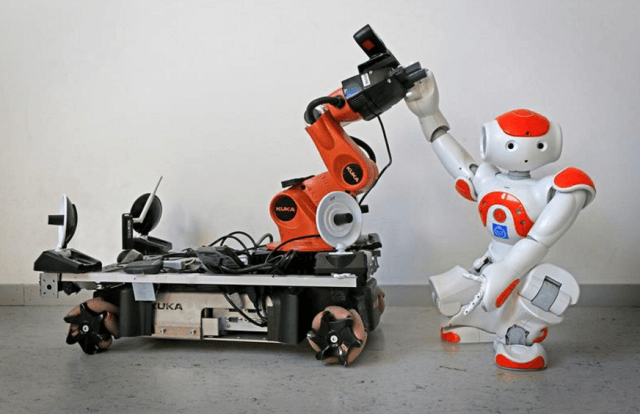

Two off-the-shelf robots were used to demonstrate how robots can pick up each other's signals for assistance, and even set aside their own tasks in order to lend a "hand". (Credit: KTH Royal Institute of Technology)

Dimos Dimarogonas, an associate professor at KTH and project coordinator for RECONFIG, says the research project has developed protocols that enable robots to ask for help from each other and to recognize when other robots need assistance — and change their plans accordingly.

"Robots can stop what they're doing and go over to assist another robot which has asked for help," Dimarogonas says. "This will mean flexible and dynamic robots that act much more like humans — robots capable of constantly facing new choices and that are competent enough to make decisions."

As autonomous machines take on more responsibilities, they are bound to encounter tasks that are too big for a single robot. Shared work could include lending an extra hand to lift and carry something, or holding an object in place, but Dimarogonas says the concept can be scaled up to include any number of functions in a home, a factory or other kinds of workplaces.

The project was completed in May 2016, with project partners at Aalto University in Finland, the National Technical University of Athens in Greece, and the École Centrale Paris in France.

In a series of filmed presentations, the researchers demonstrate the newfound abilities of several off-the-shelf autonomous machines, including NAO robots. One video shows a robot pointing out an object to another robot, conveying the message that it needs the second robot to lift the item.

Dimarogonas says that common perception among the robots is one key to this collaborative work.

"The visual feedback that the robots receive is translated into the same symbol for the same object," he says. "With updated vision technology they can understand that one object is the same from different angles. That is translated to the same symbol one layer up to the decision-making — that it is a thing of interest that we need to transport or not. In other words, they have perceptual agreement."
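The article does not say how RECONFIG's vision pipeline reaches perceptual agreement. As a rough illustration only, the Python sketch below assumes each robot reduces a detection to a view-invariant key (its dominant colour plus its three extents, sorted and rounded) and derives the symbol deterministically from that key. Two robots looking at the same box from different sides then arrive at the same symbol without exchanging images. `Observation`, `to_symbol`, `needs_transport` and the 2 cm rounding grid are hypothetical; a real system would use far richer features.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Observation:
    """Hypothetical raw detection: apparent extents in cm and dominant colour."""
    width: float
    height: float
    depth: float
    color: str


def to_symbol(obs: Observation, resolution: float = 2.0) -> str:
    """Map a detection to a symbol that does not depend on the viewing angle.

    Sorting the three extents removes the dependence on which face the camera
    happens to see; rounding to `resolution` cm absorbs small measurement noise.
    """
    dims = sorted((obs.width, obs.height, obs.depth))
    q = [round(d / resolution) for d in dims]
    return f"{obs.color}:{q[0]}x{q[1]}x{q[2]}"


def needs_transport(symbol: str, transport_targets: set) -> bool:
    """Decision layer (one level up): it only ever sees symbols, never pixels."""
    return symbol in transport_targets


# Robot A sees the box head-on; robot B sees the same box from the side.
seen_by_a = Observation(width=40.3, height=20.1, depth=29.8, color="red")
seen_by_b = Observation(width=29.9, height=19.8, depth=40.1, color="red")

sym_a = to_symbol(seen_by_a)
sym_b = to_symbol(seen_by_b)
print(sym_a, sym_b, sym_a == sym_b)  # prints: red:10x15x20 red:10x15x20 True
print(needs_transport(sym_a, {"red:10x15x20"}))  # prints: True
```

The fixed rounding grid is the weak point of this toy: two noisy measurements of the same object that straddle a rounding boundary would still split into different symbols, so a practical system would need a more tolerant matching step.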

In another demonstration, two robots carry an object together. One robot leads, and the other infers what the leader wants from the force the leader exerts on the object, he says.

"It's just like if you and I were carrying a table and I knew where it had to go," he says. "You would sense which direction I wanted to go by the way I turn and push, or pull."
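The project's actual force-following controller is not described here. A common textbook way to get this behaviour is admittance control: the follower treats the force it measures at its grip as a command and moves in that direction, with speed proportional to the force. The sketch below assumes that rule, adds a small deadband so sensor noise does not make the follower drift, and models the carried object as a stiff spring between the two grips purely so the loop can be simulated. `follower_velocity`, `simulate_carry` and every gain value are illustrative assumptions.

```python
import math


def follower_velocity(force_xy, damping=20.0, deadband=1.0, max_speed=0.5):
    """Admittance-style rule (assumed): move along the sensed force.

    force_xy  -- force (N) the leader transmits through the object to the follower's grip
    damping   -- N per (m/s); larger values make the follower more sluggish
    deadband  -- forces below this magnitude (N) are treated as sensor noise
    max_speed -- safety cap on the commanded speed (m/s)
    """
    fx, fy = force_xy
    magnitude = math.hypot(fx, fy)
    if magnitude < deadband:
        return (0.0, 0.0)
    speed = min(magnitude / damping, max_speed)
    return (speed * fx / magnitude, speed * fy / magnitude)


def simulate_carry(steps=200, dt=0.05, leader_speed=0.2, stiffness=50.0, length=1.0):
    """Leader walks along +x; the follower only ever sees the force at its grip."""
    leader = [length, 0.0]
    follower = [0.0, 0.0]
    vx = vy = 0.0
    for _ in range(steps):
        leader[0] += leader_speed * dt
        # Toy object model: a stiff spring whose rest offset is `length` along x.
        fx = stiffness * (leader[0] - follower[0] - length)
        fy = stiffness * (leader[1] - follower[1])
        vx, vy = follower_velocity((fx, fy))
        follower[0] += vx * dt
        follower[1] += vy * dt
    return leader, follower, (vx, vy)


leader, follower, last_velocity = simulate_carry()
print(f"gap between grips: {leader[0] - follower[0]:.3f} m, "
      f"follower speed: {last_velocity[0]:.3f} m/s")
```

With these gains the follower settles at the leader's walking speed while the grips stay slightly more than `length` apart: the small extra stretch is exactly the force signal the follower needs to keep moving, which mirrors the "sense which direction I wanted to go" idea in the quote.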

The important point is that all of these actions take place without human interaction or help, he says.

"This is done in real time, autonomously," he says.

The project also uses a novel communication protocol that sets it apart from other collaborative robot concepts. "We minimize communication. There is a symbolic communication protocol, but it's not continuous. When help is needed, a call for help is broadcast and a helper robot brings the message to another robot. But it's a single shot."
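The quote gives the shape of the protocol but not its messages. The Python sketch below is one possible reading of "single shot": a robot that needs help sends one symbolic request, each robot decides locally whether the request outranks its own current task, and the first robot that accepts suspends its task and takes on the helping job, with no state streamed afterwards. The `HelpRequest` fields, the priority rule and the first-acceptor policy are assumptions made for illustration, not details from RECONFIG, and the relaying step mentioned in the quote is not modelled.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class HelpRequest:
    """One symbolic message, sent once (hypothetical format)."""
    sender: str
    task: str      # symbolic task, e.g. "lift:red:10x15x20"
    priority: int  # higher means more urgent (assumed convention)


@dataclass
class Robot:
    name: str
    current_task: Optional[str] = None
    current_priority: int = 0
    suspended: List[Tuple[str, int]] = field(default_factory=list)
    messages_sent: int = 0

    def ask_for_help(self, task: str, priority: int, bus: "Bus") -> Optional[str]:
        # Single shot: exactly one request, no follow-up status stream.
        self.messages_sent += 1
        return bus.broadcast(HelpRequest(self.name, task, priority))

    def on_help_request(self, req: HelpRequest) -> bool:
        """Decide locally whether to set aside the current task and help."""
        if req.sender == self.name:
            return False
        if self.current_task is not None and req.priority <= self.current_priority:
            return False  # own work is at least as urgent
        if self.current_task is not None:
            self.suspended.append((self.current_task, self.current_priority))
        self.current_task = f"help:{req.sender}:{req.task}"
        self.current_priority = req.priority
        return True

    def finish_current(self) -> None:
        """Resume the most recently suspended task, if any."""
        if self.suspended:
            self.current_task, self.current_priority = self.suspended.pop()
        else:
            self.current_task, self.current_priority = None, 0


class Bus:
    """Toy stand-in for a broadcast: offers the request to robots in list order
    and stops at the first that accepts, so only one robot changes its plan."""

    def __init__(self, robots: List[Robot]):
        self.robots = robots

    def broadcast(self, req: HelpRequest) -> Optional[str]:
        for robot in self.robots:
            if robot.on_help_request(req):
                return robot.name
        return None


a = Robot("A", "carry:table", 5)
c = Robot("C", "inspect:shelf", 9)  # busy with more urgent work, will decline
b = Robot("B", "sort:parts", 1)     # low-priority work, will help
bus = Bus([a, c, b])

helper = a.ask_for_help("lift:red:10x15x20", priority=3, bus=bus)
print(helper, b.current_task, c.current_task)
# prints: B help:A:lift:red:10x15x20 inspect:shelf
b.finish_current()
print(b.current_task)  # prints: sort:parts
```

Keeping the decision local to each robot is what lets the exchange stay a single message: nobody needs a continuously updated picture of the others' states, only the one request and its priority.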