Mar 28 2014

Johns Hopkins researchers have devised a computerized process that could make minimally invasive surgery more accurate and streamlined using equipment already common in the operating room.

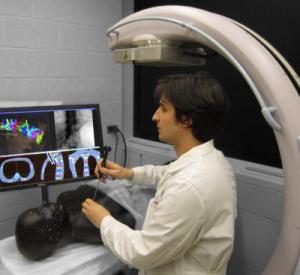

Graduate student Ali Uneri demonstrates the new surgical guidance system. Credit: Johns Hopkins Medicine

In a report published recently in the journal Physics in Medicine and Biology, the researchers say initial testing of the algorithm shows that their image-based guidance system is potentially superior to conventional tracking systems that have been the mainstay of surgical navigation over the last decade.

"Imaging in the operating room opens new possibilities for patient safety and high-precision surgical guidance," says Jeffrey Siewerdsen, Ph.D., a professor of biomedical engineering in the Johns Hopkins University School of Medicine. "In this work, we devised an imaging method that could overcome traditional barriers in precision and workflow. Rather than adding complicated tracking systems and special markers to the already busy surgical scene, we realized a method in which the imaging system is the tracker and the patient is the marker."

Siewerdsen explains that current state-of-the-art surgical navigation involves an often cumbersome process in which someone — usually a surgical technician, resident or fellow — manually matches points on the patient's body to those in a preoperative CT image. This process, called registration, enables a computer to orient the image of the patient within the geometry of the operating room. "The registration process can be error-prone, require multiple manual attempts to achieve high accuracy and tends to degrade over the course of the operation," Siewerdsen says.
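For readers curious about what point-based registration computes, the following is a minimal sketch of the textbook least-squares approach (often called the Kabsch or Procrustes method), written with NumPy. It is an illustration only, not the software used by any commercial tracker or by the Johns Hopkins team. The function names `rigid_register` and `fiducial_registration_error` are hypothetical, and the sketch assumes the matched points are given as paired 3-D coordinates in millimeters.

```python
import numpy as np

def rigid_register(patient_pts, ct_pts):
    """Least-squares rigid transform (R, t) mapping patient points onto CT points.

    Both inputs are (N, 3) arrays of paired points; row i of one array
    is assumed to correspond to row i of the other.
    """
    p = np.asarray(patient_pts, dtype=float)
    q = np.asarray(ct_pts, dtype=float)
    p_mean, q_mean = p.mean(axis=0), q.mean(axis=0)

    # Cross-covariance of the centered point sets.
    H = (p - p_mean).T @ (q - q_mean)
    U, _, Vt = np.linalg.svd(H)

    # Guard against a reflection so that R is a proper rotation.
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = q_mean - R @ p_mean
    return R, t

def fiducial_registration_error(patient_pts, ct_pts, R, t):
    """Root-mean-square distance between mapped patient points and CT points."""
    mapped = np.asarray(patient_pts, dtype=float) @ R.T + t
    diffs = mapped - np.asarray(ct_pts, dtype=float)
    return float(np.sqrt(np.mean(np.sum(diffs ** 2, axis=1))))
```

In this simplified picture, each manually picked point carries some localization error, and the residual reported by `fiducial_registration_error` gives a rough indication of how well the two point sets agree after alignment.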

Siewerdsen's team used a mobile C-arm, a piece of equipment already used in many surgeries, to develop an alternative. They suspected that a fast, accurate registration algorithm could be devised to match two-dimensional X-ray images to the three-dimensional preoperative CT scan in a way that would be automatic and remain up to date throughout the operation.

Starting with a mathematical algorithm they had previously developed to help surgeons locate specific vertebrae during spine surgery, the team adapted the method to the task of surgical navigation. When they tested the method on cadavers, they found a level of accuracy better than 2 millimeters and consistently better than that of a conventional surgical tracker, which is accurate to within 2 to 4 millimeters in surgical settings.

"The breakthrough came when we discovered how much geometric information could be extracted from just one or two X-ray images of the patient," says Ali Uneri, a graduate student in the Department of Computer Science in the Johns Hopkins University Whiting School of Engineering. "From just a single frame, we achieved better than 3 millimeters of accuracy, and with two frames acquired with a small angular separation, we could provide surgical navigation more accurately than a conventional tracker."

The team investigated how small the angle between the two images could be without compromising accuracy and found that as little as 15 degrees was sufficient to provide better than 2 millimeters of accuracy.
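One way to see why the angle between the two images matters is a toy triangulation model. The sketch below is a simplified illustration under strong assumptions, not the team's algorithm: it treats each X-ray as a parallel projection in a 2-D plane and locates a single point, whereas a real C-arm has cone-beam geometry and the registration described in the article works on whole images. The function names `triangulate_depth` and `depth_error` are hypothetical.

```python
import math

def triangulate_depth(x_meas, u_meas, theta_deg):
    """Recover the depth y of a point from two parallel-projection views.

    View 1 looks along the y axis and measures the point's x coordinate.
    View 2 is rotated by theta_deg and measures
    u = x*cos(theta) - y*sin(theta).
    """
    theta = math.radians(theta_deg)
    return (x_meas * math.cos(theta) - u_meas) / math.sin(theta)

def depth_error(detector_error_mm, theta_deg):
    """Depth error caused by a given measurement error in the second view."""
    return detector_error_mm / math.sin(math.radians(theta_deg))

if __name__ == "__main__":
    # Print how a 1 mm detector error is amplified at several separations.
    for angle in (5, 15, 45, 90):
        print(angle, "degrees:", round(depth_error(1.0, angle), 2), "mm")
```

In this toy model, an error in the second view is amplified along the depth direction by a factor of 1/sin(angle), which is about 3.9 at 15 degrees and 1 at 90 degrees. That trade-off is why the smallest usable angular separation is a meaningful question; the figures reported in the article come from the team's cadaver experiments, not from this simplified geometry.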

An additional advantage of the system, Uneri says, is that the two X-ray images can be acquired at an extremely low dose of radiation, far below what is needed for a visually clear image but enough for the algorithm to extract accurate geometric information.

The team is translating the method to a system suitable for clinical studies. While the system could potentially be used in a wide range of procedures, Siewerdsen expects it to be most useful in minimally invasive surgeries, such as spinal and intracranial neurosurgery.

A. Jay Khanna, M.D., an associate professor of orthopaedic surgery and biomedical engineering at the Johns Hopkins University School of Medicine, evaluated the system in its first application to spine surgery. "Accurate surgical navigation is essential to high-quality minimally invasive surgery," he says. "But conventional navigation systems can present a major cost barrier and a bottleneck to workflow. This system could provide accurate navigation with simple systems that are already in the OR and with a sophisticated registration algorithm under the hood."

Ziya Gokaslan, M.D., a professor of neurosurgery at the Johns Hopkins University School of Medicine, is leading the translational research team. "We are already seeing how intraoperative imaging can be used to enhance workflow and improve patient safety," he says. "Extending those methods to the task of surgical navigation is very promising, and it could open the availability of high-precision guidance to a broader spectrum of surgeries than previously available."