A team of researchers at Ashutosh Saxena's Personal Robotics Lab has already trained robots to recognize, pick up, and place common objects. A human element has now been added: the robots will be trained to "hallucinate" the movement and behavior of human beings in relation to those objects.

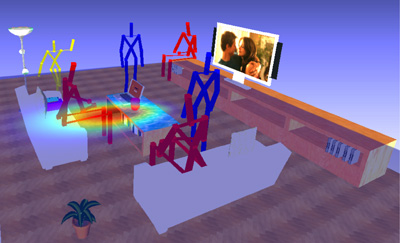

A robot populates a room with imaginary human stick figures in order to decide where objects should go to suit the needs of humans.

The research will be presented on June 21 at the International Symposium on Experimental Robotics in Quebec, and on June 29 at the International Conference on Machine Learning in Edinburgh, Scotland.

According to the researchers, earlier work on robotic placement was based on modeling relationships between objects. Relating objects to humans instead simplifies the computation and helps eliminate errors. A computer first observes three-dimensional (3-D) images of rooms containing objects to learn the human-object relationships. It then envisions human figures positioned in relation to the objects and furniture, and calculates the distance of each object from various parts of the envisioned figures, along with the object's orientation.
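
The distance and orientation calculation described above can be illustrated with a minimal Python sketch. It is not the researchers' implementation: the function name `object_features`, the dictionary layout for an imagined human figure, and the sample coordinates are all assumptions made for illustration.

```python
import math

def object_features(obj_pos, obj_yaw, human):
    """Relate one object to one imagined human figure.

    Hypothetical helper: returns the distance from the object to each
    named body part, plus the object's heading relative to the figure.
    """
    # Euclidean distance from the object to every body part of the figure.
    feats = {part: math.dist(obj_pos, pos)
             for part, pos in human["joints"].items()}
    # Object orientation relative to the figure's facing direction,
    # wrapped into the range [-pi, pi).
    diff = obj_yaw - human["yaw"]
    feats["relative_yaw"] = (diff + math.pi) % (2 * math.pi) - math.pi
    return feats

# An imagined seated figure (positions in meters; values are made up).
human = {
    "yaw": 0.0,
    "joints": {
        "head": (0.0, 0.0, 1.2),
        "right_hand": (0.3, 0.4, 0.8),
    },
}

# A candidate placement for an object, with its heading in radians.
features = object_features((0.6, 0.8, 0.8), math.pi / 2, human)
for name, value in features.items():
    print(f"{name}: {value:.2f}")
```

A placement method along these lines would compute such features for many imagined figures and candidate positions, then prefer placements whose features resemble those seen in the example rooms.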

The method was demonstrated using images of offices, living rooms, and kitchens from the Google 3-D Warehouse, followed by images of apartments and local offices. Eventually, a robot was programmed to perform the predicted placements in local settings. The placement of each object was rated on a scale of 1 to 5.

After comparing a variety of algorithms, the researchers concluded that placements relying on human context were more precise than those relying only on relationships between objects. Combining human context with object-to-object relationships, however, achieved an average score of 4.3. Some tests were performed in rooms containing furniture and some objects, and others in rooms with only a single major piece of furniture. The object-only method scored comparatively low, owing to the lack of context.