Reviewed by Lexie Corner, Apr 23 2025

Researchers at Cornell University have developed a new AI-powered robotic framework called Retrieval for Hybrid Imitation under Mismatched Execution (RHyME). This system allows robots to learn tasks by observing a single demonstration video. The researchers believe that RHyME has the potential to improve the development and deployment of robotic systems by reducing the time, energy, and cost associated with training.

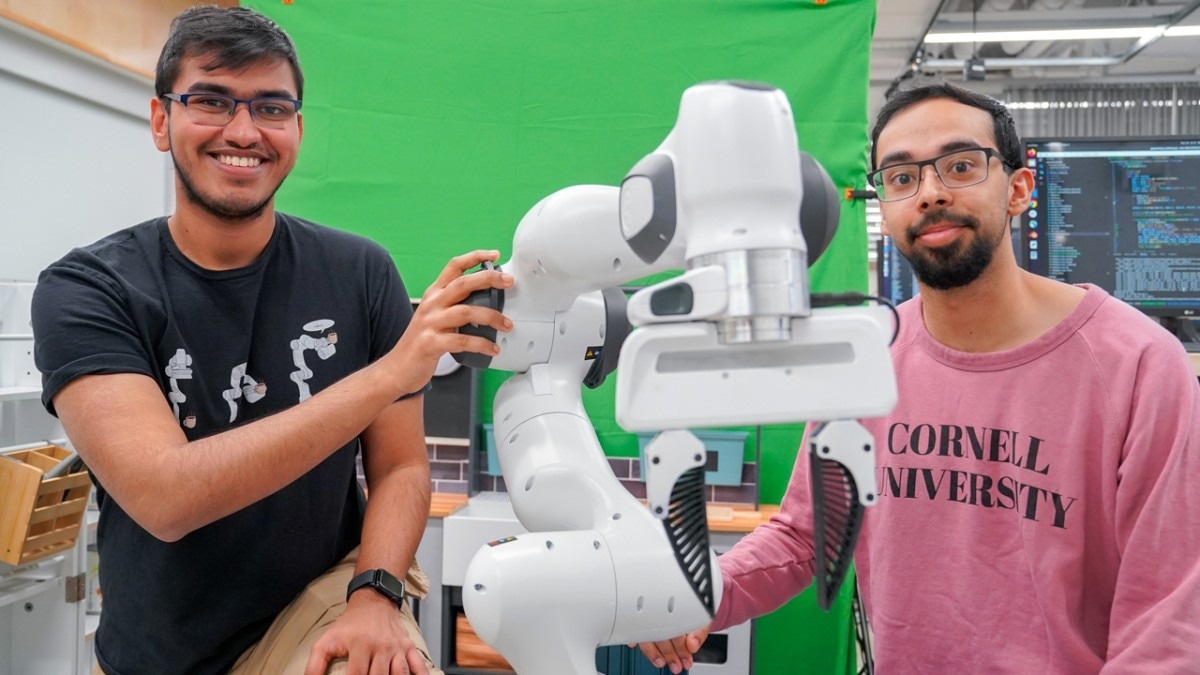

Kushal Kedia (left), a doctoral student in the field of computer science, and Prithwish Dan, M.S. ’26, are members of the development team behind RHyME, a system that allows robots to learn tasks by watching a single how-to video. Image Credit: Louis DiPietro/Provided

Training robots to perform even simple tasks has traditionally been a painstaking process, requiring precise, step-by-step instructions. Robots often struggle and fail when faced with unexpected events, such as dropping an object.

One of the annoying things about working with robots is collecting so much data on the robot doing different tasks. That’s not how humans do tasks. We look at other people as inspiration.

Kushal Kedia, Doctoral Student and Study Lead Author, Computer Science, Cornell University

Kedia will present the findings in a paper titled "One-Shot Imitation under Mismatched Execution" at the Institute of Electrical and Electronics Engineers’ International Conference on Robotics and Automation in Atlanta this May.

The development of home robot assistants has been limited by their inability to understand and adapt to the complex physical world. To address this, researchers like Kedia are using human demonstrations, or "how-to" videos, in lab settings to train robots. This method, called "imitation learning," aims to help robots learn task sequences more quickly and adapt to real-world conditions.

Our work is like translating French to English – we’re translating any given task from human to robot.

Sanjiban Choudhury, Study Senior Author and Assistant Professor, Computer Science, Cornell Ann S. Bowers College of Computing and Information Science

However, this video-based training faces significant challenges. Human movements are often too fluid and complex for robots to accurately track and replicate. Additionally, video-based training typically requires large amounts of data, and the video demonstrations (such as picking up a napkin or stacking plates) must be performed slowly and without errors. Any discrepancies between the actions in the video and the robot's execution have historically resulted in learning failures.

Choudhury adds, “If a human moves in a way that’s any different from how a robot moves, the method immediately falls apart. Our thinking was, ‘Can we find a principled way to deal with this mismatch between how humans and robots do tasks?’”

RHyME is the team’s solution, designed to make robots more adaptable and less prone to failure. It allows a robotic system to use its own memory and make connections when performing tasks it has only seen once, by drawing on its existing library of videos. For example, if a RHyME-equipped robot watches a video of a person taking a mug from a counter and placing it in a sink, it will search its video database for similar actions, such as grasping a cup or lowering a utensil, to inform its own actions.
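The retrieval idea described above can be illustrated with a small sketch. This is not the researchers' implementation: it assumes each video clip has already been reduced to a numeric embedding vector, and it swaps in plain cosine similarity as the matching rule. The clip names, the three-dimensional vectors, and the helper functions `cosine_similarity` and `retrieve_similar_clips` are all hypothetical and exist only for illustration.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve_similar_clips(query_embedding, library, top_k=2):
    """Return the names of the top_k library clips most similar to the query."""
    scored = [
        (cosine_similarity(query_embedding, embedding), name)
        for name, embedding in library.items()
    ]
    scored.sort(reverse=True)  # highest similarity first
    return [name for _, name in scored[:top_k]]


# Hypothetical library of previously seen clips, each with a made-up embedding.
library = {
    "grasp_cup": [0.9, 0.1, 0.0],
    "lower_utensil": [0.1, 0.9, 0.1],
    "open_drawer": [0.0, 0.1, 0.9],
}

# Made-up embedding for a new demonstration: moving a mug from a counter to a sink.
query = [0.8, 0.5, 0.0]

result = retrieve_similar_clips(query, library, top_k=2)
print(result)
```

In this toy setup, the mug demonstration lands closest to the cup-grasping clip, followed by the utensil-lowering clip, while the unrelated drawer clip is left out. A real system would need learned embeddings from video and a way to turn the retrieved clips into robot actions, neither of which this sketch attempts.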

The researchers state that RHyME enables robots to learn multi-step tasks while significantly reducing the amount of robot-specific data required for training. RHyME needs only 30 minutes of robot data. In lab tests, robots trained with this system showed more than a 50% improvement in task success compared to previous methods.

“This work is a departure from how robots are programmed today. The status quo of programming robots is thousands of hours of tele-operation to teach the robot how to do tasks. That’s just impossible. With RHyME, we’re moving away from that and learning to train robots in a more scalable way,” said Choudhury.

The co-authors of the paper, alongside Kedia and Choudhury, include Prithwish Dan, M.S. ’26; Angela Chao, M.Eng. ’25; and Maximus Pace, M.S. ’26.

Google, OpenAI, the U.S. Office of Naval Research, and the National Science Foundation supported the study.