According to a study presented at the AIAA SciTech Forum, aerospace and computer science engineering researchers at The Grainger College of Engineering, University of Illinois Urbana-Champaign, trained a model to autonomously assess terrain and scoop samples quickly, then watched it demonstrate that skill on a robot at a NASA facility.

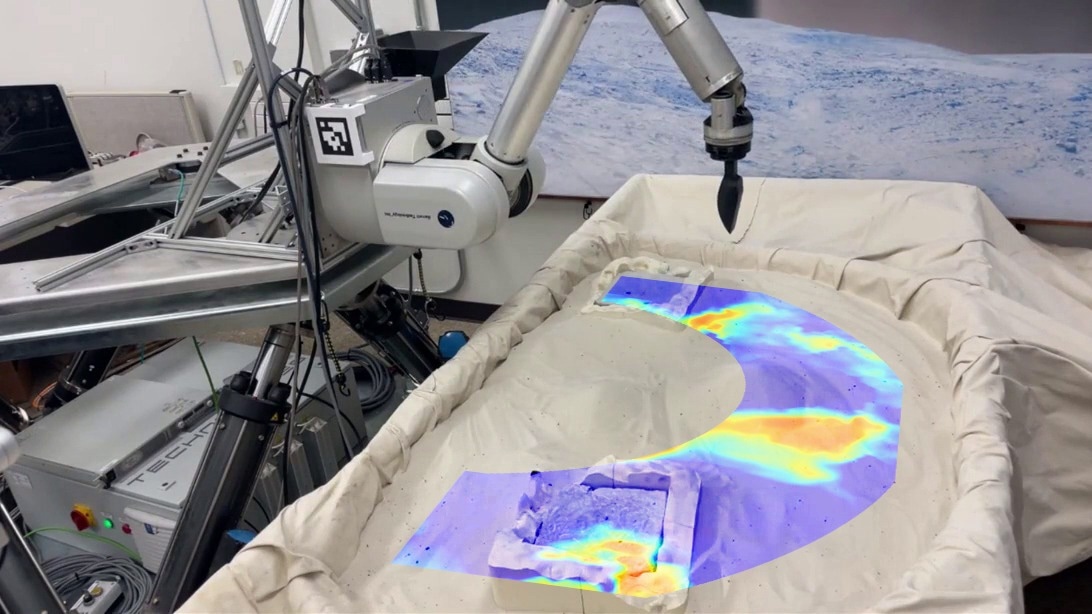

A snapshot of the policy's scooping preferences during testing on NASA's Ocean World Lander Autonomy Testbed at the Jet Propulsion Laboratory. Image Credit: University of Illinois Urbana-Champaign

Extraterrestrial landers tasked with collecting samples from the surfaces of distant moons and planets have limited time and battery life.

Pranay Thangeda, an aerospace PhD student, said the team taught its robotic lander arm to collect scooping data on a variety of materials, including sand and boulders, producing a dataset of 6,700 data points. The two terrains in NASA's Ocean World Lander Autonomy Testbed at the Jet Propulsion Laboratory (JPL) were completely new to the model, which controlled the JPL robotic arm remotely.

We just had a network link over the internet. I connected to the test bed at JPL and got an image from their robotic arm’s camera. I ran it through my model in real time. The model chose to start with the rock-like material and learned on its first try that it was an unscoopable material.

Pranay Thangeda, Aerospace PhD Student, The Grainger College of Engineering, University of Illinois, Urbana-Champaign

Based on what it learned from the image and its first attempt, the robotic arm moved to a more promising location and successfully scooped the other terrain, a finer-grained material. Because one of the mission requirements is for the robot to collect a specified volume of material, the JPL team measured the volume of each scoop until the robot had collected the full amount.
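
The sequence described above (a failed first scoop on the rock-like material, a switch to a more promising spot, then repeated scoops until the required volume is reached) can be illustrated with a deliberately simple online-adaptation loop. The article does not describe the team's actual algorithm, so this is a minimal sketch under stated assumptions, not their method: each candidate location carries a Beta belief about scoop success whose prior is assumed to come from an offline-trained model, the robot greedily scoops where expected success is highest, and every outcome updates that belief. The names `Candidate` and `run_episode`, the prior values, and the scoop volumes are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """Hypothetical scoop location with a Beta(alpha, beta) belief over scoop success."""
    name: str
    alpha: float  # pseudo-count of successes (prior assumed to come from an offline model)
    beta: float   # pseudo-count of failures

    def mean(self) -> float:
        # Posterior mean of the Beta(alpha, beta) success probability
        return self.alpha / (self.alpha + self.beta)

    def update(self, success: bool) -> None:
        # Conjugate Beta-Bernoulli update after observing one scoop attempt
        if success:
            self.alpha += 1.0
        else:
            self.beta += 1.0


def run_episode(cands, truth, volume_per_scoop, target_volume, max_attempts=10):
    """Hypothetical loop: scoop greedily until the target volume is collected.

    `truth` maps a location name to whether scooping there actually succeeds
    (a stand-in for the real robot). Returns the attempted locations in order.
    """
    attempts = []
    collected = 0.0
    while collected < target_volume and len(attempts) < max_attempts:
        best = max(cands, key=lambda c: c.mean())  # highest expected success
        success = truth[best.name]
        best.update(success)                       # adapt online from one observation
        attempts.append(best.name)
        if success:
            collected += volume_per_scoop
    return attempts


if __name__ == "__main__":
    # Illustrative priors only: the rock-like site starts slightly more attractive
    cands = [Candidate("rock-like", 1.2, 0.8), Candidate("fine-grit", 1.1, 0.9)]
    truth = {"rock-like": False, "fine-grit": True}
    print(run_episode(cands, truth, volume_per_scoop=10.0, target_volume=30.0))
```

Run as a script, it prints `['rock-like', 'fine-grit', 'fine-grit', 'fine-grit']`: a single failure drops the rock-like site's expected success from 0.6 to 0.4, below the fine-grit site's 0.55. Treating each location independently is a simplification; a learned model could instead share evidence across visually similar terrain.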

Thangeda said that although the exploration of water worlds originally motivated the study, the approach could be applied to any surface.

Thangeda added, “Usually, when you train models based on data, they only work on the same data distribution. The beauty of our method is that we didn't have to change anything to work on NASA’s test bed because in our method, we are adapting online. Even though we never saw any of the terrains at the NASA test bed, without any fine tuning on their data, we managed to deploy the model trained here directly over there, and the model deployment happened remotely—exactly what autonomous robot landers will do when deployed on a new surface in space.”

Melkior Ornik, Thangeda's adviser, leads one of four projects that received grants through NASA's COLDTech program. The four projects address distinct challenges; what they have in common is that all are part of the Europa program and use the lander as a test bed.

“We were one of the first to demonstrate something meaningful on their platform designed to mimic a Europa surface. It was great to finally see something you worked on for months being deployed on a real, high-fidelity platform. It was cool to see the model being tested on a completely different terrain and a completely different platform robot that we had never trained on. It was a boost of confidence in our model and our approach,” Thangeda added.

Thangeda also said that they received positive comments from the JPL team.

He further stated, “They were happy that we were able to deploy the model without a lot of changes. There were some issues when we were just starting out, but I learned it was because we were the first to try to deploy a model on their platform, so it was network issues and some simple bugs in the software that they had to fix. Once we got it working, people were surprised that it was able to learn within like one or two samples. Some even didn't believe it until they were shown the exact results and methodology.”

Thangeda said that one of the most critical challenges he and his team faced was bringing their system into line with NASA's.

He noted, “Our model was trained on a camera in a particular location with a particular shaped scoop. The location and the shape of the scoop were two things we had to address. To make sure their robot had the exact same scoop shape, we sent them a CAD design and they 3D printed it and attached it to their robot.”

“For the camera, we took their RGB-D point cloud information and reprojected it in real time to a different viewpoint, so that it matched what we had in our robot before we sent it to the model. That way, what the model saw was a similar viewpoint to what it saw during training,” he explained.
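
The viewpoint matching Thangeda describes can be pictured as a standard point-cloud reprojection: transform each 3D point from the source camera frame into a virtual camera placed where the training camera sat, then project it through a pinhole model. The sketch below is a NumPy illustration under assumptions, not the team's code: the rigid transform `T_target_from_src` and intrinsics `K` are hypothetical calibration inputs, and `reproject` is a hypothetical helper that keeps the nearest point per pixel (a simple z-buffer).

```python
import numpy as np


def reproject(points, colors, T_target_from_src, K, width, height):
    """Hypothetical helper: render an RGB-D point cloud from a virtual pinhole camera.

    points: (N, 3) float array in the source camera frame (metres).
    colors: (N, 3) uint8 array of RGB values, one per point.
    T_target_from_src: (4, 4) rigid transform from source to target camera frame.
    K: (3, 3) pinhole intrinsics of the target (virtual) camera.
    Returns (depth, rgb) images of shape (height, width) and (height, width, 3);
    pixels with no projected point have depth 0 and black color.
    """
    # Move points into the target camera frame using homogeneous coordinates
    pts_h = np.hstack([points, np.ones((points.shape[0], 1))])
    pts_t = (T_target_from_src @ pts_h.T).T[:, :3]

    # Keep only points in front of the virtual camera
    front = pts_t[:, 2] > 1e-6
    pts_t, cols = pts_t[front], colors[front]
    z = pts_t[:, 2]

    # Pinhole projection to integer pixel coordinates
    uvw = (K @ pts_t.T).T
    u = np.round(uvw[:, 0] / uvw[:, 2]).astype(int)
    v = np.round(uvw[:, 1] / uvw[:, 2]).astype(int)
    inside = (u >= 0) & (u < width) & (v >= 0) & (v < height)
    u, v, z, cols = u[inside], v[inside], z[inside], cols[inside]

    # Z-buffer: keep the smallest depth that lands on each pixel
    depth = np.full((height, width), np.inf)
    np.minimum.at(depth, (v, u), z)

    # Color each pixel with a point whose depth won the z-buffer test
    rgb = np.zeros((height, width, 3), dtype=np.uint8)
    nearest = z == depth[v, u]
    rgb[v[nearest], u[nearest]] = cols[nearest]

    depth[np.isinf(depth)] = 0.0
    return depth, rgb
```

Pixels that the source camera never observed stay empty (depth 0, black), so a practical pipeline might add hole filling; the article does not say whether the team did.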

Thangeda said the team intends to extend this research to broader autonomous excavation and to automating construction tasks such as digging canals. Such tasks come easily to humans, but because the interactions involved are so subtle, they are difficult for a model to learn on its own.

The study authors were Ashish Goel, Erica L. Tevere, Adriana Daca, Hari D. Nayar, and Erik Kramer from NASA JPL and Pranay Thangeda, Yifan Zhu, Kris Hauser, and Melkior Ornik from the University of Illinois.

NASA supported the study.

AI model masters new terrain at NASA facility one scoop at a time

Video Credit: University of Illinois Urbana-Champaign

Journal Reference:

Thangeda, P., et al. (2024). Learning and Autonomy for Extraterrestrial Terrain Sampling: An Experience Report from OWLAT Deployment. AIAA SciTech Forum. doi.org/10.2514/6.2024-1962