Reviewed by Danielle Ellis, B.Sc., Sep 17 2024

Researchers from UC San Diego's Rady School of Management have found that artificial intelligence algorithms are far better than humans at detecting lies during high-stakes strategic interactions. The study, published in Management Science, could inform efforts to curb misinformation, as AI might help reduce false content on major social media platforms such as YouTube, TikTok, and Instagram.

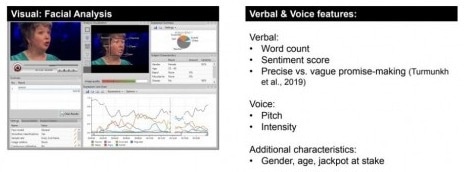

If someone is happier, they are telling the truth, and there are other visual, verbal, and vocal cues that we as humans share when we are being honest. Algorithms work better at uncovering these correlations. Image Credit: University of California San Diego

The study examined participants' ability to spot deception on the popular British television game show Golden Balls, which ran from 2007 to 2010. It found that algorithms outperform humans at predicting which contestants will behave deceptively.

“We find that there are certain ‘tells’ when a person is being deceptive. For example, if someone is happier, they are telling the truth, and there are other visual, verbal, and vocal cues that we as humans share when we are being honest and telling the truth. Algorithms work better at uncovering these correlations.”

Marta Serra-Garcia, Study Lead Author and Associate Professor, Department of Behavioral Economics, Rady School of Management, University of California San Diego

The algorithms correctly predicted contestant behavior 74% of the time, compared with an accuracy rate of 51%–53% for the more than 600 human participants in the study.

By comparing machine learning and human deception-detection skills, the study also tested how people could more effectively distinguish between those who tell the truth and those who lie.

In one experiment, two separate groups of participants watched the same series of "Golden Balls" episodes. One group saw the machine learning flags before watching each video; a flag meant that, according to the algorithm, the contestant was probably lying. The other group was told only after watching the same video that the algorithm had flagged it as deceptive. Participants who received the flag before viewing the video were significantly more likely to trust the algorithm's assessment and were better able to detect lying.

“Timing is crucial when it comes to the adoption of algorithmic advice. Our findings show that participants are far more likely to rely on algorithmic insights when these are presented early in the decision-making process. This is particularly important for online platforms like YouTube and TikTok, which can use algorithms to flag potentially deceptive content,” said Serra-Garcia.

“Our study suggests that these online platforms could improve the effectiveness of their flagging systems by presenting algorithmic warnings before users engage with the content, rather than after, which could lead to misinformation spreading less rapidly.”

Uri Gneezy, Professor and Study Co-Author, Department of Behavioral Economics, Rady School of Management, University of California San Diego

Some of these social media platforms already use algorithms to identify questionable content, but staff members often must wait for user reports before they can investigate a video and decide whether to flag or remove it. When staff at tech companies like TikTok are overwhelmed with investigations, this process can be slow.

The authors concluded, “Our study shows how technology can enhance human decision making and it’s an example of how humans can interact with AI when AI can be helpful. We hope the findings can help organizations and platforms better design and deploy machine learning tools, especially in situations where accurate decision-making is critical.”