Reviewed by Lexie Corner, Apr 5 2024

Researchers studying robotics have created a novel method for producing cameras that could help safeguard images and information gathered by Internet-of-Things and smart home gadgets.

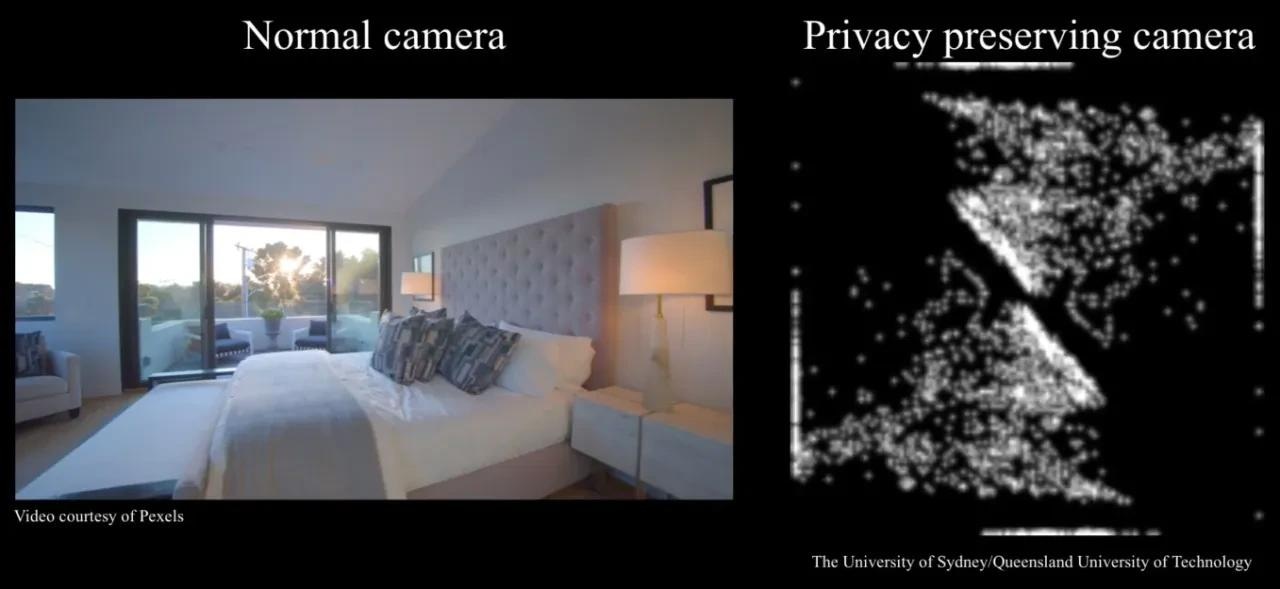

What a normal camera sees compared with what the privacy-preserving camera sees. Image Credit: University of Sydney and Queensland University of Technology

Smart technologies finding their way into homes and offices, such as delivery drones, robotic vacuum cleaners, and smart refrigerators, employ vision to scan their environment and capture videos and images of everyday activities.

Researchers at the QUT Centre for Robotics (QCR) at the Queensland University of Technology and the Australian Centre for Robotics at the University of Sydney have developed a novel method for creating cameras that process and scramble visual data before it is digitized, obscuring it to the point of anonymity to restore privacy.

Sighted systems, such as autonomous vacuum cleaners, are part of the “Internet of Things”: smart systems that connect to the Internet. They can be hacked by bad actors or lost through human error, and their images and videos can be stolen by third parties, sometimes with malicious intent.

The distorted images, which serve as a “fingerprint,” could still be used by robots to complete their tasks, but they do not provide a comprehensive visual representation that would compromise privacy.

Smart devices are changing the way we work and live our lives, but they shouldn’t compromise our privacy and become surveillance tools. When we think of ‘vision,’ we think of it like a photograph, whereas many of these devices don’t require the same type of visual access to a scene as humans do. They have a very narrow scope in terms of what they need to measure to complete a task, using other visual signals, such as color and pattern recognition.

Adam Taras, The University of Sydney

The researchers were able to move processing that typically occurs within a computer into the optics and analog electronics of the camera, which are outside the reach of attackers.

This is the key distinguishing point from prior work, which obfuscated the images inside the camera’s computer, leaving the images open to attack. We go one level beyond to the electronics themselves, enabling a greater level of protection.

Dr. Don Dansereau, Senior Lecturer, The University of Sydney

The researchers attempted to hack their own approach but were unable to reconstruct the images in any recognizable form. They have opened this challenge to the research community at large, inviting others to try to hack their method.

Taras added, “If these images were to be accessed by a third party, they would not be able to make much of them, and privacy would be preserved.”

Dr. Dansereau said that privacy is becoming an increasing concern as more devices come with built-in cameras and as new technologies emerge, such as parcel drones that travel into residential areas to deliver packages.

“You wouldn’t want images taken inside your home by your robot vacuum cleaner leaked on the dark web, nor would you want a delivery drone to map out your backyard. It is too risky to allow services linked to the web to capture and hold onto this information,” he stated.

The method could also be used to build devices that operate in settings where security and privacy are a concern, such as factories, airports, hospitals, warehouses, and schools.

Next, the researchers plan to build physical camera prototypes to demonstrate the approach in practice.

Current robotic vision technology tends to ignore the legitimate privacy concerns of end-users. This is a short-sighted strategy that slows down or even prevents the adoption of robotics in many applications of societal and economic importance. Our new sensor design takes privacy very seriously, and I hope to see it taken up by industry and used in many applications.

Niko Suenderhauf, Professor and Deputy Director, Centre for Robotics, Queensland University of Technology

Professor Peter Corke, Distinguished Professor Emeritus and Adjunct Professor at the QCR, who also advised on the project, added, “Cameras are the robot equivalent of a person’s eyes, invaluable for understanding the world, knowing what is what and where it is. What we don’t want is the pictures from those cameras to leave the robot’s body, to inadvertently reveal private or intimate details about people or things in the robot’s environment.”

Journal Reference:

Taras, A. K., et al. (2024) Inherently privacy-preserving vision for trustworthy autonomous systems: Needs and solutions. Journal of Responsible Technology. doi:10.1016/j.jrt.2024.100079