Unmanned Aerial Vehicles (UAVs) play a crucial role in search and rescue operations during natural disasters like earthquakes. Most existing UAV-based search systems rely on visual data and cannot detect victims buried under debris.

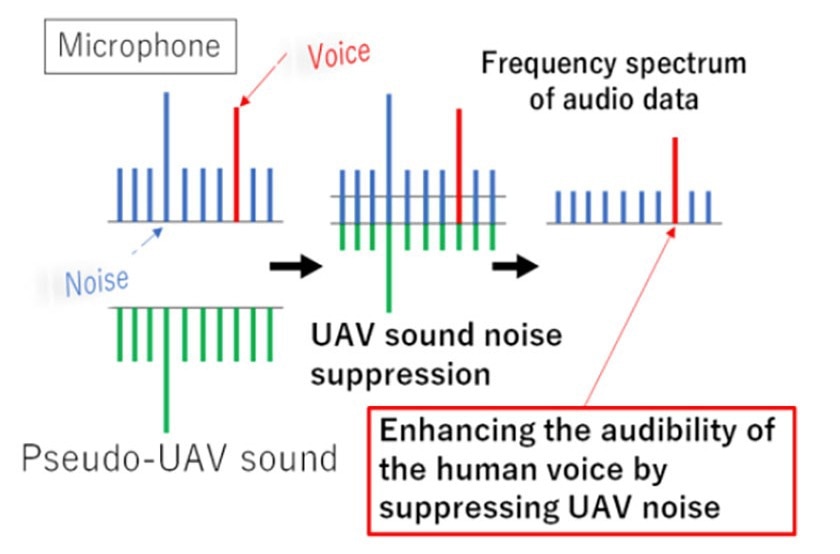

The innovative AI-based noise-canceling method generates a sound similar to the UAV noise and then subtracts it from the sound captured by the UAV's microphone, suppressing the noise and making human sounds easier to hear. Image Credit: Chinthaka Premachandra from Shibaura Institute of Technology (License type: CC BY-NC-ND 4.0).

While some studies have explored the use of sound for detection, the noise generated by UAV propellers often hinders human sounds from being heard. To overcome this challenge, scientists have devised an innovative artificial intelligence (AI) system that efficiently suppresses the noise generated by UAVs while enhancing the detection of human sounds.

Unmanned aerial vehicles, or UAVs, have drawn a lot of attention lately from a variety of industries, including the military, agriculture, construction, and disaster management. These multipurpose machines provide remote access to challenging or dangerous environments and boast exceptional surveillance capabilities.

They are particularly effective in the search for victims in collapsed buildings and rubble following natural catastrophes like earthquakes. This capability allows for the early detection of victims, facilitating prompt response.

Most studies in this area have primarily concentrated on UAVs fitted with cameras, which use visual data to locate victims and evaluate the environment. However, depending solely on visual data may prove inadequate, particularly when victims are buried under debris or in areas outside the cameras’ field of view.

Acknowledging this challenge, a few studies have explored using sound to locate trapped individuals. However, the noise generated by a UAV's fast-rotating propellers, which are mounted directly on the drone, can drown out distant human sounds, presenting a major obstacle. It is therefore essential to minimize propeller noise and isolate the sounds of trapped individuals for successful detection.

A few studies have tried to address this issue by using several microphones to separate victims' sounds from propeller noise, combined with speech recognition techniques. Even so, operators may still find it difficult to identify victim sounds accurately in the processed audio. In addition, existing software often relies on pre-programmed words to isolate human sounds, whereas the sounds victims make can vary with the circumstances.

To overcome these challenges, Professor Chinthaka Premachandra and Mr. Yugo Kinasada from the Department of Electronic Engineering at the School of Engineering in Shibaura Institute of Technology, Japan, have developed an innovative AI-based noise suppression device.

“Suppressing the UAV propeller noise from the sound mixture while enhancing the audibility of human voices presents a formidable research problem. The variable intensity of UAV noise, fluctuating unpredictably with different flight movements, complicates the development of a signal-processing filter capable of effectively removing UAV sound from the mixture. Our system utilizes AI to effectively recognize propeller sound and address these issues.”

Chinthaka Premachandra, Professor, Department of Electronic Engineering, School of Engineering, Shibaura Institute of Technology

The specifics of their study were made available online on December 1st, 2023, and published in the journal IEEE Transactions on Services Computing in January 2024.

At the center of this system is a class of AI models called generative adversarial networks (GANs), which can learn to generate new data that closely resembles the data they are trained on. In this case, the GAN was trained on a variety of UAV propeller sound data.
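
For readers unfamiliar with GANs, the original formulation (Goodfellow et al., 2014) trains a generator $G$, which maps a random vector $z$ to a synthetic sample, against a discriminator $D$, which estimates the probability that a sample is real rather than generated. The two networks play the minimax game below; in this setting, $x$ would be a recorded propeller-sound sample. The article does not say which GAN variant or loss the researchers used, so this is only the generic objective, not their exact training setup.

$$
\min_{G}\max_{D}\; \mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_{z}}\big[\log\big(1 - D(G(z))\big)\big]
$$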

Once trained, the model can generate a sound resembling the UAV propeller noise, referred to as pseudo-UAV sound. This pseudo-UAV sound is then subtracted from the actual sound recorded by the UAV's onboard microphones, enabling operators to hear and recognize human sounds more distinctly.
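
The subtraction step can be sketched as magnitude spectral subtraction in the short-time Fourier domain. This is an assumption made for illustration: the article does not say whether the subtraction happens in the time or frequency domain, and the frame length, hop size, spectral floor, and function names (`stft`, `istft`, `subtract_pseudo_noise`) are hypothetical. The example also passes the true propeller signal as an idealized stand-in for the GAN's pseudo-UAV sound, with two sine tones in place of real recordings.

```python
import numpy as np

FRAME_LEN = 512        # samples per analysis frame (hypothetical value)
HOP = FRAME_LEN // 2   # 50% overlap between frames


def _sqrt_hann(n: int) -> np.ndarray:
    # Square root of a periodic Hann window. Applied at both analysis and
    # synthesis, the product is a Hann window, which sums to 1 at 50% overlap.
    return np.sqrt(0.5 - 0.5 * np.cos(2 * np.pi * np.arange(n) / n))


def stft(x: np.ndarray) -> np.ndarray:
    """Windowed frames -> one-sided spectra, shape (n_frames, FRAME_LEN // 2 + 1)."""
    w = _sqrt_hann(FRAME_LEN)
    n_frames = 1 + (len(x) - FRAME_LEN) // HOP
    frames = np.stack([x[i * HOP:i * HOP + FRAME_LEN] * w for i in range(n_frames)])
    return np.fft.rfft(frames, axis=1)


def istft(spec: np.ndarray) -> np.ndarray:
    """Inverse FFT, synthesis window, then overlap-add the frames."""
    w = _sqrt_hann(FRAME_LEN)
    frames = np.fft.irfft(spec, n=FRAME_LEN, axis=1) * w
    out = np.zeros((len(frames) - 1) * HOP + FRAME_LEN)
    for i, frame in enumerate(frames):
        out[i * HOP:i * HOP + FRAME_LEN] += frame
    return out


def subtract_pseudo_noise(mixture, pseudo_noise, floor=0.05):
    """Remove a pseudo-noise estimate from a mixture by magnitude spectral subtraction."""
    mix_spec = stft(mixture)
    mix_mag = np.abs(mix_spec)
    noise_mag = np.abs(stft(pseudo_noise))
    # Subtract per time-frequency bin; the floor prevents negative magnitudes.
    clean_mag = np.maximum(mix_mag - noise_mag, floor * mix_mag)
    # Only magnitudes were estimated, so reuse the mixture's phase.
    return istft(clean_mag * np.exp(1j * np.angle(mix_spec)))


# Usage with synthetic stand-ins: a 440 Hz "voice" under a louder 3 kHz "propeller" tone.
sr = 16_000
t = np.arange(sr) / sr
voice = 0.5 * np.sin(2 * np.pi * 440 * t)
propeller = np.sin(2 * np.pi * 3000 * t)
cleaned = subtract_pseudo_noise(voice + propeller, pseudo_noise=propeller)
```

Because the noise estimate is subtracted frame by frame, a generator that follows the changing propeller noise can supply a different estimate for each frame. The spectral floor keeps a small fraction of the mixture in every bin instead of zeroing it, a common trade-off that accepts some residual noise in exchange for fewer processing artifacts.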

This method offers several advantages over conventional noise suppression devices. It can accurately suppress UAV noise within a narrow frequency range. Notably, it can adapt to the UAV's changing noise in real time. These advantages could greatly improve the effectiveness of UAVs in search and rescue tasks.
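
Why adapting to changing noise matters can be illustrated with a toy model. It assumes, hypothetically, that the propeller noise keeps a fixed spectral shape while its loudness drifts from frame to frame, and that voice and noise magnitudes simply add. Subtracting one fixed noise template, a stand-in for a static filter, leaves residual noise whenever the loudness drifts, while a per-frame estimate that follows the noise removes it. All values and names here are invented for illustration and are not taken from the study.

```python
import numpy as np

N_FRAMES, N_BINS = 100, 257  # hypothetical spectrogram size

# Hypothetical propeller noise spectrum: harmonic peaks on a low broadband floor.
template = np.full(N_BINS, 0.05)
template[[20, 40, 60, 80]] = 1.05

# Noise loudness drifts across frames, e.g. as the UAV manoeuvres (assumed range).
gain = np.linspace(0.5, 2.0, N_FRAMES)
noise_mag = gain[:, None] * template[None, :]

# A weak, steady stand-in for a human sound in a single frequency bin.
voice_mag = np.zeros((N_FRAMES, N_BINS))
voice_mag[:, 10] = 0.3

# Toy simplification: magnitudes add (phase interactions are ignored).
mixture_mag = voice_mag + noise_mag


def mean_residual(noise_estimate: np.ndarray) -> float:
    """Mean absolute deviation from the voice after subtraction with half-wave rectification."""
    cleaned = np.maximum(mixture_mag - noise_estimate, 0.0)
    return float(np.abs(cleaned - voice_mag).mean())


fixed = mean_residual(np.broadcast_to(template, mixture_mag.shape))  # same template in every frame
adaptive = mean_residual(noise_mag)  # per-frame estimate that follows the drifting loudness
print(f"fixed template: {fixed:.4f}, per-frame estimate: {adaptive:.4f}")
```

In the system described here, the pseudo-UAV sound presumably plays the role of the per-frame estimate; the toy only shows why a static estimate falls short when the noise intensity varies.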

The team conducted tests on a real UAV using a combination of human and UAV sounds. While the system successfully suppressed UAV noise and made human sounds more prominent, some residual noise remained in the final audio output.

According to the researchers, the system's current performance is already sufficient to recommend it for human detection in real disaster areas. The team is also actively working to improve the system further and resolve the remaining issues.

Overall, this innovative research shows immense potential for enhancing the use of UAVs in disaster management.

Premachandra concluded, “This approach not only promises to improve post-disaster human detection strategies but also enhances our ability to amplify necessary sound components when mixed with unnecessary ones. Our ongoing efforts will help in further enhancing the effectiveness of UAVs in disaster response and contribute to saving more lives.”

Journal Reference:

Premachandra, C. & Kinasada, Y. (2024) GAN Based Audio Noise Suppression for Victim Detection at Disaster Sites With UAV. IEEE Transactions on Services Computing. doi: 10.1109/TSC.2023.3338488