An extensive review concludes that there is currently no evidence that artificial intelligence (AI) can be controlled safely.

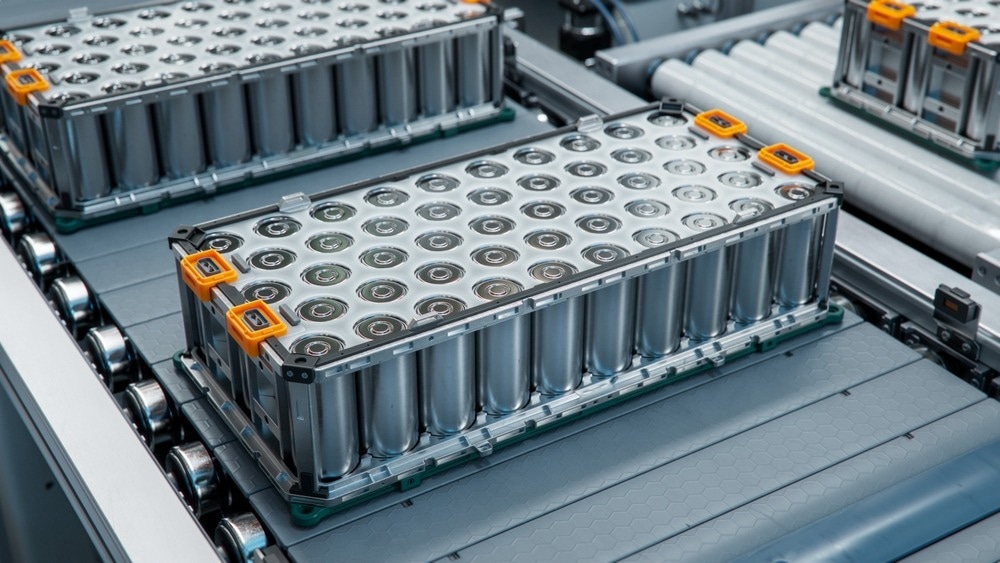

Image Credit: IM Imagery/Shutterstock.com

A researcher cautions that AI should not be developed until it can be shown to be controllable.

Dr. Roman V. Yampolskiy explains that although AI control is recognized as possibly one of the most significant issues facing humanity, it remains poorly defined, poorly understood, and under-researched.

We are facing an almost guaranteed event with potential to cause an existential catastrophe. No wonder many consider this to be the most important problem humanity has ever faced. The outcome could be prosperity or extinction, and the fate of the universe hangs in the balance.

Dr. Roman V. Yampolskiy, Taylor & Francis Group

Uncontrollable Superintelligence

After a thorough analysis of the scientific literature on AI, Dr. Yampolskiy says he found no evidence that AI can be safely controlled, and argues that even if some partial controls existed, they would not be sufficient.

Why do so many researchers assume that AI control problem is solvable? To the best of our knowledge, there is no evidence for that, no proof. Before embarking on a quest to build a controlled AI, it is important to show that the problem is solvable. This, combined with statistics that show the development of AI superintelligence is an almost guaranteed event, show we should be supporting a significant AI safety effort.

Dr. Roman V. Yampolskiy, Taylor & Francis Group

Yampolskiy contends that the capacity to create intelligent software greatly exceeds the capacity to manage or even validate it. Following a thorough analysis of the literature, he concludes that regardless of the advantages they offer, advanced intelligent systems will always carry some degree of risk because they are impossible to fully control. Yampolskiy thinks the AI community should aim to reduce such risk as much as possible while maximizing potential gains.

What Are the Obstacles?

The ability of AI (and superintelligence) to pick up new behaviors, modify its performance, and behave somewhat autonomously in unfamiliar circumstances sets it apart from other programs.

Making AI “safe” is challenging because a superintelligent entity could make an endless number of decisions and mistakes as it develops. Simply anticipating problems and mitigating them with security patches may not be sufficient.

Yampolskiy adds that AI cannot explain its decisions, and even when explanations are provided, humans may struggle to understand them because of the complexity of the concepts involved. With only a “black box” at their disposal and no understanding of the reasoning behind AI's decisions, humans cannot grasp the underlying problem, which increases the risk of future accidents.

AI systems are already being used to make decisions in banking, employment, healthcare, and security, to name a few areas. Such systems should be able to justify their decisions, particularly to demonstrate their impartiality.

Yampolskiy explains: “If we grow accustomed to accepting AI’s answers without an explanation, essentially treating it as an Oracle system, we would not be able to tell if it begins providing wrong or manipulative answers.”

Controlling the Uncontrollable

According to Yampolskiy, as AI's capability rises, so does its autonomy, while the ability to control it falls. Increasing autonomy is correlated with a decline in safety.

For instance, to avoid acquiring false knowledge and to remove all bias inherited from its programmers, a superintelligence could ignore all such knowledge and rediscover or re-prove everything from scratch, but doing so would also eliminate any bias in favor of humans.

Yampolskiy says, “Less intelligent agents (people) can’t permanently control more intelligent agents (ASIs). This is not because we may fail to find a safe design for superintelligence in the vast space of all possible designs, it is because no such design is possible, it doesn’t exist. Superintelligence is not rebelling, it is uncontrollable to begin with. Humanity is facing a choice, do we become like babies, taken care of but not in control or do we reject having a helpful guardian but remain in charge and free.”

Yampolskiy suggests there may be an equilibrium point at which scientists give up some capability in exchange for some control, at the cost of granting the system a degree of autonomy.

Aligning Human Values

Designing a machine that adheres strictly to human commands is one control suggestion, but Yampolskiy highlights the possibility of contradictory instructions, misunderstandings, or malevolent use.

Yampolskiy explains: “Humans in control can result in contradictory or explicitly malevolent orders, while AI in control means that humans are not.”

If AI functioned more as an advisor, it could avoid the problems of misinterpreted direct orders and of malevolent orders. However, the author contends that for AI to be a useful advisor, it must possess superior values of its own.

Yampolskiy explains, “Most AI safety researchers are looking for a way to align future superintelligence to values of humanity. Value-aligned AI will be biased by definition, pro-human bias, good or bad is still a bias. The paradox of value-aligned AI is that a person explicitly ordering an AI system to do something may get a ‘no’ while the system tries to do what the person actually wants. Humanity is either protected or respected, but not both.”

Minimizing Risk

Yampolskiy says that, to reduce the risk it poses, AI must be transparent, scalable, easy to understand in human language, and modifiable with “undo” options.

Yampolskiy proposes classifying all AI as either controllable or uncontrollable, keeping all options open, and considering short-term moratoriums or even full prohibitions on specific AI technology.

Yampolskiy says these findings should not be a cause for discouragement: “Rather it is a reason, for more people, to dig deeper and to increase effort, and funding for AI Safety and Security research. We may not ever get to 100% safe AI, but we can make AI safer in proportion to our efforts, which is a lot better than doing nothing. We need to use this opportunity wisely.”