Citrus production is at a turning point: growth is slowing, and the focus is shifting toward fruit quality and post-harvest processing.

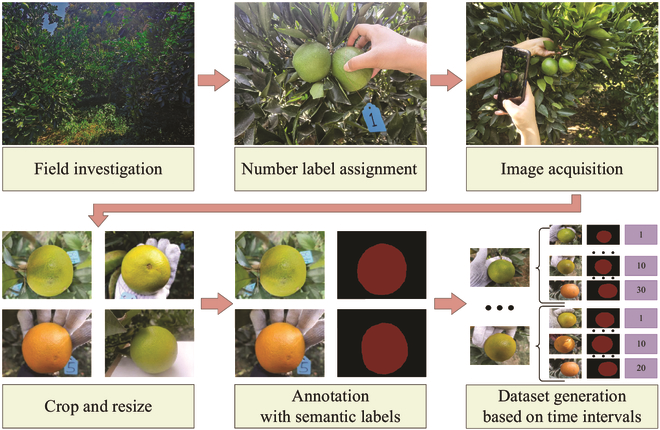

The process of building the dataset. Image Credit: Science Partner Journals

Color change in citrus is a crucial indicator of fruit maturity that has usually been assessed by human judgment. Although recent advances in machine vision and neural networks offer a more objective and reliable method for color analysis, they struggle with fluctuating conditions and with translating color data into practical maturity assessments.

There are still significant research gaps in accurately forecasting color changes over time and creating accessible visualization methods. Moreover, integrating these sophisticated algorithms into agricultural edge devices poses a challenge due to their constrained computing capacities, underscoring the necessity for streamlined and effective technologies tailored for this sector.

The study was published in the journal Plant Phenomics.

In this research, scientists established a novel framework for predicting and visualizing citrus fruit color transformation in orchards and built an Android application on top of it. The network model takes a citrus image and a specified time interval as input and generates a predicted color image of the fruit.

The network was trained and validated on a dataset of 107 orange images that captured color changes during transformation. The framework employs a deep mask-guided generative network for precise predictions, and its resource-efficient design allows it to run on mobile devices.

The network achieved a high Mean Intersection over Union (MIoU) for semantic segmentation, indicating that it performs well under variable conditions. It also excelled in citrus color prediction and visualization, as shown by a high peak signal-to-noise ratio (PSNR) and a low mean local style loss (MLSL), which indicate low distortion and high fidelity in the generated images. The generative network's robustness is evident in its ability to reproduce color transformation accurately across different viewing angles and orange colors.
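For readers unfamiliar with the two standard metrics named here, the following is a minimal sketch of how MIoU and PSNR are commonly computed. It is not the authors' evaluation code: the function names, the flat-list image representation, and the 8-bit pixel range are assumptions made for illustration, and MLSL is omitted because it is specific to the paper.

```python
import math


def mean_iou(true_labels, pred_labels, num_classes):
    """Mean intersection over union across classes present in either mask.

    Both masks are flat sequences of integer class labels (an assumption
    for this sketch; real masks are usually 2D arrays).
    """
    if len(true_labels) != len(pred_labels):
        raise ValueError("masks must have the same number of pixels")
    ious = []
    for c in range(num_classes):
        pairs = list(zip(true_labels, pred_labels))
        intersection = sum(1 for t, p in pairs if t == c and p == c)
        union = sum(1 for t, p in pairs if t == c or p == c)
        if union > 0:  # skip classes absent from both masks
            ious.append(intersection / union)
    return sum(ious) / len(ious) if ious else float("nan")


def psnr(reference, generated, max_value=255.0):
    """Peak signal-to-noise ratio in decibels between two pixel sequences.

    max_value=255.0 assumes 8-bit pixel intensities.
    """
    if len(reference) != len(generated) or not reference:
        raise ValueError("inputs must be non-empty and the same length")
    mse = sum((r - g) ** 2 for r, g in zip(reference, generated)) / len(reference)
    if mse == 0:
        return math.inf  # identical images have no noise
    return 10.0 * math.log10(max_value ** 2 / mse)


# Toy example: a 4-pixel mask (0 = background, 1 = fruit) and a 4-pixel image.
print(mean_iou([0, 0, 1, 1], [0, 1, 1, 1], num_classes=2))
print(psnr([100, 150, 200, 250], [110, 140, 200, 250]))
```

A higher MIoU means the predicted fruit mask overlaps the true mask more closely, and a higher PSNR means the generated image deviates less, pixel by pixel, from the real one.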

Moreover, the network's unified design includes embedding layers that allow accurate predictions over different time intervals with a single model, removing the need for a separate model for each time frame. Sensory panels further confirmed the network's effectiveness, with a majority of panelists finding strong similarity between synthesized and real images.

The study's method enables closer monitoring of fruit development and better-informed harvest timing, and it is applicable not only to various citrus types but also to other fruit crops. Its compatibility with edge devices such as smartphones makes it practical for on-site use and showcases the versatility of generative models in agriculture and other domains.

Journal Reference:

Bao, Z., et al. (2023). Predicting and Visualizing Citrus Color Transformation Using a Deep Mask-Guided Generative Network. Plant Phenomics. doi.org/10.34133/plantphenomics.0057