The Federal Highway Administration has awarded Khaled Abdelghany, a professor of civil and environmental engineering at Southern Methodist University (SMU), a three-year, $1.2 million grant. The goal of the award is to create a computer application that uses artificial intelligence to improve intersection efficiency and safety for vehicles and pedestrians.

Image Credit: Southern Methodist University

Abdelghany, who received the federal funding as the study’s lead researcher, is a fellow of the Stephanie and Hunter Hunt Institute for Engineering and Humanity and a professor in the Department of Civil and Environmental Engineering at the SMU Lyle School of Engineering. Co-researchers on the grant include Assistant Professor Mahdi Khodayar of The University of Tulsa and Professor Michael Hunter of the Georgia Institute of Technology, who is Director of the Georgia Transportation Institute.

The grant is part of the Federal Highway Administration’s Exploratory Advanced Research (EAR) Program, whose purpose is to use artificial intelligence (AI) and machine learning to make transportation safer and more efficient. The program works with universities, commercial companies, and government agencies to conduct cutting-edge research in these areas.

Intersections are critical to highway safety and efficiency. According to the Federal Highway Administration, intersections account for around one-quarter of all traffic fatalities and one-half of all traffic injuries in the United States each year.

Improving Traffic Safety at Intersections with AI

PANORAMA: An Interpretable Context-Aware AI Framework for Intersection Detection and Signal Optimization is a program being developed by Abdelghany, Hunter, and Khodayar. This program is applicable to traffic signals at intersections around the country.

In most cases, traffic lights at junctions are programmed to change from red to green in response to past traffic patterns and the detection of approaching vehicles. However, this method ignores other intersection users, such as wheelchair users, cyclists, and pedestrians, as well as short-term alterations in the traffic pattern caused by factors like weather.

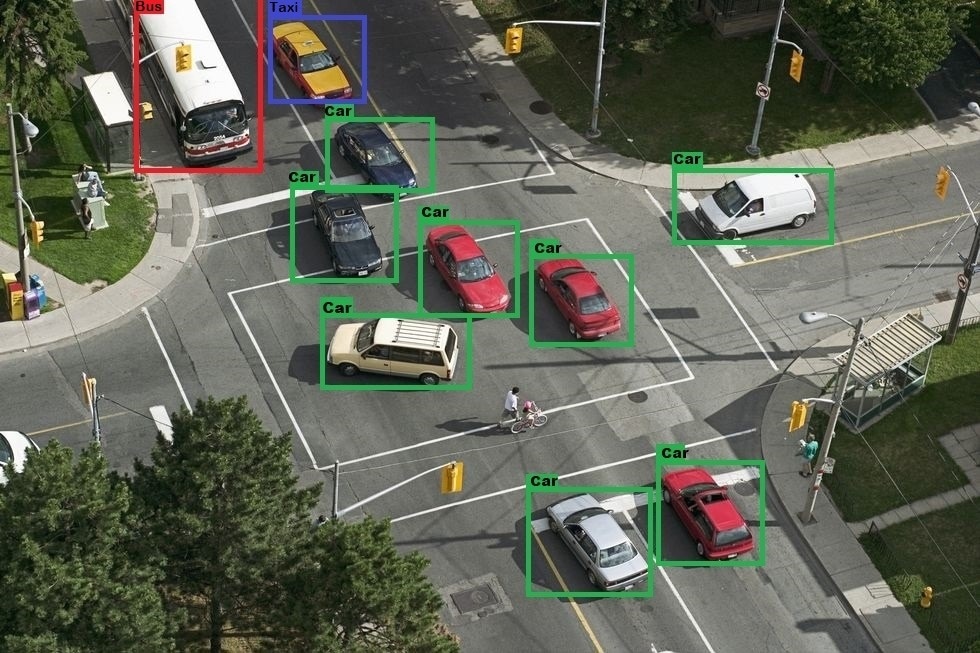

Using video cameras, PANORAMA will identify the traffic at these intersections, categorizing vehicles, scooters, or any other entities present. PANORAMA will then determine whether the traffic light should display green or red. We are devising an adaptive real-time control system.

Khaled Abdelghany, Professor, Civil and Environmental Engineering, Southern Methodist University

By combining optimal control with computer vision technologies, PANORAMA generates real-time signal timing plans that improve intersection efficiency and safety while accounting for variables such as traffic characteristics, weather, and time of day.

Ensuring safety for all users, including pedestrians, cyclists, scooter users, and those with disabilities, is essential for equitable transportation. Moreover, PANORAMA will be cost-effective as it does not require infrastructure beyond that already found at many intersections.

Michael Hunter, Professor, Georgia Institute of Technology

PANORAMA is a significant project since it will use interpretable AI.

Utilizing AI, PANORAMA will not be a black box. Instead, it will provide an explanation for its recommendations regarding green or red-light signals, offering essential information to the traffic light controller operators.

Mahdi Khodayar, Assistant Professor, The University of Tulsa

Because training an AI model efficiently requires a large amount of data, the research team will build the model using high-performance computing resources at SMU. However, PANORAMA will be able to operate on any computer once the system has been sufficiently trained and validated.

Along with making intersections safer and helping traffic flow more smoothly, PANORAMA will reduce pollution from idling automobiles.

“We will be able to assess the performance of each intersection, knowing which ones are operating efficiently and which are not,” Abdelghany concluded.

The Federal Highway Administration is funding this project under Agreement No. 693JJ32350030.