Researchers from the University of Illinois Urbana-Champaign and Duke University have joined forces to create a robotic system for eye examinations. The National Institutes of Health (NIH) has granted them $1.2 million to further develop and enhance this system.

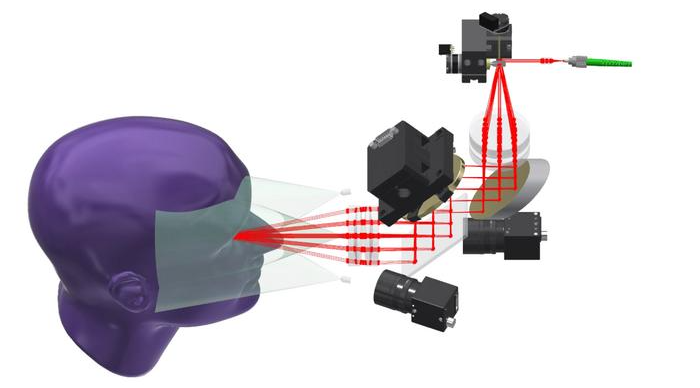

The robotic eye examination system developed by Hauser and his collaborators. Image Credit: The Grainger College of Engineering at the University of Illinois Urbana-Champaign

Scientists have come up with a robotic system that automatically positions the examination sensors to scan human eyes.

It uses an optical scanning method that works from a safe distance from the eye, and the scientists are now adding features that will let it carry out most of the steps of a standard eye exam. These features will require the system to operate much closer to the eye.

Instead of having to spend time in a doctor’s office going through the manual steps of routine examinations, a robotic system can do this automatically. This would mean faster and more widespread screening leading to better health outcomes for more people. But to achieve this, we need to develop safer and more reliable controls, and this award allows us to do just that.

Kris Hauser, Study Principal Investigator and Professor, Computer Science, Grainger College of Engineering, University of Illinois

Automated medical examinations have the potential to increase access to routine healthcare services for a broader population while also enabling healthcare professionals to attend to a larger number of patients. Nevertheless, medical examinations introduce distinct safety considerations compared to other automated procedures.

It is crucial that these robots can be trusted to perform their tasks reliably and safely, especially when working close to delicate and sensitive body parts.

Hauser and his collaborators previously developed a robotic eye examination system that uses optical coherence tomography, a method that scans the eye to build a three-dimensional map of its interior.

This capability enables several conditions to be diagnosed, but the scientists want to extend the system by adding an aberrometer and a slit eye examiner. These additional features require the robot arm to be held within two centimeters of the eye, underscoring the need for improved robotic safety.

Getting the robot within two centimeters of the patient’s eye while ensuring safety is a bit of a new concern. If a patient’s moving towards the robot, it has to move away. If the patient is swaying, the arm has to match their movement.

Kris Hauser, Study Principal Investigator and Professor, Computer Science, Grainger College of Engineering, University of Illinois
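The behavior Hauser describes, backing away when the patient approaches and following the patient when they sway, can be illustrated with a very small control sketch. The code below is only an illustration under stated assumptions and is not the researchers' controller: the function name `arm_velocity`, the one-dimensional model, the gain, and the speed limit are all hypothetical, and only the two-centimeter gap comes from the article.

```python
def arm_velocity(gap_m, eye_velocity_m_s,
                 target_gap_m=0.02,    # two centimeters, as stated in the article
                 gain_per_s=5.0,       # assumed proportional gain (hypothetical)
                 max_speed_m_s=0.25):  # assumed arm speed limit (hypothetical)
    """Return a commanded arm velocity along a single axis.

    Sign convention (an assumption of this sketch): the axis points from
    the robot toward the patient, so a negative eye velocity means the
    patient is moving toward the robot, and a negative return value
    means the arm retreats.
    """
    # Feedforward term: match the patient's own motion (handles swaying).
    # Feedback term: correct any deviation from the desired gap.
    error_m = gap_m - target_gap_m
    velocity = eye_velocity_m_s + gain_per_s * error_m
    # Never command more than the arm is assumed able to deliver.
    return max(-max_speed_m_s, min(max_speed_m_s, velocity))


# Patient leans toward the robot at 0.1 m/s while the gap is on target:
# the arm retreats at the same speed.
print(arm_velocity(gap_m=0.02, eye_velocity_m_s=-0.1))

# Gap has shrunk to one centimeter with the patient still: the arm backs off.
print(arm_velocity(gap_m=0.01, eye_velocity_m_s=0.0))
```

A real system would work in three dimensions, estimate eye position from noisy sensors, and layer hard safety limits on top of any such tracking law; the sketch only shows why matching the patient's velocity and correcting the gap error produces the two behaviors in the quote.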

Hauser compared the control system to those used in autonomous vehicles. While the system cannot react to every possible human behavior, he said, it must avoid “at-fault collisions,” as self-driving cars do.

The award will allow the scientists to perform large-scale reliability testing. A significant component of such tests is ensuring that the system works for as many people as possible. To that end, the scientists have developed a second robot that uses mannequin heads to emulate sudden human movements.

Furthermore, the second robot will randomize the heads’ appearance with various facial features, skin tones, hair, and coverings to help the scientists understand and reduce the effects of algorithmic bias in their system.

The system will be developed for use in clinical settings, but Hauser imagines that one day, these systems could be utilized in retail settings similar to blood pressure stations.

Something like this could be used in an eyeglass store to scan your eyes for the prescription, or it could give a diagnostic scan in a pharmacy and forward the information to your doctor. This is really where an automated examination system like this would be most effective: giving as many people access to basic health care services as possible.

Kris Hauser, Study Principal Investigator and Professor, Computer Science, Grainger College of Engineering, University of Illinois