Reviewed by Alex Smith, Nov 7 2022

Scientists from Tokyo Metropolitan University have designed a virtual reality (VR) remote collaboration platform that allows users on Segways to share both what they see and the acceleration they feel as they move.

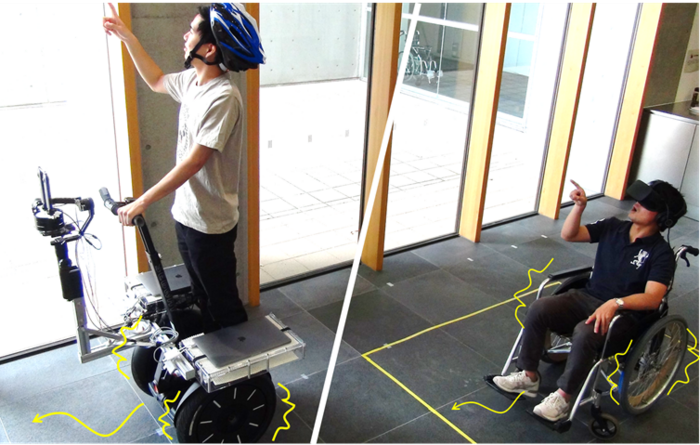

A modified wheelchair unit recreates the acceleration of a Segway for a remote user, reducing VR sickness. Image Credit: Tokyo Metropolitan University.

Riders fitted with accelerometers and cameras can feed their sensations back to a remote user who wears a VR headset and sits in a modified wheelchair. User surveys demonstrated a substantial reduction in VR sickness, promising an enhanced experience for remote collaboration tasks.

VR technology is rapidly progressing, allowing users to experience and share an immersive, three-dimensional (3D) environment. In remote operations, one of the big advances it provides is an opportunity for workers in various locations to instantly share what they see and hear.

One example is users on personal mobility devices in large warehouse facilities, construction sites, and factories. Riders can cover large spaces easily while pointing out problems in real time to a remote co-worker.

However, one major disadvantage can ruin the entire experience: VR sickness. VR sickness is a type of motion sickness that arises when users see “motion” through their headsets without actually moving.

Symptoms include nausea, headaches, and occasionally vomiting. The issue is particularly acute in the above example, where the person sharing the experience is moving about.

To address this problem, scientists from Tokyo Metropolitan University, led by Assistant Professor Vibol Yem, have developed a system that allows users to share both what they see and the sensation of movement.

They concentrated on Segways as a common, widely available personal mobility vehicle, fitting two 3D cameras and a set of accelerometers to capture not only visual cues but also detailed information on the vehicle's acceleration.

This data was transmitted via the internet to a remote user wearing a VR headset on a modified wheelchair with individual motors attached to the wheels. As the user on the Segway picked up speed, so did the wheelchair, enabling remote users to see the same scenery and feel the same acceleration.

Of course, the wheelchair could not travel the same distances as the Segway; it was smoothly returned to its original position whenever the Segway was not accelerating.

The researchers tested their device by asking volunteers to act as remote users and rate their experience. VR sickness dropped by 54% when the sensation of movement was included, and ratings of the user experience were higher.

They also observed subtleties in the way the data should be fed back. For instance, users felt it was best when about 60% of the acceleration suggested by the visual cues was fed back to the wheels, mainly because of how sensitive the vestibular system (how people sense orientation, balance, and motion) is compared with vision.
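The two behaviors described above, scaling the acceleration to about 60% and smoothly returning the wheelchair to its starting point, can be illustrated with a small simulation. This is only a minimal sketch and not the researchers' controller: the spring-damper return term, its `stiffness` and `damping` values, the time step, and the function name `wheelchair_positions` are all assumptions made for illustration. Only the 0.6 gain comes from the article.

```python
ACCEL_GAIN = 0.6  # share of the visually implied acceleration fed to the wheels (from the article)


def wheelchair_positions(segway_accel, dt=0.01, gain=ACCEL_GAIN,
                         stiffness=4.0, damping=4.0):
    """Simulate wheelchair position (m) for a list of Segway accelerations (m/s^2).

    Assumed model: the chair receives a scaled copy of the Segway's
    acceleration, plus a critically damped spring-damper term that pulls it
    back toward its starting position (a simple "washout").
    """
    position, velocity = 0.0, 0.0
    trace = []
    for a in segway_accel:
        # Scaled acceleration cue minus the hypothetical return-to-origin term
        accel = gain * a - stiffness * position - damping * velocity
        velocity += accel * dt
        position += velocity * dt
        trace.append(position)
    return trace


# Hypothetical input: 1 s of 1 m/s^2 acceleration, then 10 s at constant speed
pulse = [1.0] * 100 + [0.0] * 1000
trace = wheelchair_positions(pulse)
print(f"peak displacement: {max(trace):.3f} m, final: {trace[-1]:.6f} m")
```

In this sketch the chair moves forward a short distance while the Segway accelerates, then drifts back to its origin once the acceleration stops, so the remote user feels the onset of motion without the chair needing much floor space.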

Though improvements are still required, the team’s system opens exciting new opportunities for remote collaboration, freeing remote users from a major disadvantage of VR technology.

This study received support from the Local-5G Project at Tokyo Metropolitan University, MIC/SCOPE # 191603003 and JSPS KAKENHI Grant Number 18H04118.

Siggraph Asia E-tech 2019: TwinCam Go

Video Credit: Tokyo Metropolitan University.

Journal Reference:

Yem, V., et al. (2022) Vehicle-ride sensation sharing system with stereoscopic 3D visual perception and vibro-vestibular feedback for immersive remote collaboration. Advanced Robotics. doi.org/10.1080/01691864.2022.2129033.