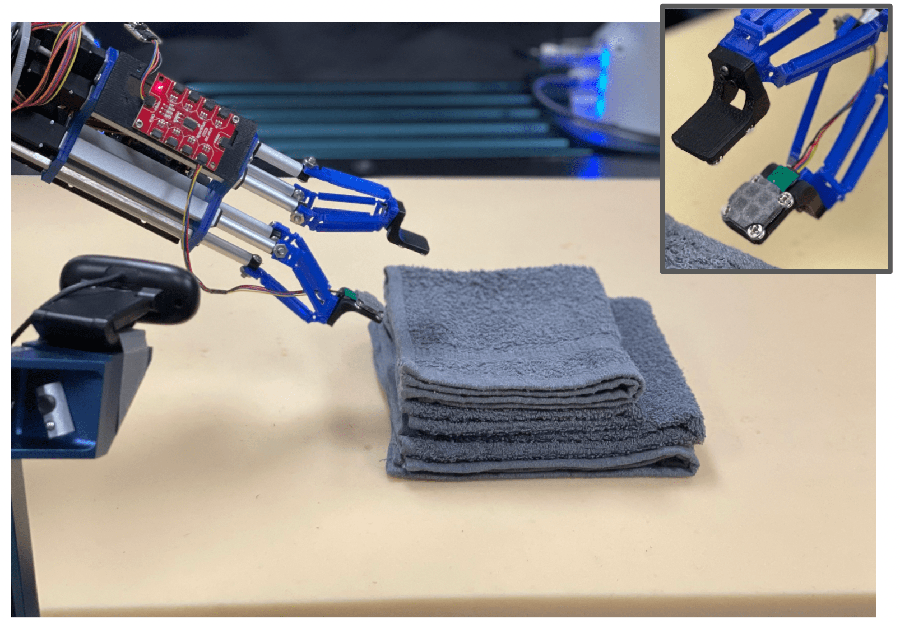

New research from the Robotics Institute can help robots feel layers of cloth, which could one day allow robots to assist people with household tasks like folding laundry. Image Credit: Carnegie Mellon University's Robotics Institute

David Held is an assistant professor in the School of Computer Science at Carnegie Mellon University’s Robotics Institute.

To fold laundry, for instance, a robot needs a sensor that replicates how a human finger can feel the top layer of a shirt or towel and grip the layers underneath it. Scientists could program a robot to sense the top layer of cloth and grip it, but without sensing the other layers, the robot would grasp only the top layer and never completely fold the cloth.

“How do we fix this?” Held asked. “Well, maybe what we need is tactile sensing.”

ReSkin, created by scientists at Carnegie Mellon and Meta AI, was the perfect solution. The open-source touch-sensing “skin” is composed of a thin, elastic polymer embedded with magnetic particles to measure three-axis tactile signals. In the latest research paper, scientists employed ReSkin to help the robot feel layers of cloth instead of depending on vision sensors to perceive them.

“By reading the changes in the magnetic fields from depressions or movement of the skin, we can achieve tactile sensing,” said Thomas Weng, a Ph.D. student in the R-Pad Lab, who was part of the project with RI postdoctoral fellow Daniel Seita and graduate student Sashank Tirumala. “We can use this tactile sensing to determine how many layers of cloth we've picked up by pinching with the sensor.”
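As a rough illustration of the idea Weng describes, the sketch below maps a change in a three-axis magnetic-field reading to a layer count using a nearest-centroid rule. Everything in it is an assumption made for illustration: the calibration values, the units, and the function names `field_change` and `classify_layers` are hypothetical, and the classifier is a simple stand-in rather than the method used in the paper.

```python
import math

# Hypothetical calibration data: mean change in magnetic field (Bx, By, Bz),
# in arbitrary units, recorded while pinching a known number of cloth layers.
# These numbers are invented for illustration and are not from the paper.
CENTROIDS = {
    0: (0.0, 0.0, 5.0),
    1: (0.5, 0.2, 12.0),
    2: (1.0, 0.4, 20.0),
}

def field_change(baseline, reading):
    """Subtract the resting (no-contact) reading from the current one."""
    return tuple(r - b for r, b in zip(reading, baseline))

def classify_layers(delta):
    """Return the layer count whose centroid is nearest to the field change."""
    return min(CENTROIDS, key=lambda k: math.dist(delta, CENTROIDS[k]))

baseline = (10.0, -3.0, 40.0)   # reading with the gripper open
reading = (10.6, -2.7, 51.5)    # reading while pinching the cloth
print(classify_layers(field_change(baseline, reading)))
```

With these made-up numbers, the pinch reading lies closest to the one-layer centroid. A real system would learn the mapping from many labeled pinches across different fabrics.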

Other studies have used tactile sensing to grasp solid and firm objects, but cloth is “deformable,” meaning it shifts when touched, which makes the task even more challenging. Adjusting the robot’s grip on the cloth changes both the cloth’s pose and the sensor readings.

Scientists did not instruct the robot where or how to grasp the cloth. Rather, they programmed the robot to sense how many layers of fabric it was gripping by using the sensors in ReSkin, then adjust its grip and try again. The team tested the robot by having it pick up one and two layers of cloth, and used cloth of various colors and textures to show that it could generalize beyond the training data.
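The sense-then-adjust behavior described above can be pictured as a simple feedback loop. The sketch below is only a toy version under stated assumptions: `singulate`, `fake_sense`, the insertion-depth parameter, and the fixed step size are all hypothetical, and the simulated cloth stack stands in for a real robot and sensor. It is not the controller from the paper.

```python
def singulate(target_layers, sense_layers, start_depth_mm=0.0,
              step_mm=0.5, max_attempts=10):
    """Regrasp until the sensed layer count matches the target.

    sense_layers(depth_mm) is a hypothetical callback that pinches with the
    finger inserted at the given depth and returns the layer count inferred
    from touch. Returns (depth, attempts), or (None, max_attempts) on failure.
    """
    depth = start_depth_mm
    for attempt in range(1, max_attempts + 1):
        felt = sense_layers(depth)
        if felt == target_layers:
            return depth, attempt
        # Too few layers: insert the finger deeper. Too many: back it off.
        depth += step_mm if felt < target_layers else -step_mm
    return None, max_attempts

def fake_sense(depth_mm, layer_thickness_mm=1.0, total_layers=3):
    """Toy stand-in for robot plus sensor: a flat stack of uniform layers."""
    layers = int(max(depth_mm, 0.0) // layer_thickness_mm)
    return min(layers, total_layers)

print(singulate(2, fake_sense))  # (2.0, 5)
```

In this toy setup the loop needs five pinches to reach a two-layer grasp starting from the surface. Real cloth deforms with every grasp, so the relationship between grip adjustment and layer count is far less predictable than this simulation suggests.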

The flexibility and thinness of the ReSkin sensor made it possible to program the robots to grip something as flimsy as layers of cloth.

The profile of this sensor is so small, we were able to do this very fine task, inserting it between cloth layers, which we can't do with other sensors, particularly optical-based sensors. We were able to put it to use to do tasks that were not achievable before.

Thomas Weng, Ph.D. Student, Robots Perceiving and Doing Lab, Robotics Institute, Carnegie Mellon University

There is still more research to be performed before the robot can be used for commercial purposes. This begins with steps like smoothing wrinkled fabric, selecting the correct number of layers of cloth to fold, then folding the cloth in the correct direction.

It really is an exploration of what we can do with this new sensor. We're exploring how to get robots to feel with this magnetic skin for things that are soft, and exploring simple strategies to manipulate cloth that we'll need for robots to eventually be able to do our laundry.

Thomas Weng, Ph.D. Student, Robots Perceiving and Doing Lab, Robotics Institute, Carnegie Mellon University

The team will present their research paper, “Learning to Singulate Cloth Layers Using Tactile Feedback,” at the 2022 International Conference on Intelligent Robots and Systems, held October 23 to 27, 2022, in Kyoto, Japan. The paper also received the Best Paper award at the conference’s 2022 RoMaDO-SI workshop.

Journal Reference

Tirumala, S., et al. (2022) Learning to Singulate Cloth Layers Using Tactile Feedback. arXiv. doi.org/10.48550/arXiv.2207.11196.