Reviewed by Mila Perera, Sep 19 2022

Professor James Elder, co-author of a York University study, says that deep convolutional neural networks (DCNNs) do not perceive objects the way humans do, through configural shape perception, and that this could be dangerous in real-world AI applications.

Image Credit: York University.

The study was published in iScience, a Cell Press journal. “Deep learning models fail to capture the configural nature of human shape perception” is a joint study by Elder, a York Research Chair in Human and Computer Vision and Co-Director of York’s Centre for AI & Society, and Nicholas Baker, an Assistant Psychology Professor at Loyola College in Chicago and a former VISTA postdoctoral fellow at York.

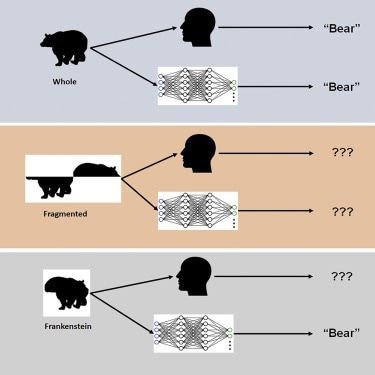

To discover how the human brain and DCNNs process complete, configural object properties, the scientists used novel visual stimuli known as “Frankensteins.”

Frankensteins are simply objects that have been taken apart and put back together the wrong way around. As a result, they have all the right local features, but in the wrong places.

James Elder, Study Co-Author and York Research Chair, Human and Computer Vision, York University
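The rearrangement Elder describes can be illustrated with a toy sketch. The article does not give the study's exact procedure, so the following assumes one simple way to build such a stimulus: mirror the top half of a binary silhouette left to right and leave the bottom half alone. The function names `make_frankenstein` and `row_counts`, and the 4x4 grid, are hypothetical and chosen only for illustration.

```python
def make_frankenstein(silhouette):
    """Return a copy of a binary silhouette with its top half mirrored.

    `silhouette` is a list of equal-length rows of 0/1 values.
    Assumption: this is only one possible way to rearrange an object's
    parts; it is not taken from the study itself.
    """
    mid = len(silhouette) // 2
    top = [list(reversed(row)) for row in silhouette[:mid]]  # flipped left-right
    bottom = [list(row) for row in silhouette[mid:]]         # unchanged
    return top + bottom


def row_counts(image):
    """Count filled pixels per row, a crude stand-in for 'local features'."""
    return [sum(row) for row in image]


# Toy asymmetric "object" on a 4x4 grid.
original = [
    [1, 1, 0, 0],
    [1, 0, 0, 0],
    [1, 1, 1, 0],
    [1, 1, 1, 1],
]
frankenstein = make_frankenstein(original)

for row in frankenstein:
    print(row)
```

In this toy case the per-row pixel counts of the two images are identical while the overall shape differs, which loosely mirrors the idea of keeping the right local features but putting them in the wrong places.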

The researchers found that although the human visual system is confused by Frankensteins, DCNNs are not, which reveals an insensitivity to configural object properties.

Our results explain why deep AI models fail under certain conditions and point to the need to consider tasks beyond object recognition in order to understand visual processing in the brain. These deep models tend to take ‘shortcuts’ when solving complex recognition tasks. While these shortcuts may work in many cases, they can be dangerous in some of the real-world AI applications we are currently working on with our industry and government partners.

James Elder, Study Co-Author and York Research Chair, Human and Computer Vision, York University

Elder explains that traffic video safety systems are one such application: “The objects in a busy traffic scene—the vehicles, bicycles, and pedestrians—obstruct each other and arrive at the eye of a driver as a jumble of disconnected fragments.”

“The brain needs to correctly group those fragments to identify the correct categories and locations of the objects. An AI system for traffic safety monitoring that is only able to perceive the fragments individually will fail at this task, potentially misunderstanding risks to vulnerable road users.”

According to the scientists, changes to training and architecture intended to make the networks more brain-like did not produce configural processing, and none of the networks could accurately predict human object judgments trial by trial.

“We speculate that to match human configural sensitivity, networks must be trained to solve a broader range of object tasks beyond category recognition,” Elder concludes.

Journal Reference

Baker, N., and Elder, J. (2022) Deep learning models fail to capture the configural nature of human shape perception. iScience. doi.org/10.1016/j.isci.2022.104913.