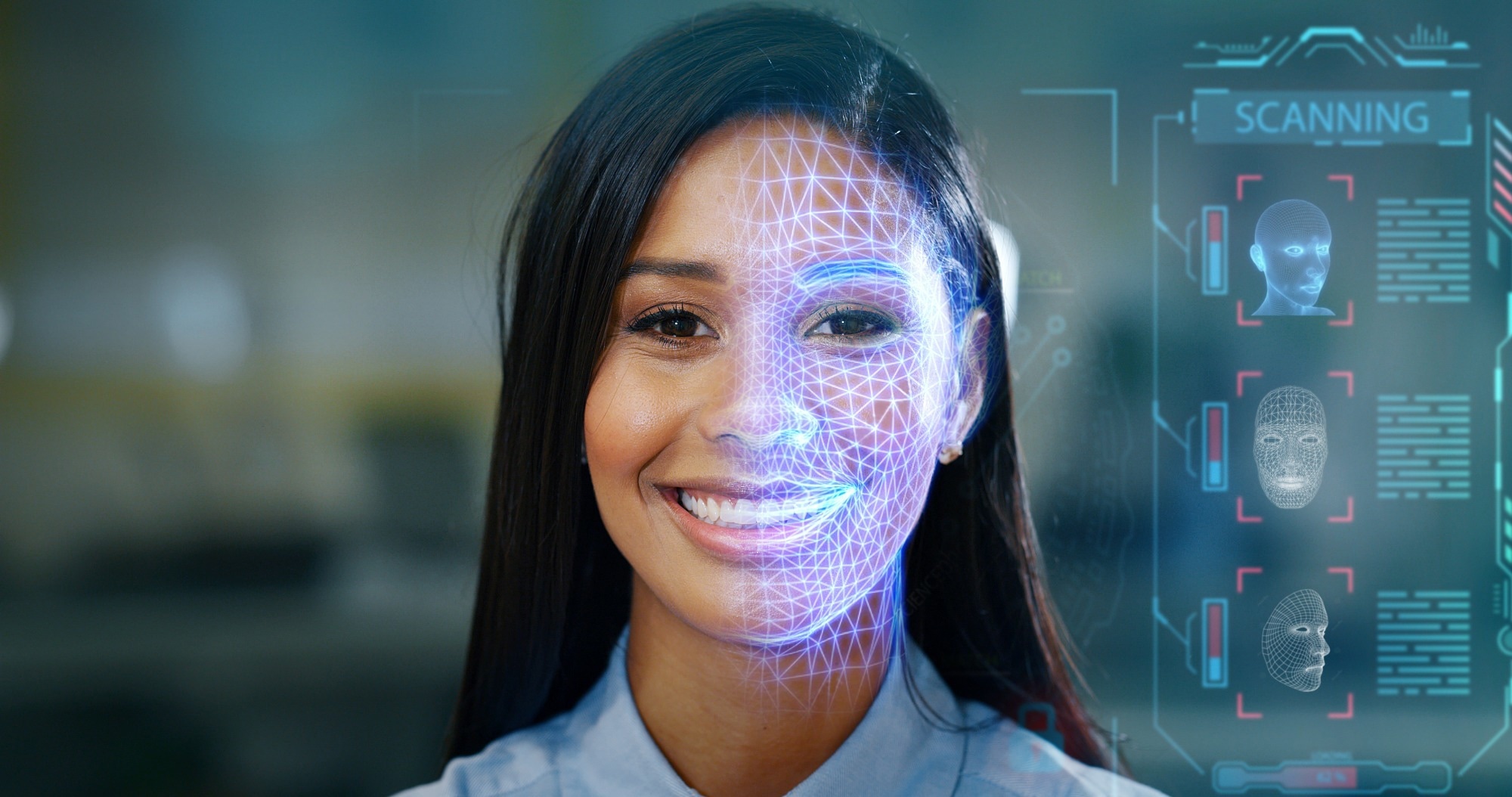

Artificial intelligence (AI) is changing the way we see the world by turning data into services that make people’s lives more efficient. It powers many of the programs that help with everyday tasks, such as social networking with friends and traveling with ride-share apps. The most common examples of AI in everyday life are travel navigation, smart home devices, smartphones, drones, and smart cars.

Image Credit: Shutterstock.com/HQuality

But what if AI could also offer deeper insight into the way people perceive and express emotions? Most of us read emotions through facial expressions and can tell how someone might be feeling from the look on their face. For autistic people, however, reading facial expressions can be difficult, and it is not entirely clear why.

Recently, Kohitij Kar, a researcher in the lab of MIT Professor James DiCarlo, published work in The Journal of Neuroscience that may provide greater insight into how the brain works. The researchers believe that machine learning could be an effective tool for modeling the computational capabilities of the human brain.

The answer may lie in two brain areas across which most of the differences may be spread: the inferior temporal cortex, a region of the primate (including human) brain associated with facial recognition, and the amygdala, which processes emotions and receives part of its input from the inferior temporal cortex.

Replicating the Function of the Human Brain

Kar’s work began with data from Ralph Adolphs at Caltech and Shuo Wang at Washington University in St. Louis. The data came from an experiment in which Wang and Adolphs presented images of faces to two groups: autistic adults and neurotypical controls.

A software program generated the images, which varied across a spectrum from fearful to happy. Participants in each group had to judge whether the faces depicted happiness or not. Compared with the neurotypical control group, Wang and Adolphs found that autistic adults required higher levels of happiness in a face before reporting it as happy.
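The task described above, a yes/no "happy" judgment over faces that vary along a fearful-to-happy spectrum, is often summarized with a psychometric curve. The following is a minimal sketch under stated assumptions, not the analysis used in the study: the logistic form, the function names `p_happy` and `expected_happy_fraction`, the seven morph levels, and the two observers' thresholds are all hypothetical and chosen only for illustration.

```python
import math

def p_happy(morph_level, threshold, slope):
    """Probability of judging a face 'happy' under a logistic psychometric curve.

    morph_level: 0.0 (fully fearful) to 1.0 (fully happy).
    threshold:   morph level at which 'happy' is reported half the time.
    slope:       how sharply judgments switch around the threshold.
    """
    return 1.0 / (1.0 + math.exp(-slope * (morph_level - threshold)))

def expected_happy_fraction(levels, threshold, slope):
    """Average probability of a 'happy' report across a set of morph levels."""
    return sum(p_happy(m, threshold, slope) for m in levels) / len(levels)

# Seven evenly spaced morph levels from fearful (0.0) to happy (1.0); illustrative only.
MORPH_LEVELS = [i / 6 for i in range(7)]

# Two hypothetical observers that differ only in threshold (made-up values).
observer_a = expected_happy_fraction(MORPH_LEVELS, threshold=0.4, slope=10.0)
observer_b = expected_happy_fraction(MORPH_LEVELS, threshold=0.6, slope=10.0)

print(f"observer A reports happy on {observer_a:.2f} of faces")
print(f"observer B reports happy on {observer_b:.2f} of faces")
```

In this toy setup, an observer with a higher threshold needs a more clearly happy face before answering "happy" and so labels fewer faces as happy overall. Differences in threshold or slope between groups are the kind of behavioral signature such an experiment can expose.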

Kar then used the data from Wang and Adolphs’ study to train an artificial intelligence model on the same task. The neural network is composed of units that resemble biological neurons. It processes visual information, recognizes particular cues, and decides which faces appear to be happy.

The findings showed that the judgments of Kar’s model on happy versus non-happy faces matched those of the neurotypical control group more closely than those of the autistic adults. Using these results, Kar was then able to dissect the neural network to see how and where it differed from the autistic adults.

Interestingly, Kar found that the inferior temporal cortex was partly responsible for processing the visual cues used to differentiate and identify emotions in facial expressions.

“These are promising results,” Kar says. Better models of the brain will come along, “but oftentimes in the clinic, we don’t need to wait for the absolute best product.”

In effect, the artificial intelligence could be used in a clinical setting for more efficient and effective diagnosis of autism and to detect certain traits of autism at an earlier stage. Kar explored this by training individual neural networks to match the judgments of autistic adults and of neurotypical controls.

The connection “weights” in the network matched to neurotypical controls were much stronger than those in the network matched to autistic adults; this was evident in both the negative, or “inhibitory,” and the positive, or “excitatory,” weights. This suggests that the brains of autistic adults may have inefficient or noisy sensory neural connections.
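One way to picture the weight comparison described above is to fit a separate linear readout to each set of judgments and compare the magnitudes of the learned weights. The sketch below is only an illustration under loud assumptions: it is not Kar’s model or data. The features, the `TRUE_WEIGHTS` values, the `consistency` parameter, and the helper names are all hypothetical, and the "judgments" are simulated with a seeded random generator.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def fit_readout(features, judgments, steps=500, lr=0.5):
    """Fit logistic-regression weights by plain gradient descent on the log loss."""
    n, d = len(features), len(features[0])
    weights = [0.0] * d
    for _ in range(steps):
        grad = [0.0] * d
        for x, y in zip(features, judgments):
            err = sigmoid(sum(w * xi for w, xi in zip(weights, x))) - y
            for j in range(d):
                grad[j] += err * x[j] / n
        weights = [w - lr * g for w, g in zip(weights, grad)]
    return weights

def simulate_judgments(features, true_weights, consistency, rng):
    """Draw binary 'happy' reports; lower consistency means noisier responses."""
    reports = []
    for x in features:
        drive = consistency * sum(w * xi for w, xi in zip(true_weights, x))
        reports.append(1 if rng.random() < sigmoid(drive) else 0)
    return reports

def strength(weights):
    """Mean absolute weight, a crude summary of connection strength."""
    return sum(abs(w) for w in weights) / len(weights)

rng = random.Random(0)  # fixed seed so the synthetic example is repeatable
TRUE_WEIGHTS = [2.0, -2.0, 1.0]  # made-up mix of excitatory (+) and inhibitory (-) weights
features = [[rng.gauss(0.0, 1.0) for _ in TRUE_WEIGHTS] for _ in range(400)]

# Two simulated observers judging the same stimuli with different response noise.
consistent = simulate_judgments(features, TRUE_WEIGHTS, consistency=1.0, rng=rng)
noisy = simulate_judgments(features, TRUE_WEIGHTS, consistency=0.3, rng=rng)

w_consistent = fit_readout(features, consistent)
w_noisy = fit_readout(features, noisy)

print("mean |weight|, consistent observer:", round(strength(w_consistent), 2))
print("mean |weight|, noisy observer:", round(strength(w_noisy), 2))
```

In this toy setting, the readout fitted to the noisier judgments ends up with smaller positive and negative weights, because noisy responses are less predictable from the features. That is the general intuition behind reading weaker weights as a sign of noisier connections, although the real analysis operates on a deep network’s internal features rather than random vectors.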

To test how well the neural network supported the inferior temporal cortex as the part of the human brain primarily responsible for recognizing facial expressions, Kar used Wang and Adolphs’ data to evaluate the role of the amygdala. Kar concluded that the inferior temporal cortex was the main driver of the amygdala’s function in this task.

Ultimately, the work will help to demonstrate how useful computational models, especially image-processing neural networks, can be in clinical settings for better diagnoses of autism and perhaps other cognitive behaviors.

“Even if these models are very far off from brains, they are falsifiable, rather than people just making up stories… To me, that’s a more powerful version of science.”

Kohitij Kar, A Researcher in the Lab of MIT Professor James DiCarlo

References and Further Reading

Kar, K., (2022) A computational probe into the behavioral and neural markers of atypical facial emotion processing in autism. The Journal of Neuroscience, [online] pp.JN-RM-2229-21. Available at: https://www.jneurosci.org/content/early/2022/05/23/JNEUROSCI.2229-21.2022

Hutson, M., (2022) Artificial neural networks model face processing in autism. [online] MIT News | Massachusetts Institute of Technology. Available at: https://news.mit.edu/2022/artificial-neural-networks-model-face-processing-in-autism-0616
