Thunder is heard shortly after a lightning strike. This is because the material struck by lightning absorbs the light, which is converted into heat, causing the material to expand and produce sound.

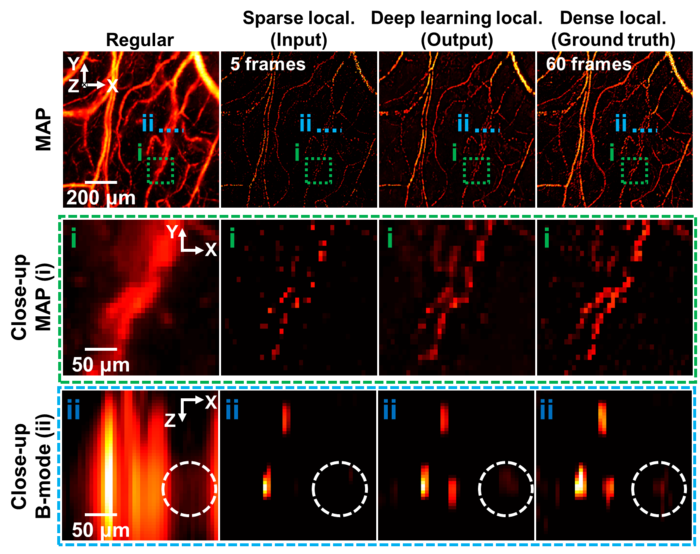

Frame counts of 60 and 5 are used for the dense and sparse localization-based images, respectively. Close-up views of the regions outlined by the green dashed boxes and cross-sectional B-mode images of the region highlighted by the blue dashed lines are also shown. The two adjacent blood vessels are clearly resolved in the deep learning and dense localization-based images, whereas they are not in the regular OR-PAM image in close-up MAP (i). In close-up B-mode (ii), a blood vessel highlighted by the white dashed circles, for which the sparse image has a low signal-to-noise ratio, is well restored in the deep learning localization-based image. Even though the sparse image does not contain the vessels, they are restored in the deep learning localization-based image because the network is based on 3D convolutions, which allow it to draw on adjacent pixels in 3D space. Image Credit: Jongbeom Kim, Gyuwon Kim, Lei Li, Pengfei Zhang, Jin Young Kim, Yeonggeun Kim, Hyung Ham Kim, Lihong V. Wang, Seungchul Lee, Chulhong Kim.

Photoacoustic imaging (PAI), a technique that uses this phenomenon to image the inside of the body, is being investigated as a leading new medical imaging modality for preclinical and clinical applications.

PAI has recently adopted the “localization imaging” method, which repeatedly images the same area to reach extremely high spatial resolution beyond the physical limit, regardless of imaging depth.

However, because numerous frames, each carrying the localization target, must be superimposed to generate an adequately sampled, high-density super-resolution image, this higher spatial resolution comes at the expense of temporal resolution. This has made the method difficult to use in research that needs to capture rapid responses.
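The trade-off described above can be illustrated with a toy model. The sketch below is not the authors' method: it assumes a hypothetical one-dimensional "vessel" of 200 pixels and 20 randomly placed localized targets per frame (both values are made up for illustration), and uses the frame counts of 5 and 60 quoted in the figure caption. It only shows why superimposing more frames samples the vessel more completely.

```python
import random


def simulate_localization(num_frames, targets_per_frame=20, vessel_length=200, seed=0):
    """Superimpose localized targets from many frames onto one 1D vessel.

    Hypothetical toy model: every frame contributes a few localized points at
    random positions, and the final image is the sum over all frames.
    """
    rng = random.Random(seed)
    image = [0] * vessel_length  # accumulated localization counts per pixel
    for _ in range(num_frames):
        for _ in range(targets_per_frame):
            position = rng.randrange(vessel_length)
            image[position] += 1
    return image


def coverage(image):
    """Fraction of vessel pixels that received at least one localization."""
    return sum(1 for count in image if count > 0) / len(image)


# Frame counts taken from the figure caption; everything else is assumed.
sparse = simulate_localization(num_frames=5)
dense = simulate_localization(num_frames=60)

print(f"sparse coverage (5 frames):  {coverage(sparse):.2f}")
print(f"dense coverage (60 frames): {coverage(dense):.2f}")
```

In this toy setting the sparse image leaves much of the vessel unsampled, while the dense image fills in most of it at the cost of acquiring twelve times as many frames.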

Professor Chulhong Kim, Professor Seungchul Lee, Ph.D. candidate Jongbeom Kim, and M.S. Gyuwon Kim of the Departments of Electrical Engineering, Convergence IT Engineering, and Mechanical Engineering at Pohang University of Science and Technology (POSTECH), South Korea, collaborated with Professor Lihong Wang and Postdoctoral Research Associate Lei Li of the California Institute of Technology, USA, to develop an AI-based localization PAI method that addresses the drawback of slow imaging speed. Their paper was published in Light: Science & Applications.

By applying deep learning to increase imaging speed and decrease the number of laser beams on the body, the method addresses slow imaging speed, limited spatial resolution, and laser exposure concurrently.

Using deep learning, the research team reduced the number of images needed for this procedure by more than a factor of ten and increased the imaging speed twelvefold.

Imaging times for localization photoacoustic computed tomography and localization photoacoustic microscopy were reduced from 30 seconds to 2.5 seconds and from 30 minutes to 2.5 minutes, respectively.
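As a quick check on these figures, the short calculation below works out the speed-up implied by the reported imaging times. It also compares the result with the ratio of the 60 and 5 frame counts quoted in the figure caption. Linking those frame counts to the speed-up is an assumption of this sketch, not a statement from the paper, and the function name `speedup` is purely illustrative.

```python
def speedup(before_seconds, after_seconds):
    """Return how many times faster the new acquisition is."""
    return before_seconds / after_seconds


# Imaging times reported in the article, converted to seconds.
tomography_speedup = speedup(30.0, 2.5)                # 30 s -> 2.5 s
microscopy_speedup = speedup(30 * 60.0, 2.5 * 60.0)    # 30 min -> 2.5 min

# Frame counts from the figure caption (dense vs. sparse); assumed comparable.
frame_ratio = 60 / 5

print(tomography_speedup)  # 12.0
print(microscopy_speedup)  # 12.0
print(frame_ratio)         # 12.0
```

Both reported time reductions correspond to the twelvefold speed-up stated in the article.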

This development opens up the possibility of using localization PAI techniques in a variety of preclinical or clinical applications that need both high speed and fine spatial resolution, such as studies of instantaneous drug and hemodynamic responses.

A notable advantage of this technology is that it considerably reduces both laser beam exposure to the living body and imaging time, easing the burden on patients.

Journal Reference:

Kim, J., et al. (2022) Deep learning acceleration of multiscale superresolution localization photoacoustic imaging. Light Sci Appl doi:10.1038/s41377-022-00820-w