Voice and language recognition technologies are continuously evolving, leading to speech dialog systems such as Amazon's Alexa and Apple's Siri. Integrating emotional intelligence into dialog artificial intelligence (AI) systems is an important milestone in the development of these systems.

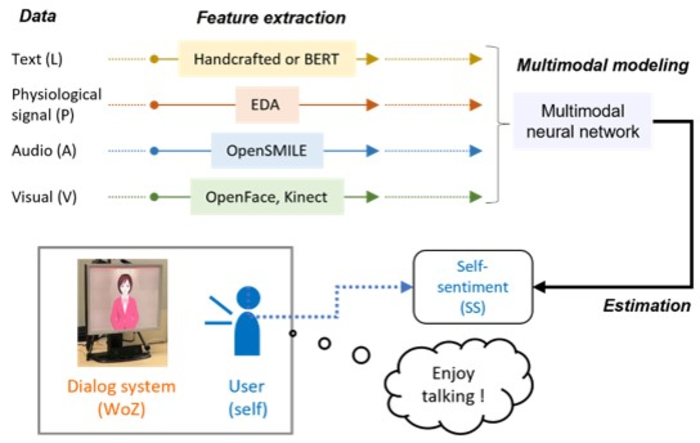

The multimodal neural network is used to predict user sentiment from multimodal features such as text, audio, and visual data. In a new study, researchers from Japan account for physiological signals in sentiment estimation while talking with the system, greatly improving the system’s performance. Image Credit: Shogo Okada from Japan Advanced Institute of Science and Technology.

A system that can identify the user's emotional state, and not only understand their words, could respond more empathetically, making the interaction more engaging for the user.

The gold standard for sentiment analysis in AI dialog systems is "multimodal sentiment analysis," a group of algorithms that automatically evaluate a person's psychological state from their speech, voice color, facial expression and posture. These approaches are critical for human-centered AI systems.

Such methods could lead to an emotionally intelligent AI with human-like characteristics that recognizes the user's feelings and responds appropriately.

However, current emotion estimation approaches consider only observable data and ignore the information contained in non-observable signals, such as physiological signals. These signals are a potential gold mine of emotional information that could greatly improve sentiment estimation.

In a recent study published in the journal IEEE Transactions on Affective Computing, scientists from Japan, led by Associate Professor Shogo Okada of the Japan Advanced Institute of Science and Technology (JAIST) and Prof. Kazunori Komatani of the Institute of Scientific and Industrial Research at Osaka University, added physiological signals to multimodal sentiment analysis for the first time.

Humans are very good at concealing their feelings. The internal emotional state of a user is not always accurately reflected by the content of the dialog, but since it is difficult for a person to consciously control their biological signals, such as heart rate, it may be useful to use these for estimating their emotional state. This could make for an AI with sentiment estimation capabilities that are beyond human.

Shogo Okada, Associate Professor, Japan Advanced Institute of Science and Technology

To evaluate how satisfied users were during a chat, the researchers analyzed 2,468 exchanges with a dialog AI collected from 26 participants. Afterward, each participant was asked to rate how enjoyable or boring the conversation had been.

The researchers used the "Hazumi1911" multimodal dialog dataset, which for the first time combined speech recognition, voice color sensors, facial expression and posture detection with skin potential, a form of physiological response sensing.

On comparing all the separate sources of information, the biological signal information proved to be more effective than voice and facial expression. When we combined the language information with biological signal information to estimate the self-assessed internal state while talking with the system, the AI’s performance became comparable to that of a human.

Shogo Okada, Associate Professor, Japan Advanced Institute of Science and Technology
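The idea of combining language information with biological signal information can be illustrated with early (feature-level) fusion: the feature vectors from each modality are concatenated and passed to a single classifier. The sketch below is a minimal illustration under stated assumptions, not the study's model. The single "text score" and "skin potential" features, the helper names (`fuse`, `train_logistic`, `predict`) and all data values are hypothetical and synthetic, and plain logistic regression stands in for the multimodal neural network used in the research.

```python
import math
from typing import List, Sequence


def fuse(text_feats: Sequence[float], physio_feats: Sequence[float]) -> List[float]:
    """Early (feature-level) fusion: concatenate per-exchange feature vectors."""
    return list(text_feats) + list(physio_feats)


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def score(w: List[float], x: List[float]) -> float:
    """Linear score; the last weight is the bias term (zip stops before it)."""
    return sum(wj * xj for wj, xj in zip(w, x)) + w[-1]


def train_logistic(X: List[List[float]], y: List[int],
                   lr: float = 0.5, epochs: int = 500) -> List[float]:
    """Fit logistic-regression weights by batch gradient descent on the log loss."""
    n = len(X[0])
    w = [0.0] * (n + 1)
    for _ in range(epochs):
        grad = [0.0] * (n + 1)
        for xi, yi in zip(X, y):
            err = sigmoid(score(w, xi)) - yi  # d(log loss)/d(score)
            for j, xj in enumerate(xi):
                grad[j] += err * xj
            grad[-1] += err
        w = [wj - lr * gj / len(X) for wj, gj in zip(w, grad)]
    return w


def predict(w: List[float], x: List[float]) -> int:
    """Return 1 ("enjoyable") or 0 ("boring") for one fused feature vector."""
    return 1 if sigmoid(score(w, x)) >= 0.5 else 0


# Synthetic toy data (NOT from the study): one hypothetical text feature and one
# hypothetical skin-potential feature per exchange; label 1 = enjoyable, 0 = boring.
text = [[0.9], [0.8], [0.2], [0.1]]
physio = [[0.7], [0.9], [0.1], [0.2]]
labels = [1, 1, 0, 0]

X = [fuse(t, p) for t, p in zip(text, physio)]
weights = train_logistic(X, labels)
print([predict(weights, x) for x in X])
```

Early fusion is only one design choice; an alternative is late fusion, where a separate model is trained per modality and their outputs are combined afterward.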

These findings show that detecting physiological signals, which are usually hidden from view, might pave the way to more emotionally intelligent AI-based dialog systems, resulting in more natural and pleasant human-machine interactions.

Furthermore, by recognizing changes in everyday emotional states, emotionally intelligent AI systems might help detect and monitor mental illness. They might also be useful in education, where AI could determine whether a student is enthusiastic about a topic of discussion or bored, allowing teaching methods to be adjusted and educational services to become more effective.

Journal Reference:

Katada, S., et al. (2022) Effects of Physiological Signals in Different Types of Multimodal Sentiment Estimation. IEEE Transactions on Affective Computing. doi.org/10.1109/TAFFC.2022.3155604.