Artificial intelligence (AI) models capable of assessing medical images have the potential to accelerate and enhance the accuracy of cancer diagnoses, but they also could be susceptible to cyberattacks.

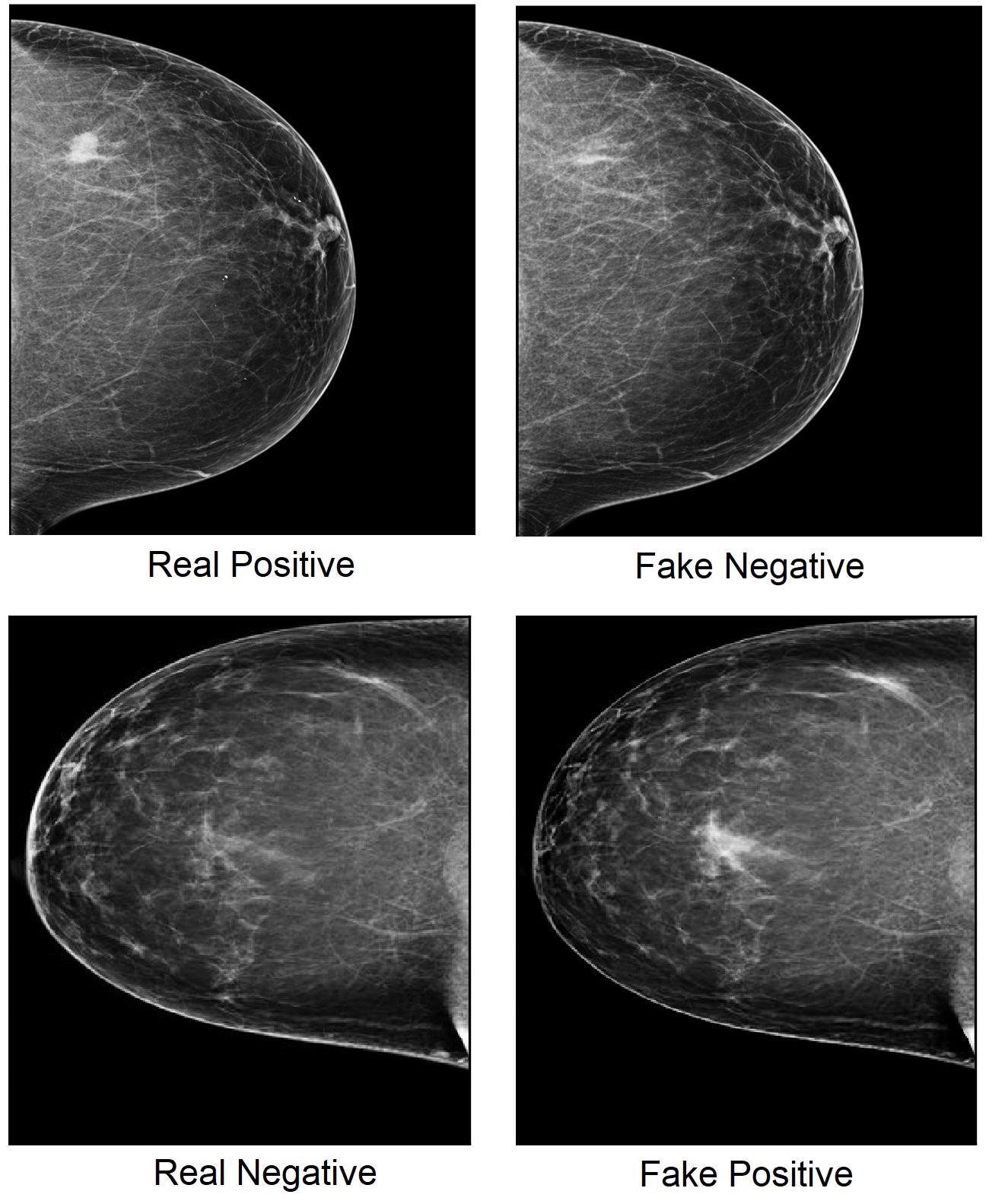

Mammogram images showing real cancer-positive (top left) and cancer-negative (bottom left) cases, with cancerous tissue indicated by white spot. A 'generative adversarial network' program removed cancerous regions from the cancer-positive image, creating a fake negative image (top right) and inserted cancerous regions to the cancer-negative image, creating a fake positive (bottom right). (Image Credit: Q. Zhou et al., Nat. Commun. 2021).

Mammogram images showing real cancer-positive (top left) and cancer-negative (bottom left) cases, with cancerous tissue indicated by white spot. A 'generative adversarial network' program removed cancerous regions from the cancer-positive image, creating a fake negative image (top right) and inserted cancerous regions to the cancer-negative image, creating a fake positive (bottom right). (Image Credit: Q. Zhou et al., Nat. Commun. 2021).

In a new study, University of Pittsburgh scientists replicated an attack that fabricated mammogram images, fooling an AI breast cancer diagnosis model as well as human breast imaging radiologist specialists.

The study, published recently in Nature Communications, draws attention to a possible safety issue for medical AI referred to as “adversarial attacks,” which seek to modify images or other inputs to make models reach false conclusions.

What we want to show with this study is that this type of attack is possible, and it could lead AI models to make the wrong diagnosis—which is a big patient safety issue. By understanding how AI models behave under adversarial attacks in medical contexts, we can start thinking about ways to make these models safer and more robust.

Shandong Wu, Ph.D., Senior Study Author and Associate Professor of Radiology, Biomedical Informatics and Bioengineering, University of Pittsburgh

AI-based image recognition technology for cancer diagnosis has progressed rapidly in the last few years, and several breast cancer models have gained the approval of the U.S. Food and Drug Administration. According to Wu, these tools can quickly screen mammogram images and detect those most probable to be cancerous, helping radiologists be more accurate and efficient.

But these technologies are also at risk from cyber threats, such as adversarial attacks. Possible motivations for such attacks include insurance fraud from health care providers seeking to increase revenue or companies trying to alter clinical trial results in their favor.

Adversarial attacks on medical images range from minute manipulations that alter the AI’s conclusion but are unnoticeable to the human eye, to more advanced versions that target sensitive contents of the image, such as cancerous areas — rendering them more likely to deceive a human.

To comprehend how AI would act under this more multifaceted type of adversarial attack, Wu and his team employed mammogram images to build a model for identifying breast cancer. First, the scientists taught a deep learning algorithm to differentiate cancerous and benign cases with an accuracy of more than 80%.

Next, they created a so-called “generative adversarial network” (GAN) — a computer program that produces false images by introducing or taking away cancerous regions from negative or positive images, respectively, and then they verified how the model categorized these adversarial images.

Out of the 44 positive images made to appear negative by the GAN, 42 were categorized as negative by the model, and out of 319 negative images made to appear positive, 209 were categorized as positive. Overall, the model was deceived by 69.1% of the false images.

In the second segment of the experiment, the scientists asked five human radiologists to differentiate whether mammogram images were fake or real. The radiologists accurately detected the images’ authenticity with a precision of between 29% and 71%, depending on the individual.

Certain fake images that fool AI may be easily spotted by radiologists. However, many of the adversarial images in this study not only fooled the model, but they also fooled experienced human readers. Such attacks could potentially be very harmful to patients if they lead to an incorrect cancer diagnosis.

Shandong Wu, Ph.D., Senior Study Author and Associate Professor of Radiology, Biomedical Informatics and Bioengineering, University of Pittsburgh

Wu is also the director of the Intelligent Computing for Clinical Imaging Lab and the Pittsburgh Center for AI Innovation in Medical Imaging.

According to Wu, the subsequent step is creating ways to strengthen the AI models against adversarial attacks.

One direction that we are exploring is ‘adversarial training’ for the AI model. This involves pre-generating adversarial images and teaching the model that these images are manipulated.

Shandong Wu, Ph.D., Senior Study Author and Associate Professor of Radiology, Biomedical Informatics and Bioengineering, University of Pittsburgh

With the possibility of AI being incorporated into the medical infrastructure, Wu said that cybersecurity education also is imperative to guarantee that hospital technology systems and personnel are aware of possible risks and have technical solutions to safeguard patient data and halt malware.

We hope that this research gets people thinking about medical AI model safety and what we can do to defend against potential attacks, ensuring AI systems function safely to improve patient care.

Shandong Wu, Ph.D., Senior Study Author and Associate Professor of Radiology, Biomedical Informatics and Bioengineering, University of Pittsburgh

Other authors involved in this study were Qianwei Zhou, Ph.D., of Pitt and Zhejiang University of Technology in China; Margarita Zuley, M.D., Bronwyn Nair, M.D., Adrienne Vargo, M.D., Suzanne Ghannam, M.D., and Dooman Arefan, Ph.D., all of Pitt and UPMC; Yuan Guo, M.D., of Pitt and Guangzhou First People’s Hospital in China; Lu Yang, M.D., of Pitt and Chongqing University Cancer Hospital in China.

This study received support from the National Institutes of Health (NIH)/National Cancer Institute (grant #1R01CA218405), the National Science Foundation (NSF) (grant #2115082), the NSF/NIH joint program (grant #1R01EB032896) and the National Natural Science Foundation of China (grant #61802347).

Journal Reference:

Zhou, Q., et al. (2021) A machine and human reader study on AI diagnosis model safety under attacks of adversarial images. Nature Communications. doi.org/10.1038/s41467-021-27577-x.