A quick and precise reading of an X-ray or other medical image can be crucial to a patient’s health and could even save a life. Such an evaluation, however, depends on the availability of a skilled radiologist, and a quick response is not always possible.

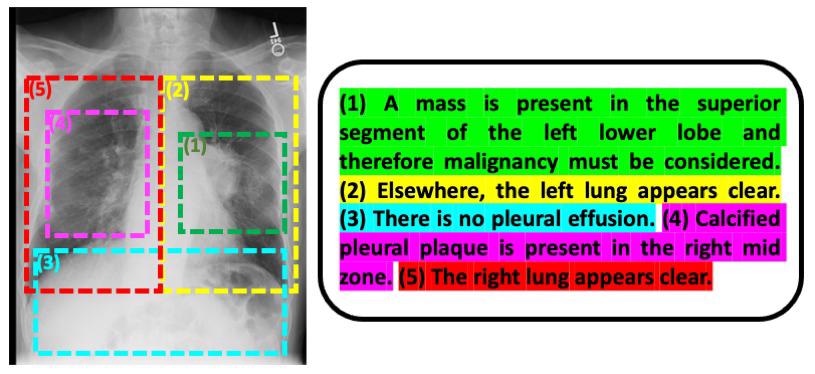

An example image-text pair of a chest radiograph and its associated radiology report. Image Credit: MIT CSAIL.


Ruizhi “Ray” Liao, a postdoc and recent PhD graduate at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL), says, “We want to train machines that are capable of reproducing what radiologists do every day.”

Liao is the first author of a new study, co-authored with other researchers at the Massachusetts Institute of Technology (MIT) and Boston-area hospitals, that is being presented this fall at MICCAI 2021, an international conference on medical image computing.

Although the idea of using computers to interpret images is not new, the MIT-led group is drawing on an underused resource to improve the interpretive capabilities of machine learning algorithms: the vast body of radiology reports that radiologists write to accompany medical images in routine clinical practice.

To further increase the effectiveness of their method, the team also uses a concept from information theory called mutual information, a statistical measure of the interdependence of two different variables.
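To make the definition concrete, here is a minimal sketch that computes the mutual information of two discrete variables from their joint probability table. The two toy tables are illustrative only (think of a hypothetical two-level severity grade and a hypothetical yes/no "report mentions edema" flag). They are not data from the study.

```python
import numpy as np

def mutual_information(joint: np.ndarray) -> float:
    """Mutual information I(X;Y) in bits for a discrete joint distribution.

    joint[i, j] = P(X = i, Y = j); entries must be non-negative and sum to 1.
    """
    joint = np.asarray(joint, dtype=float)
    px = joint.sum(axis=1, keepdims=True)  # marginal P(X), shape (n, 1)
    py = joint.sum(axis=0, keepdims=True)  # marginal P(Y), shape (1, m)
    mask = joint > 0                        # terms with P(x, y) = 0 contribute 0
    ratio = joint[mask] / (px @ py)[mask]   # P(x, y) / (P(x) P(y))
    return float(np.sum(joint[mask] * np.log2(ratio)))

# Independent variables: knowing one says nothing about the other.
independent = np.array([[0.25, 0.25],
                        [0.25, 0.25]])
# Perfectly dependent variables: each determines the other exactly.
dependent = np.array([[0.5, 0.0],
                      [0.0, 0.5]])

print(mutual_information(independent))  # 0.0
print(mutual_information(dependent))    # 1.0
```

The measure is zero exactly when the variables are independent and grows as each becomes more informative about the other, which is the property the researchers exploit.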

The approach works as follows. First, a neural network is trained to determine the extent of a disease, such as pulmonary edema, by being shown numerous X-ray images of patients’ lungs along with a doctor’s rating of the severity of each case. That information is encoded in a collection of numbers.

A separate neural network does the same for text, representing its information as a different collection of numbers. A third neural network then integrates the image and text information in a coordinated way that maximizes the mutual information between the two datasets.
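The three-network arrangement can be sketched in a few dozen lines. This is a simplified sketch under several assumptions and is not the study's implementation. The image and text encoders are hypothetical fixed random linear maps that stand in for trained networks. The "third network" is assumed to be a bilinear critic. Mutual information is estimated with the InfoNCE lower bound, a common estimator swapped in here for illustration and not necessarily the one the authors used. Only the critic is trained, using hand-derived gradients, whereas a full system would also train both encoders. The paired data are synthetic.

```python
import numpy as np

rng = np.random.default_rng(0)

def encode(x: np.ndarray, w: np.ndarray) -> np.ndarray:
    """Hypothetical stand-in for an encoder network: linear map + L2 normalization."""
    h = x @ w
    return h / np.linalg.norm(h, axis=1, keepdims=True)

def infonce(u: np.ndarray, v: np.ndarray, a: np.ndarray) -> tuple[float, np.ndarray]:
    """InfoNCE lower bound on I(U;V) in nats, plus its gradient w.r.t. the critic matrix a.

    Row i of u and row i of v form a matched image-text pair; the other rows of v
    act as mismatched (negative) texts for image i.
    """
    n = u.shape[0]
    s = u @ a @ v.T                                  # s[i, j]: critic score for image i, text j
    s_max = s.max(axis=1, keepdims=True)
    log_z = s_max[:, 0] + np.log(np.exp(s - s_max).sum(axis=1))  # stable row-wise logsumexp
    bound = np.log(n) + np.mean(np.diag(s) - log_z)
    p = np.exp(s - log_z[:, None])                   # row-wise softmax over candidate texts
    grad_s = (np.eye(n) - p) / n                     # d(bound) / d(s)
    grad_a = u.T @ grad_s @ v                        # chain rule through s = u a v^T
    return float(bound), grad_a

# Synthetic paired data (hypothetical): a shared 4-dim latent drives both modalities.
n, latent = 64, 4
z = rng.normal(size=(n, latent))
images = z @ rng.normal(size=(latent, 16)) + 0.1 * rng.normal(size=(n, 16))
texts = z @ rng.normal(size=(latent, 12)) + 0.1 * rng.normal(size=(n, 12))

u = encode(images, rng.normal(size=(16, 8)))   # image-side embeddings ("first network")
v = encode(texts, rng.normal(size=(12, 8)))    # text-side embeddings ("second network")

a = np.zeros((8, 8))                            # bilinear critic ("third network")
for _ in range(500):
    _, grad = infonce(u, v, a)
    a += 0.02 * grad                            # gradient ascent on the MI lower bound

paired, _ = infonce(u, v, a)
shuffled, _ = infonce(u, np.roll(v, 1, axis=0), a)  # break the true image-text pairing
print(f"paired: {paired:.3f} nats, shuffled: {shuffled:.3f} nats, cap log(n): {np.log(n):.3f}")
```

InfoNCE can never exceed log(n) for a batch of n pairs. Once the critic is trained, the estimate should fall sharply when images are matched with the wrong texts. The embeddings are unit-normalized so that a fixed step size of 0.02 keeps the ascent stable.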

When the mutual information between images and text is high, that means that images are highly predictive of the text and the text is highly predictive of the images.

Polina Golland, Professor and Principal Investigator at Computer Science and Artificial Intelligence Laboratory, Massachusetts Institute of Technology
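Golland's description follows from a standard identity of information theory. Write X for the image representation and Y for the text representation (notation introduced here for illustration). Mutual information equals the reduction in uncertainty (entropy H) about one variable once the other is known. Because the identity is symmetric, high mutual information means that each variable is predictive of the other:

```latex
I(X;Y) = \sum_{x,y} p(x,y)\,\log\frac{p(x,y)}{p(x)\,p(y)}
       = H(X) - H(X \mid Y)
       = H(Y) - H(Y \mid X)
```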

Liao, Golland, and their collaborators have also introduced an innovation that offers several benefits. Instead of working from entire images and entire radiology reports, they break the reports down into individual sentences and pair each sentence with the portions of the images it refers to.

Doing things this way helps estimate the severity of the disease more accurately than if you view the whole image and whole report. And because the model is examining smaller pieces of data, it can learn more readily and has more samples to train on.

Polina Golland, Professor and Principal Investigator at Computer Science and Artificial Intelligence Laboratory, Massachusetts Institute of Technology
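The sentence-and-region idea can be illustrated with a small sketch. Everything in it is a hypothetical simplification and not the authors' pipeline. The sentence splitter is a naive regular expression. The text encoder is a hashed bag of words. Image regions are plain non-overlapping pixel patches. Each sentence is matched to the regions most similar to it through softmax attention. The report and image are toy inputs. The point is the bookkeeping: one image-report pair becomes several sentence-region pairs, each of which could then be passed to a mutual-information estimator.

```python
import re
import zlib

import numpy as np

def split_sentences(report: str) -> list[str]:
    """Naive splitter (hypothetical): break after '.', '!' or '?' followed by whitespace."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", report.strip()) if s.strip()]

def embed_sentence(sentence: str, dim: int) -> np.ndarray:
    """Hypothetical text encoder: hashed bag of words, L2-normalized."""
    vec = np.zeros(dim)
    for word in re.findall(r"[a-z]+", sentence.lower()):
        vec[zlib.crc32(word.encode()) % dim] += 1.0   # deterministic hash bucket per word
    return vec / max(np.linalg.norm(vec), 1e-12)

def image_regions(image: np.ndarray, patch: int) -> np.ndarray:
    """Cut a 2-D image into non-overlapping patch x patch regions, one flattened row each."""
    h, w = image.shape
    h, w = h - h % patch, w - w % patch                # drop ragged borders
    tiles = image[:h, :w].reshape(h // patch, patch, w // patch, patch)
    return tiles.transpose(0, 2, 1, 3).reshape(-1, patch * patch)

def attend(sentence_vec: np.ndarray, region_vecs: np.ndarray) -> np.ndarray:
    """Softmax attention: pool the regions most similar to one sentence into a single vector."""
    scores = region_vecs @ sentence_vec
    weights = np.exp(scores - scores.max())
    weights /= weights.sum()
    return weights @ region_vecs

report = "Mild pulmonary edema. No pleural effusion. Heart size is normal."  # toy report
image = np.random.default_rng(0).random((8, 8))                            # toy "radiograph"
regions = image_regions(image, patch=4)                                     # 4 regions of 16 pixels

# One (image, report) pair becomes one (sentence, attended-region) pair per sentence.
pairs = [(s, attend(embed_sentence(s, regions.shape[1]), regions))
         for s in split_sentences(report)]
print(len(pairs), "local training pairs from one image-report pair")
```

In a real system both encoders would be learned, and the attention would let each sentence focus on the anatomy it describes. This sketch shows only how breaking reports apart multiplies the number of training samples.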

Although Liao finds the computer science aspects of this project fascinating, his main motivation is “to develop technology that is clinically meaningful and applicable to the real world.”

To that end, a pilot program is under way at Beth Israel Deaconess Medical Center. It aims to see how MIT’s machine learning model could influence the decisions of doctors who manage heart failure patients, particularly in the emergency room, where speed is essential.

Golland believes the model could have very broad applicability.

It could be used for any kind of imagery and associated text — inside or outside the medical realm. This general approach, moreover, could be applied beyond images and text, which is exciting to think about.

Polina Golland, Professor and Principal Investigator at Computer Science and Artificial Intelligence Laboratory, Massachusetts Institute of Technology

The paper was written by Liao along with MIT CSAIL postdoc Daniel Moyer and Golland; Miriam Cha and Keegan Quigley from MIT Lincoln Laboratory; William M. Wells from Harvard Medical School and MIT CSAIL; and clinical collaborators Seth Berkowitz and Steven Horng from Beth Israel Deaconess Medical Center.

The study was financially supported by the NIH NIBIB Neuroimaging Analysis Center, Wistron, MIT-IBM Watson AI Lab, MIT Deshpande Center for Technological Innovation, MIT Abdul Latif Jameel Clinic for Machine Learning in Health (J-Clinic) and MIT Lincoln Lab.