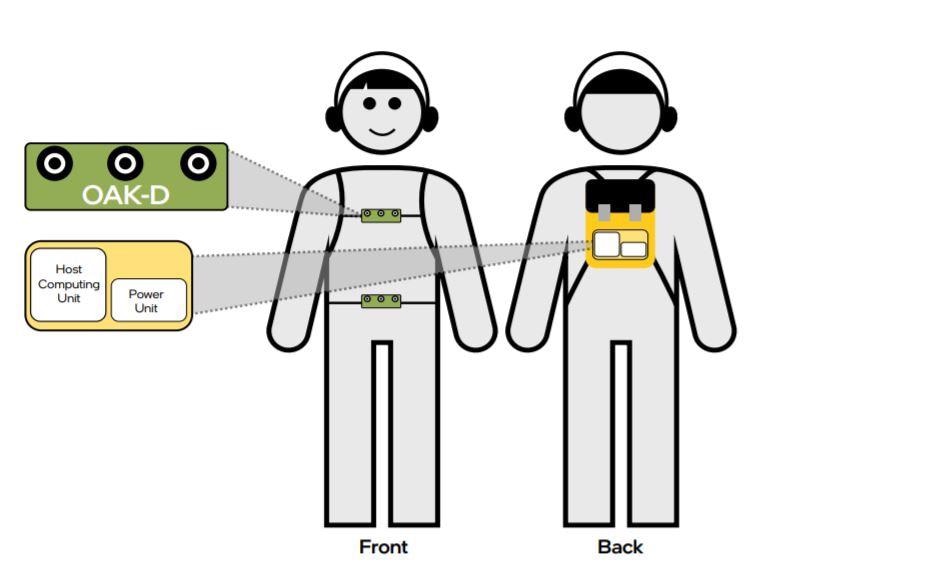

The system design. Image Credit: Intel

A newly designed backpack uses AI to help visually impaired wearers navigate the world around them.

For visually impaired people, navigating the everyday world, and public spaces in particular, can be a constant and profound challenge. It can turn even mundane tasks that many of us take for granted into a real struggle.

With the World Health Organisation (WHO) estimating that there are 285 million visually impaired people worldwide, this is a major problem that needs to be tackled.

Help could be at hand, however, in the form of an innovative new backpack designed by University of Georgia researcher Jagadish Mahendran and his team.

Inspired by a conversation with a visually impaired friend about the challenges they faced, Mahendran has developed a system that can detect obstacles and hazards such as traffic lights, crosswalks, moving objects, changes in elevation and more.

The navigation system consists of a backpack holding a lightweight computer unit and GPS, a vest jacket fitted with a 4K camera, and a fanny pack that conceals a small battery capable of powering the system for 8 hours.

To build the system, Mahendran, an AI designer, used the OpenCV AI Kit with Depth (OAK-D), which is powered by Intel.

Last year when I met up with a visually impaired friend, I was struck by the irony that while I have been teaching robots to see, there are many people who cannot see and need help. This motivated me to build the visual assistance system.

Jagadish K. Mahendran, Computer Vision/Artificial Intelligence Engineer, University of Georgia

Mahendran and his team’s system won the 2020 OpenCV Spatial AI competition and is detailed in a case study¹ published by Intel.

Intel AI-Powered Backpack Helps Visually Impaired Navigate World

Video Credit: YouTube/Intel Newsroom

A Navigation System With Depth

Mahendran’s system is not the first device designed to help visually impaired people navigate their surroundings. Other options do exist, including GPS-enabled and voice-activated smartphone apps, camera-equipped walking canes, and even the humble guide dog.

All of these options are, however, fairly limited in how much they can help visually impaired people, with the camera-based systems in particular lacking depth perception.

Mahendran’s backpack navigation system comes with a Bluetooth-enabled earpiece through which the wearer can issue voice commands and queries. The system responds to this vocal input and feeds information about the surrounding environment and obstacles back to the earpiece.

Depth is one thing that the system certainly doesn’t lack. It runs advanced neural networks on an Intel Movidius VPU, using the Intel® Distribution of OpenVINO™ toolkit, to process the information streaming from the vest-mounted Luxonis OAK-D spatial AI camera. This provides advanced computer vision, a real-time depth map and color information.
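
To illustrate why a depth map matters, here is a minimal sketch of how a detected object's distance could be estimated from it. It is not code from Mahendran's system. It assumes depth arrives as a 2D grid of millimetre readings in which 0 marks pixels with no valid stereo reading, and that an object detector has already produced a bounding box. The function names and the 1.5 m warning threshold are hypothetical.

```python
from statistics import median
from typing import List, Optional, Tuple

# Hypothetical bounding box: (x_min, y_min, x_max, y_max) in pixels,
# with the max bounds exclusive (Python slice convention).
Box = Tuple[int, int, int, int]


def object_distance_mm(depth: List[List[int]], box: Box) -> Optional[float]:
    """Return the median depth (mm) inside `box`, ignoring zero (invalid) pixels.

    Assumes `depth` is a row-major grid of millimetre readings where 0 means
    "no valid measurement". Returns None if the box holds no valid pixel.
    """
    x_min, y_min, x_max, y_max = box
    readings = [
        d
        for row in depth[y_min:y_max]
        for d in row[x_min:x_max]
        if d > 0
    ]
    if not readings:
        return None
    # The median resists a few stray background or noisy pixels in the box.
    return median(readings)


def is_close(depth: List[List[int]], box: Box, threshold_mm: int = 1500) -> bool:
    """True if the object in `box` is nearer than the (hypothetical) threshold."""
    dist = object_distance_mm(depth, box)
    return dist is not None and dist < threshold_mm


if __name__ == "__main__":
    # Toy 4x4 depth map: an object about 1.2 m away in the top-left 2x2 region,
    # with one invalid (0) pixel and a background about 4 m away.
    depth_map = [
        [1200, 1210, 4000, 4000],
        [1190,    0, 4000, 4000],
        [4000, 4000, 4000, 4000],
        [4000, 4000, 4000, 4000],
    ]
    print(object_distance_mm(depth_map, (0, 0, 2, 2)))  # 1200
    print(is_close(depth_map, (0, 0, 2, 2)))            # True
```

A camera without depth could detect the same object but could not say whether it is one step or ten steps away, which is the limitation of the camera-equipped canes mentioned above.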

Navigating the Everyday World with Audio Feedback

The case study lays out the benefits this interface should offer visually impaired users, including but not limited to:

• Allowing users to walk more safely on a city sidewalk.

• Giving the wearer information to better avoid obstacles, such as trash barrels, low-hanging branches, and other pedestrians.

• Providing information regarding the location of traffic and street signs.

• Allowing the user to stop at, and cross, crosswalks safely.

• Warning wearers of elevation changes, such as a step up to or down from a curb.

• Letting users instruct the system to perform actions, such as describing the current scene.

“As the user moves through their environment, the system audibly conveys information about common obstacles including signs, tree branches, and pedestrians. It also warns of upcoming crosswalks, curbs, staircases, and entryways,” says Mahendran.

The researcher goes even further, describing what a typical user could expect to experience on an everyday journey whilst wearing the system.

If the user is walking down the sidewalk and is approaching a trash bin, the system can issue a verbal warning of ‘left,’ ‘right,’ or ‘center,’ indicating the relative position of the receptacle. When the user is approaching a corner, the system will describe what’s ahead by saying ‘Stop sign’ or ‘Enabling crosswalk,’ or both.

Jagadish K. Mahendran, Computer Vision/Artificial Intelligence Engineer, University of Georgia

“Similarly, if the user is approaching an area where bushes or branches overhang the sidewalk, the system will issue a notice such as ‘Top, front,’ warning of something in the way,” Mahendran added.
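
Cues like ‘left,’ ‘right,’ ‘center’ and ‘Top, front’ could be produced by dividing the camera frame into zones. The sketch below shows one simple way to do that. It is a hypothetical illustration, not the team’s code: it assumes equal horizontal thirds judged by the box’s center, and treats any box confined to the top quarter of the image as overhanging. The function name, zone boundaries and wording are all assumptions.

```python
from typing import Tuple

# Hypothetical bounding box: (x_min, y_min, x_max, y_max) in pixels.
Box = Tuple[float, float, float, float]


def position_cue(box: Box, frame_width: int, frame_height: int,
                 top_fraction: float = 0.25) -> str:
    """Turn a detection's bounding box into a short spoken-style cue.

    Assumed rules: the horizontal center of the box picks left/center/right
    thirds of the frame. A box lying entirely in the top `top_fraction` of
    the image is treated as overhanging and gets a 'Top, ...' prefix, with
    'front' used for the center zone. This is a crude heuristic; a real
    system would also use depth and camera tilt to judge height.
    """
    x_min, _y_min, x_max, y_max = box
    x_center = (x_min + x_max) / 2

    if x_center < frame_width / 3:
        horizontal = "left"
    elif x_center > 2 * frame_width / 3:
        horizontal = "right"
    else:
        horizontal = "center"

    # Object confined to the upper band of the image: likely at head height.
    if y_max <= frame_height * top_fraction:
        return "Top, " + ("front" if horizontal == "center" else horizontal)
    return horizontal
```

For a 1280 x 720 frame, `position_cue((100, 300, 200, 400), 1280, 720)` gives `"left"`, while a branch detected at `(560, 20, 720, 150)` gives `"Top, front"`. Thirds are simply the easiest split to explain aloud; finer zones would give more precise cues but longer messages.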

Whilst the team’s system offers depth perception that many existing aids lack, the researchers believe this is just the tip of the iceberg of what such technology could ultimately achieve.

Next Steps for the Team

A team called Mira has been formed: a group of volunteers from various backgrounds, including people who are visually impaired. The project is growing, with a mission to provide a free, open-source, AI-based visual assistance system. The team is currently raising funds for its initial phase of testing.

References

1. ‘A Vision System for the Visually Impaired,’ Intel, https://www.intel.com/content/www/us/en/homepage.html

Disclaimer: The views expressed here are those of the author expressed in their private capacity and do not necessarily represent the views of AZoM.com Limited T/A AZoNetwork the owner and operator of this website. This disclaimer forms part of the Terms and conditions of use of this website.