Jul 16 2020

For humans, picking up a can of soft drink is simple. For robots, however, it can be a complex task: they have to locate the object, determine its shape, work out the right amount of force to use, and grasp the object without letting it slip.

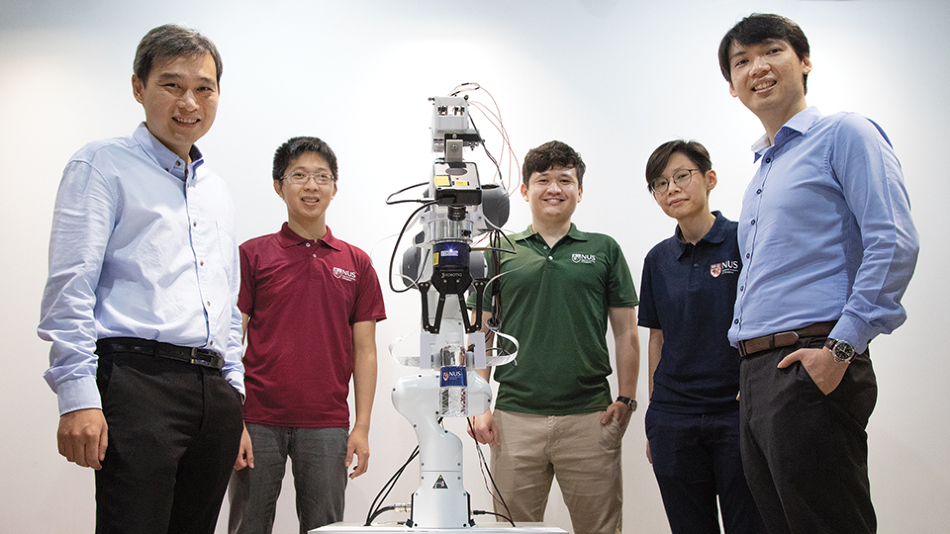

The NUS research team behind the novel robotic system integrated with event-driven artificial skin and vision sensors was led by Assistant Professor Harold Soh (left) and Assistant Professor Benjamin Tee (right). With them are team members (from second from left to right) Mr Sng Weicong, Mr Tasbolat Taunyazov, and Dr See Hian Hian. Image Credit: National University of Singapore.

Most existing robots rely mainly on visual processing, which limits their capabilities. To carry out more complex tasks, robots need an exceptional sense of touch and the ability to process sensory information rapidly and intelligently.

Recently, a group of materials engineers and computer scientists from NUS developed and demonstrated a new approach to making robots smarter. They created a sensory-integrated artificial brain system that mimics biological neural networks and can run on a power-efficient neuromorphic processor such as Intel’s Loihi chip.

This system combines artificial skin and vision sensors, enabling robots to draw accurate conclusions in real time about the objects they grasp, based on the data captured by the touch and vision sensors.

The field of robotic manipulation has made great progress in recent years. However, fusing both vision and tactile information to provide a highly precise response in milliseconds remains a technology challenge. Our recent work combines our ultra-fast electronic skins and nervous systems with the latest innovations in vision sensing and AI for robots so that they can become smarter and more intuitive in physical interactions.

Benjamin Tee, Assistant Professor, Department of Materials Science and Engineering, National University of Singapore

Tee is the co-lead author of this study along with Assistant Professor Harold Soh from NUS Computer Science.

The results of this multidisciplinary study were presented at the renowned conference Robotics: Science and Systems in July 2020.

Human-Like Sense of Touch for Robots

Robots with a human-like sense of touch could offer considerably improved functionality and could even be used for new applications. For instance, in a factory setting, robotic arms fitted with electronic skins could easily adapt to different items, using tactile sensing to recognize and grasp even unfamiliar objects with the right amount of pressure to prevent slipping.

The new robotic system developed by the NUS team is equipped with an advanced artificial skin called Asynchronous Coded Electronic Skin (ACES). Created in 2019 by Asst Prof. Tee and his colleagues, this sensor detects touches more than 1,000 times faster than the human sensory nervous system. It can also identify the texture, shape, and hardness of objects 10 times faster than the blink of an eye.

Making an ultra-fast artificial skin sensor solves about half the puzzle of making robots smarter. They also need an artificial brain that can ultimately achieve perception and learning as another critical piece in the puzzle.

Benjamin Tee, Assistant Professor, Department of Materials Science and Engineering, National University of Singapore

Asst Prof. Tee also belongs to the NUS Institute for Health Innovation & Technology.

A Human-Like Brain for Robots

To achieve new levels of innovation in robotic perception, the NUS researchers investigated neuromorphic technology—an area of computing that involves mimicking the neural structure and operation of the human brain—to process sensory data obtained from the artificial skin.

Both Asst Prof. Tee and Asst Prof. Soh are members of the Intel Neuromorphic Research Community (INRC). Therefore, it was a natural choice to employ Intel’s Loihi neuromorphic research chip for the newly developed robotic system.

In preliminary experiments, the artificial skin was fitted to a robotic hand that was used to read Braille. The tactile data was sent through the cloud to Loihi, which translated the micro bumps felt by the hand into semantic meaning. Loihi achieved more than 92% accuracy in classifying the Braille letters while consuming 20 times less power than a standard microprocessor.

Asst Prof. Soh’s group enhanced the perception capabilities of the new robotic system by combining touch and vision data in a spiking neural network. In their experiments, they tasked a robot fitted with both vision sensors and artificial skin with classifying different opaque containers holding varying amounts of liquid. They also tested the system’s ability to detect rotational slip, which is crucial for stable grasping.
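To make the idea of combining touch and vision in a spiking neural network more concrete, here is a minimal sketch of a single leaky integrate-and-fire neuron, a common textbook spiking-neuron model, driven by two input spike trains. It is an illustration only, not the NUS team's network or Loihi code; the function name, weights, leak factor, and threshold are all assumed values chosen for the example.

```python
def lif_neuron(touch_spikes, vision_spikes, w_touch=0.6, w_vision=0.6,
               leak=0.9, threshold=1.0):
    """Simulate one leaky integrate-and-fire neuron over discrete time steps.

    All parameter values are illustrative assumptions, not taken from the study.
    Returns a list of 0/1 output spikes, one per time step.
    """
    potential = 0.0
    output = []
    for t_spike, v_spike in zip(touch_spikes, vision_spikes):
        # Keep a fraction of the stored potential, then add the weighted inputs.
        potential = leak * potential + w_touch * t_spike + w_vision * v_spike
        if potential >= threshold:
            output.append(1)
            potential = 0.0  # reset after firing
        else:
            output.append(0)
    return output


# Hypothetical spike trains from a touch sensor and a vision sensor.
touch = [1, 0, 0, 1, 0, 1]
vision = [0, 0, 1, 1, 0, 0]
print(lif_neuron(touch, vision))  # → [0, 0, 1, 1, 0, 0]
```

With these assumed weights, a single isolated spike is not enough to make the neuron fire; it fires once enough input from either or both streams has accumulated before leaking away.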

In both tests, the spiking neural network that used both touch and vision data was able to classify objects and detect object slippage. Its classification was 10% more accurate than that of a system using vision alone.

Using a technique developed by Asst Prof. Soh’s team, the neural networks could also classify the sensory data while it was still being gathered, in contrast to the traditional approach in which data is classified only after it has been fully collected.
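The difference between classifying data as it arrives and waiting for the full recording can be sketched as follows. This is a deliberately simple stand-in, assuming one output neuron per class and a running spike count as the evidence; it is not the technique developed by Asst Prof. Soh's team, and the function name and input data are hypothetical.

```python
def classify_as_data_arrives(spike_stream, num_classes):
    """Yield a class prediction after every time step of incoming data.

    spike_stream: iterable of lists, each holding one 0/1 spike per class
    for a single time step (an assumed, simplified output format).
    """
    counts = [0] * num_classes
    for spikes in spike_stream:
        for c in range(num_classes):
            counts[c] += spikes[c]
        # Current best guess: the class with the most spikes so far
        # (ties resolve to the lowest class index).
        yield max(range(num_classes), key=lambda c: counts[c])


# Hypothetical output spikes for three classes over four time steps.
stream = [[1, 0, 0], [0, 1, 0], [0, 1, 0], [0, 1, 1]]
print(list(classify_as_data_arrives(stream, num_classes=3)))  # → [0, 0, 1, 1]
```

A prediction is available after the first time step and is revised as more evidence comes in, whereas a batch classifier would return only the final value.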

The team also demonstrated the efficiency of neuromorphic technology: Loihi processed the sensory data 21% faster than a top-performing graphics processing unit (GPU) while using about 45 times less power.
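Because energy is power multiplied by time, the two figures above can be combined into a rough estimate of energy per task. The short calculation below is back-of-the-envelope arithmetic, not a result reported by the team. The phrase "21% faster" can be read in two ways, so both readings are shown as explicit assumptions; the function name is hypothetical.

```python
def relative_energy(time_ratio, power_ratio):
    """Energy = power x time, so relative energy is the product of the two ratios."""
    return time_ratio * power_ratio


# Assumed reading A: "21% faster" means the task takes 21% less time.
reading_a = relative_energy(time_ratio=0.79, power_ratio=1 / 45)

# Assumed reading B: "21% faster" means 1.21 times the processing speed.
reading_b = relative_energy(time_ratio=1 / 1.21, power_ratio=1 / 45)

print(f"Reading A: about {1 / reading_a:.0f}x less energy per task")
print(f"Reading B: about {1 / reading_b:.0f}x less energy per task")
```

Under either reading, the estimated energy per task comes out at roughly 55 times lower than on the GPU, because the modest speed gain compounds with the much larger power saving.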

We’re excited by these results. They show that a neuromorphic system is a promising piece of the puzzle for combining multiple sensors to improve robot perception. It’s a step towards building power-efficient and trustworthy robots that can respond quickly and appropriately in unexpected situations.

Harold Soh, Assistant Professor, Department of Computer Science, National University of Singapore

“This research from the National University of Singapore provides a compelling glimpse to the future of robotics where information is both sensed and processed in an event-driven manner combining multiple modalities,” noted Mr Mike Davies, Director of Intel’s Neuromorphic Computing Lab.

Mr Davies added, “The work adds to a growing body of results showing that neuromorphic computing can deliver significant gains in latency and power consumption once the entire system is re-engineered in an event-based paradigm spanning sensors, data formats, algorithms, and hardware architecture.”

This study was funded by the National Robotics R&D Programme Office (NR2PO), a body that supports the robotics ecosystem in Singapore by funding research and development (R&D) to improve the readiness of robotics technologies and solutions.

The main considerations for NR2PO’s R&D investments include the prospects for impactful applications in the public sector, as well as the ability to develop differentiated capabilities for the industry.

Next Steps

As a next step, Asst Prof. Tee and Asst Prof. Soh intend to develop their robotic system further for applications in the food manufacturing and logistics industries, where demand for robotic automation is high, especially in the post-COVID era.
