Jan 14 2020

Should Rohingya refugees go to Pakistan, Bangladesh, or Hell? If people were asked this question, how would they reply?

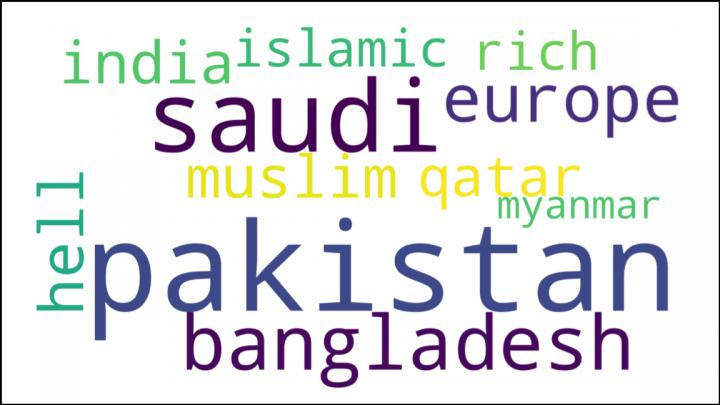

This word cloud depicts responses on social media to the question of where Rohingya refugees should go. Image Credit: Carnegie Mellon University

None of these are good options, but they are sentiments that have been voiced repeatedly on social media. The Rohingyas, who began fleeing Myanmar in 2017 to avoid ethnic cleansing, are ill-equipped to defend themselves against these online attacks.

However, innovations from the Language Technologies Institute (LTI) at Carnegie Mellon University can help counter the hate speech directed at the Rohingya community and other vulnerable groups.

A new system developed by LTI scientists uses artificial intelligence (AI) to rapidly analyze hundreds of thousands of comments posted on social media and identify the fraction that sympathize with or defend disenfranchised minorities such as the Rohingya community.

Human social media moderators, who could not possibly sift through hundreds of thousands of comments manually, would then have the option to highlight this “help speech” in comment sections.

Even if there’s lots of hateful content, we can still find positive comments.

Ashiqur R. KhudaBukhsh, Post-Doctoral Researcher, Language Technologies Institute, Carnegie Mellon University

KhudaBukhsh performed the study with alumnus Shriphani Palakodety. The researchers suggested that finding and highlighting these positive comments may do as much to make the internet a safer, healthier place as detecting and deleting hostile content or banning the trolls responsible.

Left to themselves, the Rohingyas are largely defenseless against hate speech posted on social media. Many of them have limited proficiency in global languages such as English, and the community has little access to the internet.

KhudaBukhsh added that most of these people are too busy trying to survive to spend much time posting their own content.

To identify the relevant help speech, the scientists used their method to search more than a quarter of a million comments posted on YouTube in what they believe is the world’s first AI-focused analysis of the Rohingya refugee crisis.

The researchers will present their study findings at the Association for the Advancement of Artificial Intelligence annual conference in New York City from February 7th to 12th, 2020.

Similarly, in a study that is yet to be published, the researchers used the technology to search for antiwar “hope speech” among almost a million comments posted on YouTube. These comments were related to the Pulwama terror attack in Kashmir in February 2019, which inflamed the long-standing dispute between India and Pakistan over the region.

According to Jaime Carbonell, the study’s co-author and LTI director, the ability to analyze such large volumes of text for content and opinion has become feasible thanks to recent major advances in language models.

These models learn from examples so that they can predict which words are likely to occur in a given sequence, which helps machines interpret what writers and speakers are trying to say.

However, the CMU team came up with another breakthrough that made it feasible to use these language models on short social media texts in South Asia, added Carbonell.

Machines find it difficult to understand short bits of text, which often contain spelling and grammar errors. The task is even harder in South Asian countries, where people may speak several languages and tend to “code switch,” combining bits of different languages and even different writing systems in the same statement.

Current machine learning techniques generate word embeddings, or numerical representations of words, so that words with similar meanings are represented in similar ways. This makes it possible to calculate how close a given word is to others in a post or comment.
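As a rough illustration of how closeness between word embeddings can be computed, the sketch below uses cosine similarity on a handful of toy vectors. The vectors, the three-dimensional size, and the function names `cosine_similarity` and `nearest_word` are all assumptions made for illustration; the article does not say which similarity measure or embedding model the CMU team used.

```python
import math

# Hypothetical 3-dimensional embeddings with made-up values.
# Real embeddings are learned from text and have hundreds of dimensions.
toy_embeddings = {
    "refugee": [0.9, 0.1, 0.2],
    "migrant": [0.8, 0.2, 0.3],
    "banana": [0.1, 0.9, 0.1],
}


def cosine_similarity(u, v):
    """Return the cosine of the angle between vectors u and v."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def nearest_word(word, embeddings):
    """Return the other word whose vector is most similar to `word`."""
    others = (w for w in embeddings if w != word)
    return max(
        others,
        key=lambda w: cosine_similarity(embeddings[word], embeddings[w]),
    )


if __name__ == "__main__":
    # With these toy vectors, "migrant" is closer to "refugee" than "banana" is.
    print(nearest_word("refugee", toy_embeddings))
```

Cosine similarity is a common choice because it compares the direction of two vectors rather than their length, but other distance measures would work in the same way.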

To apply this method to the complex texts of South Asia, the CMU researchers obtained new word embeddings that revealed language clusters or groupings. This language identification technique worked as well as or better than commercially available solutions.
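One way to picture language groupings in an embedding space is to cluster the word vectors and then label a comment by the cluster most of its words fall into. The sketch below does this with a small k-means loop on made-up two-dimensional vectors. It is an assumption-laden illustration, not the researchers' method: the vectors, the romanized example words, the choice of k-means, and the helper names `kmeans` and `dominant_cluster` are all hypothetical.

```python
from collections import Counter

# Made-up 2-D vectors. In this toy setup, words from the same language
# happen to sit close together; real embeddings are learned from data.
toy_vectors = {
    "help": (0.9, 0.1), "people": (0.8, 0.2), "country": (0.85, 0.15),
    "madad": (0.1, 0.9), "log": (0.2, 0.8), "desh": (0.15, 0.85),
}


def squared_distance(p, q):
    """Squared Euclidean distance between two points."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def mean(points):
    """Coordinate-wise mean of a non-empty list of points."""
    n = len(points)
    return tuple(sum(coords) / n for coords in zip(*points))


def kmeans(vectors, initial_centroids, iterations=10):
    """Assign each word to a cluster index using plain k-means."""
    centroids = list(initial_centroids)
    assignment = {}
    for _ in range(iterations):
        # Step 1: assign every word to its nearest centroid.
        assignment = {
            word: min(
                range(len(centroids)),
                key=lambda i: squared_distance(vec, centroids[i]),
            )
            for word, vec in vectors.items()
        }
        # Step 2: move each centroid to the mean of its members.
        for i in range(len(centroids)):
            members = [vectors[w] for w, c in assignment.items() if c == i]
            if members:  # keep the old centroid if a cluster is empty
                centroids[i] = mean(members)
    return assignment


def dominant_cluster(comment, assignment):
    """Most common cluster among the known words of a comment, or None."""
    labels = [assignment[w] for w in comment.lower().split() if w in assignment]
    return Counter(labels).most_common(1)[0][0] if labels else None


# Fixed starting centroids keep the toy example deterministic.
clusters = kmeans(
    toy_vectors,
    initial_centroids=[toy_vectors["help"], toy_vectors["madad"]],
)
```

A code-switched comment such as `"help people madad"` would be labeled with the cluster of its majority language here, which hints at why mixed-language text is harder than monolingual text.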

This breakthrough has turned out to be an enabling technology for computational analyses of social media in the South Asia region, observed Carbonell.

A random sample of the YouTube comments showed that approximately 10% of them were positive. When the scientists applied their technique to search for help speech in the larger dataset, 88% of the results were positive, suggesting that the technique can considerably reduce the manual effort required to find such comments, added KhudaBukhsh.
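To get a feel for what those two percentages mean for a moderator, the short calculation below compares them. It assumes, as a simplification not stated in the article, that both figures can be read as the share of positive comments in a set a moderator would read; the function name `expected_reads_per_hit` is hypothetical.

```python
def expected_reads_per_hit(positive_rate):
    """Average number of comments read per positive comment found."""
    return 1.0 / positive_rate


baseline_rate = 0.10  # share of positive comments reported for the sample
filtered_rate = 0.88  # share of positive comments among the method's results

# How many times richer in positive comments the filtered set is.
enrichment = filtered_rate / baseline_rate

if __name__ == "__main__":
    print(f"Enrichment factor: {enrichment:.1f}x")
    print(f"Reads per positive, unfiltered: {expected_reads_per_hit(baseline_rate):.1f}")
    print(f"Reads per positive, filtered: {expected_reads_per_hit(filtered_rate):.2f}")
```

Under that reading, a moderator would need to read about ten unfiltered comments to find one positive comment, compared with a little more than one comment in the filtered results.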

“No country is too small to take on refugees,” stated one text, while another argued that “all the countries should take a stand for these people.”

However, identifying pro-Rohingya texts can be a double-edged sword: some of these texts contain language that could itself be perceived as hate speech against the refugees’ alleged persecutors, added KhudaBukhsh.

Rohingya antagonists are “really kind of like animals not like human beings so that’s why they genocide innocent people,” stated one such text. While the technique reduces manual effort, such comments indicate the continuing need for human judgment and for additional studies, concluded the researchers.