Oct 11 2019

“Just think about what a fly can do,” states Professor Pavan Ramdya, whose lab at EPFL’s Brain Mind Institute, together with the lab of Professor Pascal Fua in EPFL’s Institute for Computer Science, directed the research. “A fly can climb across terrain that a wheeled robot would not be able to.”

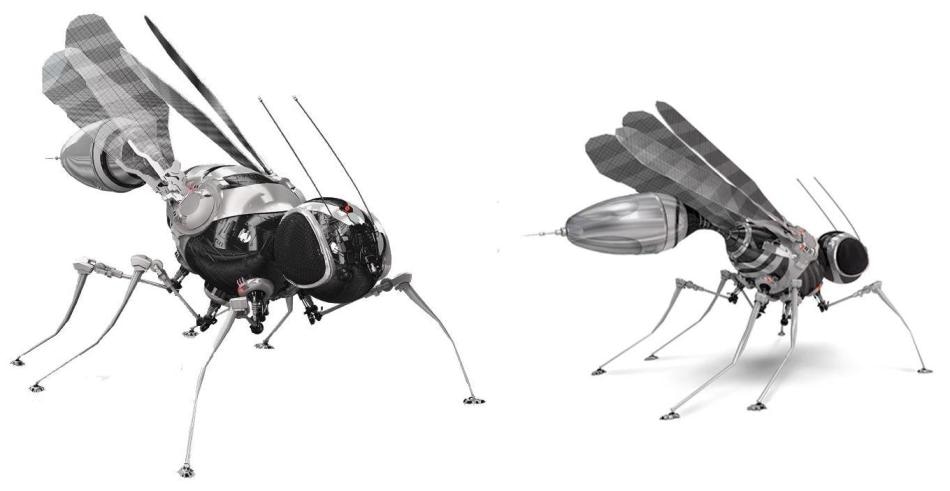

Concept design of fly-robots. (Image credit: P. Ramdya, EPFL)

Flies are not exactly appealing to people. However, there is a surprising path to redemption: robots. Flies have abilities and physical features that could inform new designs for robotic platforms.

Unlike most vertebrates, flies can climb nearly any terrain. They can stick to walls and ceilings because they have adhesive pads and claws on the tips of their legs. This allows them to basically go anywhere. That’s interesting also because if you can rest on any surface, you can manage your energy expenditure by waiting for the right moment to act.

Pavan Ramdya, Professor, Brain Mind Institute, EPFL

This idea of borrowing the principles behind fly behavior to guide robot design is what prompted the development of DeepFly3D, a motion-capture platform for the fly Drosophila melanogaster, a model insect used almost universally across biology.

In the experimental setup developed by Ramdya, seven cameras record every movement of a fly as it walks on top of a miniature floating ball, much like a tiny treadmill. The top side of the fly is attached to a fixed stage, so it always stays in place while walking on the ball. Even so, the fly “believes” that it is moving untethered.

The captured camera images are then processed by DeepFly3D, deep-learning software created by Semih Günel, a PhD student who works with the labs of both Ramdya and Fua.

This is a fine example of where an interdisciplinary collaboration was necessary and transformative. By leveraging computer science and neuroscience, we’ve tackled a long-standing challenge.

Pavan Ramdya, Professor, Brain Mind Institute, EPFL

The significance of DeepFly3D is that it can infer the 3D pose of the fly, and of other animals as well. It can therefore make behavioral measurements automatically, at an unparalleled resolution, for a range of biological applications.

The software needs no manual calibration, and it uses the camera images to automatically detect and correct any errors it makes in its calculations of the fly’s pose. Finally, it also uses active learning to improve its own performance.
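The article does not say how the software recognizes its own mistakes. One common multi-view consistency check, shown here only as an illustrative sketch and not as DeepFly3D's actual method, is to project an estimated 3D joint position back into each camera and flag views where the 2D detection lies far from that projection. The camera matrices, the pixel threshold, and the function names below are all hypothetical.

```python
import math

def project(P, X):
    """Project a 3D point X = (x, y, z) to pixel coordinates with a 3x4 camera matrix P."""
    xh = (X[0], X[1], X[2], 1.0)  # homogeneous coordinates
    u, v, w = (sum(row[i] * xh[i] for i in range(4)) for row in P)
    return (u / w, v / w)

def flag_outlier_views(cameras, detections, X, threshold_px=5.0):
    """Return indices of cameras whose 2D detection disagrees with the 3D estimate X."""
    flagged = []
    for idx, (P, (u, v)) in enumerate(zip(cameras, detections)):
        pu, pv = project(P, X)
        # Reprojection error: pixel distance between detection and projection
        if math.hypot(pu - u, pv - v) > threshold_px:
            flagged.append(idx)
    return flagged

# Two hypothetical cameras (focal length 100 px), the second shifted along x
cam_a = [[100.0, 0.0, 0.0, 0.0], [0.0, 100.0, 0.0, 0.0], [0.0, 0.0, 1.0, 0.0]]
cam_b = [[100.0, 0.0, 0.0, -100.0], [0.0, 100.0, 0.0, 0.0], [0.0, 0.0, 1.0, 0.0]]
joint_3d = (0.5, 0.25, 2.0)

# The second detection is deliberately 15 px away from where the joint projects
detections = [(25.0, 12.5), (-10.0, 12.5)]
suspect_views = flag_outlier_views([cam_a, cam_b], detections, joint_3d)
```

In this toy setup only the second camera is flagged, so its detection would be the one to re-estimate or hand to a human annotator.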

DeepFly3D paves the way for accurate and efficient modeling of a fruit fly’s poses, movements, and joint angles in three dimensions. It could also help establish a standard approach to automatically modeling 3D pose in other organisms.
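Once 3D positions of leg keypoints are available, a joint angle follows from basic vector geometry. The sketch below is a minimal illustration of that step, not code from DeepFly3D. The function name and the example coordinates are assumptions made for this example.

```python
import math

def joint_angle(a, b, c):
    """Angle in degrees at joint b, formed by the 3D points a-b-c."""
    v1 = [a[i] - b[i] for i in range(3)]  # vector from the joint to one neighbor
    v2 = [c[i] - b[i] for i in range(3)]  # vector from the joint to the other neighbor
    dot = sum(p * q for p, q in zip(v1, v2))
    norm = math.sqrt(sum(p * p for p in v1)) * math.sqrt(sum(q * q for q in v2))
    if norm == 0.0:
        raise ValueError("keypoints coincide; the angle is undefined")
    # Clamp to [-1, 1] to guard against floating-point drift before acos
    cos_angle = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_angle))

# Hypothetical keypoints for three consecutive points along one leg
proximal = (1.0, 0.0, 0.0)
joint = (0.0, 0.0, 0.0)
distal = (0.0, 1.0, 0.0)
angle = joint_angle(proximal, joint, distal)  # a right angle in this toy case
```

Applying the same function to every joint in every frame gives the kind of joint-angle time series described above.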

The fly, as a model organism, balances tractability and complexity very well. If we learn how it does what it does, we can have important impact on robotics and medicine and, perhaps most importantly, we can gain these insights in a relatively short period of time.

Pavan Ramdya, Professor, Brain Mind Institute, EPFL