Researchers from the Universidad Carlos III de Madrid (UC3M) have published a paper presenting the outcomes of a study into interactions between deaf people and robots, in which they programmed a humanoid, known as TEO, to communicate in sign language.

(Image credit: Universidad Carlos III de Madrid)

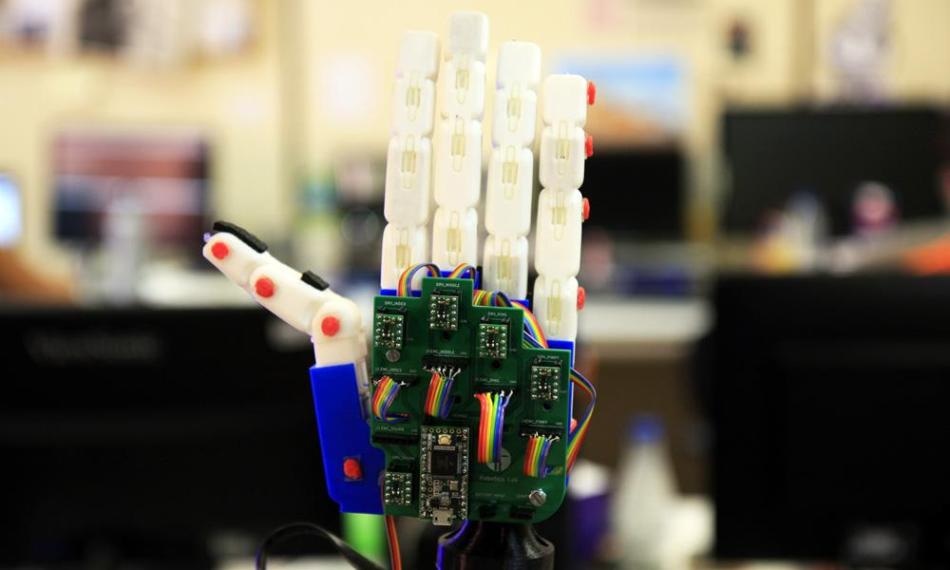

For a robot to be able to “learn” sign language, it is essential to integrate various fields of engineering like neural networks, artificial intelligence, and artificial vision, as well as underactuated robotic hands.

One of the main new developments of this research is that we united two major areas of Robotics: complex systems (such as robotic hands) and social interaction and communication.

Juan Víctores, Researcher in Robotics Lab, Department of Systems Engineering and Automation, UC3M

As the first step of their study, the researchers used a simulation to specify the position of each phalanx needed to portray specific signs from Spanish Sign Language. They then tried to reproduce these positions with the robotic hand, aiming to make its movements comparable to those of a human hand.

“The objective is for them to be similar and, above all, natural. Various types of neural networks were tested to model this adaptation, and this allowed us to choose the one that could perform the gestures in a way that is comprehensible to people who communicate with sign language,” the scientists explain.

Finally, the researchers verified that the system worked by having it interact with potential end users.

The deaf people who have been in contact with the robot have reported 80 percent satisfaction, so the response has been very positive.

Jennifer J. Gago, Researcher in Robotics Lab, Department of Systems Engineering and Automation, UC3M

The experiments were conducted with TEO (Task Environment Operator), a humanoid robot for home use created in the Robotics Lab at UC3M. So far, TEO has learned the fingerspelling alphabet of sign language as well as a very basic vocabulary related to household chores, the scientist notes.

One of the challenges the researchers currently face in developing this system further is “the rendering of more complex gestures, using complete sentences,” says another member of the Robotics Lab team, Bartek Lukawski. The robot could eventually be used by the roughly 13,300 people in Spain who use sign language to communicate.

The wider goal is enabling such robots to become household assistants that can help with serving food, ironing (TEO also does this), folding clothes, and communicating with users in domestic environments.

Jennifer J. Gago further states, “These robotic hands could be implemented in other humanoids and they could be used in other environments and circumstances.”

“The really important thing is that all of these technologies, all of these developments that we contribute to, are geared towards including all members of society. It is a way of envisaging technology as an aid to inclusion, both of minorities and of majorities, within a democracy,” Juan Víctores reiterates.