Jun 21 2019

New research from the University of Kansas shows that machine learning can identify the insects that spread Chagas, an incurable disease, with high precision using ordinary digital photos.

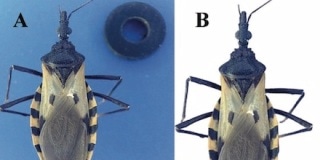

An example image of an individual of Triatoma dimidiata. (A) Raw image and (B) final image with background removed digitally. (Image credit: Khalighifar, et al.)

The idea is to give public health officials in places where Chagas is prevalent a new tool to curb the spread of the disease and, eventually, to offer identification services directly to the general public.

Chagas is especially insidious because most of the people affected are not aware that they have been infected. Yet the Centers for Disease Control and Prevention reports that of the eight million people infected with Chagas worldwide, about 20% to 30% develop complications later in life: heart rhythm abnormalities that can lead to sudden death, dilated hearts that do not pump blood efficiently, or a dilated colon or esophagus.

Most often, the disease is transmitted when triatomine bugs, commonly known as “kissing bugs,” bite people and deliver the parasite Trypanosoma cruzi into their bloodstreams. Chagas is most common in rural areas of Mexico, Central America, and South America.

A recent project at KU, known as the Virtual Vector Project, sought to let public health officials use their smartphones to identify the triatomine species that carry Chagas, with the help of a portable photo studio for taking pictures of the bugs.

Now, a graduate student at KU has built on that project with a proof-of-concept study showing that artificial intelligence can identify 12 Mexican and 39 Brazilian kissing bug species with high accuracy by analyzing ordinary photos, a benefit for officials seeking to reduce the spread of Chagas disease.

Ali Khalighifar, a KU doctoral student at the Biodiversity Institute and the Department of Ecology and Evolutionary Biology, led a research team that recently published the findings in the Journal of Medical Entomology. Khalighifar and his colleagues were able to identify the kissing bugs from ordinary photos using TensorFlow, open-source deep-learning software from Google that is similar to the technology behind Google’s reverse image search.

Because this model is able to understand, based on pixel tones and colors, what is involved in one image, it can take out the information and analyze it in a way the model can understand—and then you give them other images to test and it can identify them with a really good identification rate. That’s without preprocessing—you just start with raw images, which is awesome. That was the goal. Previously, it was impossible to do the same thing as accurately and certainly not without preprocessing the images.

Ali Khalighifar, Doctoral Student, Biodiversity Institute, Department of Ecology and Evolutionary Biology, The University of Kansas

Khalighifar and his colleagues—Ed Komp, researcher at KU’s Information and Telecommunication Technology Center, Janine M. Ramsey of Mexico’s Instituto Nacional de Salud Publica, Rodrigo Gurgel-Gonçalves of Brazil’s Universidade de Brasília, and A. Townsend Peterson, KU Distinguished Professor of Ecology and Evolutionary Biology and senior curator with the KU Biodiversity Institute—trained the algorithm with 1584 images of Brazilian triatomine species and 405 images of Mexican triatomine species.

Initially, the researchers achieved “83.0 and 86.7 percent correct identification rates across all Mexican and Brazilian species, respectively, an improvement over comparable rates from statistical classifiers.” When information about the geographic distributions of the kissing bugs was incorporated into the algorithm, however, identification accuracy rose to 95.8% for the Mexican species and 98.9% for the Brazilian species.
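
The article does not say how the geographic information was fed into the algorithm. One simple way to picture the idea, offered only as a sketch and not as the researchers' method, is to discard candidate species that are not known from the place where the photo was taken and then renormalize the remaining scores. The species names, scores, and function names below are all hypothetical.

```python
def restrict_to_local_species(scores, local_species):
    """Keep only species recorded at the location and renormalize to sum to 1.

    scores: dict mapping species name -> classifier score (hypothetical values).
    local_species: set of species names known from the collection site.
    """
    kept = {sp: p for sp, p in scores.items() if sp in local_species}
    total = sum(kept.values())
    if total == 0:
        # No candidate matches the local list: fall back to the raw scores.
        return dict(scores)
    return {sp: p / total for sp, p in kept.items()}


def best_species(scores):
    """Return the species with the highest score."""
    return max(scores, key=scores.get)


# Hypothetical image-only scores for three placeholder species.
image_scores = {"species_a": 0.40, "species_b": 0.45, "species_c": 0.15}

# Hypothetical list of species known from the area where the photo was taken.
known_locally = {"species_a", "species_c"}

filtered_scores = restrict_to_local_species(image_scores, known_locally)
print(best_species(image_scores))     # top guess from the image alone
print(best_species(filtered_scores))  # top guess after the geographic filter
```

In this toy case the image alone slightly favors a species that does not occur locally, and the filter changes the answer to the best-scoring local species.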

Khalighifar said that the technology could enable public health officials and others to identify triatomine species with an unprecedented level of accuracy and to better understand the disease vectors on the ground.

“In the future, we’re hoping to develop an application or a web platform of this model that is constantly trained based on the new images, so it’s always being updated, that provides high-quality identifications to any interested user in real time,” he stated.

Khalighifar is now applying a similar TensorFlow-based approach to the instant identification of frogs from their calls and mosquitoes from the sound of their wing beats.

I’m working right now on mosquito recordings. I’ve shifted from image processing to signal processing of recordings of the wing beats of mosquitoes. We get the recordings of mosquitoes using an ordinary cell phone, and then we convert them from recordings to images of signals. Then we use TensorFlow to identify the mosquito species. The other project that I’m working right now is frogs, with Dr. Rafe Brown at the Biodiversity Institute. And we are designing the same system to identify those species based on the calls given by each species.

Ali Khalighifar, Doctoral Student, Biodiversity Institute, Department of Ecology and Evolutionary Biology, The University of Kansas
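
The article does not specify how the recordings are turned into “images of signals.” A spectrogram is one common way to do this, and the sketch below assumes that approach purely for illustration. It uses NumPy and a synthetic tone in place of a real recording; the sample rate, frame length, and tone frequency are arbitrary assumptions.

```python
import numpy as np


def spectrogram(signal, frame_len=256, hop=128):
    """Return a (frames x frequency bins) magnitude array for a 1-D signal."""
    window = np.hanning(frame_len)  # taper each frame to reduce spectral leakage
    n_frames = 1 + (len(signal) - frame_len) // hop
    frames = np.stack([
        signal[i * hop : i * hop + frame_len] * window
        for i in range(n_frames)
    ])
    # Magnitude of the FFT of each frame: one row per time step.
    return np.abs(np.fft.rfft(frames, axis=1))


sample_rate = 8000                         # assumed samples per second
t = np.arange(sample_rate) / sample_rate   # one second of time stamps
tone = np.sin(2 * np.pi * 500 * t)         # synthetic 500 Hz stand-in signal

spec = spectrogram(tone)
peak_bin = int(spec.mean(axis=0).argmax())  # strongest frequency bin on average
peak_hz = peak_bin * sample_rate / 256      # convert bin index to hertz

print(spec.shape)
print(peak_hz)
```

The resulting two-dimensional array can be treated like a grayscale image, which is what would let an image classifier of the kind described above be reused on sound.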

Although artificial intelligence is often portrayed as a job-killing or even existential threat to people, Khalighifar said the study shows how AI can benefit researchers who study biodiversity.

“It’s amazing—AI really is capable of doing everything, for better or for worse,” he stated. “There are uses appearing that are scary, like identifying Muslim faces on the street. Imagine, if we can identify frogs or mosquitoes, how easy it might be to identify human voices. So, there are certainly dark sides of AI. But this study shows a positive AI application—we’re trying to use the good side of that technology to promote biodiversity conservation and support public health work.”