May 16, 2019

José Altuve of the Houston Astros steps up to the plate on a 3-2 count, studies the pitcher and the situation, gets the go-ahead from third base, tracks the ball’s release, swings… and gets a single up the middle. It is just another trip to the plate for the three-time American League batting champion.

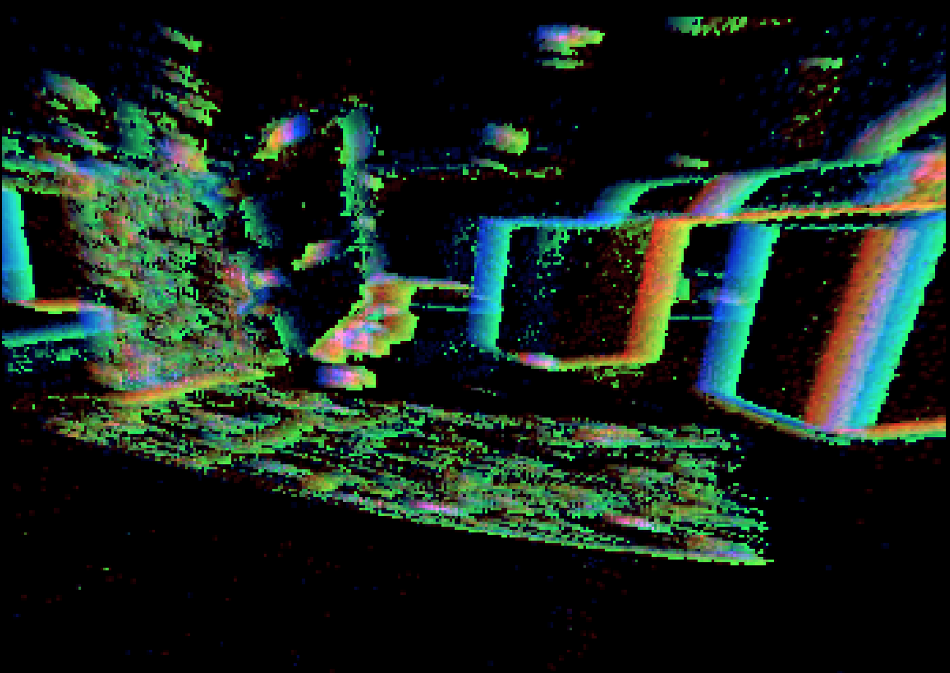

The researchers’ lab, as seen by the dynamic vision sensor. (Graphic courtesy of Perception and Robotics Group, University of Maryland.)

In the same situation, could a robot get a hit? Not likely.

Altuve has honed natural reflexes, years of experience, knowledge of the pitcher’s tendencies, and an understanding of the trajectories of different pitches. What he sees, hears, and feels combines seamlessly with his brain and muscle memory to time the swing that produces the hit. The robot, on the other hand, has to use a linkage system to slowly coordinate data from its sensors with its motor capabilities. And it cannot remember anything. Strike three!

However, there could be hope for the robot. A paper by University of Maryland researchers, recently published in the journal Science Robotics, introduces a new way of combining perception and motor commands using hyperdimensional computing theory. The approach could fundamentally alter and improve the basic artificial intelligence (AI) task of sensorimotor representation: how agents like robots translate what they sense into what they do.

“Learning Sensorimotor Control with Neuromorphic Sensors: Toward Hyperdimensional Active Perception” was written by Computer Science Ph.D. students Anton Mitrokhin and Peter Sutor, Jr.; Cornelia Fermüller, an associate research scientist with the University of Maryland Institute for Advanced Computer Studies; and Computer Science Professor Yiannis Aloimonos. Mitrokhin and Sutor are advised by Aloimonos.

Integration is the most important challenge facing the robotics field. A robot’s sensors and the actuators that move it are separate systems, linked by a central learning mechanism that infers a needed action given sensor data, or vice versa.

This cumbersome three-part AI system, in which each part speaks its own language, is a slow way to get robots to accomplish sensorimotor tasks. The next step in robotics will be to integrate a robot’s perceptions with its motor capabilities. This fusion, called “active perception,” would give the robot a faster and more efficient way to complete tasks.

In the team’s new computing model, a robot’s operating system would be based on hyperdimensional binary vectors (HBVs), which exist in a sparse and extremely high-dimensional space. HBVs can represent disparate discrete things (for example, a single image, a concept, a sound, or an instruction), sequences made up of discrete things, and groupings of discrete things and sequences. They can account for all these types of information in a meaningfully constructed way, binding each modality together in long vectors of 1s and 0s with equal dimension. In this system, action possibilities, sensory input, and other information occupy the same space, are in the same language, and are fused, creating a kind of memory for the robot.
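To make the idea of binding modalities into one equal-length vector concrete, here is a minimal sketch. It assumes bitwise XOR as the binding operation, a common choice in the hyperdimensional computing literature; the article does not say which operation the paper uses. The dimension `DIM`, the helper names, and the `image` and `command` vectors are all hypothetical.

```python
import random

DIM = 8192  # vector length; an illustrative choice, not taken from the paper


def random_hbv(rng):
    """Return a random DIM-bit binary vector, stored as a Python int."""
    return rng.getrandbits(DIM)


def bind(a, b):
    """Bind two vectors with bitwise XOR (assumed operation); length is unchanged."""
    return a ^ b


def hamming(a, b):
    """Normalized Hamming distance: 0.0 for identical vectors, near 0.5 for unrelated ones."""
    return bin(a ^ b).count("1") / DIM


rng = random.Random(0)
image = random_hbv(rng)    # hypothetical code for one sensed image
command = random_hbv(rng)  # hypothetical code for one motor command

pair = bind(image, command)    # a single vector carrying both modalities
recovered = bind(pair, image)  # XOR is its own inverse, so this yields the command

print(recovered == command)
print(round(hamming(pair, image), 2))
```

Because XOR is its own inverse, presenting the sensed image to the bound pair returns the associated command, while the pair itself looks unrelated to either input.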

The Science Robotics paper marks the first time that perception and action have been integrated in this way.

A hyperdimensional framework can turn any sequence of “instants” into new HBVs, and group existing HBVs together, all in the same vector length. This is a natural way to create semantically significant and informed “memories.” Encoding more and more information in turn leads to “history” vectors and the ability to remember. Signals become vectors, indexing translates to memory, and learning happens through clustering.
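The sketch below illustrates one way a sequence of instants could be folded into a single "history" vector of the same length and then looked up in memory. It assumes cyclic rotation to mark each instant's position and a bitwise majority vote to group the results, both standard hyperdimensional computing operations rather than details confirmed by the article. Nearest-neighbor lookup stands in for indexing, and clustering is not shown. All names and the dimension are hypothetical.

```python
import random

DIM = 4096  # illustrative vector length, not taken from the paper


def random_hbv(rng):
    """Return a random DIM-bit binary vector, stored as a Python int."""
    return rng.getrandbits(DIM)


def rotate(v, k=1):
    """Cyclically rotate the bits of v left by k positions."""
    k %= DIM
    mask = (1 << DIM) - 1
    return ((v << k) | (v >> (DIM - k))) & mask


def bundle(vectors):
    """Group vectors by bitwise majority vote (ties go to 0, so use an odd count)."""
    out = 0
    for bit in range(DIM):
        ones = sum((v >> bit) & 1 for v in vectors)
        if 2 * ones > len(vectors):
            out |= 1 << bit
    return out


def encode_history(instants):
    """Rotate each instant by its age, then bundle, so that order matters."""
    n = len(instants)
    return bundle([rotate(v, n - 1 - i) for i, v in enumerate(instants)])


def hamming(a, b):
    """Normalized Hamming distance between two vectors."""
    return bin(a ^ b).count("1") / DIM


def recall(memory, query):
    """Return the label of the stored history closest to the query."""
    return min(memory, key=lambda label: hamming(memory[label], query))


rng = random.Random(1)
a, b, c, d = (random_hbv(rng) for _ in range(4))

# Two stored episodes built from the same instants in opposite order.
memory = {
    "a-b-c": encode_history([a, b, c]),
    "c-b-a": encode_history([c, b, a]),
}

# A new episode that shares its first two instants with "a-b-c".
query = encode_history([a, b, d])
print(recall(memory, query))
```

The history vector never grows, however many instants it absorbs, and a partially matching episode still lands closest to the stored history it most resembles.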

The robot’s memories of what it has sensed and done in the past could lead it to anticipate future perceptions and could influence its future actions. This active perception would allow the robot to become more autonomous and better able to complete tasks.

“An active perceiver knows why it wishes to sense, then chooses what to perceive, and determines how, when and where to achieve the perception,” says Aloimonos. “It selects and fixates on scenes, moments in time, and episodes. Then it aligns its mechanisms, sensors, and other components to act on what it wants to see, and selects viewpoints from which to best capture what it intends.”

“Our hyperdimensional framework can address each of these goals.”

Applications of the Maryland research could extend well beyond robotics. The ultimate goal is to be able to do AI itself in a fundamentally different way: from theories to signals to language. Hyperdimensional computing could offer a faster and more efficient alternative to the iterative neural net and deep learning AI techniques currently used in computing applications such as visual recognition, data mining, and translating images to text.

“Neural network-based AI methods are big and slow, because they are not able to remember. Our hyperdimensional theory method can create memories, which will require a lot less computation, and should make such tasks much faster and more efficient.”

Anton Mitrokhin, Computer Science Ph.D. Student, University of Maryland