Apr 16 2019

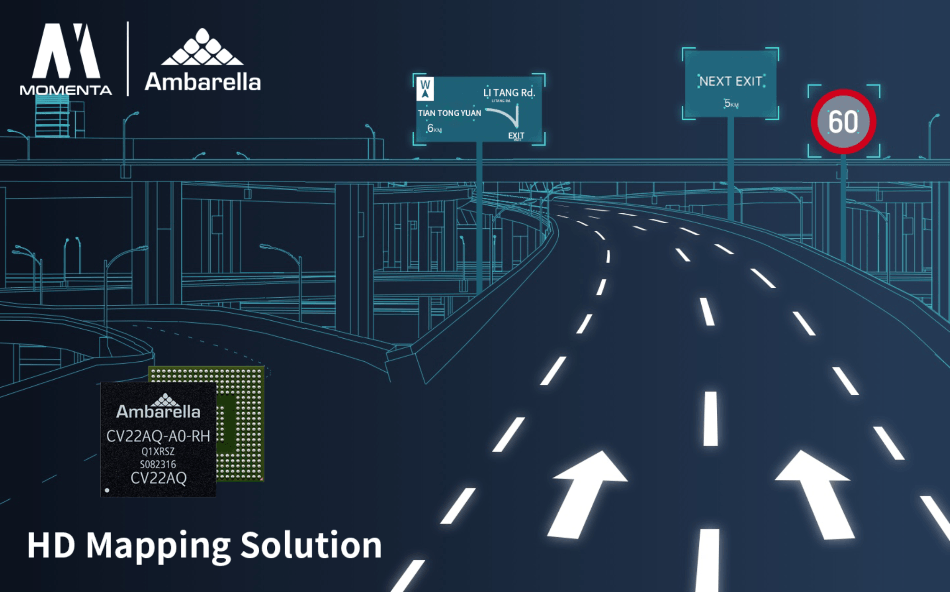

Ambarella, Inc., a Santa Clara, California-based manufacturer of high-resolution video processing and computer vision semiconductors, and Momenta, an autonomous driving technology company based in Suzhou, China, recently announced a joint HD mapping platform for autonomous vehicles. The joint solution combines Ambarella’s CV22AQ CVflow computer vision system-on-chip (SoC) with Momenta’s deep learning algorithms to deliver HD map solutions, including mapping, localization for autonomous vehicles, and map updates through crowdsourcing.

Image credit: Ambarella, Inc.

HD maps are essential to autonomous driving systems. Ambarella’s CV22AQ CVflow computing platform has made it easier for Momenta to deploy and upgrade our HD map software and algorithms on embedded systems. Our HD map solution can automatically build maps, perform localization, and update through crowdsourcing. We look forward to continuing our work with Ambarella to deliver a wide range of autonomous driving software.

Xudong Cao, CEO, Momenta.

We are pleased to partner with Momenta to provide a powerful and open HD map platform. The CV22AQ’s performance and advanced image processing help enable the full potential of Momenta’s advanced AI algorithms.

Fermi Wang, CEO, Ambarella.

Momenta’s vision-based HD semantic mapping solution is scalable and production-ready. Through crowdsourcing, the solution forms a closed feedback loop of AI, big data, and HD map updates. Using its localization results, the solution detects changes in map elements and sends regular updates to the cloud.
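
To make the crowdsourced update loop more concrete, here is a minimal Python sketch. It is not Momenta’s implementation: the report format, the element names, and the `min_votes` agreement threshold are all assumptions made for illustration. The idea shown is only that a map element changes once enough distinct vehicles report the same difference from the stored map.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple

# Hypothetical report format: (vehicle_id, element_id, observed_state)
Report = Tuple[str, str, str]


def aggregate_updates(
    hd_map: Dict[str, str],
    reports: Iterable[Report],
    min_votes: int = 3,  # assumed agreement threshold, not from the article
) -> Dict[str, str]:
    """Return a new map in which an element changes only when at least
    `min_votes` distinct vehicles report the same differing state."""
    votes: Counter = Counter()
    seen = set()
    for vehicle_id, element_id, state in reports:
        if (vehicle_id, element_id) in seen:
            continue  # count each vehicle at most once per element
        seen.add((vehicle_id, element_id))
        if hd_map.get(element_id) != state:
            votes[(element_id, state)] += 1

    updated = dict(hd_map)
    for (element_id, state), count in votes.items():
        if count >= min_votes:
            updated[element_id] = state
    return updated


hd_map = {"sign_17": "speed_limit_60", "lane_4": "solid"}
reports = [
    ("car_a", "sign_17", "speed_limit_40"),
    ("car_b", "sign_17", "speed_limit_40"),
    ("car_c", "sign_17", "speed_limit_40"),
    ("car_a", "lane_4", "dashed"),  # a single report is not enough
]
new_map = aggregate_updates(hd_map, reports)
print(new_map)
```

In this toy run the speed-limit sign is updated because three vehicles agree, while the lane marking stays unchanged because only one vehicle reported a difference.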

The CV22AQ is manufactured on an advanced 10-nanometer process, which provides the ultra-low power consumption needed for compact automotive systems. Its CVflow architecture processes video at up to 8-megapixel resolution and 30 frames per second (fps) in real time, enabling high-precision, deep-learning-based object recognition.
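
As a rough sense of scale, the short Python calculation below works out the pixel rate and per-frame time budget implied by 8-megapixel video at 30 fps. The 3840x2160 frame geometry is an assumption; the article states only the megapixel count and frame rate.

```python
# Assumed frame geometry (about 8.3 MP); the article gives only "8 megapixel".
WIDTH, HEIGHT = 3840, 2160
FPS = 30

pixels_per_frame = WIDTH * HEIGHT
pixels_per_second = pixels_per_frame * FPS
frame_budget_ms = 1000 / FPS  # time available to process one frame

print(pixels_per_frame)           # 8294400
print(pixels_per_second)          # 248832000
print(round(frame_budget_ms, 1))  # 33.3
```

Under that assumption, real-time operation means handling roughly 249 million pixels per second, with about 33 ms available for each frame.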

The high-performance image signal processor (ISP) of the CV22AQ delivers better image quality in low-light conditions, while high dynamic range (HDR) processing extracts more image detail in high-contrast conditions, further improving the system’s computer vision capabilities.

With the CV22AQ, Momenta can take a single monocular camera input and produce two separate video outputs: one for vision sensing (perception of traffic signs, lanes, and other objects), and the other for feature point extraction, which feeds simultaneous localization and mapping (SLAM) and optical flow algorithms.
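
The one-input, two-output arrangement can be pictured with a small Python sketch. This is a toy illustration, not the CV22AQ pipeline: the function names, the 2x downsampling of the perception output, and the list-of-lists frame type are all assumptions, since the article does not say how the two outputs differ.

```python
from typing import List, Tuple

# Toy stand-in for a grayscale camera frame: rows of pixel values.
Frame = List[List[int]]


def downsample_2x(frame: Frame) -> Frame:
    """Keep every second pixel in each direction (assumed scaling step)."""
    return [row[::2] for row in frame[::2]]


def fan_out(frame: Frame) -> Tuple[Frame, Frame]:
    """Derive two independent outputs from one camera frame."""
    perception_output = downsample_2x(frame)   # e.g. for sign/lane detection
    feature_output = [row[:] for row in frame]  # full-resolution copy for feature points
    return perception_output, feature_output


frame = [[x + 10 * y for x in range(4)] for y in range(4)]
perception, features = fan_out(frame)
print(perception)  # [[0, 2], [20, 22]]
print(len(features), len(features[0]))  # 4 4
```

Each consumer receives its own output, so the perception path and the feature-extraction path can use different resolutions without affecting one another.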

Ambarella provides a full set of tools to help customers port their neural networks to the CV22AQ SoC. Using this toolchain, Momenta can rapidly migrate its deep learning perception models to embedded platforms and obtain accurate output.