During harvest season, a large workforce is needed to meet fruit-picking targets. With high labor costs and seasonal staff shortages, however, the fresh fruit industry is turning to agricultural robots to meet demand. Robots ease the pressure on orchards, but accurately locating fruit remains a complex challenge for a harvesting robot.

Image Credit: mythja/Shutterstock.com

Red Green Blue Depth (RGBD) cameras are considered a promising way to improve robotic perception, and they offer a low-cost, practical upgrade for agricultural robots. A study published in the MDPI journal Remote Sensing proposes a novel approach that uses RGBD cameras to improve the accuracy of fruit localization.

Methodology

Fruit recognition methods can be divided into three types: deep-convolutional-neural-network-based, single-feature-based, and multi-feature-based.

At present, deep convolutional neural networks (DCNNs) are the most commonly used fruit recognition method. Many network models, including YOLOv3, LedNet, Faster RCNN, Mask RCNN, YOLACT, and YOLACT-edge, have been used effectively to locate apple fruits (Table 1).

Table 1. The summary of deep learning methods of apple detection. Source: Li, et al., 2022

| Network Model | Precision (%) | Recall (%) | mAP (%) | F1-Score (%) | Reference |
|---|---|---|---|---|---|
| Improved YOLOv3 | 97 | 90 | 87.71 | — | [4] |
| LedNet | 85.3 | 82.1 | 82.6 | 83.4 | [5] |
| Improved R-FCN | 95.1 | 85.7 | — | 90.2 | [11] |
| Mask RCNN | 85.7 | 90.6 | — | 88.1 | [8] |
| DaSNet-v2 | 87.3 | 86.8 | 88 | 87.3 | [12] |
| Faster RCNN | — | — | 82.4 | 86 | [13] |
| Improved YOLOV5s | 83.83 | 91.48 | 86.75 | 87.49 | [14] |

mAP is the mean Average Precision; F1-score is the balanced F-score, F1 = 2 × precision × recall / (precision + recall).
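To show how the F1 column relates to the precision and recall columns, the formula can be evaluated directly. The minimal Python sketch below recomputes F1 for three rows of Table 1. For these rows the results match the reported values after rounding. The function name `balanced_f1` is our own and does not come from any library used in the study.

```python
def balanced_f1(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (inputs and result in percent)."""
    if precision + recall == 0:
        return 0.0  # avoid division by zero when both are zero
    return 2 * precision * recall / (precision + recall)


# Precision and recall (%) copied from Table 1.
table_1 = {
    "Improved R-FCN": (95.1, 85.7),      # reported F1: 90.2
    "Mask RCNN": (85.7, 90.6),           # reported F1: 88.1
    "Improved YOLOV5s": (83.83, 91.48),  # reported F1: 87.49
}

for name, (p, r) in table_1.items():
    print(f"{name}: F1 = {balanced_f1(p, r):.2f}%")
```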

DCNN-based methods adapt easily to different kinds of fruit and generalize well to complex environments. For this reason, the study uses a DCNN as the basis of its fruit recognition algorithm.

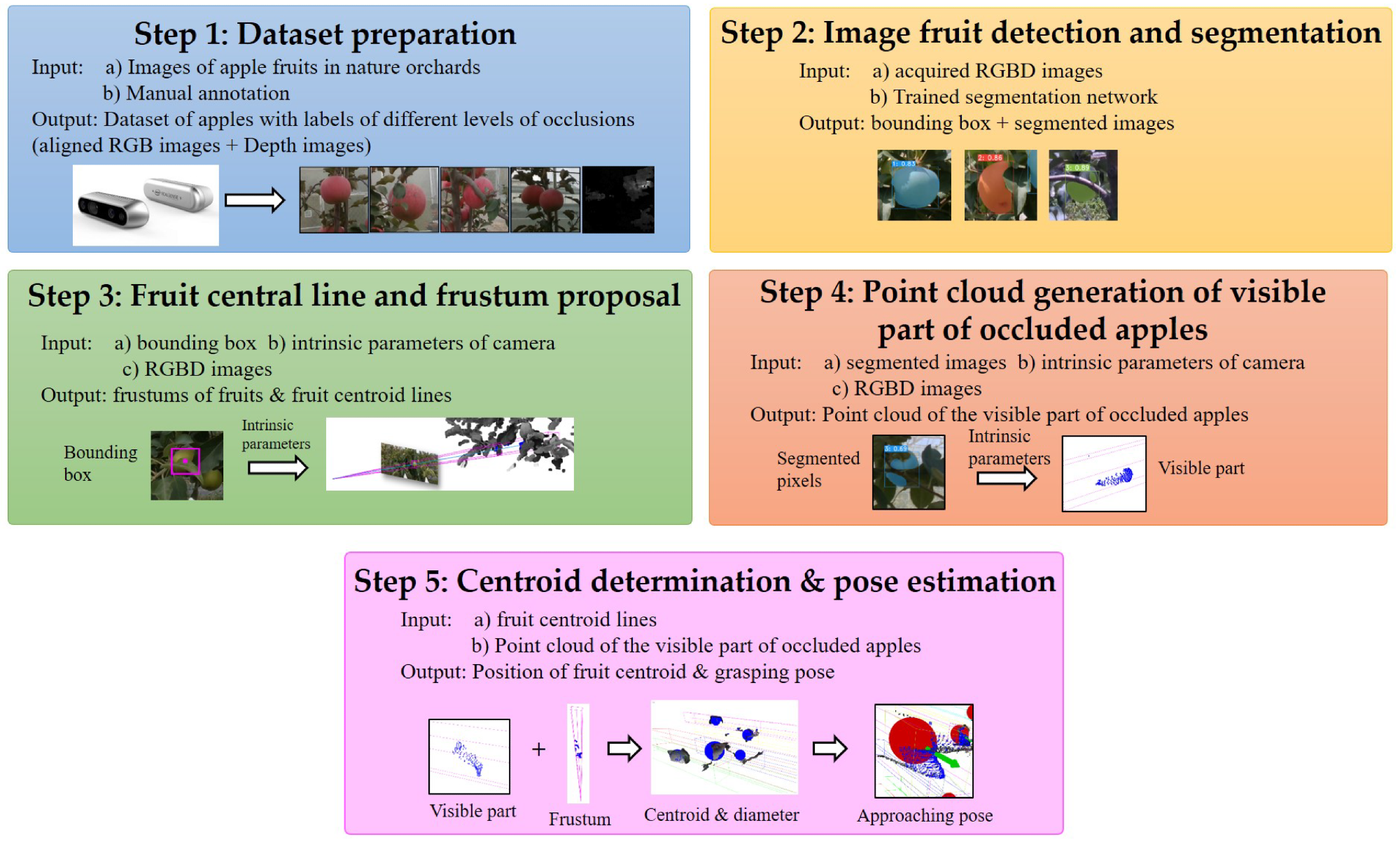

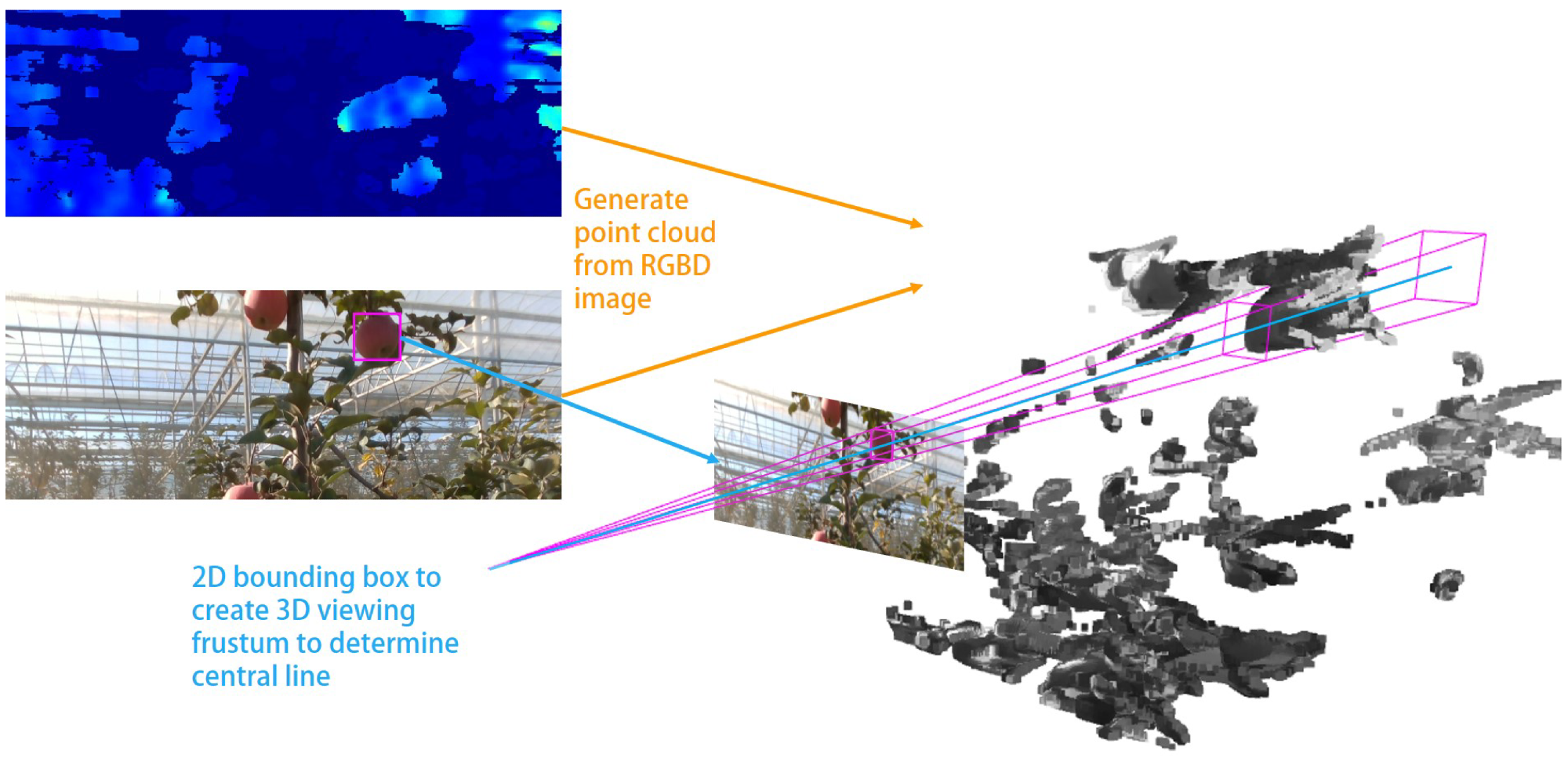

The proposed detection and localization method for occluded apple fruits combines deep learning with a point-cloud-processing algorithm, as illustrated in Figure 1. The workflow has five stages: acquiring sensing images of the fruit, recognizing the apple fruits, proposing each fruit's central line and frustum, generating point clouds for the visible parts of the apples, and estimating each fruit's size, centroid, and pose.

Figure 1. The overall workflow of the proposed method for occluded apple localization. Image Credit: Li, et al., 2022

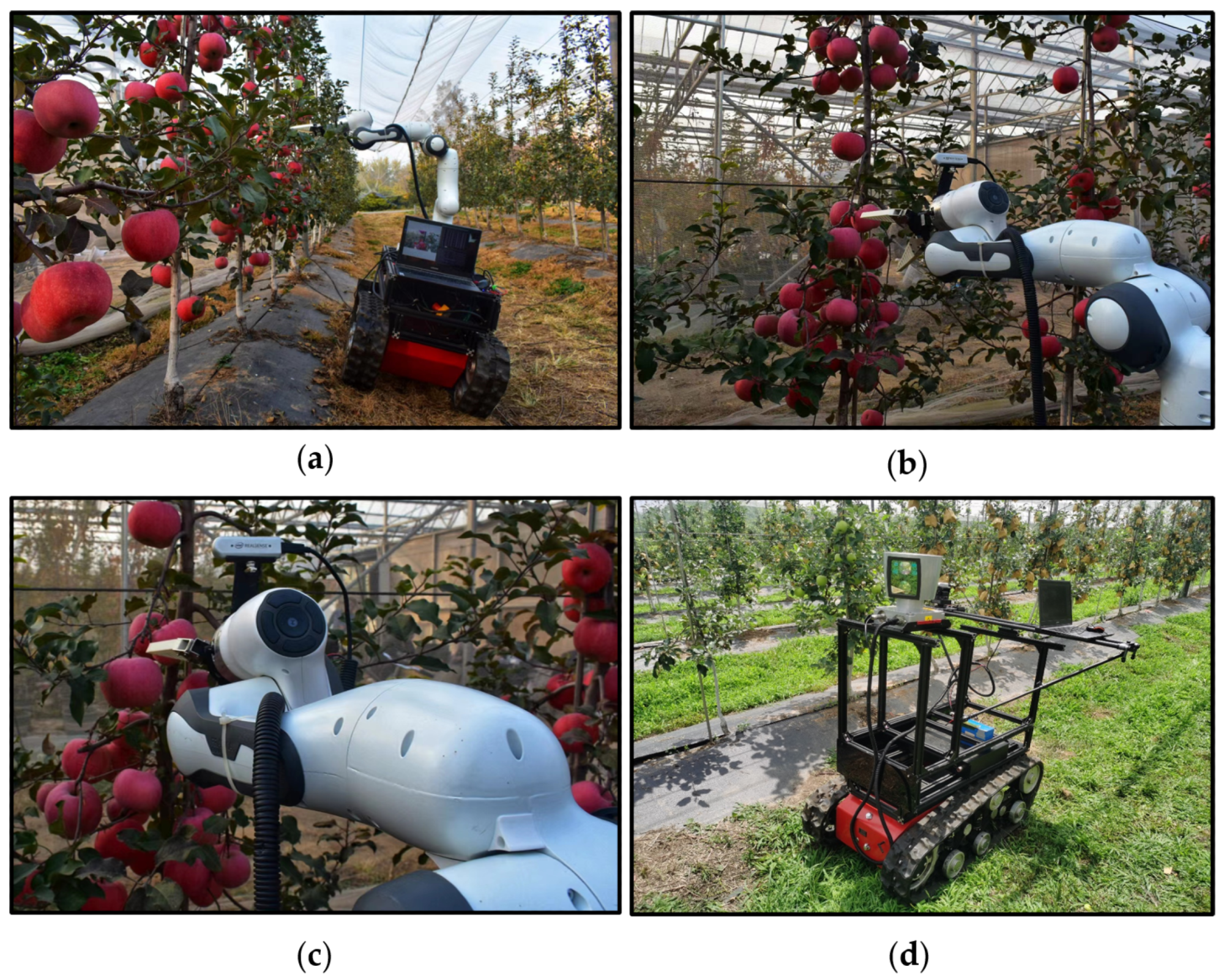

The proposed method was implemented on the robotic hardware platform shown in Figure 2 (a–c).

Figure 2. Hardware platform of the robotic system in the orchard experiments. (a–c) are the hardware platform of the robotic system; (d) is the verification platform with a Realsense D435i and a 64-line LiDAR to acquire the true values of fruits. Image Credit: Li, et al., 2022

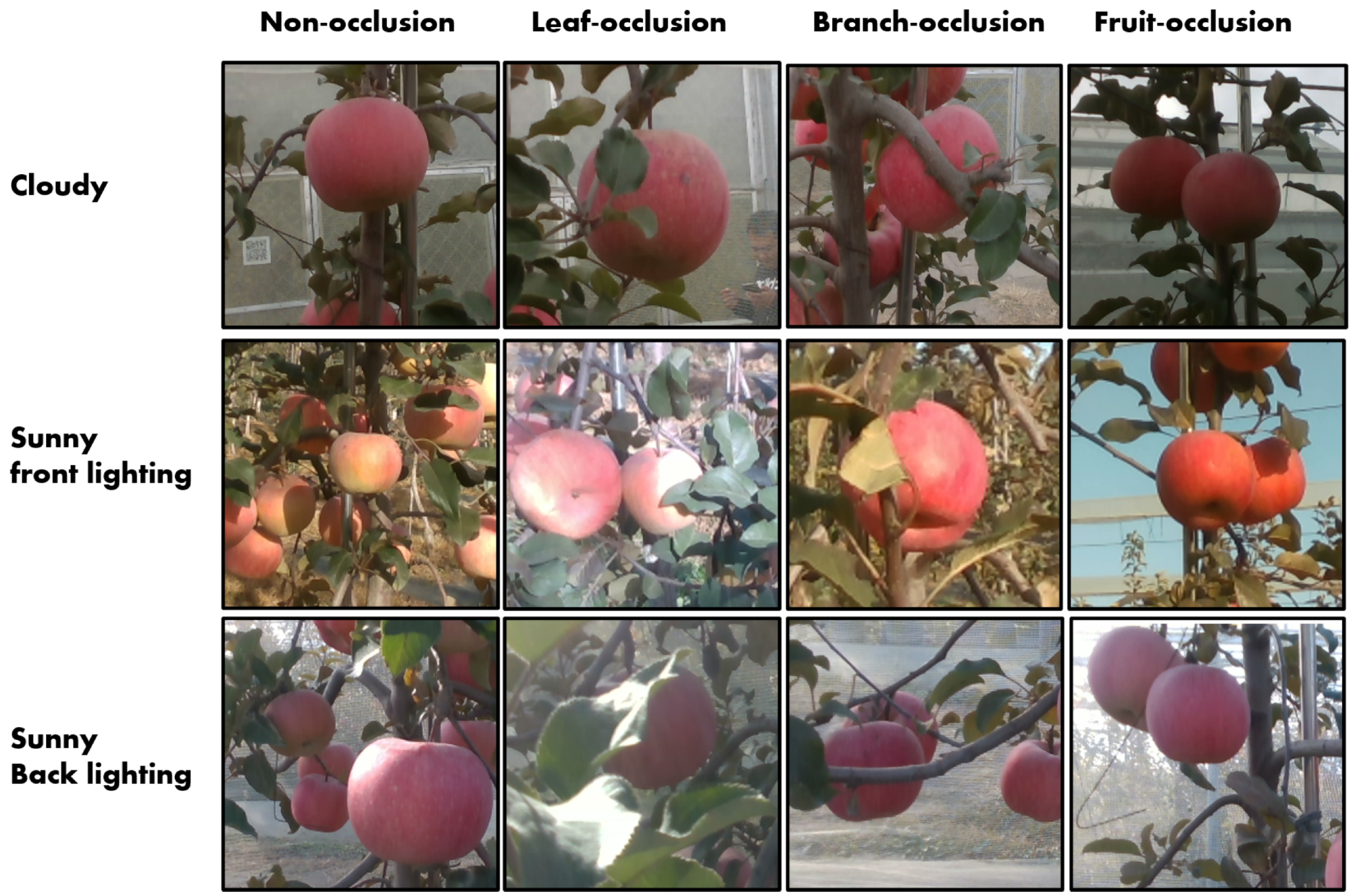

The study trained the segmentation network for the proposed apple localization algorithm on two image datasets. The first was the open-source MinneApple dataset, and the second was an RGBD apple dataset captured with the Realsense D435i RGBD camera. As illustrated in Figure 3, the images were collected under a variety of illumination and occlusion conditions.

Figure 3. The apple fruits under different illuminations and occlusions. Image Credit: Li, et al., 2022

The dataset was split into training and validation sets at a 4:1 ratio. The study used pre-trained models for transfer learning. Table 2 compares the performance of the candidate networks.
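A 4:1 split sends 80% of the images to training and 20% to validation. The sketch below shows one reproducible way to perform that split in Python. The file names and the fixed random seed are assumptions for illustration, because the article does not say how images were assigned to each set.

```python
import random


def split_dataset(items, train_ratio=4, val_ratio=1, seed=0):
    """Shuffle items and split them train:val = train_ratio:val_ratio."""
    items = list(items)
    rng = random.Random(seed)  # fixed seed gives a reproducible split
    rng.shuffle(items)
    n_train = len(items) * train_ratio // (train_ratio + val_ratio)
    return items[:n_train], items[n_train:]


# Hypothetical file names; the study's actual image lists are not given.
images = [f"apple_{i:04d}.png" for i in range(1000)]
train, val = split_dataset(images)
print(len(train), len(val))  # 800 200
```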

Table 2. Performance comparisons of different networks on apple instance segmentation. Source: Li, et al., 2022

| Network Model | Backbone | Non-Occlusion AP (Bbox) | Non-Occlusion AP (Mask) | Leaf-Occlusion AP (Bbox) | Leaf-Occlusion AP (Mask) | Branch-Occlusion AP (Bbox) | Branch-Occlusion AP (Mask) | Fruit-Occlusion AP (Bbox) | Fruit-Occlusion AP (Mask) | FPS |
|---|---|---|---|---|---|---|---|---|---|---|
| Mask RCNN | ResNet-50 | 38.14 | 40.12 | 29.56 | 28.14 | 9.1 | 6.14 | 13.2 | 8.85 | 17.3 |
| Mask RCNN | ResNet-101 | 38.39 | 39.62 | 25.13 | 25.96 | 7.61 | 5.23 | 9.96 | 7.88 | 14.5 |
| MS RCNN | ResNet-50 | 38 | 38.12 | 27.04 | 25.27 | 4.88 | 6.36 | 9.03 | 8.29 | 17.1 |
| MS RCNN | ResNet-101 | 39.09 | 40.66 | 27.98 | 24.97 | 7.52 | 7.05 | 10.99 | 10.36 | 13.6 |
| YOLACT | ResNet-50 | 43.53 | 44.27 | 26.29 | 26.08 | 16.67 | 13.35 | 15.33 | 14.61 | 35.2 |
| YOLACT | ResNet-101 | 39.85 | 41.48 | 28.07 | 27.56 | 11.61 | 11.31 | 21.22 | 21.88 | 34.3 |
| YOLACT++ | ResNet-50 | 42.62 | 44.03 | 32.06 | 35.49 | 18.17 | 13.8 | 21.48 | 20.28 | 31.1 |
| YOLACT++ | ResNet-101 | 43.98 | 45.06 | 34.23 | 36.17 | 15.46 | 13.49 | 11.52 | 13.33 | 29.6 |

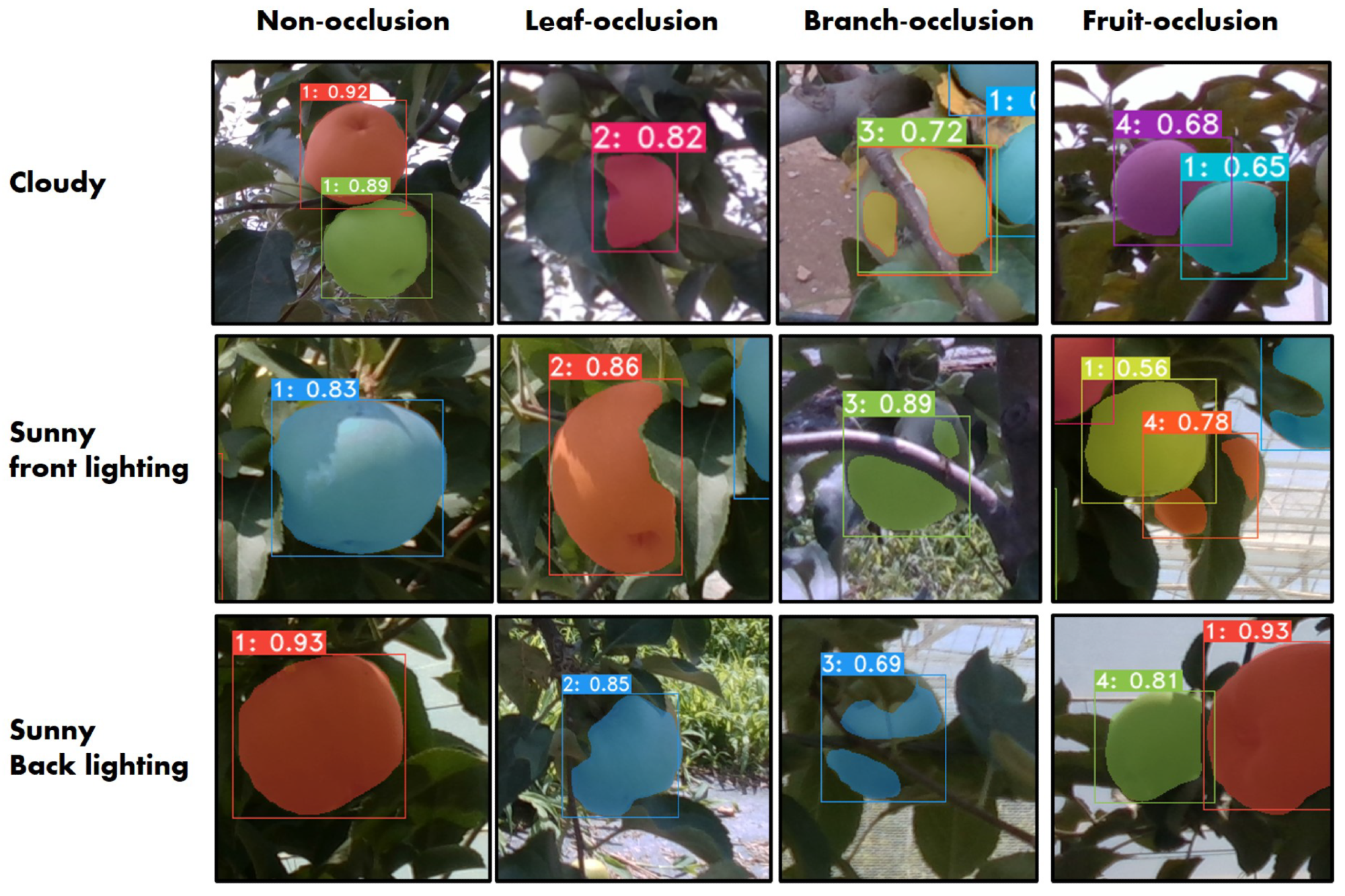

Figures 4, 5, and 6 compare the detection and segmentation results of YOLACT++ with ResNet-50 and ResNet-101 backbones under different lighting conditions. Based on this analysis, the study chose YOLACT++ (ResNet-101) as its apple instance segmentation network. Figure 7 shows some of its test results.

Figure 4. Performance of the detection and segmentation of YOLACT++ network in cloudy conditions. (a) ResNet-50; (b) ResNet-101. Image Credit: Li, et al., 2022

Figure 5. Performance of the detection and segmentation of YOLACT++ network under front lighting in sunny conditions. (a) ResNet-50; (b) ResNet-101. Image Credit: Li, et al., 2022

Figure 6. Performance of the detection and segmentation of YOLACT++ network under back-lighting in sunny conditions. (a) ResNet-50; (b) ResNet-101. Image Credit: Li, et al., 2022

Figure 7. Detection results of YOLACT++ (ResNet-101) under different illuminations and occlusions. The numbers on the bounding boxes denote the target classes (Class 1: non-occluded; Class 2: leaf-occluded; Class 3: branch/wire-occluded; Class 4: fruit-occluded). Image Credit: Li, et al., 2022

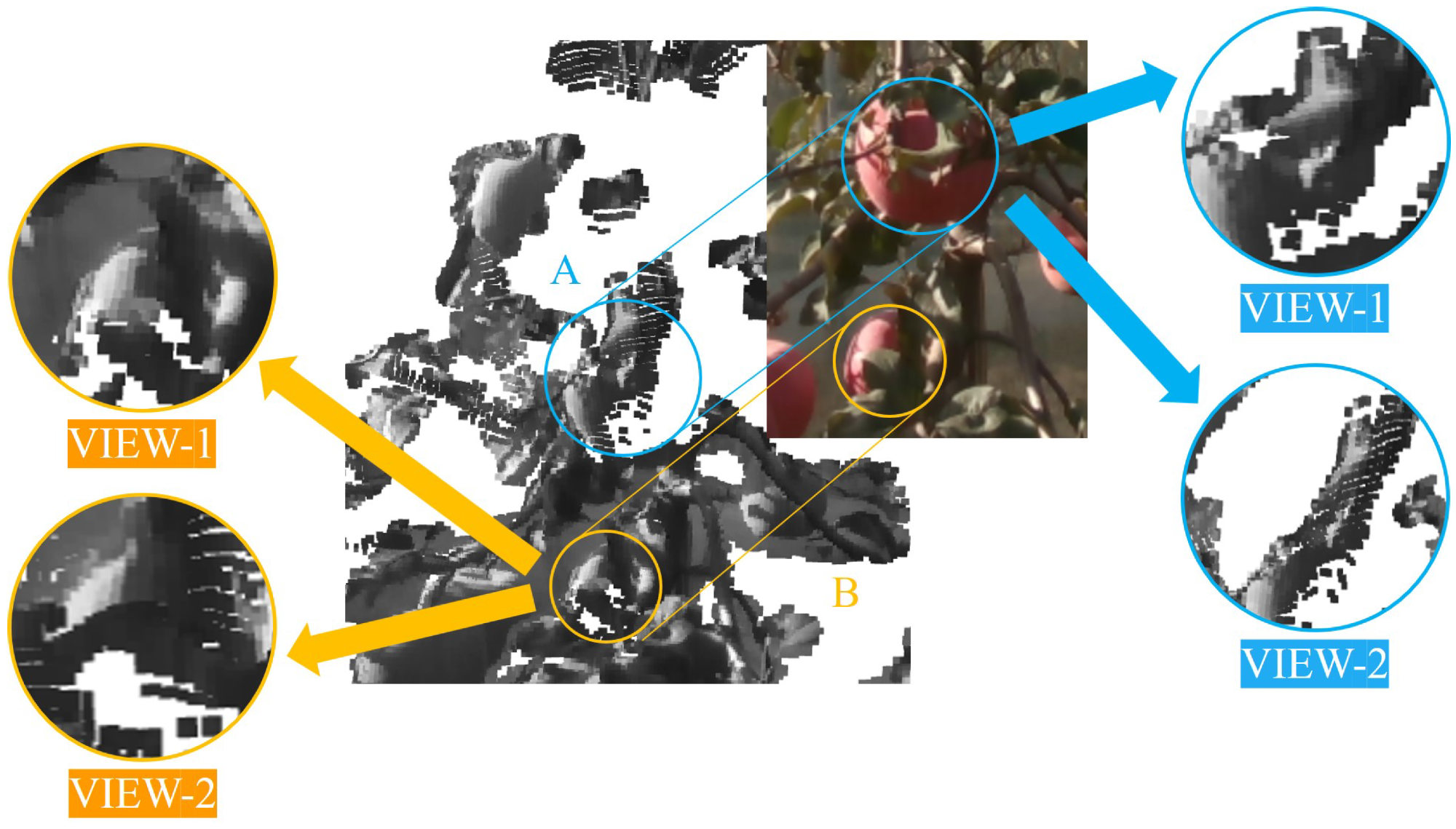

Figure 8 shows Target A and Target B from two different views. Both targets had poor depth filling rates to varying degrees. The point clouds on their surfaces were not spherical, which made their morphological features difficult to analyze.

Figure 8. Distortion and fragments of the point clouds of the partially occluded fruits (Target A and Target B). Image Credit: Li, et al., 2022
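The depth filling rate can be read as the share of pixels inside a fruit's mask that carry a valid depth reading. The sketch below computes that share under two assumptions that the article does not state. First, the depth image is already aligned to the RGB image. Second, missing measurements are stored as 0, which is the usual convention for Intel RealSense depth frames.

```python
def depth_fill_rate(depth, mask):
    """Fraction of masked pixels that carry a valid (non-zero) depth value.

    depth: 2D list of depth readings, 0 meaning "no measurement" (assumed).
    mask:  2D list of booleans, True where the fruit's instance mask is set.
    """
    total = valid = 0
    for depth_row, mask_row in zip(depth, mask):
        for d, m in zip(depth_row, mask_row):
            if m:
                total += 1
                if d > 0:
                    valid += 1
    return valid / total if total else 0.0


# Toy 3x4 example: the mask covers 6 pixels, 2 of which have no depth.
depth = [[0, 812, 815, 0],
         [0, 0, 809, 0],
         [0, 811, 0, 0]]
mask = [[False, True, True, False],
        [False, True, True, False],
        [False, True, True, False]]
print(depth_fill_rate(depth, mask))  # 4 of the 6 masked pixels are valid
```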

To resolve this issue, the study proposes a pipeline for high-precision localization of fruit under occlusion.

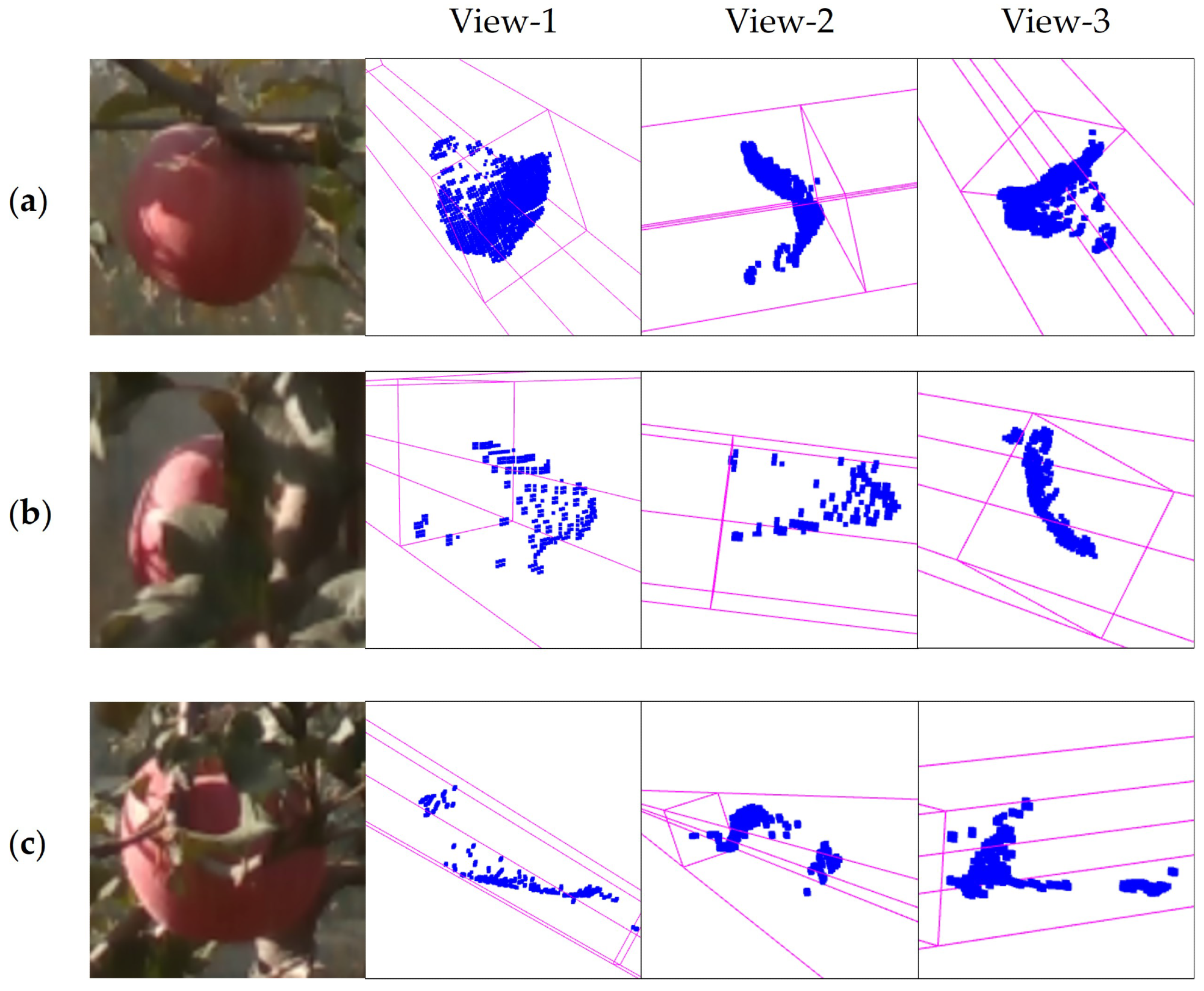

The fundamental problem in robotic harvesting is determining the fruit’s center. However, the sensor’s characteristics and inaccuracies in mask segmentation introduce outliers and missing regions into the acquired point clouds, which leaves them distorted and fragmented (Figure 9).

Figure 9. Distortion and fragments of the targets’ point clouds. (a) Non-occluded fruits’ point clouds with little distortion and good completeness; (b) leaf-occluded fruits’ point clouds with considerable distortion and good completeness; (c) leaf-occluded fruits’ point clouds with considerable distortion and fragments. Image Credit: Li, et al., 2022
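One common way to estimate a fruit's center and radius from such a point cloud is to fit a sphere to it. The article does not detail the fitting step, so the sketch below uses a generic algebraic least-squares sphere fit, which may differ from the authors' procedure. It shifts the points to their centroid to keep the linear system well conditioned. It also omits outlier rejection, which real, noisy clouds would need.

```python
import math


def _solve(mat, vec):
    """Solve a small dense linear system by Gaussian elimination with partial pivoting."""
    n = len(vec)
    aug = [list(row) + [v] for row, v in zip(mat, vec)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(aug[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (aug[r][n] - s) / aug[r][r]
    return x


def fit_sphere(points):
    """Algebraic least-squares sphere fit; returns (center, radius).

    In centroid-shifted coordinates, |p - (a, b, c)|^2 = r^2 becomes
    x^2 + y^2 + z^2 = 2ax + 2by + 2cz + d with d = r^2 - a^2 - b^2 - c^2,
    which is linear in the unknowns (a, b, c, d).
    """
    n = len(points)
    m = [sum(p[k] for p in points) / n for k in range(3)]  # centroid
    ata = [[0.0] * 4 for _ in range(4)]  # normal equations: A^T A
    atb = [0.0] * 4                      # and A^T b
    for p in points:
        x, y, z = (p[k] - m[k] for k in range(3))
        row = (2 * x, 2 * y, 2 * z, 1.0)
        rhs = x * x + y * y + z * z
        for i in range(4):
            atb[i] += row[i] * rhs
            for j in range(4):
                ata[i][j] += row[i] * row[j]
    a, b, c, d = _solve(ata, atb)
    radius = math.sqrt(d + a * a + b * b + c * c)
    return (a + m[0], b + m[1], c + m[2]), radius


# Synthetic check: points on the camera-facing cap of a 40 mm-radius sphere
# centered 800 mm in front of the camera (illustrative values only).
cx, cy, cz, r = 10.0, -20.0, 800.0, 40.0
pts = []
for i in range(1, 10):
    polar = i * math.pi / 20  # 9 polar angles in (0, pi/2)
    for k in range(12):
        az = k * 2 * math.pi / 12
        pts.append((cx + r * math.sin(polar) * math.cos(az),
                    cy + r * math.sin(polar) * math.sin(az),
                    cz - r * math.cos(polar)))  # minus: surface faces the camera
center, radius = fit_sphere(pts)
print([round(v, 3) for v in center], round(radius, 3))
```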

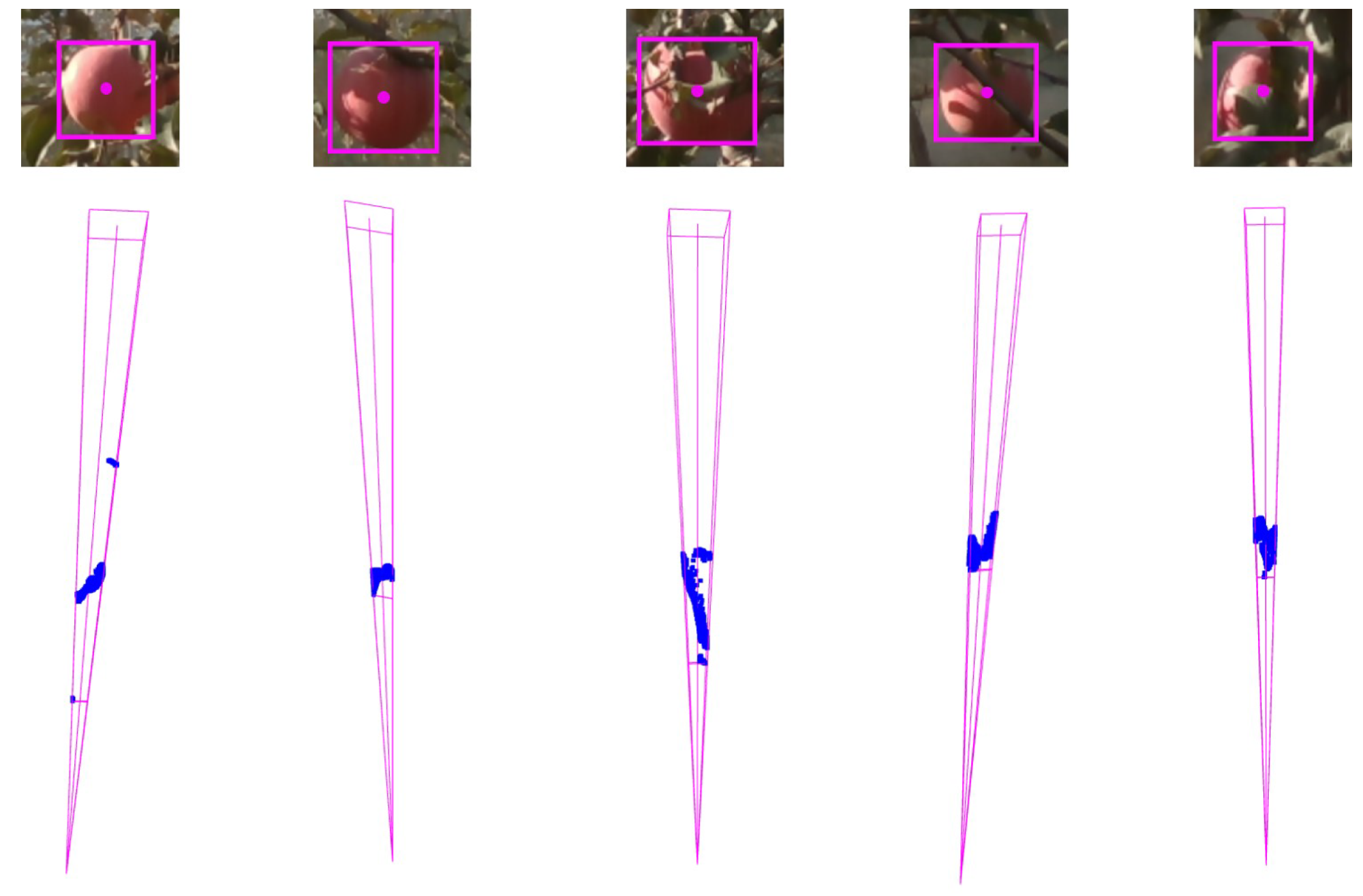

To obtain the z-axis coordinate, each fruit’s bounding box on the RGB image can be lifted to a 3D frustum and a 3D central line using the aligned depth image and the RGB camera’s intrinsic parameter matrix, as illustrated in Figure 10.

Figure 10. 2D bounding boxes on RGB images and their corresponding point cloud frustums. Image Credit: Li, et al., 2022
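Under a pinhole camera model, a pixel (u, v) at depth z maps to X = (u - cx)·z/fx and Y = (v - cy)·z/fy, where fx, fy, cx, and cy come from the intrinsic matrix. The sketch below applies that mapping in two ways. It lifts a bounding box to the eight corners of a frustum between two depths, and it lifts the box center to a central line. The near and far depths and the intrinsic values are illustrative assumptions, not the D435i calibration used in the study, and the sketch ignores lens distortion.

```python
def deproject(u, v, z, fx, fy, cx, cy):
    """Back-project pixel (u, v) at depth z with a pinhole model (no distortion)."""
    return ((u - cx) * z / fx, (v - cy) * z / fy, z)


def bbox_frustum(bbox, z_near, z_far, fx, fy, cx, cy):
    """Lift a 2D box to the 8 corners of a 3D frustum plus its central line.

    bbox = (u_min, v_min, u_max, v_max) in pixels; the output uses the unit of z.
    The first four corners lie on the near plane, the last four on the far plane.
    """
    u0, v0, u1, v1 = bbox
    pixel_corners = [(u0, v0), (u1, v0), (u1, v1), (u0, v1)]
    corners = [deproject(u, v, z, fx, fy, cx, cy)
               for z in (z_near, z_far) for u, v in pixel_corners]
    uc, vc = (u0 + u1) / 2, (v0 + v1) / 2  # box center in pixels
    central_line = (deproject(uc, vc, z_near, fx, fy, cx, cy),
                    deproject(uc, vc, z_far, fx, fy, cx, cy))
    return corners, central_line


# Hypothetical intrinsics and a box searched between 500 mm and 1500 mm.
corners, central_line = bbox_frustum((300, 200, 380, 280), 500, 1500,
                                     600.0, 600.0, 320.0, 240.0)
print(central_line)
```

The near and far depths bound the search region along the ray. In practice they could come from the valid depth values inside the box or from the sensor's working range.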

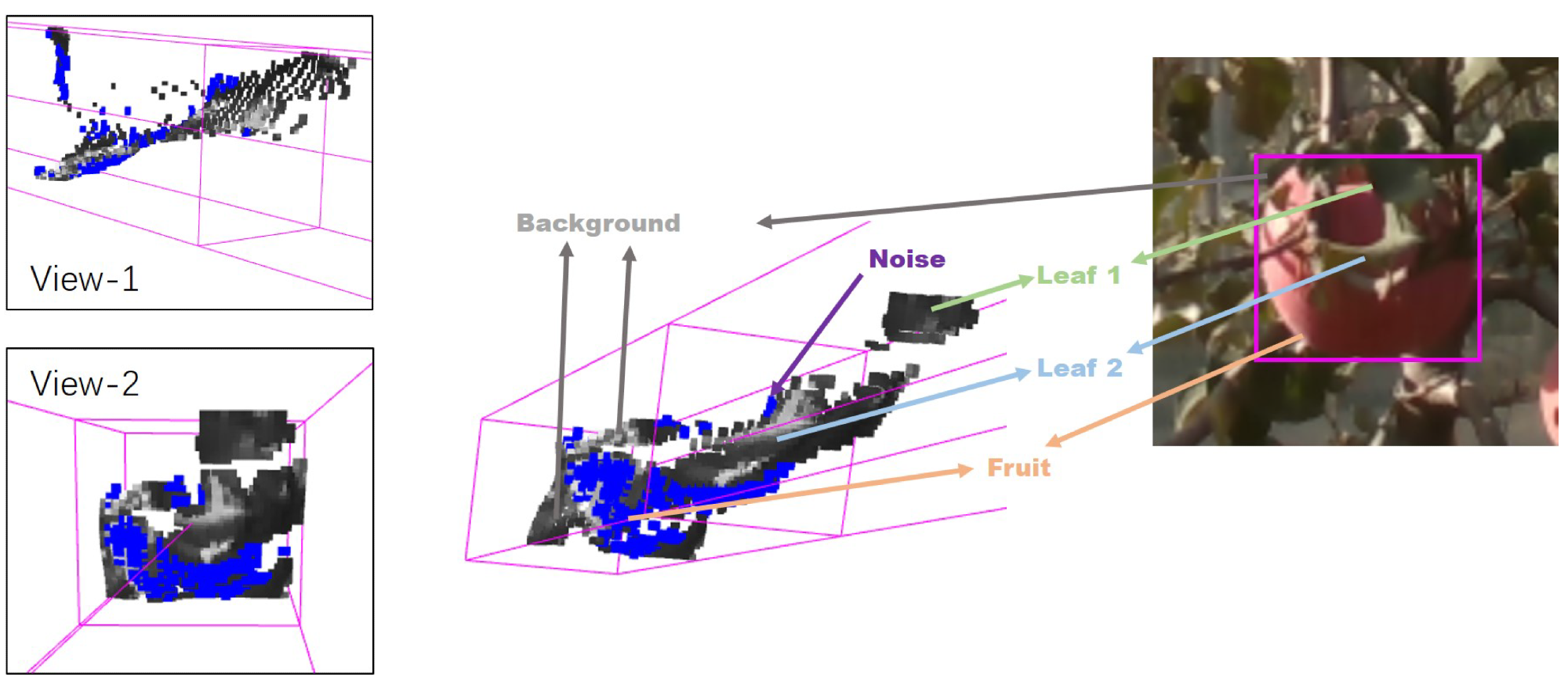

The frustum also contains non-fruit points from the background, leaves, and branches, as depicted in Figure 11. The fruit’s point cloud must therefore be separated from those of non-fruit objects, which is done in two steps:

- Generation of point clouds under the fruits’ masks, as shown in Figure 12.

- Selection of the most likely point cloud.
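The first step amounts to back-projecting only the pixels that a fruit's mask covers. The minimal sketch below assumes a depth image aligned to the RGB image, with 0 marking missing depth. It applies the standard pinhole back-projection X = (u - cx)·z/fx, Y = (v - cy)·z/fy. The function name is hypothetical.

```python
def mask_to_point_cloud(depth, mask, fx, fy, cx, cy):
    """Return 3D points (in the unit of depth) for masked pixels with valid depth.

    depth: 2D list aligned to the RGB image, 0 meaning "no measurement" (assumed).
    mask:  2D list of booleans taken from the instance-segmentation mask.
    """
    points = []
    for v, (depth_row, mask_row) in enumerate(zip(depth, mask)):
        for u, (z, m) in enumerate(zip(depth_row, mask_row)):
            if m and z > 0:  # skip background pixels and depth holes
                points.append(((u - cx) * z / fx, (v - cy) * z / fy, z))
    return points


# Toy 2x3 frame with toy intrinsics (fx = fy = 1, cx = cy = 0).
depth = [[0, 800, 810],
         [805, 0, 0]]
mask = [[True, True, False],
        [True, True, True]]
print(mask_to_point_cloud(depth, mask, 1.0, 1.0, 0.0, 0.0))
```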

Figure 11. The point cloud in a frustum of partially occluded fruits, including point clouds of fruits (in blue), leaves, the background, and noise. View 1 and View 2 are two different angles of view of the point cloud: View 1 (left front); View 2 (front). Image Credit: Li, et al., 2022

Figure 12. The generating process of point clouds and frustum from RGBD images. Image Credit: Li, et al., 2022
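The article does not explain how it selects the most likely point cloud. As a stand-in, the hypothetical helper below keeps the largest group of points that is contiguous in depth. When a depth gap separates them from the fruit, this discards leaf points in front of the fruit and background points behind it. The 20 mm gap threshold is an assumed value, and this heuristic is not the paper's selection rule.

```python
def select_main_cluster(points, gap=20.0):
    """Keep the largest group of points that is contiguous in depth.

    Points are sorted by z and split wherever two neighbors differ by more
    than `gap` (same unit as z). Simple stand-in heuristic, not the paper's rule.
    """
    if not points:
        return []
    ordered = sorted(points, key=lambda p: p[2])
    clusters, current = [], [ordered[0]]
    for prev, p in zip(ordered, ordered[1:]):
        if p[2] - prev[2] > gap:  # depth jump: start a new cluster
            clusters.append(current)
            current = []
        current.append(p)
    clusters.append(current)
    return max(clusters, key=len)


# Illustrative frustum contents in mm: leaf, fruit surface, background.
leaf = [(0, 0, 640), (1, 0, 652)]
fruit = [(0, 0, 790), (5, 0, 801), (10, 0, 812), (-5, 0, 805), (0, 5, 828)]
background = [(0, 0, 1390), (2, 0, 1402), (4, 0, 1415)]
print(select_main_cluster(leaf + fruit + background))
```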

Results and Discussion

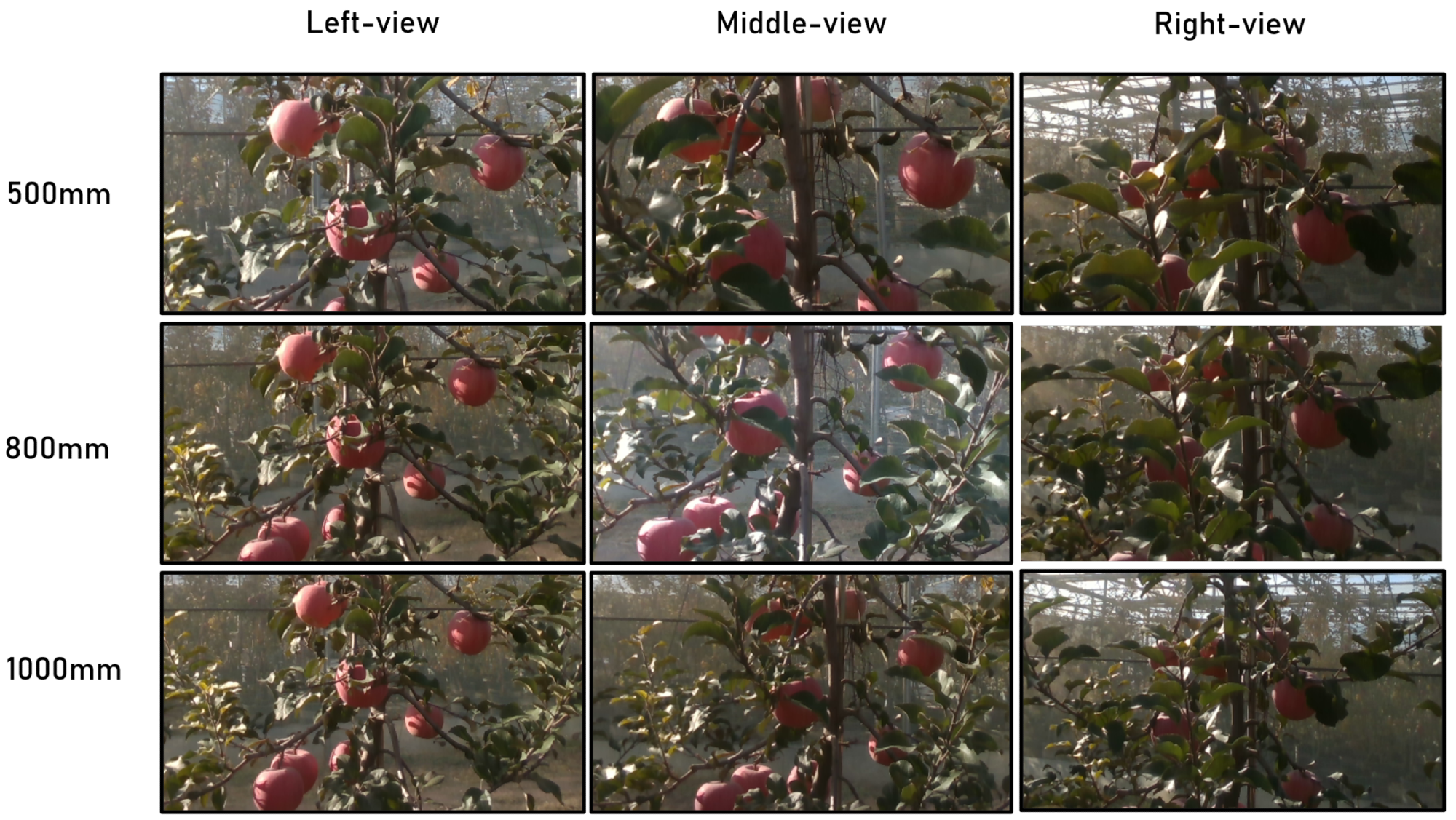

The study conducted three groups of tests at different distances from the tree row, with the target tree viewed from the right, left, and middle, as illustrated in Figure 13.

Figure 13. The fruits of the comparison tests at three different distances and views. Image Credit: Li, et al., 2022

Table 3 shows the center and radius estimation errors of the proposed method and the bounding-box-based method, both overall and for each test group.

Table 3. The experimental results of the locating performance with the proposed method and the bounding-box-based method. Source: Li, et al., 2022

| Target | Error (mm) | Group 1 (bbox-based) | Group 1 (proposed) | Group 2 (bbox-based) | Group 2 (proposed) | Group 3 (bbox-based) | Group 3 (proposed) | Total (bbox-based) | Total (proposed) |
|---|---|---|---|---|---|---|---|---|---|
| Center | Max. | 49.65 | 47.24 | 49.01 | 49.07 | 48.46 | 42.80 | 49.65 | 49.07 |
| Center | Min. | 2.24 | 0.05 | 4.79 | 0.19 | 0.67 | 1.65 | 0.67 | 0.05 |
| Center | Median | 17.16 | 5.69 | 24.43 | 8.25 | 21.44 | 14.94 | 19.77 | 8.03 |
| Center | Mean | 18.36 | 9.15 | 24.43 | 13.73 | 22.70 | 17.74 | 21.51 | 12.36 |
| Center | Std. | 11.09 | 9.99 | 13.55 | 13.10 | 13.15 | 10.38 | 12.74 | 11.59 |
| Radius | Max. | 14.47 | 1.21 | 27.11 | 6.72 | 14.84 | 5.44 | 27.11 | 6.72 |
| Radius | Min. | 0.01 | 0.54 | 0.08 | 1.18 | 0.14 | 0.26 | 0.01 | 0.26 |
| Radius | Median | 4.01 | 0.98 | 3.40 | 1.37 | 4.26 | 2.22 | 3.97 | 1.18 |
| Radius | Mean | 4.78 | 0.96 | 4.77 | 1.48 | 4.61 | 2.41 | 4.74 | 1.43 |
| Radius | Std. | 3.20 | 0.14 | 4.89 | 0.69 | 3.83 | 1.04 | 3.90 | 0.84 |
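For each group, Table 3 reports the maximum, minimum, median, mean, and standard deviation of the errors. The sketch below shows one way to compute such a summary. It assumes that the center error is the Euclidean distance between the estimated center and the true center measured by the LiDAR verification platform, and that the radius error is the absolute difference between the radii. The article defines neither metric explicitly, and the sample values below are made up.

```python
import math
import statistics


def error_summary(errors):
    """Max/min/median/mean/std of a list of errors, mirroring Table 3's rows."""
    return {
        "max": max(errors),
        "min": min(errors),
        "median": statistics.median(errors),
        "mean": statistics.mean(errors),
        # Population standard deviation; the article does not say which estimator it used.
        "std": statistics.pstdev(errors),
    }


def center_errors(estimated, ground_truth):
    """Euclidean distance between each estimated center and its true center."""
    return [math.dist(e, g) for e, g in zip(estimated, ground_truth)]


def radius_errors(estimated, ground_truth):
    """Absolute difference between estimated and true radii."""
    return [abs(e - g) for e, g in zip(estimated, ground_truth)]


# Made-up example values in millimeters (not data from the study).
est_centers = [(3.0, 4.0, 800.0), (0.0, 0.0, 810.0), (6.0, 8.0, 800.0)]
true_centers = [(0.0, 0.0, 800.0)] * 3
summary = error_summary(center_errors(est_centers, true_centers))
print(summary)
print(radius_errors([40.5, 38.0], [40.0, 40.0]))
```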

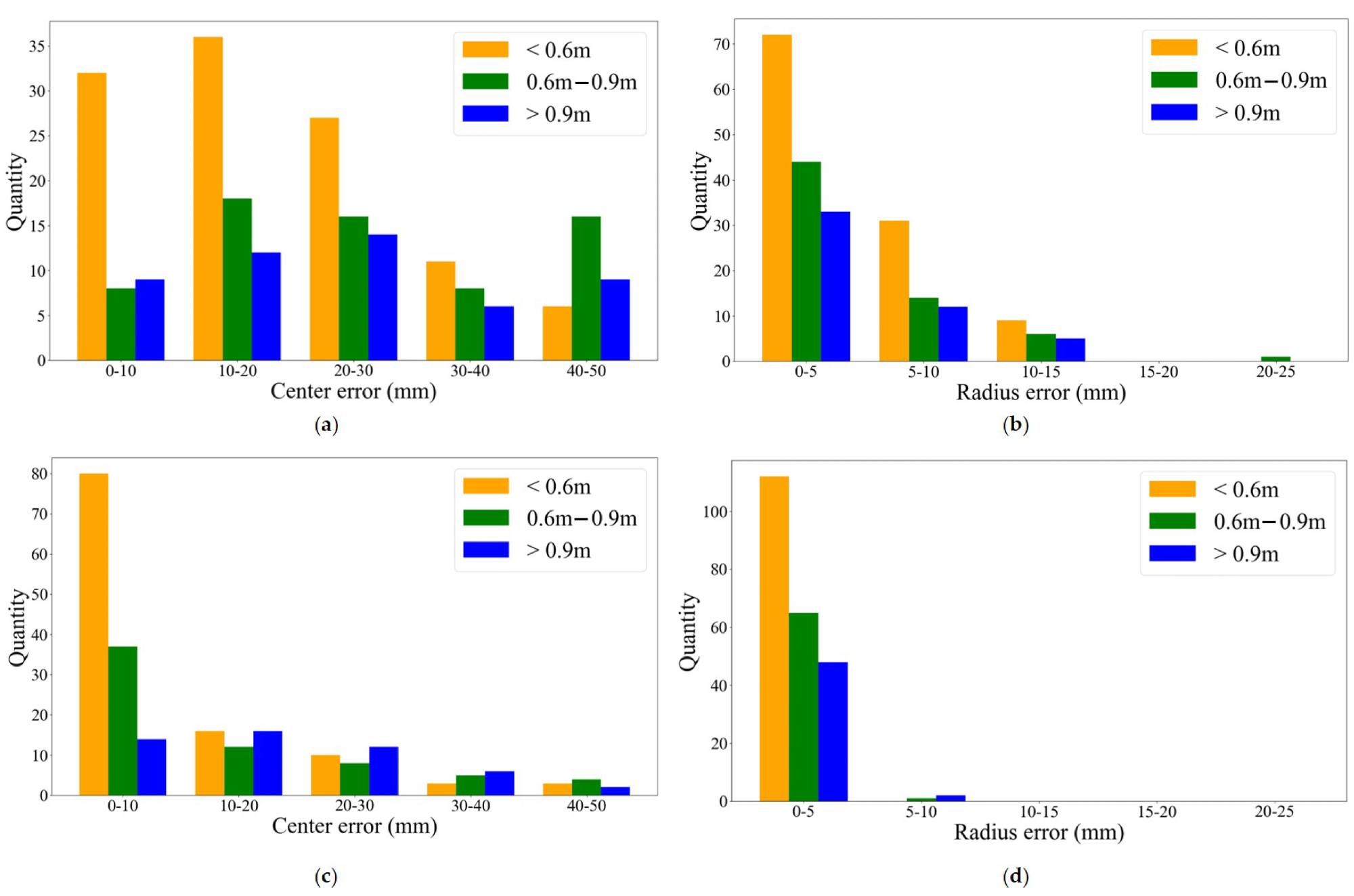

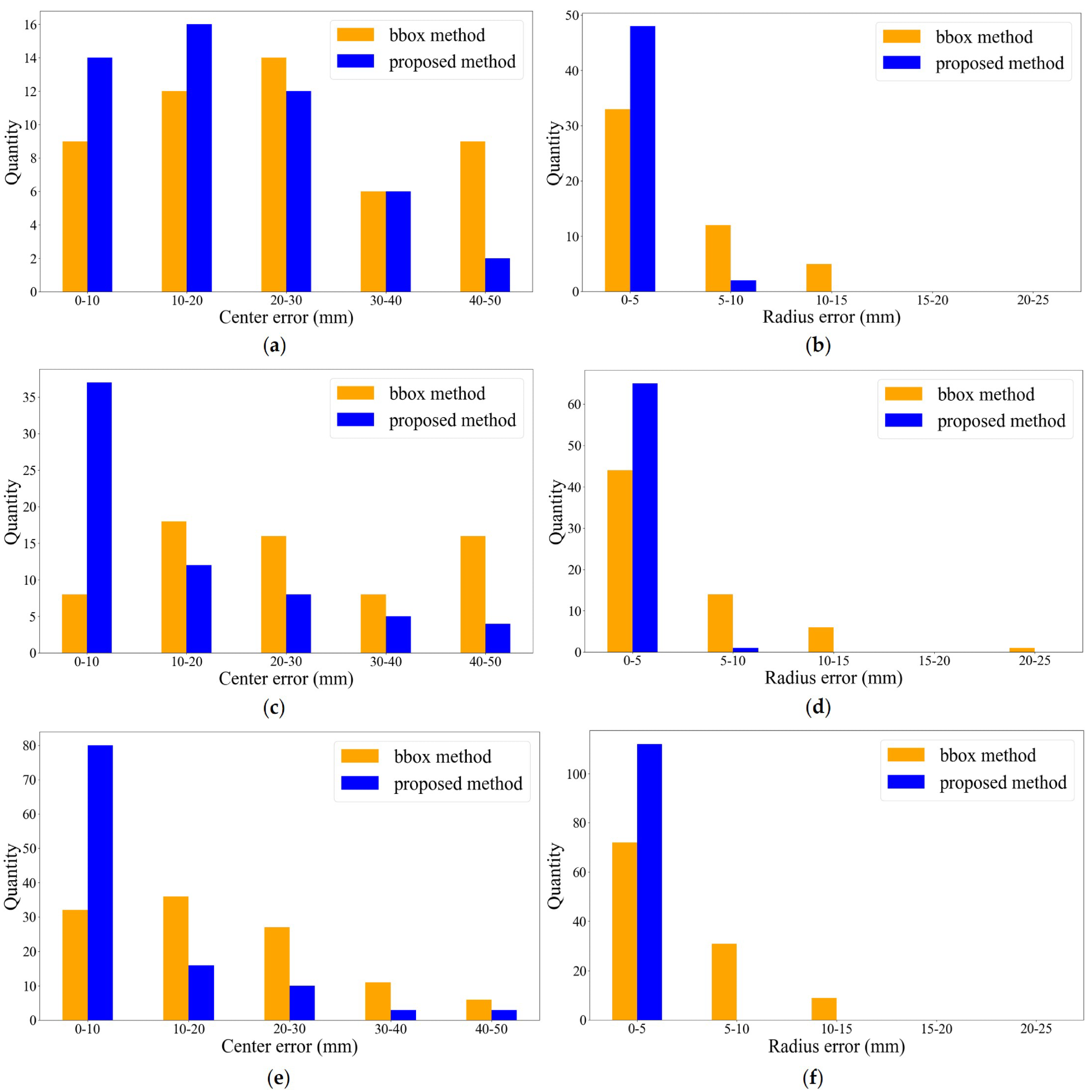

Figures 14 and 15 show how the center and radius errors vary with sensing distance and with the localization method. In each plot, the x-axis divides the errors into five groups and the y-axis gives the number of samples in each group.

Figure 14. Sample-count distributions of the center errors and radius errors at different sensing distances. (a, b) are with the bounding-box-based method; (c, d) are with the proposed method. Image Credit: Li, et al., 2022

Figure 15. Sample-count distributions of the center errors and radius errors with the proposed method and the bounding-box-based method. (a, b) are the results of Group 1; (c, d) are the results of Group 2; (e, f) are the results of Group 3. Image Credit: Li, et al., 2022
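Histograms like those in Figures 14 and 15 count how many samples fall into each of five error groups. The sketch below produces such counts. The group boundaries are hypothetical, because the article does not list the ones the study used.

```python
import bisect


def count_by_error_group(errors, edges):
    """Count errors per group, where group i is [edges[i], edges[i+1]).

    The last group is closed on the right so that the top edge is included.
    Errors outside [edges[0], edges[-1]] are ignored.
    """
    counts = [0] * (len(edges) - 1)
    for e in errors:
        if e < edges[0] or e > edges[-1]:
            continue
        i = bisect.bisect_right(edges, e) - 1
        counts[min(i, len(counts) - 1)] += 1
    return counts


# Hypothetical group edges (mm) giving five groups, and made-up errors.
edges = [0, 10, 20, 30, 40, 50]
errors = [1.0, 9.9, 10.0, 25.0, 50.0, 61.0]
print(count_by_error_group(errors, edges))
```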

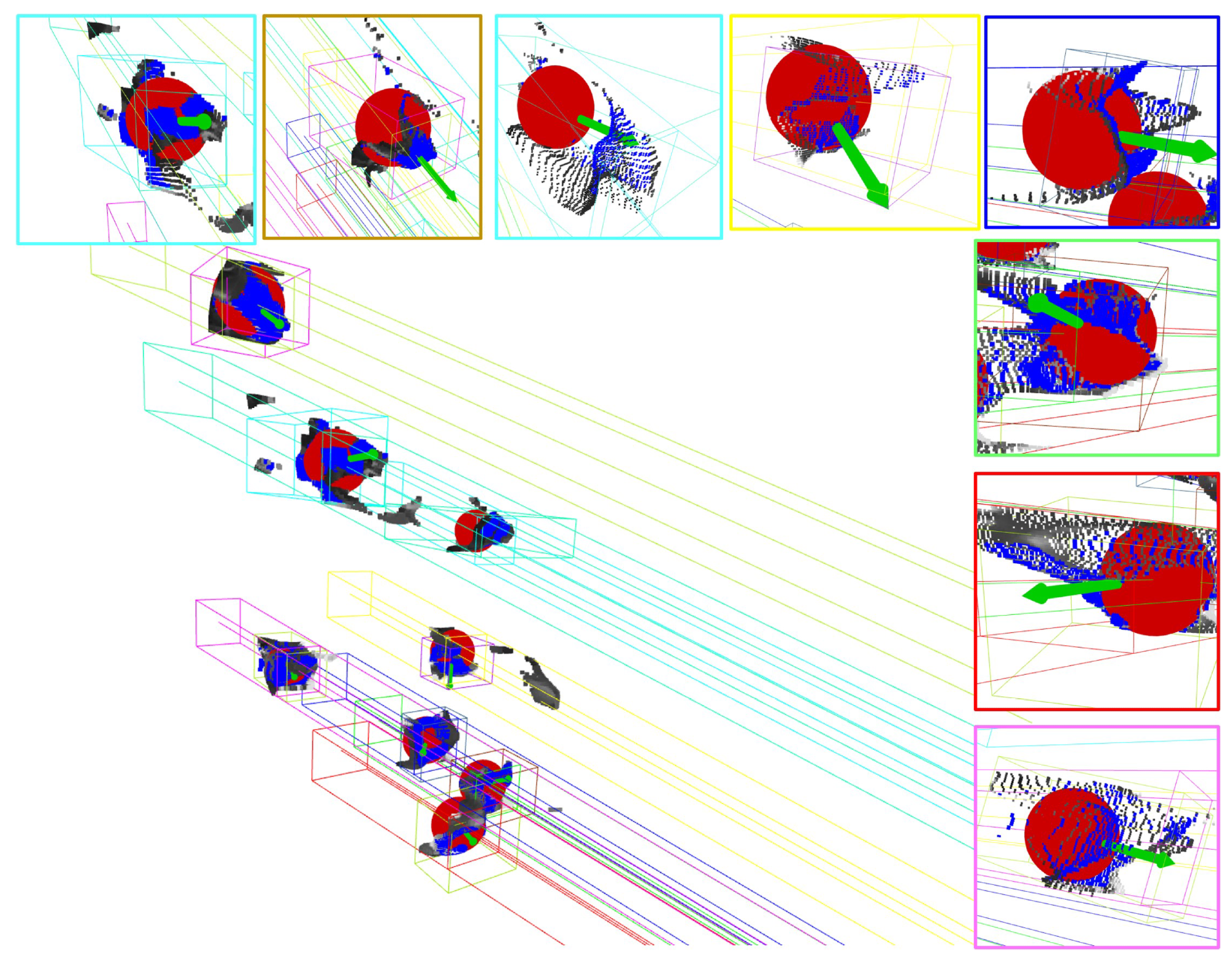

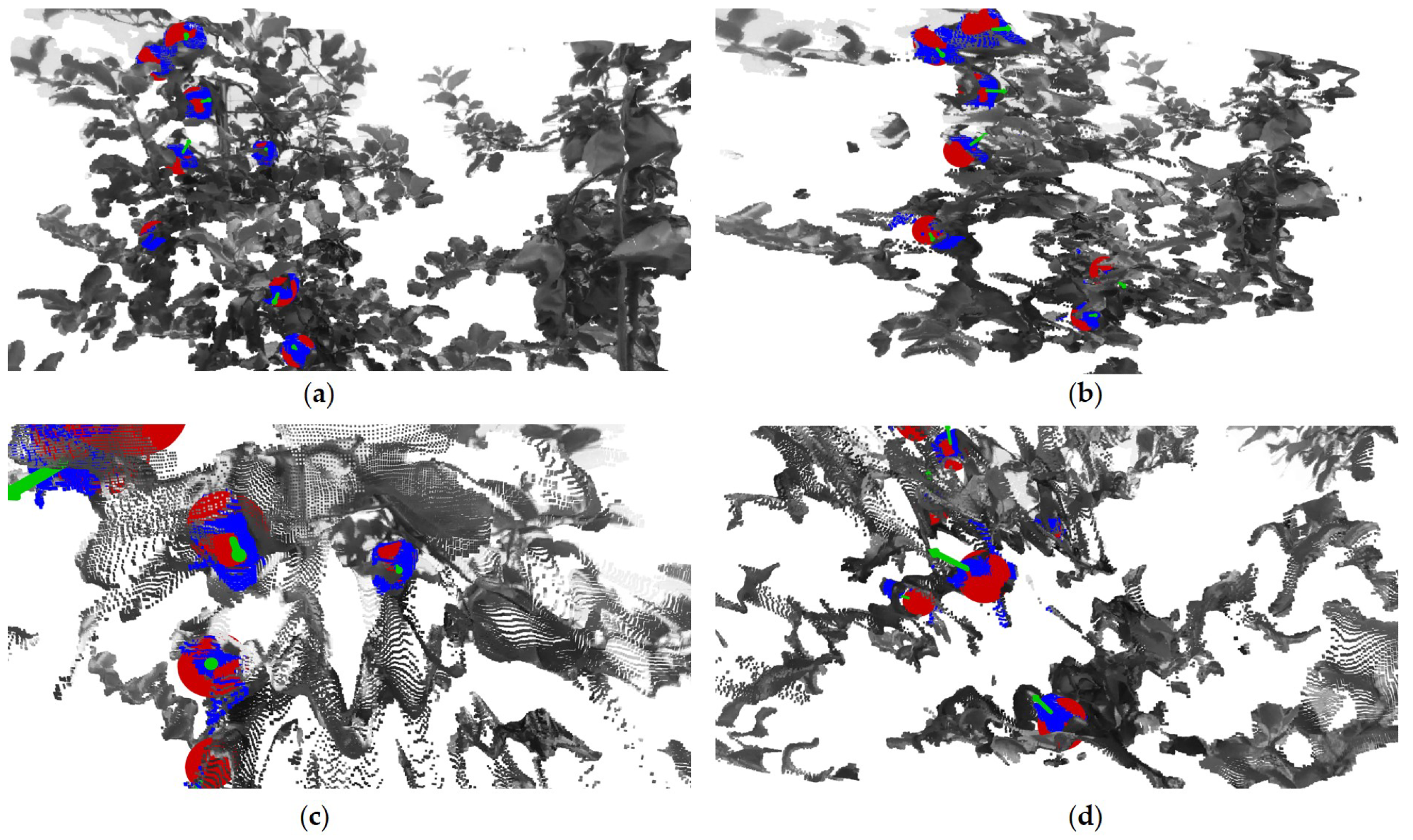

Figure 16 and Figure 17 also show experimental results of localization and approaching vector estimations.

Figure 16. The fitted spheres of fruits and their approaching vectors at 800 mm. Image Credit: Li, et al., 2022

Figure 17. The localizations and estimations of approaching vectors of fruits with the proposed method. (a–d) show four samples of experimental results with different apple trees. Image Credit: Li, et al., 2022

The study found that fruit localization accuracy also depended on how precisely the central line was extracted from the 2D bounding boxes and 2D masks.

The experiments also revealed that the depth data filling rate degraded under back-lighting because of the RGBD sensor's performance limits. As a result, the proposed method localized fruit more precisely under good lighting conditions than under back-lighting.

Conclusion

This study investigated how harvesting robots can localize apple fruits under occlusion. It proposed an instance segmentation network for apple fruit targets and a frustum-based pipeline for processing point clouds generated from RGBD images.

Orchard experiments showed that the proposed method improved fruit localization under partial occlusion. The method offers three benefits: (a) robustness to the low depth fill rate in open-air settings; (b) precision in the presence of partial occlusion; and (c) a reference approach direction for the robotic gripper when picking.

Journal Reference:

Li, T., Feng, Q., Qiu, Q., Xie, F., Zhao, C. (2022) Occluded Apple Fruit Detection and Localization with a Frustum-Based Point-Cloud-Processing Approach for Robotic Harvesting. Remote Sensing, 14(3), p. 482. Available Online: https://www.mdpi.com/2072-4292/14/3/482.

References and Further Reading

1. Zhang, Z & Heinemann, P H (2017) Economic analysis of a low-cost apple harvest-assist unit. HortTechnology, 27, pp.240–247. doi.org/10.21273/HORTTECH03548-16.
2. Zhuang, J., et al. (2019) Computer vision-based localisation of picking points for automatic litchi harvesting applications towards natural scenarios. Biosystems Engineering, 187, pp.1–20. doi.org/10.1016/j.biosystemseng.2019.08.016.
3. Ji, W., et al. (2012) Automatic recognition vision system guided for apple harvesting robot. Computers & Electrical Engineering, 38, pp.1186–1195. doi.org/10.1016/j.compeleceng.2011.11.005.
4. Zhao, D., et al. (2019) Apple positioning based on YOLO deep convolutional neural network for picking robot in complex background. Transactions of the Chinese Society of Agricultural Engineering, 35, pp.164–173.
5. Kang, H & Chen, C (2020) Fast implementation of real-time fruit detection in apple orchards using deep learning. Computers and Electronics in Agriculture, 168, p.105108. doi.org/10.1016/j.compag.2019.105108.
6. Gené-Mola, J., et al. (2019) Multi-modal deep learning for Fuji apple detection using RGBD cameras and their radiometric capabilities. Computers and Electronics in Agriculture, 162, pp.689–698. doi.org/10.1016/j.compag.2019.05.016.
7. Fu, L., et al. (2020) Faster R–CNN–based apple detection in dense-foliage fruiting-wall trees using RGB and depth features for robotic harvesting. Biosystems Engineering, 197, pp.245–256. doi.org/10.1016/j.biosystemseng.2020.07.007.
8. Gené-Mola, J., et al. (2020) Fruit detection and 3D location using instance segmentation neural networks and structure-from-motion photogrammetry. Computers and Electronics in Agriculture, 169, p.105165. doi.org/10.1016/j.compag.2019.105165.
9. Quan, L., et al. (2021) An Instance Segmentation-Based Method to Obtain the Leaf Age and Plant Centre of Weeds in Complex Field Environments. Sensors, 21, p.3389. doi.org/10.3390/s21103389.
10. Liu, H., et al. (2021) Real-time Instance Segmentation on the Edge. arXiv:2012.12259 [cs.CV].
11. Dandan, W & Dongjian, H (2019) Recognition of apple targets before fruits thinning by robot based on R-FCN deep convolution neural network. Transactions of the Chinese Society of Agricultural Engineering, 35, pp.156–163.
12. Kang, H & Chen, C (2020) Fruit detection, segmentation and 3D visualisation of environments in apple orchards. Computers and Electronics in Agriculture, 171, p.105302. doi.org/10.1016/j.compag.2020.105302.
13. Zhang, J., et al. (2020) Multi-class object detection using faster R-CNN and estimation of shaking locations for automated shake-and-catch apple harvesting. Computers and Electronics in Agriculture, 173, p.105384. doi.org/10.1016/j.compag.2020.105384.
14. Yan, B., et al. (2021) A Real-Time Apple Targets Detection Method for Picking Robot Based on Improved YOLOv5. Remote Sensing, 13, p.1619. doi.org/10.3390/rs13091619.
15. Zhao, Y., et al. (2016) A review of key techniques of vision-based control for harvesting robot. Computers and Electronics in Agriculture, 127, pp.311–323. doi.org/10.1016/j.compag.2016.06.022.
16. Buemi, F., et al. (1996) The agrobot project. Advances in Space Research, 18, pp.185–189. doi.org/10.1016/0273-1177(95)00807-Q.
17. Kitamura, S & Oka, K (2005) Recognition and cutting system of sweet pepper for picking robot in greenhouse horticulture. In Proceedings of the IEEE International Conference Mechatronics and Automation, Niagara Falls, ON, Canada, 29 July–1 August, 4, pp.1807–1812.
18. Xiang, R., et al. (2014) Recognition of clustered tomatoes based on binocular stereo vision. Computers and Electronics in Agriculture, 106, pp.75–90. doi.org/10.1016/j.compag.2014.05.006.
19. Plebe, A & Grasso, G (2001) Localization of spherical fruits for robotic harvesting. Machine Vision and Applications, 13, pp.70–79. doi.org/10.1007/PL00013271.
20. Gongal, A., et al. (2016) Apple crop-load estimation with over-the-row machine vision system. Computers and Electronics in Agriculture, 120, pp.26–35. doi.org/10.1016/j.compag.2015.10.022.
21. Grosso, E & Tistarelli, M (1995) Active/dynamic stereo vision. IEEE Transactions on Pattern Analysis and Machine Intelligence, 17, pp.868–879. doi.org/10.1109/34.406652.
22. Liu, Z., et al. (2019) Improved kiwifruit detection using pre-trained VGG16 with RGB and NIR information fusion. IEEE Access, 8, pp.2327–2336. doi.org/10.1109/ACCESS.2019.2962513.
23. Tu, S., et al. (2020) Passion fruit detection and counting based on multiple scale faster R-CNN using RGBD images. Precision Agriculture, 21, pp.1072–1091. doi.org/10.1007/s11119-020-09709-3.
24. Zhang, Z., et al. (2018) A review of bin filling technologies for apple harvest and postharvest handling. Applied Engineering in Agriculture, 34, pp.687–703. doi.org/10.13031/aea.12827.
25. Milella, A., et al. (2019) In-field high throughput grapevine phenotyping with a consumer-grade depth camera. Computers and Electronics in Agriculture, 156, pp.293–306. doi.org/10.1016/j.compag.2018.11.026.
26. Arad, B., et al. (2020) Development of a sweet pepper harvesting robot. Journal of Field Robotics, 37, pp.1027–1039. doi.org/10.1002/rob.21937.
27. Zhang, Y., et al. (2019) Segmentation of apple point clouds based on ROI in RGB images. Inmateh Agricultural Engineering, 59, pp.209–218. doi.org/10.35633/inmateh-59-23.
28. Lehnert, C., et al. (2016) Sweet pepper pose detection and grasping for automated crop harvesting. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May; pp.2428–2434.
29. Lehnert, C., et al. (2017) Autonomous Sweet Pepper Harvesting for Protected Cropping Systems. IEEE Robotics and Automation Letters, 2, pp.872–879. doi.org/10.1109/LRA.2017.2655622.
30. Yaguchi, H., et al. (2016) Development of an autonomous tomato harvesting robot with rotational plucking gripper. In Proceedings of the 2016 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Korea, 9–14 October 2016; pp.652–657.
31. Lin, G., et al. (2019) Guava Detection and Pose Estimation Using a Low-Cost RGBD Sensor in the Field. Sensors, 19, p.428. doi.org/10.3390/s19020428.
32. Tao, Y & Zhou, J (2017) Automatic apple recognition based on the fusion of color and 3D feature for robotic fruit picking. Computers and Electronics in Agriculture, 142, pp.388–396. doi.org/10.1016/j.compag.2017.09.019.
33. Kang, H., et al. (2020) Visual Perception and Modeling for Autonomous Apple Harvesting. IEEE Access, 8, pp.62151–62163. doi.org/10.1109/ACCESS.2020.2984556.
34. Häni, N., et al. (2020) A benchmark dataset for apple detection and segmentation. IEEE Robotics and Automation Letters, 5, pp.852–858. doi.org/10.1109/LRA.2020.2965061.
35. Keskar, N S & Socher, R (2017) Improving generalization performance by switching from adam to sgd. arXiv:1712.07628.
36. Sahin, C., et al. (2020) A review on object pose recovery: From 3d bounding box detectors to full 6d pose estimators. Image and Vision Computing, 96, p.103898. doi.org/10.1016/j.imavis.2020.103898.
37. Magistri, F., et al. (2021) Towards In-Field Phenotyping Exploiting Differentiable Rendering with Self-Consistency Loss. In Proceedings of the 2021 IEEE International Conference on Robotics and Automation (ICRA), Xi’an, China, 30 May–5 June 2021; pp.13960–13966.
38. Bellocchio, E., et al. (2020) Combining domain adaptation and spatial consistency for unseen fruits counting: A quasi-unsupervised approach. IEEE Robotics and Automation Letters, 5, pp.1079–1086. doi.org/10.1109/LRA.2020.2966398.
39. Ge, Y., et al. (2020) Symmetry-based 3D shape completion for fruit localisation for harvesting robots. Biosystems Engineering, 197, pp.188–202. doi.org/10.1016/j.biosystemseng.2020.07.003.