Synthetic speech has been a familiar technology for years. However, with advances in speech recognition and generation, it is now possible to endow this artificial speech with ‘emotion’.

Image Credit: Lightspring/Shutterstock.com

Researchers at Hitachi R&D Group and the University of Tsukuba in Japan have developed methods for doing this and tested them in a trial investigating how emotional speech affects the mood of elderly participants. They showed that appropriate emotional speech, timed in line with the body’s circadian rhythm, positively affected mood.

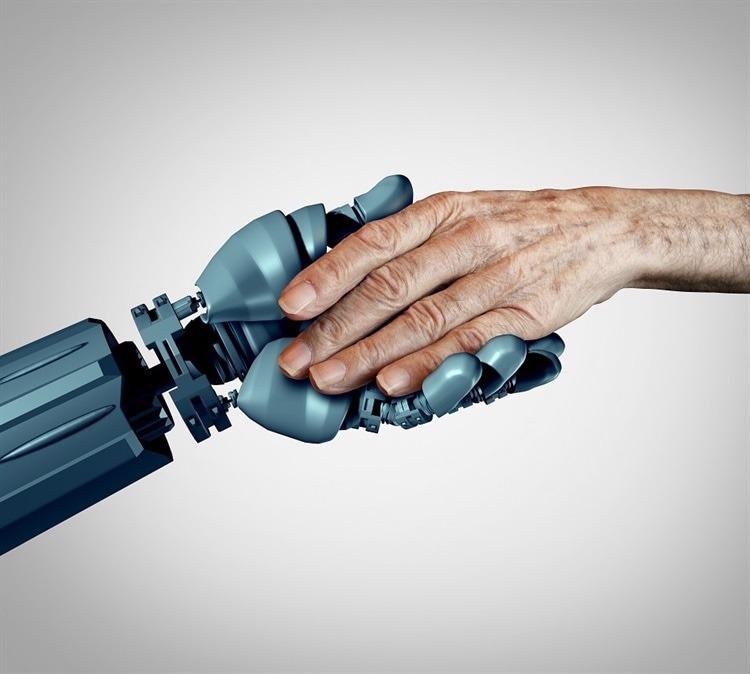

Merging AI and Robotics

The fields of robotics and artificial intelligence (AI) have merged significantly over the past decade. This combination has produced more dexterous robotic systems, capable of interacting with their environment accurately and skillfully.

While most of these technologies are found in manufacturing, robotics is increasingly used in healthcare, primarily in caregiving settings. This is driven by the growing elderly population worldwide, many of whom require dedicated day-to-day care. However, with healthcare systems under increasing strain globally, there are simply not enough resources to provide the services these individuals require.

Studies have shown that loneliness can have profoundly negative effects on mental health and is associated with conditions such as depression, anxiety and dementia. It is therefore important to address the availability of care.

Developments in Care Robots

Care robots have been developed for various settings and are commonly used to complete manual tasks such as patient transportation or meal delivery. However, in situations where a more human touch is required, these devices fall short.

One approach has been to build robotic devices with artificial human-like skin that can make human-like facial expressions. Devices such as these have successfully taught children with autism how to associate different facial features with their underlying emotions.

Other approaches target the emotional cues heard in speech to promote positive effects in patients.

Researchers at Hitachi R&D Group and the University of Tsukuba in Japan have developed such a system. It contains an AI model that generates speech that sounds natural and can also replicate the different emotions heard in the human voice.

The speech is designed to imitate that of a professional caregiver. The intention is for models like this to be used in systems that provide companionship, support, and guidance for those who need it.

How the Model Works

A system capable of generating emotional speech must first be trained to do so by providing it with samples of emotional speech.

These samples are then used to train two components: an emotion recognizer, which classifies the emotion in speech, and a speech synthesis model, which generates emotional speech. Once trained, the two are combined into a single synthesizer model that can generate emotional speech.

The emotion recognizer serves to guide the speech synthesizer depending on a ‘target emotion’ – which will be determined by the needs of the user.
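One simple way to picture a recognizer "guiding" a synthesizer is candidate selection: synthesize several variants, score each with the recognizer, and keep the one that best matches the target emotion. The Python sketch below illustrates only that idea. It is not the researchers' method, and every name in it (`toy_synthesize`, `toy_recognize`, the `style` knob, the three emotion labels) is a hypothetical stand-in rather than part of any real system.

```python
from dataclasses import dataclass
from typing import Dict, Sequence


@dataclass
class Utterance:
    text: str
    style: float  # hypothetical "expressiveness" knob in [0, 1]


def toy_synthesize(text: str, style: float) -> Utterance:
    """Stand-in for a speech synthesis model; returns a tagged object, not audio."""
    return Utterance(text=text, style=style)


def toy_recognize(utt: Utterance) -> Dict[str, float]:
    """Stand-in for an emotion recognizer: maps the style knob to class probabilities."""
    s = utt.style
    scores = {
        "calm": 1.0 - s,                      # strongest at style 0
        "neutral": 1.0 - abs(s - 0.5) * 2.0,  # strongest at style 0.5
        "happy": s,                           # strongest at style 1
    }
    total = sum(scores.values())  # always >= 1, so the division is safe
    return {label: value / total for label, value in scores.items()}


def synthesize_with_target(
    text: str,
    target: str,
    styles: Sequence[float] = (0.0, 0.25, 0.5, 0.75, 1.0),
) -> Utterance:
    """Generate one candidate per style and keep the one the recognizer
    scores highest for the target emotion."""
    candidates = [toy_synthesize(text, s) for s in styles]
    return max(candidates, key=lambda u: toy_recognize(u)[target])


if __name__ == "__main__":
    best = synthesize_with_target("Good morning, how did you sleep?", "happy")
    print(best.style, toy_recognize(best))
```

In a real system the recognizer would more likely shape the synthesizer during training than filter its output afterwards; the selection loop here is just the easiest form of guidance to show in a few lines.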

In the investigation, the users of this system were elderly patients. The aim was to see how this system would affect their mood when the emotional speech was aligned with the body’s natural sleep-wake cycle (the circadian rhythm).

The participants were asked to listen to speech synthesized by the model at different points of the day and then self-report how this made them feel. They were asked to evaluate how the speech affected their arousal levels (how awake or tired they were) and their valence levels (how positive or negative they felt).

These two metrics, valence and arousal, are common measures used to quantify and classify different emotional states. The results of the study showed that particular speech samples were able to make the participants feel more energetic during the daytime, while others proved to be soothing enough to calm them down during the night.
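To make the two-dimensional picture concrete, the following Python sketch maps a pair of ratings onto four coarse emotional states. The scale (both values in [-1, 1], with 0 as the neutral midpoint) and the four labels are assumptions chosen for illustration; they are not the rating scale or categories used in the study.

```python
def classify_affect(valence: float, arousal: float) -> str:
    """Map a (valence, arousal) pair to a coarse quadrant label.

    Assumes both ratings lie in [-1, 1], with 0 as the neutral midpoint.
    The labels are illustrative, not taken from the study.
    """
    if arousal >= 0:
        # High arousal: positive valence reads as excited, negative as tense.
        return "excited" if valence >= 0 else "tense"
    # Low arousal: positive valence reads as calm, negative as tired or sad.
    return "calm" if valence >= 0 else "tired or sad"


if __name__ == "__main__":
    for rating in [(0.6, 0.7), (0.5, -0.4), (-0.5, 0.8), (-0.3, -0.6)]:
        print(rating, "->", classify_affect(*rating))
```

On this picture, energizing daytime speech aims for the high-arousal, positive-valence quadrant, while soothing night-time speech aims for the low-arousal, positive-valence one.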

These results show that not only can a computer system synthesize speech expressing different emotions, but that this speech can also affect the self-reported arousal and mood of the listener. When aligned with a person’s circadian rhythm, such a system is likely to be useful in helping people get up in the morning and fall asleep.
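Aligning speech with the sleep-wake cycle can be sketched as a lookup from the time of day to a target emotion. In the Python example below, the wake and wind-down times and the two labels are hypothetical defaults chosen for illustration; the article does not state the schedule used in the trial.

```python
from datetime import time


def target_emotion_for(
    now: time,
    wake: time = time(7, 0),        # assumed start of the active period
    wind_down: time = time(20, 0),  # assumed start of the rest period
) -> str:
    """Pick a target emotion for synthesized speech from the time of day.

    Returns "energizing" during the active period and "calming" otherwise.
    """
    return "energizing" if wake <= now < wind_down else "calming"


if __name__ == "__main__":
    for t in (time(9, 0), time(22, 30), time(3, 0)):
        print(t, "->", target_emotion_for(t))
```

A deployed system would presumably adapt these boundaries to each person's routine rather than use fixed clock times.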

Future Outlook

This research provides a basis for further development in human-computer interaction within care settings. Further research could see these models built into robotic systems to provide 24/7 care.

Because these models can already detect emotion in speech, it is not much of a stretch to imagine future systems that react emotionally to our own speech.

References and Further Reading

Homma, T., Sun, Q., Fujioka, T., Takawaki, R., Ankyu, E., Nagamatsu, K., Sugawara, D. and Harada, E.T., (2021) Emotional Speech Synthesis for Companion Robot to Imitate Professional Caregiver Speech. Available at: https://arxiv.org/abs/2109.12787

Killeen, C., (1998) Loneliness: an epidemic in modern society. Journal of Advanced Nursing, 28(4), pp.762-770. Available at: https://doi.org/10.1046/j.1365-2648.1998.00703.x

Broekens, J., Heerink, M. and Rosendal, H., (2009) Assistive social robots in elderly care: a review. Gerontechnology, 8(2), pp.94-103. Available at: https://psycnet.apa.org/record/2009-10609-006
