Social robots are becoming a likely part of the future for industries that require human interaction. As a result, many researchers are striving to design robots with realistic emotional expression, particularly through facial expressions, full-body gestures, and voice.

Image Credit: OlegDoroshin/Shutterstock.com

For many people, affective touch is crucial for expressing emotions; both the placement and the type of touch can convey basic emotions such as joy and sadness. Accurately modeling this behavior can help researchers design more realistic social robots.

A new study published in the journal Frontiers in Robotics and AI has, for the first time, evaluated how people perceive touch behaviors from a social robot. The researchers implemented a model that enables an android named ERICA to express complex emotions through touch, focusing on two contrasting emotions: heartwarming and horror.

Methodology and Experiment

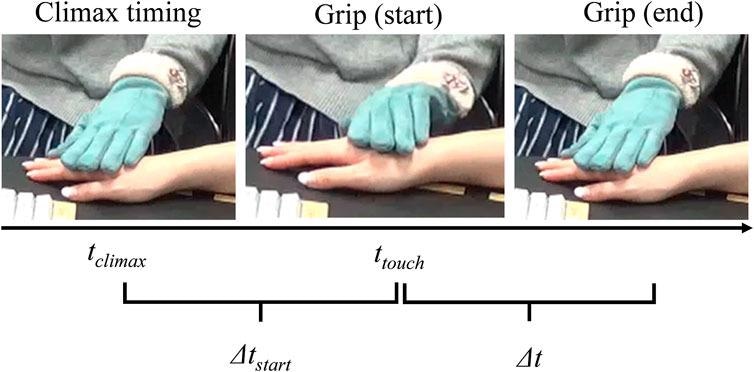

The study modeled touch behaviors that express heartwarming and horror emotions. To collect the data for these models, participants watched heartwarming and horror video stimuli together with the robot and adjusted the timing and duration of the robot's touch (grip) themselves until it felt natural (see Figure 1).

Participant and ERICA watch a video. Image Credit: Shiomi, et al., (2021)

To investigate whether the approach also helps reproduce a different kind of emotion, the researchers examined a negative emotion (horror) as a counterpart to the target positive emotion (heartwarming). Horror was chosen because it can trigger several emotions, including fear, surprise, and disgust. Video stimuli were used to elicit these emotions.

The study used ERICA, an android with a feminine appearance. Her motor control system updated all actuators on a 50 ms cycle (20 Hz), and she wore gloves to avoid a mismatch between her humanlike appearance and the feel of her touch.
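Because every actuator command lands on a 50 ms cycle, any grip timing a model produces has to be snapped to that grid before the robot can execute it. The Python sketch below shows one way to do this; the function name `to_control_ticks` and the choice to round to the nearest tick are assumptions for illustration, not details of ERICA's actual controller.

```python
from __future__ import annotations

CONTROL_PERIOD_S = 0.050  # 50 ms actuator update cycle, as reported in the article


def to_control_ticks(onset_s: float, duration_s: float,
                     period_s: float = CONTROL_PERIOD_S) -> tuple[int, int]:
    """Hypothetical helper: map a planned grip onset and duration (seconds)
    onto the control-cycle grid.

    Returns (start_tick, n_ticks). Rounding to the nearest tick is an assumption;
    Python's round() breaks exact .5 ties toward the even integer.
    """
    if period_s <= 0:
        raise ValueError("control period must be positive")
    start_tick = round(onset_s / period_s)
    # A grip must last at least one control cycle to be executed at all.
    n_ticks = max(1, round(duration_s / period_s))
    return start_tick, n_ticks
```

For example, `to_control_ticks(12.34, 1.0)` yields `(247, 20)`. Rounding to the nearest tick shifts a grip onset by at most half a cycle (25 ms).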

While the two watched the video stimuli together, ERICA gripped the participant's right hand.

Implementing the model requires four parameters: (1) the most appropriate climax timing of each video, $t_{\text{climax}}$; (2) the time at which the robot should start its grip as a reaction to (or in anticipation of) the climax, $t_{\text{touch}}$; (3) the grip duration, $\Delta t$; and (4) $\Delta t_{\text{start}} = t_{\text{climax}} - t_{\text{touch}}$, the interval between the touch onset and the climax, which is used to extract the timing features (see Figure 2). A positive $\Delta t_{\text{start}}$ therefore means the grip begins before the climax.

Illustration of $t_{\text{climax}}$, $t_{\text{touch}}$, $\Delta t$, and $\Delta t_{\text{start}}$. Image Credit: Shiomi, et al., (2021)

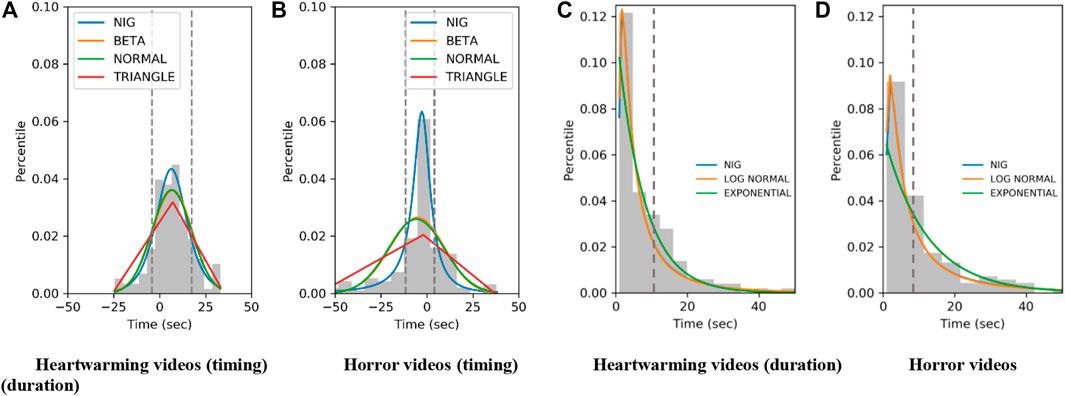
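The relationship between these quantities is simple arithmetic, and the sign of the onset offset carries the key information: a positive value means the grip begins before the climax. The hypothetical `GripTrial` record below (its name and fields are illustrative, and measuring times in seconds from the start of the video is an assumed convention) computes the offset for a single observed grip.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GripTrial:
    """Hypothetical record of one grip; times in seconds from video start."""
    t_climax: float  # most appropriate climax timing of the video
    t_touch: float   # time at which the grip starts
    duration: float  # grip duration (delta t)

    def __post_init__(self) -> None:
        if self.duration <= 0:
            raise ValueError("grip duration must be positive")

    @property
    def delta_t_start(self) -> float:
        # delta_t_start = t_climax - t_touch; positive => grip starts before the climax
        return self.t_climax - self.t_touch

    def starts_before_climax(self) -> bool:
        return self.delta_t_start > 0
```

With made-up values, `GripTrial(t_climax=95.0, t_touch=93.5, duration=2.0).delta_t_start` is `1.5`, i.e. an anticipatory grip 1.5 s before the climax.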

Figure 3 shows histograms of the collected grip timings and durations, together with the normal-inverse Gaussian (NIG) functions fitted to them.

Histograms of grip timing and duration and fitting results with functions. (A) Heartwarming videos (timing). (B) Horror videos (timing). (C) Heartwarming videos (duration). (D) Horror videos (duration). Image Credit: Shiomi, et al., (2021)
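The NIG distribution has four parameters: α (tail heaviness), β (asymmetry, with |β| < α), μ (location), and δ (scale). The article does not say how the NIG functions were fitted to the histograms, so the Python sketch below swaps in a method-of-moments fit, which matches the sample mean, variance, skewness, and excess kurtosis in closed form. It is chosen for brevity and is not necessarily the authors' procedure; maximum-likelihood fitting (for example via SciPy's `norminvgauss` distribution) is a common alternative.

```python
import math
from statistics import fmean


def sample_moments(xs):
    """Mean, variance, skewness and excess kurtosis (population formulas)."""
    n = len(xs)
    m = fmean(xs)
    m2 = sum((x - m) ** 2 for x in xs) / n
    m3 = sum((x - m) ** 3 for x in xs) / n
    m4 = sum((x - m) ** 4 for x in xs) / n
    return m, m2, m3 / m2 ** 1.5, m4 / m2 ** 2 - 3.0


def nig_moments(alpha, beta, mu, delta):
    """Theoretical moments of NIG(alpha, beta, mu, delta); useful as a check."""
    gamma = math.sqrt(alpha ** 2 - beta ** 2)
    mean = mu + delta * beta / gamma
    var = delta * alpha ** 2 / gamma ** 3
    skew = 3.0 * beta / (alpha * math.sqrt(delta * gamma))
    exkurt = 3.0 * (1.0 + 4.0 * beta ** 2 / alpha ** 2) / (delta * gamma)
    return mean, var, skew, exkurt


def nig_from_moments(mean, var, skew, exkurt):
    """Method-of-moments estimate of (alpha, beta, mu, delta)."""
    if var <= 0 or 3.0 * exkurt <= 5.0 * skew ** 2:
        raise ValueError("moments are outside the range an NIG can reproduce")
    zeta = 9.0 / (3.0 * exkurt - 4.0 * skew ** 2)  # zeta = delta * gamma
    rho = skew * math.sqrt(zeta) / 3.0              # rho = beta / alpha
    gamma = math.sqrt(zeta / (var * (1.0 - rho ** 2)))
    delta = zeta / gamma
    alpha = gamma / math.sqrt(1.0 - rho ** 2)
    beta = rho * alpha
    mu = mean - delta * beta / gamma
    return alpha, beta, mu, delta
```

Given a list of observed onset offsets, `nig_from_moments(*sample_moments(offsets))` returns the fitted parameters. A moment fit exists only when 3 × excess kurtosis > 5 × skewness², so a strongly skewed but light-tailed histogram would need a different fitting method.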

The touch timing and duration models were built from this human-robot touch-interaction data to identify the touch timings and durations best suited to conveying each emotion. According to the resulting models, touching before the climax with a relatively long duration is ideal for expressing horror, while touching after the climax with a relatively short duration is ideal for expressing heartwarming emotion.
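The article does not describe how the robot turns the fitted models into a concrete grip. One plausible approach, assumed here, is to draw an onset offset (climax time minus touch time) and a duration from the two NIG models each time a climax is known, then start the grip at the climax time minus the offset. The Python sketch below draws NIG variates using the standard normal variance-mean mixture, with an inverse Gaussian mixing variable sampled by the Michael-Schucany-Haas method. The helper names and the values in `HYPOTHETICAL_TIMING` and `HYPOTHETICAL_DURATION` are invented for illustration and are not the study's fitted parameters; they merely place the grip before the climax, as the horror model does.

```python
import math
import random

# Made-up (alpha, beta, mu, delta) values for illustration only; NOT the study's fits.
HYPOTHETICAL_TIMING = (3.0, 1.0, 0.5, 1.0)    # offset = t_climax - t_touch, seconds
HYPOTHETICAL_DURATION = (2.0, 1.0, 1.5, 1.0)  # grip duration, seconds


def sample_inverse_gaussian(mean, shape, rng):
    """Michael-Schucany-Haas sampler for the inverse Gaussian IG(mean, shape)."""
    y = rng.gauss(0.0, 1.0) ** 2
    x = (mean + mean ** 2 * y / (2.0 * shape)
         - mean / (2.0 * shape) * math.sqrt(4.0 * mean * shape * y + (mean * y) ** 2))
    if rng.random() <= mean / (mean + x):
        return x
    return mean ** 2 / x


def sample_nig(alpha, beta, mu, delta, rng):
    """Draw from NIG(alpha, beta, mu, delta) as a normal variance-mean mixture."""
    if not (alpha > abs(beta) and delta > 0):
        raise ValueError("need alpha > |beta| and delta > 0")
    gamma = math.sqrt(alpha ** 2 - beta ** 2)
    v = sample_inverse_gaussian(delta / gamma, delta ** 2, rng)  # mixing variance
    return mu + beta * v + math.sqrt(v) * rng.gauss(0.0, 1.0)


def plan_grip(t_climax, timing_params, duration_params, rng):
    """Hypothetical planner: return (t_touch, duration) for one climax, in seconds."""
    offset = sample_nig(*timing_params, rng)
    duration = sample_nig(*duration_params, rng)
    while duration <= 0:  # resample: a grip must last a positive time (an assumption)
        duration = sample_nig(*duration_params, rng)
    return t_climax - offset, duration
```

Calling `plan_grip(100.0, HYPOTHETICAL_TIMING, HYPOTHETICAL_DURATION, random.Random(1))` returns one randomized grip plan; using a dedicated `random.Random` instance keeps runs reproducible. Sampling (rather than always using the distribution's peak) is itself a design choice the article does not settle.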

If the models are accurate, the robot's touch should be perceived as more natural, and participants should be more likely to feel empathy toward the robot.

The researchers recruited 16 participants (eight women and eight men) with diverse backgrounds, aged 21 to 48 (mean age 34). The experiment used a within-participants design, so every participant experienced all conditions.

To evaluate the perceived naturalness of ERICA's touch behaviors, participants rated two items: the naturalness of the touch and the naturalness of the touch timing.

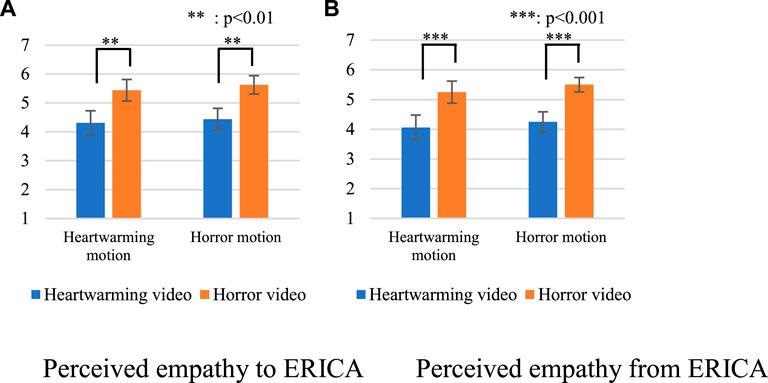

Participants were also asked about empathy in two directions: their perceived empathy toward ERICA and the empathy they perceived from ERICA.

The only emotional signal from the robot was her grip behavior; she uttered nothing throughout the whole experiment and maintained a neutral facial expression.

In addition, participants were asked to choose the top two emotions they perceived (Q5 and Q6) from Ekman's six basic emotions (anger, disgust, fear, happiness, sadness, and surprise). For each question, they selected one emotion from the six candidates using radio buttons.

Results

Table 1 shows the aggregated counts of the perceived emotions.

Table 1. Perceived emotion from robot's touch. Source: Shiomi, et al., (2021)

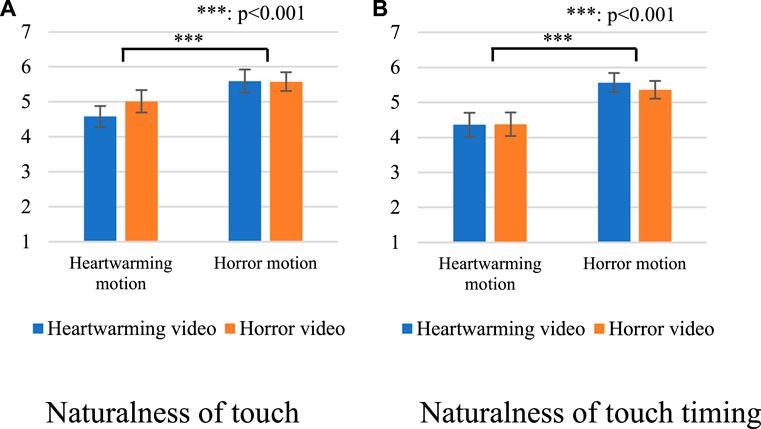
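The article does not spell out how the counts in Table 1 combine the two choices. Assuming each participant's first (Q5) and second (Q6) choices are simply summed per emotion, the tally could be computed as in the Python sketch below. The function name and the sample responses are hypothetical, not the study's data.

```python
from collections import Counter

EKMAN_EMOTIONS = ("anger", "disgust", "fear", "happiness", "sadness", "surprise")


def tally_top_two(responses):
    """Hypothetical tally: sum first (Q5) and second (Q6) choices per emotion.

    `responses` is an iterable of (q5_choice, q6_choice) pairs.
    """
    counts = Counter({emotion: 0 for emotion in EKMAN_EMOTIONS})
    for first, second in responses:
        for choice in (first, second):
            if choice not in counts:
                raise ValueError(f"unknown emotion: {choice!r}")
            counts[choice] += 1
    return counts
```

With three made-up responses, `tally_top_two([("fear", "surprise"), ("fear", "sadness"), ("happiness", "surprise")])` counts fear and surprise twice each and sadness and happiness once each.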

The questionnaire results for naturalness are shown below.

Questionnaire results with all videos. (A) Naturalness of touch. (B) Naturalness of touch timing. Image Credit: Shiomi, et al., (2021)

Panel (B) shows the results for the naturalness of the touch timing. These results indicate that participants rated touches generated by the horror NIG model more highly, regardless of video category. The questionnaire results for perceived empathy are shown below.

Questionnaire results about perceived empathy. (A) Perceived empathy to ERICA. (B) Perceived empathy from ERICA. Image Credit: Shiomi, et al., (2021)

Panel (B) shows the results for perceived empathy from ERICA. These results indicate that participants felt empathy with the robot when they watched the horror videos.

Overall, the horror NIG model produced better impressions whether the participants and the robot watched horror or heartwarming videos together. This result offers design implications for robot touch behavior.

First, when implementing touch behavior for a social robot, touching before a climax creates better impressions than touching after it, at least in touch-interaction scenarios where the only external emotional stimuli are videos that people want to watch with the robot.

Second, the results indicate that directly reusing parameters observed in human behavior may overlook better parameters when designing robot behaviors for emotional interaction.

Furthermore, although the emotions participants estimated matched the video categories, no major effects were found for perceived empathy. Emotions expressed through touch alone may be too implicit: perceiving empathy depends on feeling and sharing another's emotions, and such implicit expressions may not be enough to increase it.

Conclusion

Affective touch is a vital channel through which social robots can express emotions. This study implemented a touch behavior model that expresses heartwarming and horror emotions with a robot and assessed it in experiments with human participants.

The model's effectiveness was evaluated with 16 participants, using heartwarming and horror videos as emotional stimuli. The horror NIG model proved beneficial regardless of video type; that is, people preferred touch timing before the climax for both kinds of video. These findings will contribute to the design of emotional touch interaction for social robots.


Journal Reference:

Shiomi, M., Zheng, X., Minato, T., Ishiguro, H. (2021) Implementation and Evaluation of a Grip Behavior Model to Express Emotions for an Android Robot. Frontiers in Robotics and AI. Available at: https://www.frontiersin.org/articles/10.3389/frobt.2021.755150/full.

References and Further Reading

- Alenljung, B., et al. (2018) Conveying Emotions by Touch to the Nao Robot: A User Experience Perspective. Multimodal Technologies and Interaction, 2(4), p. 82. doi.org/10.3390/mti2040082.

- Bickmore, T. W., et al. (2010) Empathic Touch by Relational Agents. IEEE Transactions on Affective Computing, 1(1), pp. 60–71. doi.org/10.1109/t-affc.2010.4.

- Cabibihan, J-J & Chauhan, S S (2017) Physiological Responses to Affective Tele-Touch during Induced Emotional Stimuli. IEEE Transactions on Affective Computing, 8(1), pp. 108–118. doi.org/10.1109/taffc.2015.2509985.

- Cameron, D., et al. (2018) The Effects of Robot Facial Emotional Expressions and Gender on Child-Robot Interaction in a Field Study. Connection Science, 30(4), pp. 343–361. doi.org/10.1080/09540091.2018.1454889.

- Crumpton, J & Bethel, C L (2016) A Survey of Using Vocal Prosody to Convey Emotion in Robot Speech. International Journal of Social Robotics, 8(2), pp. 271–285. doi.org/10.1007/s12369-015-0329-4.

- Ekman, P (1993) Facial Expression and Emotion. American Psychologist, 48(4), pp. 384–392. doi.org/10.1037/0003-066x.48.4.384.

- Ekman, P & Friesen, W V (1971) Constants across Cultures in the Face and Emotion. Journal of Personality and Social Psychology, 17(2), pp. 124–129. doi.org/10.1037/h0030377.

- Field, T (2010) Touch for Socioemotional and Physical Well-Being: A Review. Developmental Review, 30(4), pp. 367–383. doi.org/10.1016/j.dr.2011.01.001.

- Fong, T., et al. (2003) A Survey of Socially Interactive Robots. Robotics and Autonomous Systems, 42(3–4), pp. 143–166. doi.org/10.1016/S0921-8890(02)00372-X.

- Ghazali, A. S., et al. (2018) Effects of Robot Facial Characteristics and Gender in Persuasive Human-Robot Interaction. Frontiers in Robotics and AI, 5, p. 73. doi.org/10.3389/frobt.2018.00073.

- Glas, D. F., et al. (2016) Erica: The Erato Intelligent Conversational Android. In: Proceedings of the 2016 25th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), New York, NY, USA, August 2016 (IEEE), pp. 22–29.

- Hashimoto, T., et al. (2006) Development of the Face Robot SAYA for Rich Facial Expressions. In: Proceedings of the 2006 SICE-ICASE International Joint Conference, Busan, Korea (South), October 2006 (IEEE), pp. 5423–5428.

- Hertenstein, M. J., et al. (2009) The Communication of Emotion via Touch. Emotion, 9(4), pp. 566–573. doi.org/10.1037/a0016108.

- Kanda, T., et al. (2007) A Two-Month Field trial in an Elementary School for Long-Term Human–Robot Interaction. IEEE Transactions on Robotics, 23(5), pp. 962–971. doi.org/10.1109/TRO.2007.904904.

- Kanda, T., et al. (2010) A Communication Robot in a Shopping Mall. IEEE Transactions on Robotics, 26(5), pp. 897–913. doi.org/10.1109/TRO.2010.2062550.

- Lee, J W & Guerrero, L K (2001) Types of Touch in Cross-Sex Relationships between Coworkers: Perceptions of Relational and Emotional Messages, Inappropriateness, and Sexual Harassment. Journal of Applied Communication Research, 29(3), pp. 197–220. doi.org/10.1080/00909880128110.

- Leite, I., et al. (2013a) Social Robots for Long-Term Interaction: A Survey. International Journal of Social Robotics, 5(2), pp. 291–308. doi.org/10.1007/s12369-013-0178-y.

- Leite, I., et al. (2013b) The Influence of Empathy in Human–Robot Relations. International Journal of Human-Computer Studies, 71(3), pp. 250–260. doi.org/10.1016/j.ijhcs.2012.09.005.

- Lim, A., et al. (2012) Towards Expressive Musical Robots: a Cross-Modal Framework for Emotional Gesture, Voice and Music. EURASIP Journal on Audio, Speech, and Music Processing, 2012, p. 3. doi.org/10.1186/1687-4722-2012-3.

- Lithari, C., et al. (2010) Are Females More Responsive to Emotional Stimuli? A Neurophysiological Study across Arousal and Valence Dimensions. Brain Topography, 23(1), pp. 27–40. doi.org/10.1007/s10548-009-0130-5.

- Rossi, S., et al. (2017) User Profiling and Behavioral Adaptation for HRI: A Survey. Pattern Recognition Letters, 99, pp. 3–12. doi.org/10.1016/j.patrec.2017.06.002.

- Takada, T & Yuwaka, S (2020) Persistence of Emotions Experimentally Elicited by Watching Films. Bulletin of Tokai Gakuen University, 25, pp. 31–41.

- Tielman, M., et al. (2014) Adaptive Emotional Expression in Robot-Child Interaction. In: Proceedings of the 2014 ACM/IEEE International Conference on Human-Robot Interaction, pp. 407–414.

- Tokaji, A (2003) Research for Determinant Factors and Features of Emotional Responses of "kandoh" (The State of Being Emotionally Moved). Japanese Psychological Research, 45(4), pp. 235–249. doi.org/10.1111/1468-5884.00226.

- Venture, G & Kulić, D (2019) Robot Expressive Motions. Journal of Human-Robot Interaction, 8(4), pp. 1–17. doi.org/10.1145/3344286.

- Wang, S & Ji, Q (2015) Video Affective Content Analysis: A Survey of State-Of-The-Art Methods. IEEE Transactions on Affective Computing, 6(4), pp. 410–430. doi.org/10.1109/taffc.2015.2432791.

- Wang, X.-W., et al. (2014) Emotional State Classification from EEG Data Using Machine Learning Approach. Neurocomputing, 129, pp. 94–106. doi.org/10.1016/j.neucom.2013.06.046.

- Weining, W & Qianhua, H (2008) A Survey on Emotional Semantic Image Retrieval. In: Proceedings of the 2008 15th IEEE International Conference on Image Processing, San Diego, CA, USA, October 2008 (IEEE), pp. 117–120.

- Willemse, C. J. A. M., et al. (2018) Communication via Warm Haptic Interfaces Does Not Increase Social Warmth. Journal on Multimodal User Interfaces, 12(4), pp. 329–344. doi.org/10.1007/s12193-018-0276-0.

- Willemse, C. J. A. M., et al. (2017) Affective and Behavioral Responses to Robot-Initiated Social Touch: Toward Understanding the Opportunities and Limitations of Physical Contact in Human–Robot Interaction. Frontiers in ICT, 4, p. 12. doi.org/10.3389/fict.2017.00012.

- Yagi, S., et al. (2020) Perception of Emotional Gait-like Motion of Mobile Humanoid Robot Using Vertical Oscillation. In: Proceedings of the Companion of the 2020 ACM/IEEE International Conference on Human-Robot Interaction, Cambridge, United Kingdom, 23 March (IEEE), pp. 529–531. doi.org/10.1145/3371382.3378319.

- Zheng, X., et al. (2019) How Can Robot Make People Feel Intimacy through Touch. Journal of Robotics and Mechatronics, 32(1), pp. 51–58. doi.org/10.20965/jrm.2020.p0051.

- Zheng, X., et al. (2020) Modeling the Timing and Duration of Grip Behavior to Express Emotions for a Social Robot. IEEE Robotics and Automation Letters, 6(1), pp. 159–166. doi.org/10.1109/lra.2020.3036372.

- Zheng, X., et al. (2019) What Kinds of Robot's Touch Will Match Expressed Emotions. IEEE Robotics and Automation Letters, 5(1), pp. 127–134. doi.org/10.1109/lra.2019.2947010.